The landscape of artificial intelligence continues to evolve quickly, with breakthroughs that push the limits of what models can achieve in reasoning, efficiency and versatility of application. NVIDIA's latest release, Llama Nemotron Super V1.5, represents a notable jump in both performance and usability, particularly for agentic and reasoning-intensive tasks. This article provides an in-depth overview of the technical advances and practical implications of Llama Nemotron Super V1.5, which is intended to empower developers and businesses with advanced AI capabilities.

Overview: Llama Nemotron Super V1.5 in context

NVIDIA's Nemotron family is known for building on the strongest open-source models and enhancing them with improved accuracy, efficiency and transparency. Llama Nemotron Super V1.5 is the latest and most advanced iteration, explicitly designed for high-stakes reasoning scenarios such as math, science, code generation and agentic functions.

What sets Nemotron Super V1.5 apart?

The model is designed to:

- Deliver state-of-the-art accuracy for science, math, coding and agentic tasks.

- Achieve 3x higher throughput compared to previous models, making it both faster and more cost-effective to deploy.

- Operate efficiently on a single GPU, serving everyone from individual developers to enterprise-scale applications.

Technical innovations behind the model

1. Post-training on high-signal data

Nemotron Super V1.5 builds on the efficient reasoning foundation established by Llama Nemotron Ultra. The advancement in Super V1.5 comes from post-training refinement using a new proprietary dataset, which is heavily focused on high-signal reasoning tasks. This targeted data amplifies the model's capabilities on complex, multi-step problems.

2. Neural architecture search and pruning for efficiency

A significant innovation in V1.5 is the use of neural architecture search and advanced pruning techniques:

- By optimizing the structure of the network, NVIDIA increased throughput (inference speed) without sacrificing accuracy.

- The model now runs faster, allowing more complex reasoning per unit of compute while keeping inference costs lower.

- The ability to deploy on a single GPU minimizes hardware overhead, which makes powerful AI accessible to small teams as well as large organizations.
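
To make the idea of pruning more concrete, here is a minimal sketch of magnitude-based weight pruning in plain Python. It is a generic textbook illustration, not NVIDIA's actual procedure: the article does not describe how the architecture search or pruning was carried out, and the function name `magnitude_prune`, the toy weights and the 50% sparsity level are all hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (hypothetical helper)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0  # removed weights no longer contribute to the output
    return pruned


# Toy "layer" with six weights; the values are made up for illustration.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)
```

In this toy case the three weights closest to zero are dropped while the larger ones are kept. Production systems typically prune whole structures (heads, channels, layers) and retrain afterwards, which is well beyond this sketch.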

3. Benchmarks and performance

Across a wide set of public and internal benchmarks, Llama Nemotron Super V1.5 consistently leads its weight class, especially in tasks that require:

- Multi-step reasoning.

- Structured tool use.

- Instruction following, code synthesis and agentic workflows.

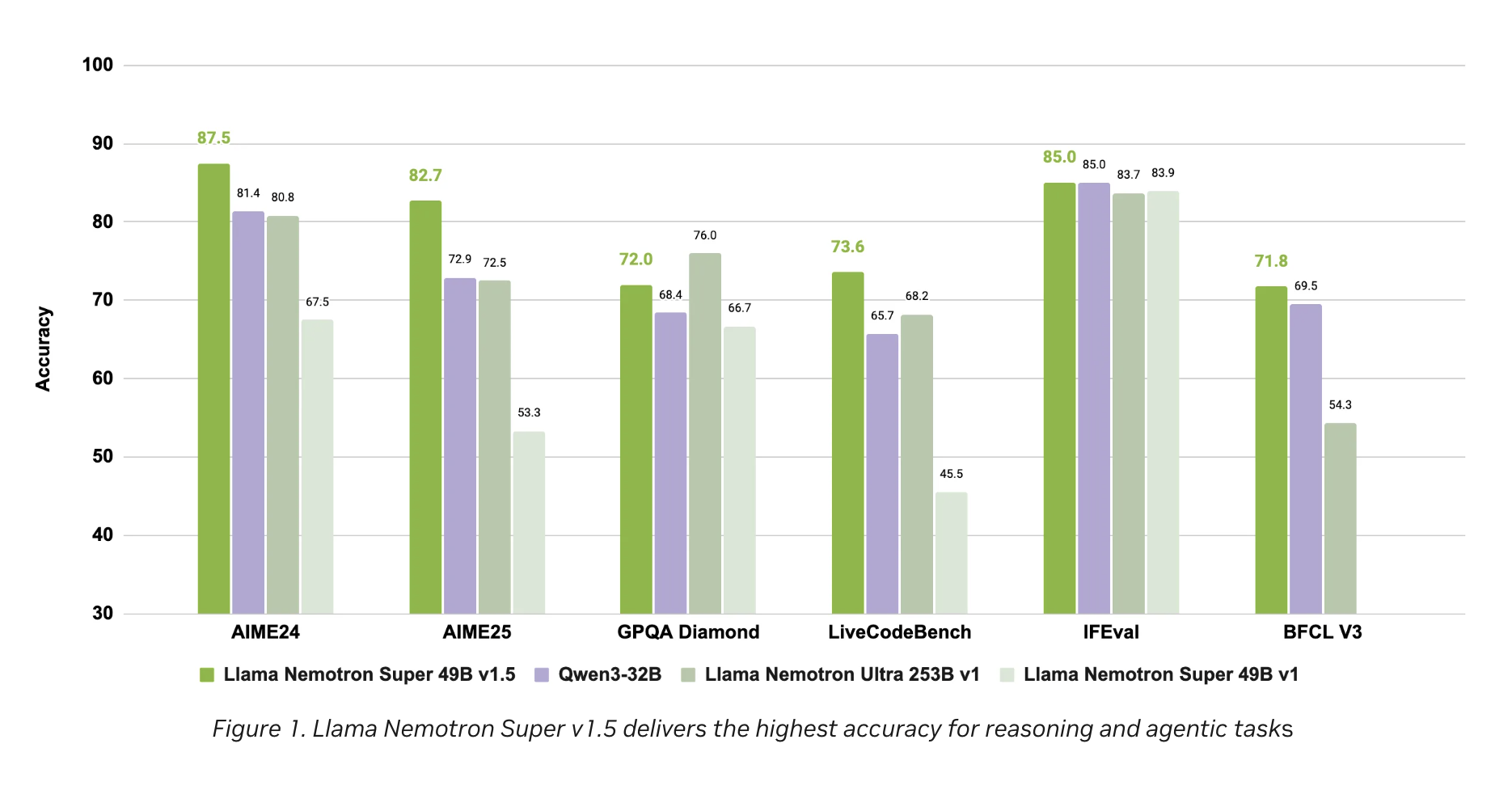

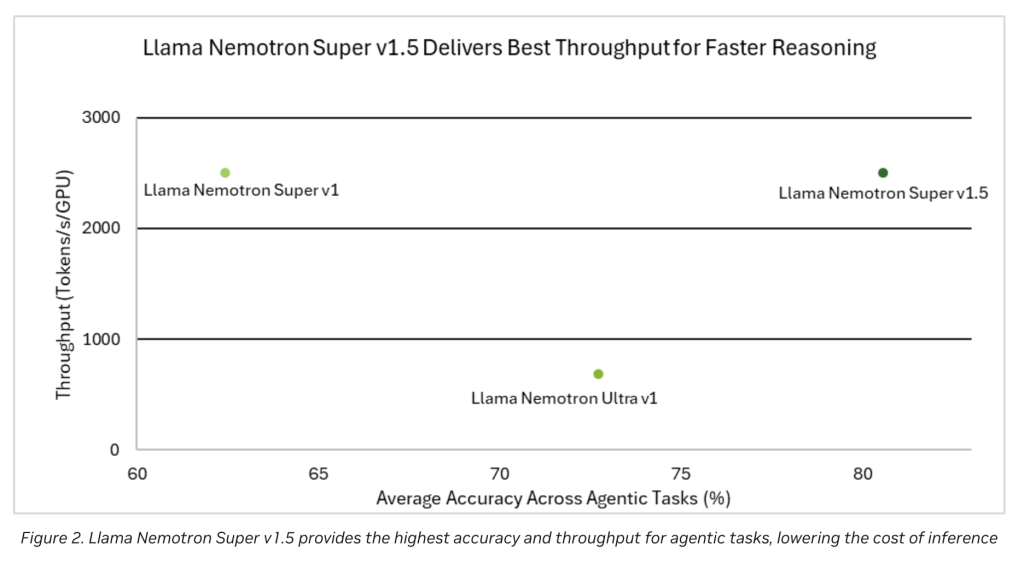

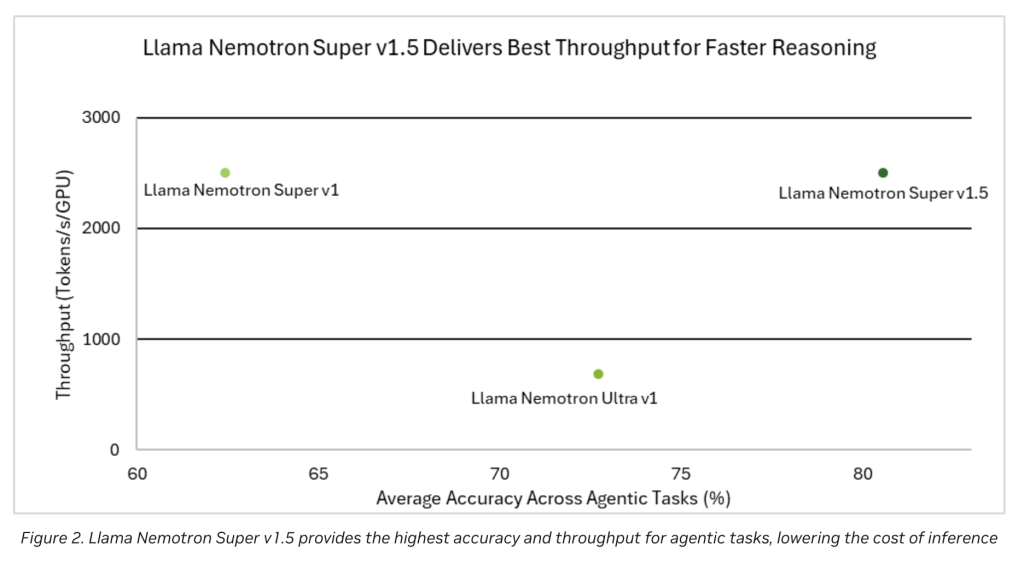

Performance charts (see Figures 1 and 2 in the release notes) show:

- The highest accuracy on core reasoning and agentic tasks compared to leading open models of similar size.

- The highest throughput, which translates into faster processing and lower operating costs for inference.

Key features and advantages

Cutting-edge reasoning accuracy

Refinement on high-signal datasets helps Llama Nemotron Super V1.5 excel at answering sophisticated scientific queries, solving complex math problems and generating reliable, maintainable code. This is crucial for real-world AI agents that must interact, reason and act reliably in applications.

Throughput and operational efficiency

- 3x higher throughput: Optimizations allow the model to process more requests per second, which makes it suitable for real-time use cases and high-volume applications.

- Lower compute costs: Efficient architecture design and the ability to run on a single GPU remove scaling barriers for many organizations.

- Reduced deployment complexity: By minimizing hardware requirements while increasing performance, deployment pipelines can be streamlined across platforms.
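
The link between throughput and cost can be worked through with a short calculation. The sketch below assumes a single GPU billed at a fixed hourly rate; the baseline rate of 10 requests per second and the price of 3.60 USD per hour are invented for illustration and are not figures from NVIDIA or from this article.

```python
def cost_per_million_requests(requests_per_second, gpu_cost_per_hour):
    """Cost of serving one million requests on one GPU at a steady rate."""
    requests_per_hour = requests_per_second * 3600
    return gpu_cost_per_hour / requests_per_hour * 1_000_000


baseline_rps = 10.0      # hypothetical baseline throughput
gpu_cost_per_hour = 3.60  # hypothetical hourly GPU price in USD

baseline = cost_per_million_requests(baseline_rps, gpu_cost_per_hour)
faster = cost_per_million_requests(3 * baseline_rps, gpu_cost_per_hour)

print(f"baseline: {baseline:.2f} USD per million requests")
print(f"3x throughput: {faster:.2f} USD per million requests")
```

Under these assumptions, tripling throughput on the same hardware cuts the cost per request to one third, since the hourly bill is unchanged while three times as many requests are served.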

Built for agentic applications

Llama Nemotron Super V1.5 is not just about answering questions; it is tailored for agentic tasks, where AI models must operate proactively, follow instructions, call functions and integrate with tools and workflows. This adaptability makes the model an ideal foundation for:

- Conversational agents.

- Autonomous code assistants.

- AI-driven science and research tools.

- Intelligent automation agents deployed in enterprise workflows.
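
Function calling, as mentioned above, usually means the model emits a structured request that the surrounding application executes. The following is a minimal sketch of that dispatch step, assuming the model returns a JSON object with `name` and `arguments` fields. That format, the `TOOLS` registry and the two stub tools are hypothetical and are not taken from the Nemotron documentation.

```python
import json


def get_weather(city):
    """Stub tool; a real implementation would query an external service."""
    return f"Sunny in {city}"


def add(a, b):
    """Stub tool that adds two numbers."""
    return a + b


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather, "add": add}


def dispatch(tool_call_json):
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Example string standing in for model output (written by hand here).
model_output = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
result = dispatch(model_output)
print(result)
```

In a full agent loop, the result would be passed back to the model as a new message so it can continue reasoning. Rejecting unknown tool names, as done here, is one simple guard against malformed calls.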

Practical deployment

The model is available now for hands-on experimentation and integration:

- Interactive access: Directly at NVIDIA Build (build.nvidia.com), allowing users and developers to test its capabilities in live scenarios.

- Open model download: Available on Hugging Face, ready for deployment in custom infrastructure or inclusion in broader AI pipelines.

How Nemotron Super V1.5 advances the ecosystem

Open weights and community impact

Continuing NVIDIA's philosophy, Nemotron Super V1.5 is released as an open model. This transparency fosters:

- Rapid, community-driven benchmarking and feedback.

- Easier customization for specialized domains.

- Greater collective scrutiny and iteration, helping trustworthy and robust AI models emerge across domains.

Enterprise and research readiness

With its blend of performance, efficiency and openness, Super V1.5 is well positioned to become the backbone of next-generation AI agents in:

- Enterprise knowledge management.

- Customer support automation.

- Advanced research and scientific computing.

Alignment with AI best practices

By combining high-quality synthetic datasets from NVIDIA with state-of-the-art model refinement techniques, Nemotron Super V1.5 adheres to advanced standards in:

- Transparency in training data and methods.

- Rigorous quality assurance for model outputs.

- Responsible and interpretable AI.

Conclusion: A new era for AI reasoning models

Llama Nemotron Super V1.5 is a significant advance in the open-source landscape, offering top-tier reasoning skills, transformative efficiency and broad applicability. For developers aiming to build reliable AI agents, whether for individual projects or complex enterprise solutions, this release marks a milestone, setting new standards for accuracy and throughput.

With NVIDIA's continued commitment to openness, efficiency and community collaboration, Llama Nemotron Super V1.5 is poised to accelerate the development of smarter and more capable AI agents designed for the diverse challenges of tomorrow.

Check out the open-source weights and technical details. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among audiences.