Introduction

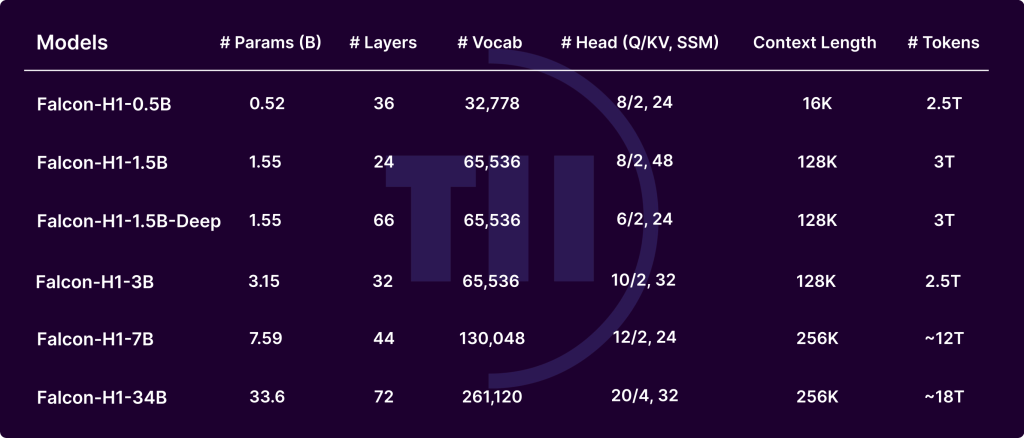

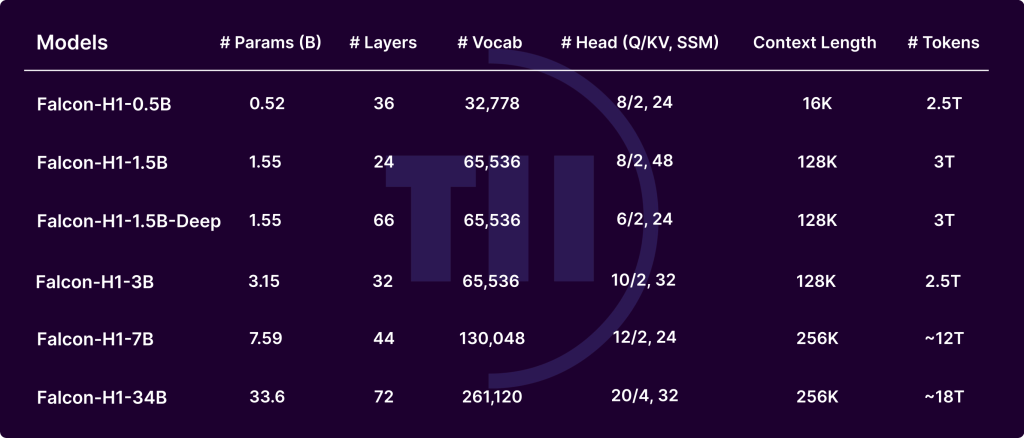

The Falcon-H1 series, developed by the Technology Innovation Institute (TII), marks an important progression in the evolution of large language models (LLM). By integrating attention based on the transformer with the Mamba-based state-based state-based (SSMS) in a parallel configuration, Falcon-H1 obtains exceptional performance, memory efficiency and scalability. Outs in several sizes (0.5b to 34b parameters) and versions (base, adjusted and quantified), the Falcon-H1 models redefine the compromise between the calculation budget and the quality of the output, offering an efficiency of the parameters greater than many contemporary models such as QWEN2.5-72B and LLAMA3.3-70B.

Key architectural innovations

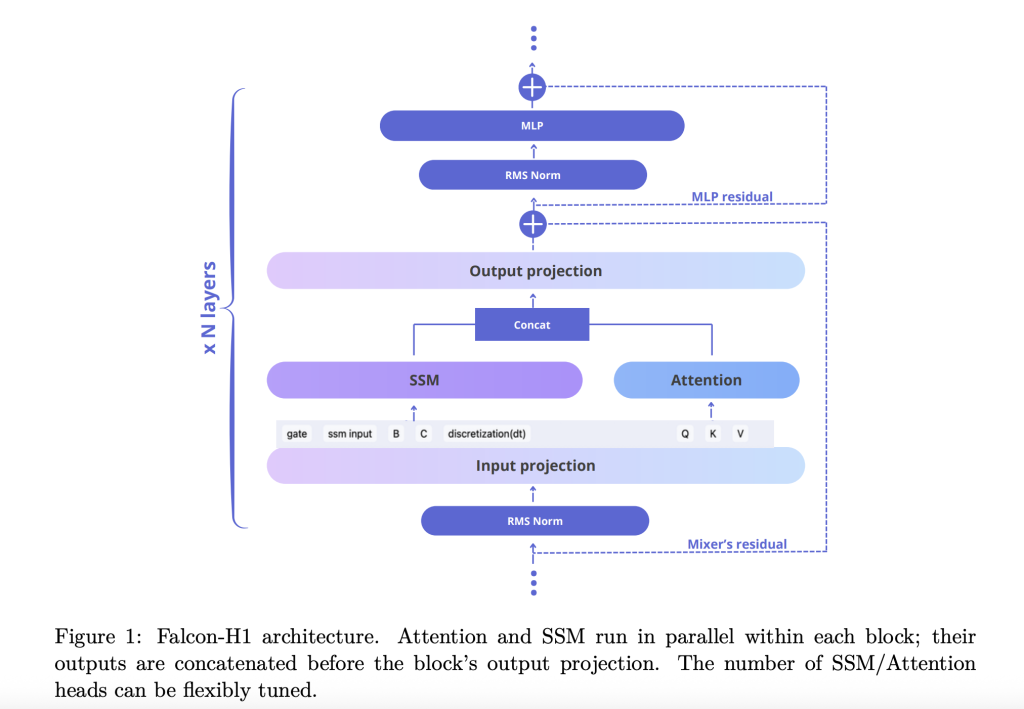

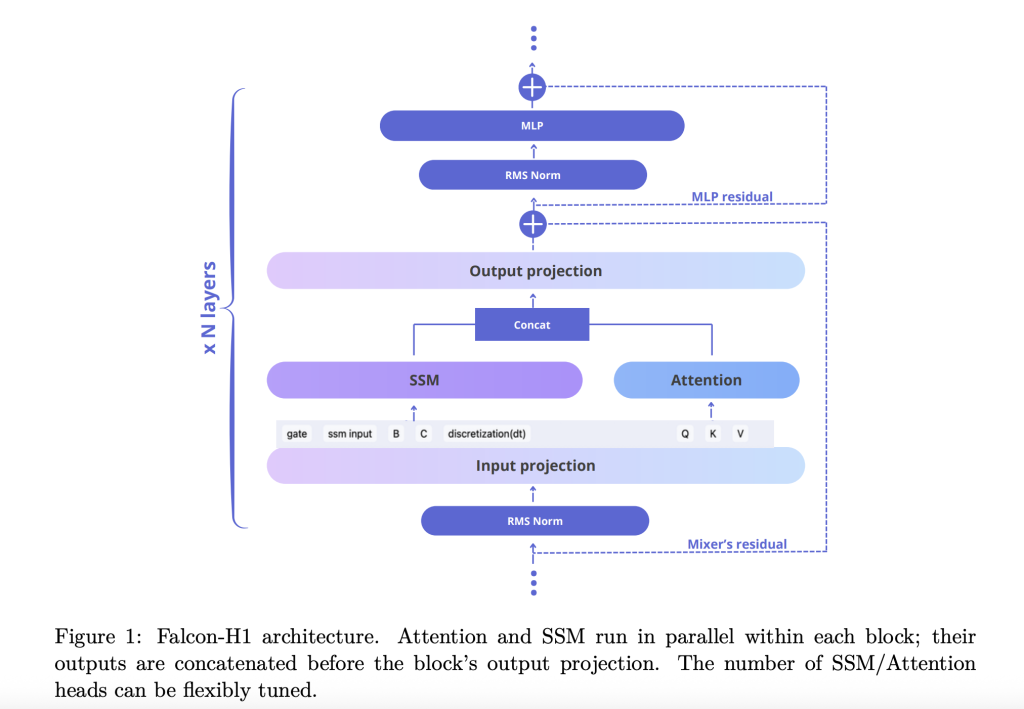

THE technical report Explain how Falcon-H1 adopts a novel Parallel hybrid architecture Where the attention and the SSM modules work simultaneously, and their outputs are crushed before the projection. This design deviates from traditional sequential integration and offers flexibility to adjust the number of attention and the SSM channels independently. The default configuration uses a 2: 1: 5 report for SSM, attention and MLP channels respectively, optimizing both efficiency and learning dynamics.

To further refine the model, Falcon-H1 explores:

- Channel allocation: Ablations show that the increase in attention channels deteriorates performance, while balancing SSM and MLP gives robust gains.

- Blocking configuration: The SA_M configuration (semi-pearllel with attention and the SSM runs together, followed by MLP) works better in loss of training and calculation efficiency.

- Rope base frequency: An unusually high basic frequency of 10 ^ 11 in the incorporations of rotary positional (rope) proved to be optimal, improving generalization during training in the long -term context.

- COMPROMMISE LANT-SOFORDER: Experiences show that deeper models surpass those wider in the budgets of fixed parameters. Falcon-H1-1.5B-DEEP (66 layers) surpasses many 3B and 7B models.

Tokenizer strategy

Falcon-H1 uses a suite of tokenizer coding of bytes of bytes (BPE) personalized with vocabulary sizes ranging from 32k to 261K. Key design choices include:

- Division of figure and punctuation: Empirically improves code performance and multilingual parameters.

- Latex token injection: Improves the precision of the model on mathematical benchmarks.

- Multilingual support: Covers 18 languages and 100+ scale, using optimized fertility and token bytes / metrics.

Corpus and pre-training data strategy

The Falcon-H1 models are formed by up to 18t of tokens from a 20t quasted chip corpus, comprising:

- High quality web data (Filtered Endweb)

- Multilingual data sets: Common crawl, wikipedia, arxiv, opensubitles and organized resources for 17 languages

- Code corpus: 67 languages, processed via minhash deduplication, Codebert quality filters and pii washing

- Mathematical data sets: Mathematics, GSM8K and Latex Crawls In internal latex

- Synthetic data: Rewritten from crude corpus using various llms, as well as manual style QA from subjects based on Wikipedia 30K

- Long -term context sequences: Improved via the filling of reasoning, reorganization and synthetic reasoning tasks up to 256k

Training infrastructure and methodology

The training used the maximum personalized update configuration (µp), taking charge of the smooth scaling between model sizes. Models use advanced parallelism strategies:

- Mixer parallelism (MP) And Context parallelism (CP): Improve flow for long context treatment

- Quantification: Output in BFLOAT16 and 4 -bit variants to facilitate on -board deployments

Evaluation and performance

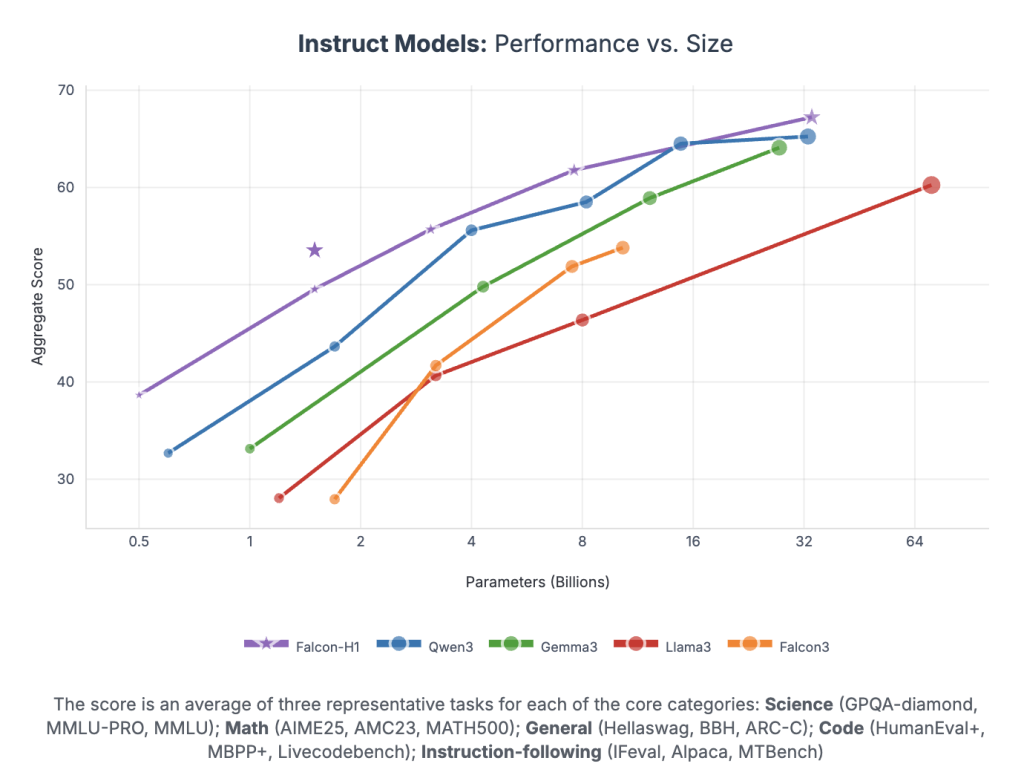

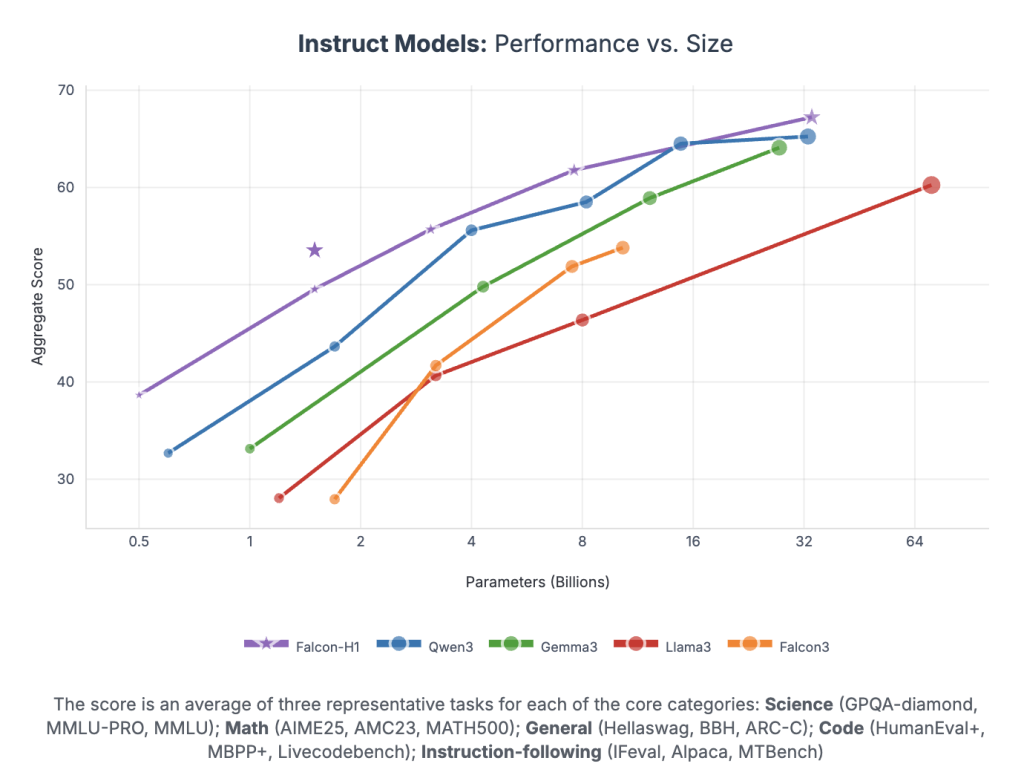

Falcon-H1 obtains unprecedented performance by parameter:

- Falcon-H1-34B-Instruct exceeds or associates models on a 70b scale such as Qwen2.5-72b and llama3.3-70b through reasoning, mathematics, monitoring and multilingual monitoring tasks

- Falcon-h1-1.5b deep RIVAUX 7B – 10B models

- Falcon-H1-0.5b Offer 7B performance of the era 2025

The references cover the MMLU, GSM8K, Humaneval and long context tasks. The models demonstrate a strong alignment via SFT and the direct optimization of preferences (DPO).

Conclusion

Falcon-H1 establishes a new standard for LLM of open weight by integrating parallel hybrid architectures, flexible tokenization, effective training dynamics and robust multilingual capacity. Its strategic SSM and attention combination allows unmatched performance in practical calculation and memory budgets, which makes it ideal for research and deployment in various environments.

Discover the Paper And Models on the embraced face. Do not hesitate to Consult our tutorial page on the AI agent and agency AI for various applications. Also, don't hesitate to follow us Twitter And don't forget to join our Subseubdredit 100k + ml and subscribe to Our newsletter.

Michal Sutter is a data science professional with a master's degree in data sciences from the University of Padova. With a solid base in statistical analysis, automatic learning and data engineering, Michal excels in transforming complex data sets into usable information.