In an office at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), a soft robotic hand carefully curls its fingers to grasp a small object. The intriguing part isn't the mechanical design or embedded sensors; in fact, the hand contains none. Instead, the entire system relies on a single camera that watches the robot's movements and uses that visual data to control it.

This capability comes from a new system that CSAIL scientists developed, offering a different perspective on robotic control. Rather than relying on hand-designed models or complex sensor arrays, it allows robots to learn how their bodies respond to control commands solely through vision. The approach, called Neural Jacobian Fields (NJF), gives robots a kind of bodily self-awareness. An open-access paper about the work was published in Nature on June 25.

“This work points to a shift from programming robots to teaching robots,” says Sizhe Lester Li, a PhD student in electrical engineering and computer science, CSAIL affiliate, and lead researcher on the work. “Today, many robotics tasks require extensive engineering and coding. In the future, we envision showing a robot what to do and letting it learn how to achieve the goal autonomously.”

The motivation stems from a simple but powerful reframing: the main barrier to affordable, flexible robotics isn't hardware but control of capability, which could be achieved in multiple ways. Traditional robots are built to be rigid and sensor-rich, which makes it easier to construct a digital twin, a precise mathematical replica used for control. But when a robot is soft, deformable, or irregularly shaped, those assumptions fall apart. Rather than forcing robots to match our models, NJF flips the script, giving robots the ability to learn their own internal model from observation.

Look and learn

This decoupling of modeling and hardware design could significantly expand the design space for robotics. In soft and bio-inspired robots, designers often embed sensors or reinforce parts of the structure just to make modeling feasible. NJF lifts that constraint. The system doesn't need onboard sensors or design tweaks to make control possible. Designers are freer to explore unconventional, unconstrained morphologies without worrying about whether they'll be able to model or control them later.

“Think about how you learn to control your fingers: you wiggle, you observe, you adapt,” says Li. “That's what our system does. It experiments with random actions and figures out which controls move which parts of the robot.”

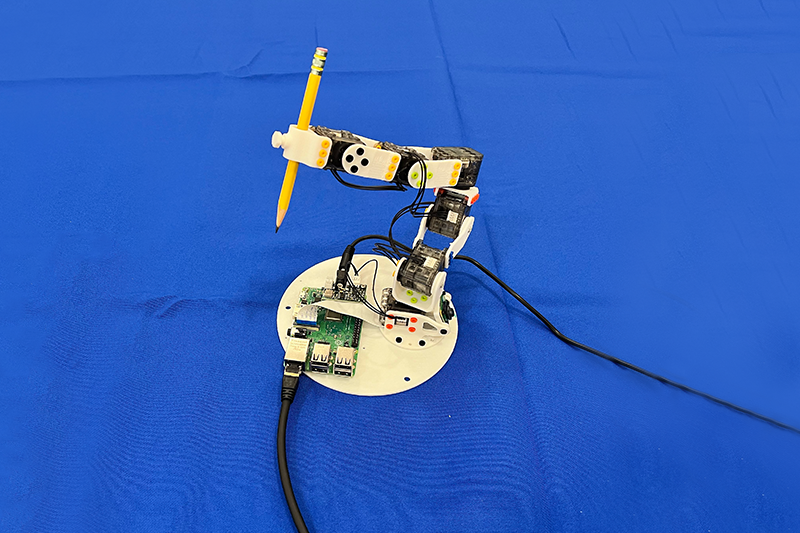

The system has proven robust across a range of robot types. The team tested NJF on a pneumatic soft robotic hand capable of pinching and grasping, a rigid Allegro hand, a 3D-printed robotic arm, and even a rotating platform with no embedded sensors. In every case, the system learned both the robot's shape and how it responded to control signals, just from vision and random motion.

The researchers see potential far beyond the lab. Robots equipped with NJF could one day perform agricultural tasks with centimeter-level localization accuracy, operate on construction sites without elaborate sensor arrays, or navigate dynamic environments where traditional methods break down.

At the core of NJF is a neural network that captures two intertwined aspects of a robot's embodiment: its three-dimensional geometry and its sensitivity to control inputs. The system builds on neural radiance fields (NeRF), a technique that reconstructs 3D scenes from images by mapping spatial coordinates to color and density values. NJF extends this approach by learning not only the robot's shape but also a Jacobian field, a function that predicts how any point on the robot's body moves in response to motor commands.
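To make the idea of a Jacobian field concrete, here is a minimal sketch. In NJF the field is a learned neural network; here, purely as a hypothetical stand-in, we use a closed-form field for a turntable (like the rotating platform mentioned above) whose single command is a rotation angle about the z-axis. The function names `jacobian_field` and `predict_motion` are illustrative, not from the paper. The field returns one 3×k matrix per point (3 spatial coordinates, k commands), and a small command change `du` moves each point by roughly `J(x) @ du`.

```python
import numpy as np

def jacobian_field(points: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a learned Jacobian field.

    Models a turntable whose only command u is a rotation angle (radians)
    about the z-axis. For a point (x, y, z), dx/du is the tangent (-y, x, 0).

    points:  (N, 3) array of 3D points on the robot body.
    returns: (N, 3, 1) array, one 3x1 Jacobian per point (k = 1 command).
    """
    x, y = points[:, 0], points[:, 1]
    J = np.stack([-y, x, np.zeros_like(x)], axis=-1)  # (N, 3)
    return J[:, :, None]                              # (N, 3, 1)

def predict_motion(points: np.ndarray, du: np.ndarray) -> np.ndarray:
    """First-order prediction of each point's displacement: dx ≈ J(x) @ du."""
    J = jacobian_field(points)
    return np.einsum("nij,j->ni", J, du)  # (N, 3)

points = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
print(predict_motion(points, np.array([0.1])))
```

Points farther from the axis get larger predicted displacements, which is exactly the kind of per-point sensitivity a learned field has to capture for robots whose geometry is not known in advance.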

To train the model, the robot performs random motions while multiple cameras record the outcomes. No human supervision or prior knowledge of the robot's structure is required; the system simply infers the relationship between control signals and motion by watching.
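The core of "learning by watching random motion" can be illustrated with the simplest possible version. NJF trains a NeRF-style network on multi-view video; this sketch instead assumes a single point has already been tracked across frames and that its motion is linear in the command, then recovers a constant Jacobian by least squares. The robot, its hidden matrix `J_true`, and the noise level are all hypothetical.

```python
import numpy as np

def estimate_jacobian(du_samples: np.ndarray, dx_samples: np.ndarray) -> np.ndarray:
    """Least-squares fit of dx ≈ J @ du for one tracked point.

    du_samples: (M, k) random command perturbations sent to the motors.
    dx_samples: (M, 3) observed displacements of the point.
    returns:    (3, k) estimated Jacobian.
    """
    # Solve du_samples @ J.T ≈ dx_samples for J.T in the least-squares sense.
    J_T, *_ = np.linalg.lstsq(du_samples, dx_samples, rcond=None)
    return J_T.T

# Simulated observations for a hypothetical robot with 2 actuators.
rng = np.random.default_rng(0)
J_true = np.array([[0.5, 0.0], [0.1, -0.3], [0.0, 0.2]])  # hidden from the learner
du = rng.uniform(-1.0, 1.0, size=(50, 2))                 # random "motor babbling"
dx = du @ J_true.T + 0.01 * rng.normal(size=(50, 3))      # noisy observed motion
J_hat = estimate_jacobian(du, dx)
print(np.round(J_hat, 2))
```

No structural knowledge enters the fit: only commands and observed motion. The real system generalizes this from one point and one linear map to a continuous field over the whole body.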

Once training is complete, the robot needs only a single monocular camera for real-time closed-loop control, running at about 12 hertz. This allows it to continuously observe itself, plan, and act responsively. That speed makes NJF more viable than many physics-based simulators for soft robots, which are often too computationally intensive for real-time use.
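A closed loop of this kind can be sketched as "look, then act" repeated at a fixed rate. The sketch below is a hypothetical example, not NJF's planner: a simulated camera reports the 2D image position of one tracked point, the controller holds only a linear Jacobian estimate (ignoring an unmodeled nonlinearity), and each step applies a damped-least-squares update toward a target. The matrix `A`, the `sin` term, and the gain and damping values are assumptions chosen for illustration.

```python
import numpy as np

A = np.array([[1.0, 0.2], [0.0, 0.8]])  # hypothetical actuator-to-image map

def observe(u: np.ndarray) -> np.ndarray:
    """Simulated camera: image position of one tracked point.
    The sin term is a nonlinearity the controller's model does not include."""
    return A @ u + 0.1 * np.sin(u)

def control_step(x, x_target, J, gain=0.5, damping=1e-2):
    """Damped least squares: du = gain * J^T (J J^T + damping^2 I)^-1 (x_target - x)."""
    e = x_target - x
    JJt = J @ J.T + damping**2 * np.eye(J.shape[0])
    return gain * J.T @ np.linalg.solve(JJt, e)

def run(x_target: np.ndarray, steps: int = 40):
    u = np.zeros(2)
    J_hat = A.copy()  # controller's linear model, e.g. a learned Jacobian
    for _ in range(steps):
        x = observe(u)                            # look
        u = u + control_step(x, x_target, J_hat)  # act
        # On hardware, each iteration would take ~1/12 s at a 12 Hz loop rate.
    return u, observe(u)

u_final, x_final = run(np.array([0.5, 0.3]))
print(x_final)
```

Because the camera closes the loop, the controller does not need a perfect model: repeated observation corrects the error left by the imprecise Jacobian.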

In early simulations, even simple 2D fingers and sliders were able to learn this mapping from just a few examples. By modeling how specific points deform or shift in response to an action, NJF builds a dense map of controllability. This internal model allows it to generalize motion across the robot's body, even when the data are noisy or incomplete.
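One way to read such a dense controllability map is to ask, for every sampled point, which actuator moves it most. This is a hypothetical illustration rather than the paper's analysis: given per-point Jacobians of shape (3, k), the norm of each column measures how strongly that actuator moves the point, and the largest column picks the "owner". The two-finger example values below are made up.

```python
import numpy as np

def actuator_ownership(jacobians: np.ndarray):
    """jacobians: (N, 3, k) per-point Jacobians sampled from a Jacobian field.

    returns: sensitivity (N, k), the norm of each actuator's column per point,
             and owner (N,), the index of the most influential actuator.
    """
    sensitivity = np.linalg.norm(jacobians, axis=1)  # norm over x, y, z
    owner = np.argmax(sensitivity, axis=1)
    return sensitivity, owner

# Hypothetical two-finger hand: 4 sampled points, 2 motors.
J = np.zeros((4, 3, 2))
J[0, :, 0] = [0.0, 0.2, 0.0]    # finger 1, base
J[1, :, 0] = [0.0, 0.5, 0.1]    # finger 1, tip (moves more)
J[1, :, 1] = [0.02, 0.0, 0.0]   # weak coupling from motor 2
J[2, :, 1] = [0.0, -0.2, 0.0]   # finger 2, base
J[3, :, 1] = [0.0, -0.5, 0.1]   # finger 2, tip
sens, owner = actuator_ownership(J)
print(owner)  # → [0 0 1 1]
```

Nothing in this summary was programmed per motor; the grouping falls out of the Jacobians themselves.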

“What's really interesting is that the system figures out on its own which motors control which parts of the robot,” says Li. “This isn't programmed; it emerges naturally through learning, much like a person discovering the buttons on a new device.”

The future is soft

For decades, robotics has favored rigid, easily modeled machines, like the industrial arms found in factories, because their properties simplify control. But the field has been moving toward soft, bio-inspired robots that can adapt to the real world more fluidly. The trade-off? These robots are harder to model.

“Robotics today often feels out of reach because of costly sensors and complex programming. Our goal with Neural Jacobian Fields is to lower the barrier, making robotics affordable, adaptable, and accessible to more people. Vision is a resilient, reliable sensor. It opens the door to robots that can operate in messy, unstructured environments, from farms to construction sites, without expensive infrastructure.”

“Vision alone can provide the cues needed for localization and control, eliminating the need for GPS, external tracking systems, or complex onboard sensors. This opens the door to robust, adaptive behavior in unstructured environments, from drones navigating indoors or underground without maps to mobile manipulators working in cluttered spaces,” says co-author Daniela Rus, MIT professor of electrical engineering and computer science and director of CSAIL. “By learning from visual feedback, these systems develop internal models of their own motion and dynamics, enabling flexible, self-supervised operation where traditional localization methods would fail.”

Although NJF training currently requires multiple cameras and must be redone for each robot, the researchers are already imagining a more accessible version. In the future, hobbyists could record a robot's random movements with their phone, much like you'd take a video of a rental car before driving off, and use that footage to create a control model, with no prior knowledge or special equipment required.

The system doesn't yet generalize across different robots, and it lacks force or tactile sensing, limiting its effectiveness on contact-rich tasks. But the team is exploring new ways to address these limitations: improving generalization, handling occlusions, and extending the model's ability to reason over longer spatial and temporal horizons.

“Just as humans develop an intuitive understanding of how their bodies move and respond to commands, NJF gives robots that kind of embodied self-awareness through vision alone,” says Li. “This understanding is a foundation for flexible manipulation and control in real-world environments. Our work, essentially, reflects a broader trend in robotics: moving away from manually programming detailed models toward teaching robots through observation and interaction.”

This paper brought together the computer vision and self-supervised learning work of the Sitzmann lab and the soft robotics expertise of the Rus lab. Li, Sitzmann, and Rus co-authored the paper with CSAIL affiliates Annan Zhang SM '22, a PhD student in electrical engineering and computer science (EECS); Boyuan Chen, a PhD student in EECS; Hanna Matsik, an undergraduate researcher in mechanical engineering; and Chao Liu, a postdoc in MIT's Senseable City Lab.

The research was supported by the Solomon Buchsbaum Research Fund through MIT's Research Support Committee, an MIT Presidential Fellowship, the National Science Foundation, and the Gwangju Institute of Science and Technology.