MIT researchers have created a periodic table that shows how more than 20 classical machine learning algorithms are connected. The new framework sheds light on how scientists could fuse strategies from different methods to improve existing AI models or come up with new ones.

For example, the researchers used their framework to combine elements of two different algorithms to create a new image-classification algorithm that performed 8% better than current approaches.

The periodic table stems from one key idea: all these algorithms learn a specific kind of relationship between data points. Although each algorithm may do this in a slightly different way, the core mathematics behind each approach is the same.

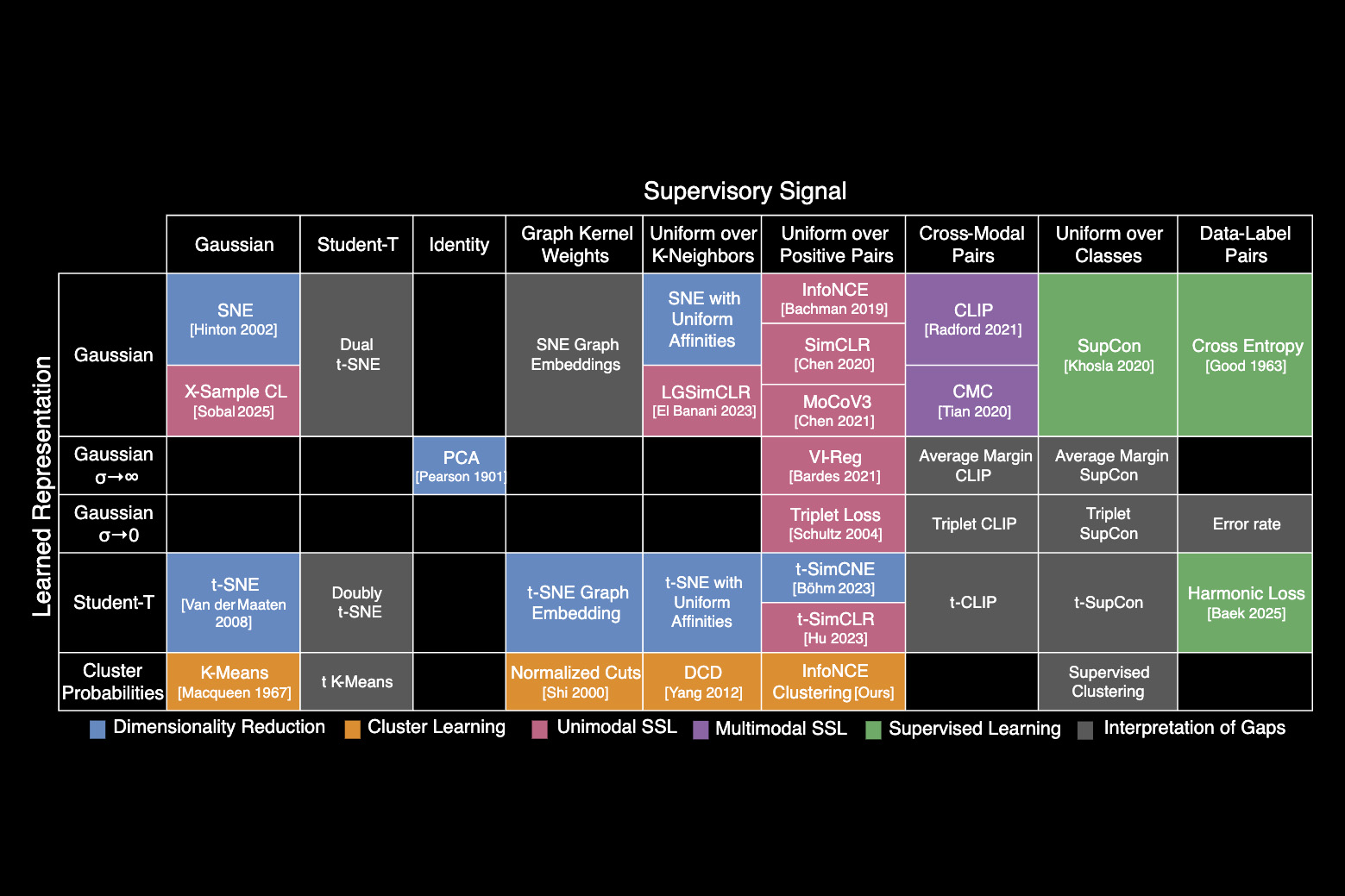

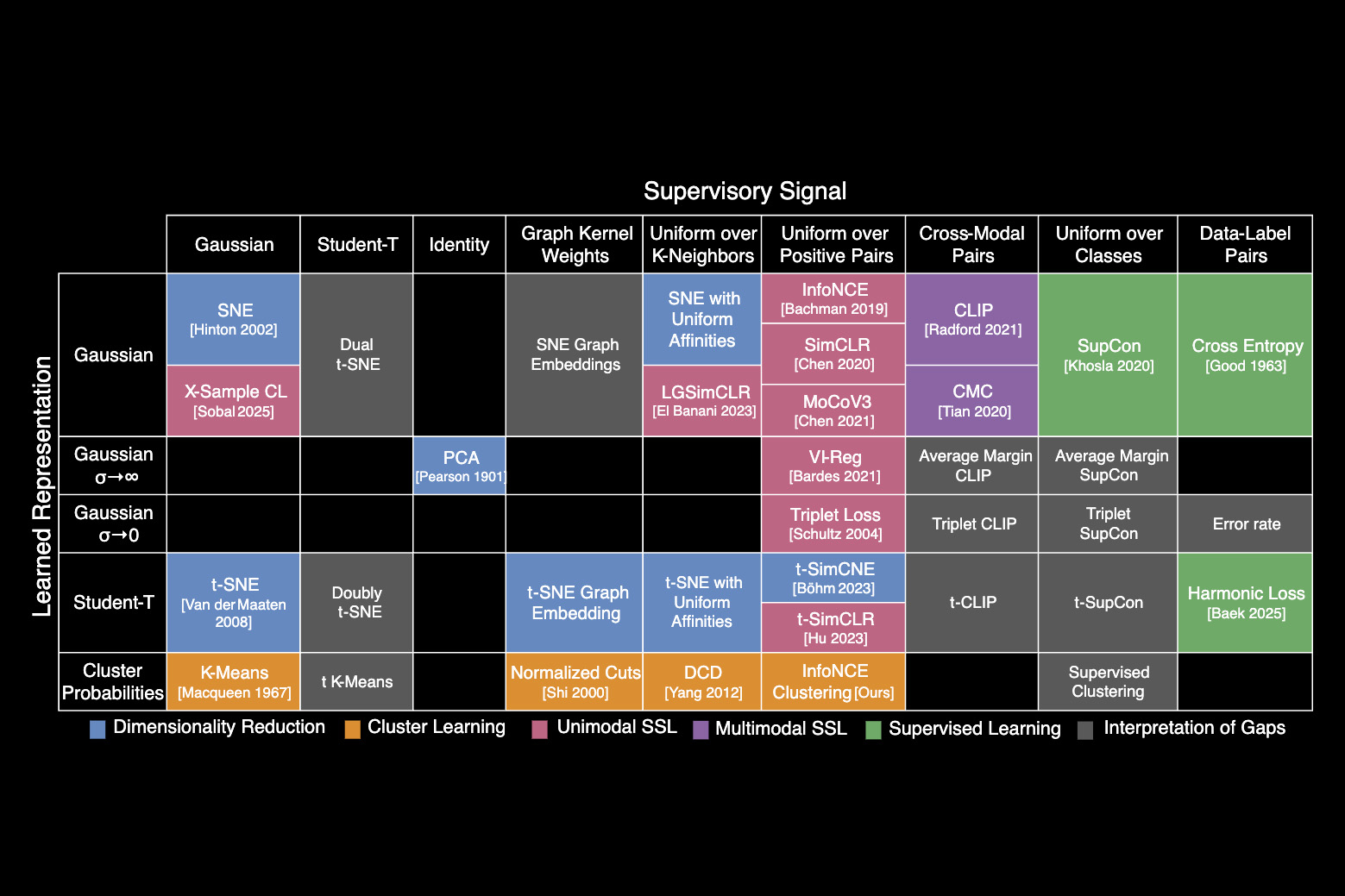

Building on these ideas, the researchers identified a unifying equation that underlies many classical AI algorithms. They used this equation to reframe popular methods and arrange them in a table, categorizing each according to the approximate relationships it learns.

Like the periodic table of chemical elements, which initially contained blank squares that scientists later filled in, the periodic table of machine learning also has empty spaces. These spaces predict where algorithms should exist but have not yet been discovered.

The table gives researchers a toolkit to design new algorithms without needing to rediscover ideas from previous approaches, says Shaden Alshammari, an MIT graduate student and lead author of a paper on this new framework.

“It's not just a metaphor,” adds Alshammari. “We are starting to see machine learning as a system with structure, a space that we can explore rather than just guess our way through.”

She is joined on the paper by John Hershey, a researcher at Google AI Perception; Axel Feldmann, an MIT graduate student; William Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Mark Hamilton, an MIT graduate student and senior engineering manager at Microsoft. The research will be presented at the International Conference on Learning Representations.

An accidental equation

The researchers did not set out to create a periodic table of machine learning.

After joining the Freeman Lab, Alshammari began to study clustering, a machine learning technique that classifies images by learning to organize similar images into nearby clusters.

She realized that the clustering algorithm she was studying was similar to another classical machine learning algorithm, called contrastive learning, and began to dig deeper into the mathematics. Alshammari found that these two disparate algorithms could be reframed using the same underlying equation.

“We almost arrived at this unifying equation by accident. Once Shaden discovered that it connects two methods, we just started dreaming up new methods to bring into this framework. Almost every one we tried could be added,” says Hamilton.

The framework they created, information contrastive learning (I-Con), shows how a variety of algorithms can be viewed through the lens of this unifying equation. It includes everything from classification algorithms that can detect spam to the deep learning algorithms that power LLMs.

The equation describes how these algorithms find connections between real data points and then approximate those connections internally.

Each algorithm aims to minimize the amount of deviation between the connections it learns to approximate and the real connections in its training data.
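The article does not state the equation itself. As a rough illustration of the idea described above, the sketch below assumes the "deviation" is an average Kullback-Leibler divergence between two sets of per-point connection probabilities: a target distribution built from the data and a learned distribution built from embeddings. All function names, the label-based targets, and the softmax-over-distances form of the learned connections are hypothetical choices made for this example, not the authors' implementation.

```python
import numpy as np

def row_normalize(weights):
    """Turn non-negative weights into one probability distribution per row."""
    return weights / weights.sum(axis=1, keepdims=True)

def target_connections(labels):
    """Assumed 'real' connections: each point is linked uniformly to the
    other points that share its label (every label needs >= 2 points)."""
    same = (labels[:, None] == labels[None, :]).astype(float)
    np.fill_diagonal(same, 0.0)  # a point is not its own neighbor
    return row_normalize(same)

def learned_connections(embeddings, temperature=1.0):
    """Assumed learned connections: softmax over negative squared
    distances between embeddings, with self-connections excluded."""
    diffs = embeddings[:, None, :] - embeddings[None, :, :]
    logits = -(diffs ** 2).sum(axis=-1) / temperature
    np.fill_diagonal(logits, -np.inf)            # exclude self
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    return row_normalize(np.exp(logits))

def average_kl(p, q, eps=1e-12):
    """Mean over points of KL(p_i || q_i); entries with p = 0 contribute 0."""
    ratio = np.where(p > 0, p / np.maximum(q, eps), 1.0)
    return float(np.mean(np.sum(p * np.log(ratio), axis=1)))

# Toy data: two labels, two points each.
labels = np.array([0, 0, 1, 1])
p = target_connections(labels)

# Embeddings that place same-label points together...
good = np.array([[0.0, 0.0], [0.0, 0.1], [5.0, 5.0], [5.0, 5.1]])
# ...and embeddings that place them far apart.
bad = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 0.1], [5.0, 5.1]])

loss_good = average_kl(p, learned_connections(good))
loss_bad = average_kl(p, learned_connections(bad))
print(f"matching embeddings:    {loss_good:.4f}")
print(f"mismatched embeddings:  {loss_bad:.4f}")
```

Under these assumptions, embeddings whose neighborhoods agree with the target connections give a lower loss than embeddings that scatter same-label points, which is the quantity a training procedure would then try to minimize. Swapping in a different definition of the target or learned connections changes which kind of algorithm the same loss describes.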

They decided to organize I-Con into a periodic table that categorizes algorithms according to how points are connected in real datasets and the primary ways algorithms can approximate those connections.

“The work progressed gradually, but once we had identified the general structure of this equation, it was easier to add more methods to our framework,” says Alshammari.

A discovery tool

As they arranged the table, the researchers began to see gaps where algorithms could exist but had not yet been invented.

The researchers filled in one gap by borrowing ideas from a machine learning technique called contrastive learning and applying them to image clustering. This led to a new algorithm that could classify unlabeled images 8% better than another state-of-the-art approach.

They also used I-Con to show how a data debiasing technique developed for contrastive learning could be used to increase the accuracy of clustering algorithms.

In addition, the flexible periodic table allows researchers to add new rows and columns to represent additional types of data point connections.

Ultimately, having I-Con as a guide could help machine learning scientists think outside the box, encouraging them to combine ideas in ways they would not necessarily have thought of otherwise, says Hamilton.

“We have shown that just one very elegant equation, rooted in the science of information, gives you rich algorithms spanning 100 years of research in machine learning. This opens up many new avenues for discovery,” he adds.

“Perhaps the most challenging aspect of being a machine learning researcher these days is the seemingly unlimited number of papers that appear each year. In this context, papers that unify and connect existing algorithms are of great importance, yet they are extremely rare. [...]” says [...] University of Jerusalem, who was not involved in this research.

This research was funded, in part, by the Air Force Artificial Intelligence Accelerator, the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, and Quanta Computer.