OpenAI has just sent shockwaves through the AI world: for the first time since GPT-2 was released in 2019, the company is publishing not one but two open-weight language models. Meet gpt-oss-120b and gpt-oss-20b: models that anyone can download, inspect, fine-tune, and run on their own hardware. This launch does not just shift the AI landscape; it opens a new era of transparency, customization, and raw computational power for researchers, developers, and enthusiasts around the world.

Why is this release a big deal?

OpenAI has long cultivated a reputation for both breathtaking model capabilities and a fortress approach to proprietary technology. That changed on August 5, 2025. These new models are distributed under the permissive Apache 2.0 license, making them open to commercial and experimental use. The difference? Instead of hiding behind cloud APIs, anyone can now put OpenAI-grade models under the microscope, or put them directly to work on problems at the edge, in the enterprise, or even on consumer devices.

Meet the models: Technical marvels with real-world muscle

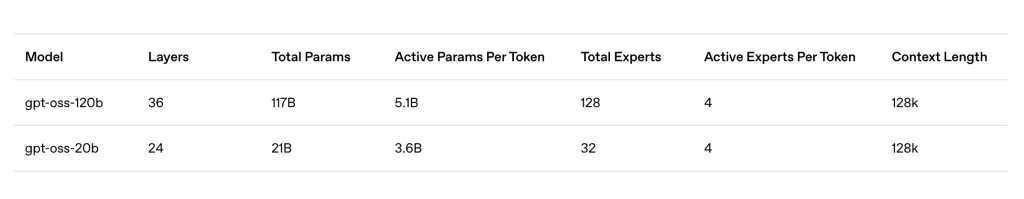

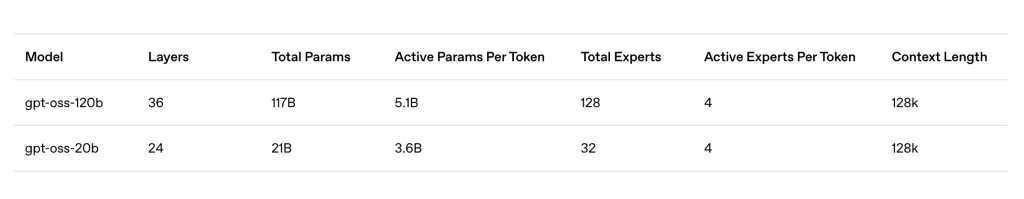

gpt-oss-120b

- Size: 117 billion parameters (with 5.1 billion active parameters per token, thanks to Mixture-of-Experts technology)

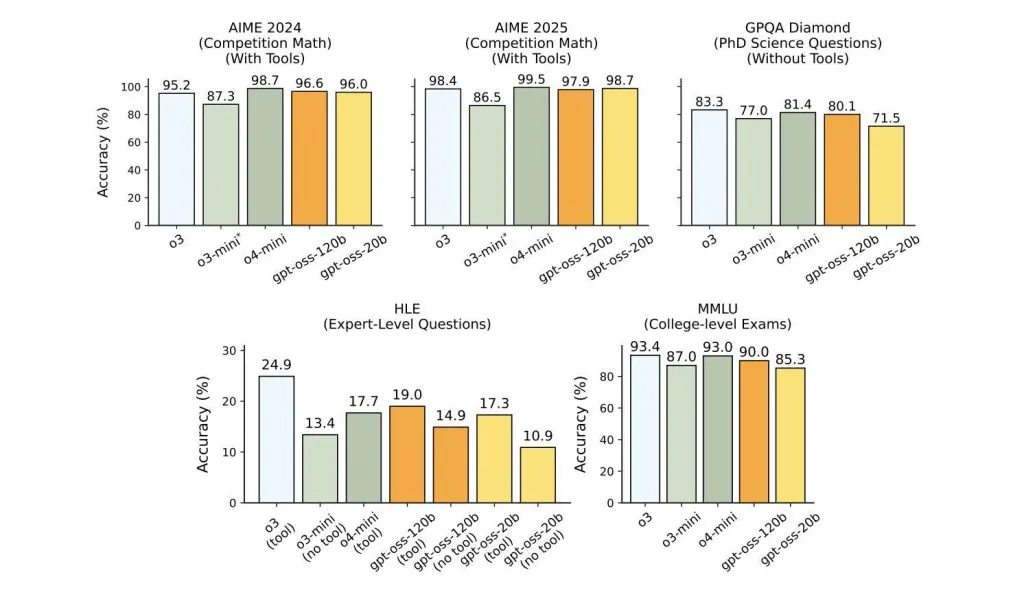

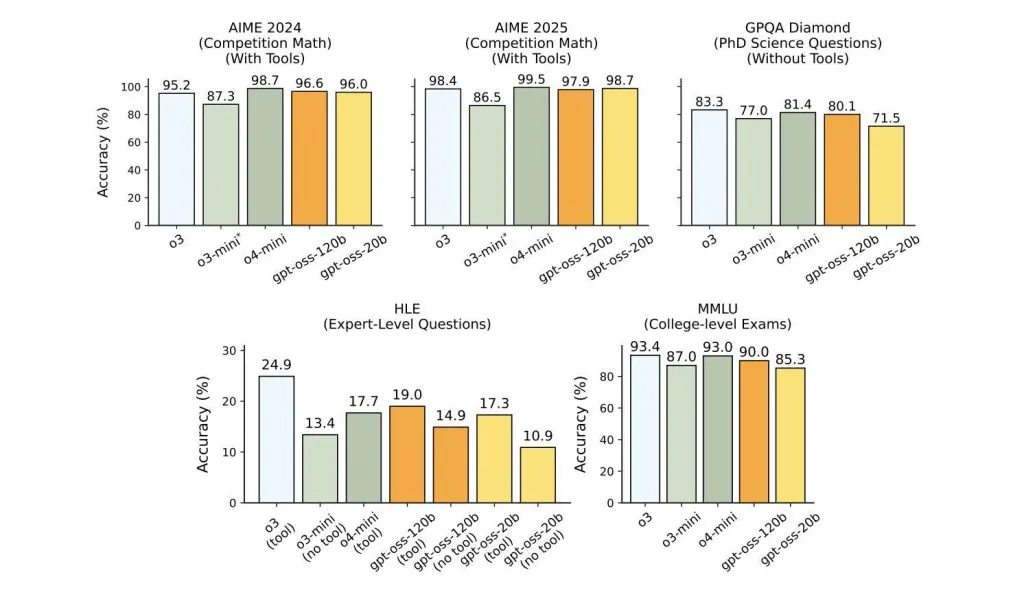

- Performance: Punches at the level of OpenAI's o4-mini (or better) on real-world benchmarks.

- Hardware: Runs on a single high-end GPU, such as an Nvidia H100 or another 80 GB-class card. No server farm required.

- Reasoning: Features chain-of-thought and agentic capabilities, ideal for research automation, technical writing, code generation, and more.

- Customization: Supports configurable “reasoning effort” (low, medium, high), so you can dial up power when you need it or save resources when you don't.

- Context: Handles up to a massive 128,000 tokens, enough text to read entire books at once.

- Fine-tuning: Built for easy customization and local/private inference: no rate limits, complete data privacy, and total deployment control.
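The configurable reasoning effort in the list above can be sketched in a few lines. This is a minimal sketch, assuming the effort level is requested through a system-message line such as `Reasoning: high`; the helper name `build_messages` is hypothetical, and the returned list is the common chat-message format that you would hand to whichever local runtime you use.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> list[dict]:
    """Return a chat-style message list that requests a reasoning effort level."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        # Assumption: the effort level is set via a "Reasoning: <level>" system line.
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    for message in build_messages("Summarize the trade-offs of MoE models.", "high"):
        print(message)
```

Validating the level up front keeps a typo such as `"hgih"` from silently falling back to whatever default the runtime applies.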

gpt-oss-20b

- Size: 21 billion parameters (with 3.6 billion active parameters per token, also thanks to Mixture-of-Experts).

- Performance: Sits squarely between o3-mini and o4-mini on reasoning tasks, on par with the best “small” models available.

- Hardware: Runs on consumer-grade laptops. With only 16 GB of RAM or equivalent, it is the most powerful open-weight reasoning model you can fit on a phone or local PC.

- Mobile ready: Specifically optimized to deliver low-latency, on-device AI for smartphones (including Qualcomm Snapdragon support), edge devices, and any scenario that requires local inference without the cloud.

- Agentic powers: Like its bigger sibling, the 20B model can use APIs, generate structured outputs, and execute Python code on demand.
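To make the tool-use bullet concrete, here is a minimal sketch of the application side of a tool call. It assumes the model emits a JSON object with `name` and `arguments` fields; that shape, the `get_weather` tool, and the `dispatch_tool_call` helper are all hypothetical, chosen for illustration rather than taken from the gpt-oss documentation.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical local tool; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


# Registry mapping tool names to the Python callables that implement them.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call, run the matching tool, and return a JSON result."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    result = TOOLS[name](**call.get("arguments", {}))
    return json.dumps(result)


if __name__ == "__main__":
    # Assumed shape of a structured output produced by the model.
    model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch_tool_call(model_output))
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed, which matters once the tools do more than return canned data.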

Technical details: Mixture-of-Experts and MXFP4 quantization

Both models use a Mixture-of-Experts (MoE) architecture, activating only a handful of “expert” subnetworks per token. The result? Enormous parameter counts with modest memory usage and lightning-fast inference, a good fit for today's high-performance consumer and enterprise hardware.
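The routing idea can be illustrated with a toy example. This is a minimal sketch of one common top-k MoE variant (pick the k highest router scores, then renormalize them with a softmax); it is not the gpt-oss implementation, and the scalar "token", the four toy experts, and the function names are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_scores, experts, top_k=2):
    """Route one token through its top_k highest-scoring experts only.

    x           -- the token representation (a float here, for simplicity)
    gate_scores -- one router score per expert
    experts     -- list of callables, one per expert
    """
    # Pick the indices of the top_k experts by router score.
    order = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = order[:top_k]
    # Renormalize the router scores over the chosen experts.
    weights = softmax([gate_scores[i] for i in chosen])
    # Only the chosen experts are evaluated; the others cost no compute.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    # Four toy experts, each a simple scaling function.
    experts = [lambda v, k=k: v * k for k in (1.0, 2.0, 3.0, 4.0)]
    output, used = moe_forward(1.0, [0.1, 2.0, 0.3, 2.0], experts, top_k=2)
    print(output, used)
    # Active-parameter fractions from the figures quoted in the article.
    print(f"120B: {5.1 / 117:.1%} active, 20B: {3.6 / 21:.1%} active")
```

The last line uses the article's own numbers: only a small share of each model's parameters takes part in any single token's forward pass, which is where the speed advantage comes from.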

Add to that native MXFP4 quantization, which shrinks the models' memory footprints without sacrificing accuracy. The 120B model fits on a single advanced GPU; the 20B model can run comfortably on laptops, desktops, and even mobile hardware.
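A back-of-the-envelope calculation shows why these footprints are plausible. This is a rough sketch, assuming every parameter is stored as MXFP4 (4-bit values plus one shared 8-bit scale per block of 32 values, as defined in the Microscaling format); in practice some tensors are typically kept in higher precision, and inference needs extra memory for activations and the KV cache, so treat the output as an estimate only. The function name `mxfp4_gigabytes` is hypothetical.

```python
ELEMENT_BITS = 4   # each MXFP4 value is a 4-bit float
SCALE_BITS = 8     # one shared 8-bit scale per block
BLOCK_SIZE = 32    # values per block in the MX format


def mxfp4_gigabytes(num_params: float) -> float:
    """Estimate weight storage in GB if every parameter were stored as MXFP4."""
    bits_per_param = ELEMENT_BITS + SCALE_BITS / BLOCK_SIZE  # 4.25 bits
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in (("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)):
        print(f"{name}: ~{mxfp4_gigabytes(params):.1f} GB of weights")
```

Under these assumptions the estimates come to roughly 62 GB and 11 GB, which is consistent with the 80 GB GPU and 16 GB memory figures quoted earlier.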

Real-world impact: Tools for enterprises, developers, and hobbyists

- For enterprises: On-premises deployment for data privacy and compliance. No more black-box cloud AI: the financial, healthcare, and legal sectors can now own and secure every piece of their LLM workflow.

- For developers: Freedom to tinker, fine-tune, and extend. No API limits, no SaaS bills, just pure, customizable AI with full control over latency and cost.

- For the community: The models are already available on Hugging Face, Ollama, and more, going from download to deployment in minutes.

How does gpt-oss stack up?

Here is the kicker: gpt-oss-120b is the first freely available open-weight model that matches the performance of top-tier commercial models like o4-mini. The 20B variant not only bridges the performance gap for on-device AI, but will likely accelerate innovation and push the limits of what is possible with local LLMs.

The future is open (again)

OpenAI's gpt-oss is not just a release; it is a clarion call. By making state-of-the-art reasoning, tool use, and agentic capabilities available for anyone to inspect and deploy, OpenAI throws open the door to a whole community of makers, researchers, and enterprises, not just to use these models, but to build on them, iterate, and evolve.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.