San Francisco-based artificial intelligence company OpenAI has announced the release of Point-E, a machine learning system that lets users generate a 3D object from a simple text prompt.

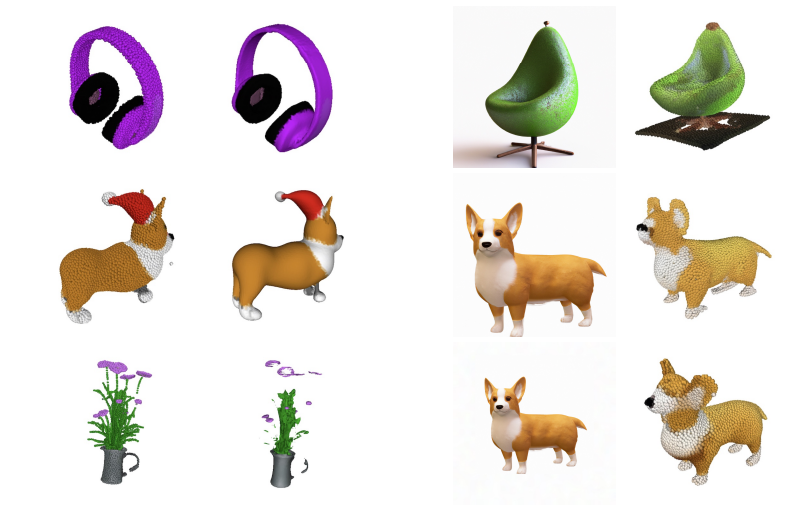

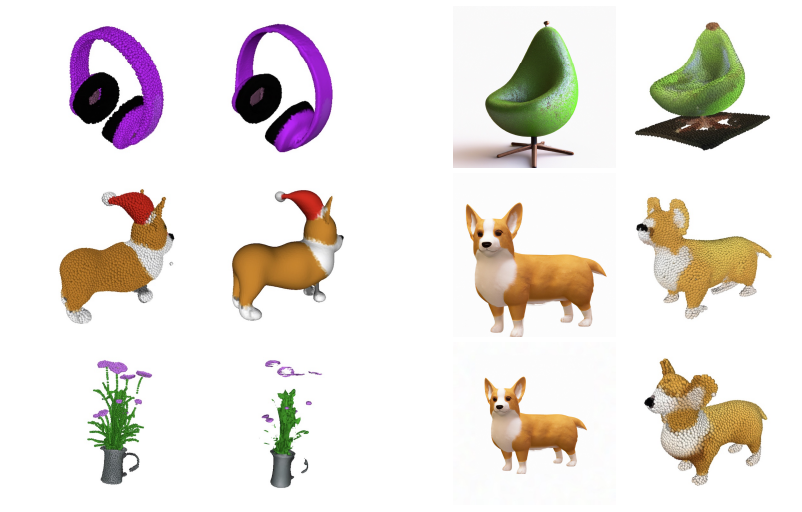

The research team developed an entirely new approach. Point-E does not create 3D objects in the traditional sense. Instead, it creates point clouds: discrete sets of data points in space that together represent a three-dimensional shape.
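To make the idea concrete, here is a minimal Python sketch of a point cloud as a plain list of 3D positions with optional per-point colors. It is not Point-E's actual data format; the `Point` class and the `sample_sphere_cloud` function are hypothetical names chosen for illustration.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float
    z: float
    # Optional per-point color channels in [0, 1] (illustrative defaults).
    r: float = 0.5
    g: float = 0.5
    b: float = 0.5


def sample_sphere_cloud(n: int, radius: float = 1.0, seed: int = 0) -> list[Point]:
    """Return n points scattered over the surface of a sphere.

    The result is only a discrete sample of the shape: nothing connects
    the points, so there is no surface, just positions (and colors).
    """
    rng = random.Random(seed)
    cloud = []
    for _ in range(n):
        # Normalizing a Gaussian vector gives a uniformly random direction.
        vx, vy, vz = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        norm = math.sqrt(vx * vx + vy * vy + vz * vz) or 1.0
        cloud.append(Point(radius * vx / norm, radius * vy / norm, radius * vz / norm))
    return cloud
```

For example, `sample_sphere_cloud(1024)` yields 1,024 unconnected points that only suggest a sphere; the finer the detail of the object, the more points such a representation needs.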

Generating point clouds is much easier than generating real images, but point clouds do not capture an object's fine-grained shape or texture, a key limitation of Point-E. To get around this limitation, the Point-E team trained an additional AI system to convert the point clouds into meshes.
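The difference between the two representations is that a mesh adds connectivity: besides the vertex positions (the same kind of data a point cloud holds), it lists the triangular faces that join them into a surface. The Python sketch below, using a hypothetical `TriangleMesh` class, shows why that matters: surface quantities such as area become computable. It illustrates the data structure only and does not reproduce Point-E's learned point-cloud-to-mesh model.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class TriangleMesh:
    vertices: list[Vec3]               # positions, like a point cloud
    faces: list[tuple[int, int, int]]  # connectivity: indices into `vertices`

    def surface_area(self) -> float:
        total = 0.0
        for i, j, k in self.faces:
            a, b, c = self.vertices[i], self.vertices[j], self.vertices[k]
            ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
            ac = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
            # Cross product ab x ac; half its length is the triangle's area.
            cx = ab[1] * ac[2] - ab[2] * ac[1]
            cy = ab[2] * ac[0] - ab[0] * ac[2]
            cz = ab[0] * ac[1] - ab[1] * ac[0]
            total += 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)
        return total


def unit_cube() -> TriangleMesh:
    """A unit cube: 8 corner vertices joined by 12 triangles (2 per side)."""
    vertices = [
        (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0),
        (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 1.0, 1.0),
    ]
    faces = [
        (0, 1, 2), (0, 2, 3),  # bottom (z = 0)
        (4, 5, 6), (4, 6, 7),  # top (z = 1)
        (0, 1, 5), (0, 5, 4),  # front (y = 0)
        (3, 2, 6), (3, 6, 7),  # back (y = 1)
        (0, 3, 7), (0, 7, 4),  # left (x = 0)
        (1, 2, 6), (1, 6, 5),  # right (x = 1)
    ]
    return TriangleMesh(vertices, faces)
```

Here `unit_cube().surface_area()` evaluates to 6.0, whereas the eight corner positions on their own, read as a point cloud, say nothing about which surfaces exist between them.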

Point-E consists of two models: a text-to-image model and an image-to-3D model. The text-to-image model, similar to generative art systems such as OpenAI's own DALL-E 2, was trained on labeled images to learn the associations between words and visual concepts. The image-to-3D model, on the other hand, was trained on a set of images paired with 3D objects so that it learns to translate effectively between the two.
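The two-stage design can be sketched as a simple composition: the prompt goes into the first model, and its output image goes into the second. In the minimal Python sketch below, `generate_3d`, `toy_text_to_image` and `toy_image_to_3d` are hypothetical names, and the two toy functions are trivial stand-ins that only mimic the input and output types; they do not implement either of Point-E's models.

```python
from dataclasses import dataclass
from typing import Callable

PointCloud = list[tuple[float, float, float]]


@dataclass
class Image:
    pixels: list[list[float]]  # grayscale values in [0, 1] (toy representation)


def generate_3d(prompt: str,
                text_to_image: Callable[[str], Image],
                image_to_3d: Callable[[Image], PointCloud]) -> PointCloud:
    """Chain the two stages: prompt -> synthetic image -> point cloud."""
    image = text_to_image(prompt)  # stage 1: text-to-image model
    return image_to_3d(image)      # stage 2: image-to-3D model


def toy_text_to_image(prompt: str) -> Image:
    # Stand-in for a DALL-E-style model: a 2x2 "image" whose brightness
    # depends only on the prompt length.
    v = (len(prompt) % 10) / 10
    return Image(pixels=[[v, v], [v, v]])


def toy_image_to_3d(image: Image) -> PointCloud:
    # Stand-in for the image-to-3D model: lift each pixel to (row, col, brightness).
    return [(float(r), float(c), value)
            for r, row in enumerate(image.pixels)
            for c, value in enumerate(row)]
```

Calling `generate_3d("a red chair", toy_text_to_image, toy_image_to_3d)` returns four points, one per toy pixel. Keeping the stages behind plain function parameters mirrors the article's point that each model is trained separately and can, in principle, be swapped out independently.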

One of the greatest advantages of this approach is speed: according to the researchers, Point-E can produce a 3D model in one to two minutes on a single GPU, so it demands relatively little hardware to produce the final result.

OpenAI researchers note that Point-E's point clouds could be used to fabricate real-world objects, for example through 3D printing. With the additional mesh-conversion model, the system could also find its way into game development and entertainment workflows.

“We find that Point-E is capable of efficiently producing diverse and complex 3D shapes conditioned on text prompts. We hope that our approach can serve as a starting point for further work in the field of text-to-3D synthesis,” the researchers said.

Find out more about Point-E in the paper.

The code is available on GitHub.