NVIDIA AI has introduced OpenReasoning-Nemotron, a family of large language models (LLMs) designed to excel at complex reasoning tasks across mathematics, science, and code. This model suite, comprising 1.5B, 7B, 14B, and 32B parameter versions, has been distilled from the 671B DeepSeek R1 0528 model, capturing its high-level reasoning capabilities in significantly smaller and more efficient models.

The release positions NVIDIA as a leading contributor to the open-source LLM ecosystem, delivering models that push state-of-the-art (SOTA) performance while remaining commercially permissive and widely accessible via Hugging Face.

Model overview and architecture

✅ Distillation from DeepSeek R1 0528 (671B)

At the heart of OpenReasoning-Nemotron is a distillation strategy that transfers reasoning ability from DeepSeek R1, a massive 671B-parameter model, into smaller architectures. The process prioritizes reasoning generalization over raw token prediction, enabling compact models to perform effectively on structured, high-cognition tasks.
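The article describes distillation and, in the FAQ below, training via SFT on teacher-generated traces. A minimal sketch of that idea, assuming plain sequence-level distillation (ordinary next-token cross-entropy on text the teacher produced), might look like the following. It is not NVIDIA's training code; the function names are hypothetical, and the logits are assumed to be pre-aligned so that row `i` predicts `teacher_tokens[i]`.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def distillation_sft_loss(
    student_logits: Sequence[Sequence[float]],  # one row of vocab logits per position (pre-aligned)
    teacher_tokens: Sequence[int],              # token ids from the teacher-generated trace
    loss_mask: Sequence[bool],                  # True for trace tokens, False for prompt tokens
) -> float:
    """Mean negative log-likelihood of the teacher's tokens under the student.

    Sequence-level distillation: the student is fine-tuned on text the teacher
    produced, rather than matching the teacher's full output distributions.
    """
    total, count = 0.0, 0
    for logits, token, keep in zip(student_logits, teacher_tokens, loss_mask):
        if not keep:
            continue  # prompt tokens are context only, not training targets
        total -= log_softmax(logits)[token]
        count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    # A student with uniform logits over a 4-token vocabulary scores log(4) per target token.
    uniform = [[0.0, 0.0, 0.0, 0.0]] * 3
    print(distillation_sft_loss(uniform, [1, 2, 3], [False, True, True]))
```

Masking the prompt keeps the student from being trained to reproduce the question itself; only the teacher's reasoning and answer contribute to the loss.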

The distillation dataset emphasizes mathematics, science, and programming, aligning the models' capabilities with key reasoning domains.

📊 Model variants and specifications

| Model name | Parameters | Intended use | Hugging Face page |
|---|---|---|---|
| OpenReasoning-Nemotron-1.5B | 1.5B | Entry-level reasoning and inference | Link |
| OpenReasoning-Nemotron-7B | 7B | Mid-scale reasoning, good for code and math | Link |
| OpenReasoning-Nemotron-14B | 14B | Advanced reasoning capabilities | Link |
| OpenReasoning-Nemotron-32B | 32B | Near frontier-model performance on logic-intensive tasks | Link |

All models are compatible with Transformer architectures, support FP16/INT8 quantization, and are optimized for NVIDIA GPUs and the NeMo framework.
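To make the FP16/INT8 trade-off concrete, a rough weights-only memory estimate is simply parameter count × bytes per parameter (2 bytes for FP16, 1 byte for INT8). The sketch below uses the nominal sizes from the table; it ignores activations, KV cache, and framework overhead, so real inference needs more memory than these figures.

```python
# Bytes per parameter for the two precisions mentioned above.
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}

# Nominal parameter counts of the four released variants, in billions.
VARIANTS_B = {"1.5B": 1.5, "7B": 7.0, "14B": 14.0, "32B": 32.0}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    reduces to params_billions * bytes_per_param. Weights only.
    """
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for name, size in VARIANTS_B.items():
        fp16 = weight_memory_gb(size, "fp16")
        int8 = weight_memory_gb(size, "int8")
        print(f"{name:>5}: ~{fp16:.1f} GB in FP16, ~{int8:.1f} GB in INT8")
```

By this estimate the 32B model needs roughly 64 GB for FP16 weights and about 32 GB in INT8, which is why quantization matters most for the larger variants.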

Performance benchmarks

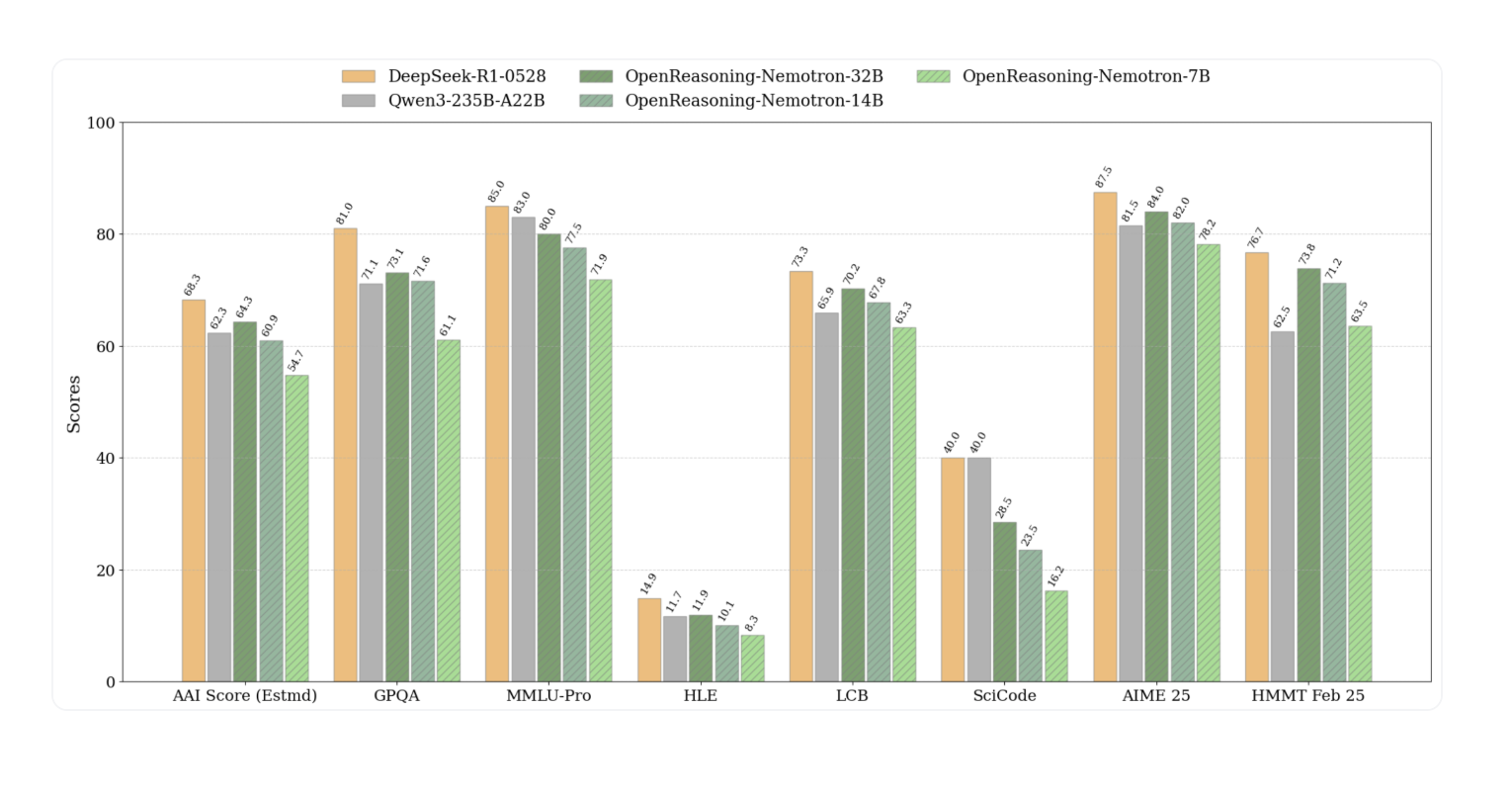

These models set new state-of-the-art pass@1 scores across multiple reasoning benchmarks:

| Model | GPQA | MMLU-Pro | HLE | LiveCodeBench | SciCode | AIME24 | AIME25 | HMMT Feb 2025 |
|---|---|---|---|---|---|---|---|---|
| 1.5B | 31.6 | 47.5 | 5.5 | 28.6 | 2.2 | 55.5 | 45.6 | 31.5 |
| 7B | 61.1 | 71.9 | 8.3 | 63.3 | 16.2 | 84.7 | 78.2 | 63.5 |
| 14B | 71.6 | 77.5 | 10.1 | 67.8 | 23.5 | 87.8 | 82.0 | 71.2 |
| 32B | 73.1 | 80.0 | 11.9 | 70.2 | 28.5 | 89.2 | 84.0 | 73.8 |

All scores reported are pass@1 without GenSelect.
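pass@1 is the fraction of problems solved by a single sampled answer. When several samples are drawn per problem, a widely used unbiased estimator (introduced alongside the HumanEval code benchmark) computes pass@k = 1 − C(n−c, k) / C(n, k), where n samples were drawn and c were correct; for k = 1 this reduces to c / n. The article does not state how many samples NVIDIA drew per problem, so the sketch below only illustrates the metric itself.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that were correct, k: sample budget.
    Returns the probability that at least one of k samples drawn without
    replacement from the n is correct.
    """
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int = 1) -> float:
    """Average pass@k over (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Two problems: 3 of 10 samples correct, and 10 of 10 correct.
    print(benchmark_pass_at_k([(10, 3), (10, 10)], k=1))
```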

🔍 GenSelect (heavy mode)

Using generative selection with 64 candidates ("GenSelect"), performance improves further, especially for the 32B model:

- 32B achieves: AIME24 89.2 → 93.3, AIME25 84.0 → 90.0, HMMT 73.8 → 96.7, LiveCodeBench 70.2 → 75.3.

This demonstrates strong emergent reasoning performance at scale.
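GenSelect uses the model itself to compare candidate solutions and choose one. The sketch below only illustrates the surrounding sample-then-select loop with n = 64 candidates; the `generate` callable is hypothetical, and a simple majority vote stands in for the generative judge. It is explicitly not GenSelect, just the same interface with a much simpler selector.

```python
import itertools
from collections import Counter
from typing import Callable, List


def best_of_n(
    generate: Callable[[str], str],        # hypothetical: returns one final answer per call
    select: Callable[[List[str]], str],    # picks one answer from the candidate pool
    prompt: str,
    n: int = 64,
) -> str:
    """Sample n candidate answers, then let a selector choose one."""
    candidates = [generate(prompt) for _ in range(n)]
    return select(candidates)


def majority_vote(candidates: List[str]) -> str:
    """Stand-in selector: most frequent answer (ties go to the first seen).

    GenSelect instead prompts the model to compare candidate solutions
    and pick the best one; this vote only mimics the interface.
    """
    return Counter(candidates).most_common(1)[0][0]


if __name__ == "__main__":
    # Deterministic fake "model" that cycles through three answers.
    answers = itertools.cycle(["42", "41", "42"])
    print(best_of_n(lambda p: next(answers), majority_vote, "toy prompt", n=6))
```

Swapping `majority_vote` for a model-based judge changes only the `select` argument, which is why the loop is written with the selector as a parameter.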

Training data and reasoning specialization

The training corpus is a distilled, high-quality subset of data generated by DeepSeek R1 0528. Key characteristics include:

- Heavily curated reasoning data from mathematics, science, and computer science.

- Fine-tuning designed to reinforce multi-step chains of thought.

- Emphasis on logical consistency, constraint satisfaction, and symbolic reasoning.

This deliberate curation ensures strong alignment with the real-world reasoning problems found in academia and applied ML domains.
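The article does not describe how the corpus was curated. One common practice for distilled math data is answer-based filtering: keep a teacher trace only if its final answer matches a known reference. The sketch below illustrates that practice under the hypothetical assumption that answers are wrapped in `\boxed{...}`; it is an illustrative example, not NVIDIA's pipeline, and real pipelines typically normalize answers before comparing them.

```python
import re
from typing import Iterable, List, Optional, Tuple

# Hypothetical convention: the final answer appears as \boxed{...} (no nested braces).
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def final_answer(trace: str) -> Optional[str]:
    """Return the last \\boxed{...} answer in a trace, or None if absent."""
    matches = BOXED.findall(trace)
    return matches[-1].strip() if matches else None


def filter_traces(samples: Iterable[Tuple[str, str, str]]) -> List[Tuple[str, str]]:
    """Keep (problem, trace) pairs whose final answer matches the reference.

    samples: (problem, teacher_trace, reference_answer) triples.
    Uses exact string match after stripping whitespace.
    """
    kept = []
    for problem, trace, reference in samples:
        answer = final_answer(trace)
        if answer is not None and answer == reference.strip():
            kept.append((problem, trace))
    return kept


if __name__ == "__main__":
    data = [
        ("2+2?", "Add the numbers... \\boxed{4}", "4"),
        ("3+3?", "Guessing... \\boxed{5}", "6"),   # wrong answer: dropped
        ("x?", "No final answer given.", "1"),      # no boxed answer: dropped
    ]
    print(filter_traces(data))
```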

Open source and ecosystem integration

All four OpenReasoning-Nemotron models are released under an open, commercially permissive license, with model cards, evaluation scripts, and inference-ready weights available on Hugging Face.

These models are designed to plug into the NVIDIA NeMo framework and support TensorRT-LLM, ONNX, and Hugging Face Transformers toolchains, facilitating rapid deployment in production and research settings.

Key use cases

- Math tutors and theorem solvers

- Scientific QA agents and medical reasoning systems

- Code generation and debugging assistants

- Chain-of-thought multi-hop question answering

- Synthetic data generation for structured domains

Conclusion

NVIDIA's OpenReasoning-Nemotron models offer a pragmatic, open-source path to reasoning ability at scale without frontier-scale compute costs. By distilling the 671B DeepSeek R1 and targeting high-leverage reasoning domains, these models strike a strong balance of accuracy, efficiency, and accessibility.

For developers, researchers, and enterprises working on logic-intensive AI applications, OpenReasoning-Nemotron provides a compelling foundation, free from the trade-offs that often accompany proprietary or over-generalized models.

🔍 Frequently asked questions (FAQ)

Q1. Which benchmarks are supported?

GPQA, MMLU-Pro, HLE, LiveCodeBench, SciCode, AIME 2024/25, and HMMT Feb 2025 (pass@1).

Q2. How much data was used?

A distillation corpus of 5 million reasoning traces across domains, generated by DeepSeek-R1-0528.

Q3. Is reinforcement learning used?

No. The models are trained via SFT only, preserving efficiency while leaving room for future RL research.

Q4. Can I scale up reasoning with GenSelect?

Yes. Using GenSelect significantly boosts performance: the 32B model jumps from 73.8 to 96.7 on HMMT with 64 candidates.

Check out the technical details. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.