
AI has just unlocked a threefold boost in GPU performance, without human intervention. The DeepReinforce team introduced a new framework called CUDA-L1 that delivers an average 3.12× speedup and up to a 120× peak speedup across 250 real-world GPU tasks. This is not a mere academic promise: every result can be reproduced with open-source code on widely used NVIDIA hardware.

The breakthrough: Contrastive Reinforcement Learning (Contrastive-RL)

At the heart of CUDA-L1 is a major leap in AI learning strategy: Contrastive Reinforcement Learning (Contrastive-RL). Unlike traditional RL, where an AI simply generates solutions, receives numerical rewards, and blindly updates its model parameters, Contrastive-RL feeds performance scores and previous variants directly into the next generation prompt.

- Performance scores and code variants are given to the AI in each optimization round.

- The model must then write a “performance analysis” in natural language, reflecting on which code was fastest, why, and which strategies led to that speedup.

- Each step forces complex reasoning, guiding the model to synthesize not only a new code variant but also a more generalized, data-driven mental model of what makes CUDA code fast.

The result? The AI discovers not only well-known optimizations but also non-obvious tricks that even human experts often overlook, including mathematical shortcuts that bypass computation entirely and memory strategies tuned to specific hardware quirks.
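The prompt construction described above can be sketched in a few lines. This is only an illustration of the idea, not the authors' implementation: the `Variant` record, the `build_contrastive_prompt` function, and the prompt wording are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One previously generated kernel and its measured speedup (hypothetical record)."""
    code: str
    speedup: float  # reference runtime divided by this variant's runtime


def build_contrastive_prompt(task: str, variants: list[Variant]) -> str:
    """Assemble a prompt that shows earlier variants next to their scores."""
    # List the fastest variant first so the contrast is easy to read.
    ranked = sorted(variants, key=lambda v: v.speedup, reverse=True)
    sections = [f"Task:\n{task}", "Previous variants and measured speedups:"]
    for i, v in enumerate(ranked, start=1):
        sections.append(f"[Variant {i}] speedup = {v.speedup:.2f}x\n{v.code}")
    # Ask for the written analysis before the new code, as the article describes.
    sections.append(
        "First write a performance analysis: which variant was fastest, why, "
        "and which strategies produced the speedup. "
        "Then write a new, faster variant."
    )
    return "\n\n".join(sections)


prompt = build_contrastive_prompt(
    "Compute diag(A) @ B",
    [
        Variant("torch.diag(A) @ B", 1.0),
        Variant("A.unsqueeze(1) * B", 64.0),
    ],
)
print(prompt)
```

The point of the design is that the scores live in the prompt itself, so the model can compare variants in context rather than receiving only a scalar reward after the fact.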

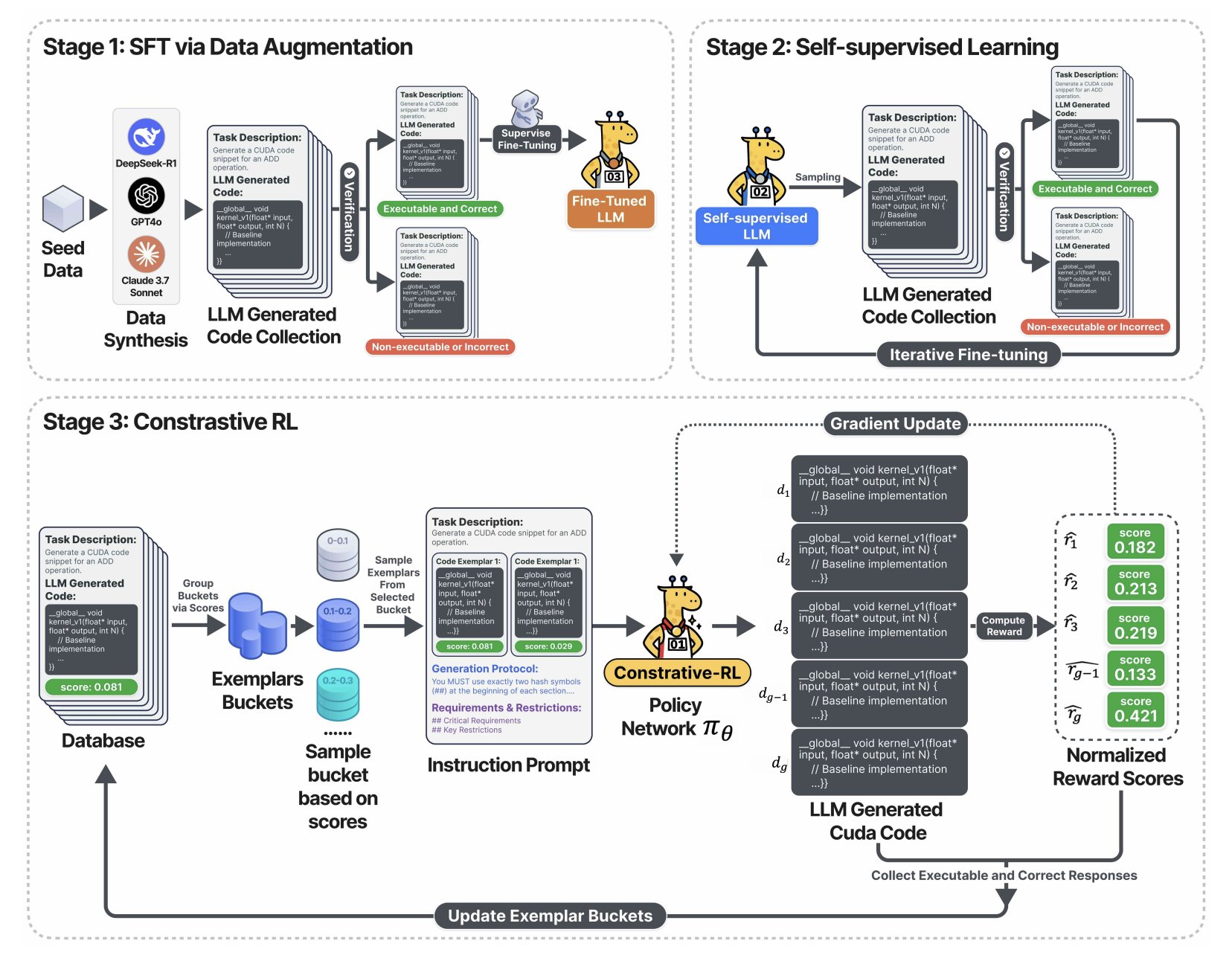

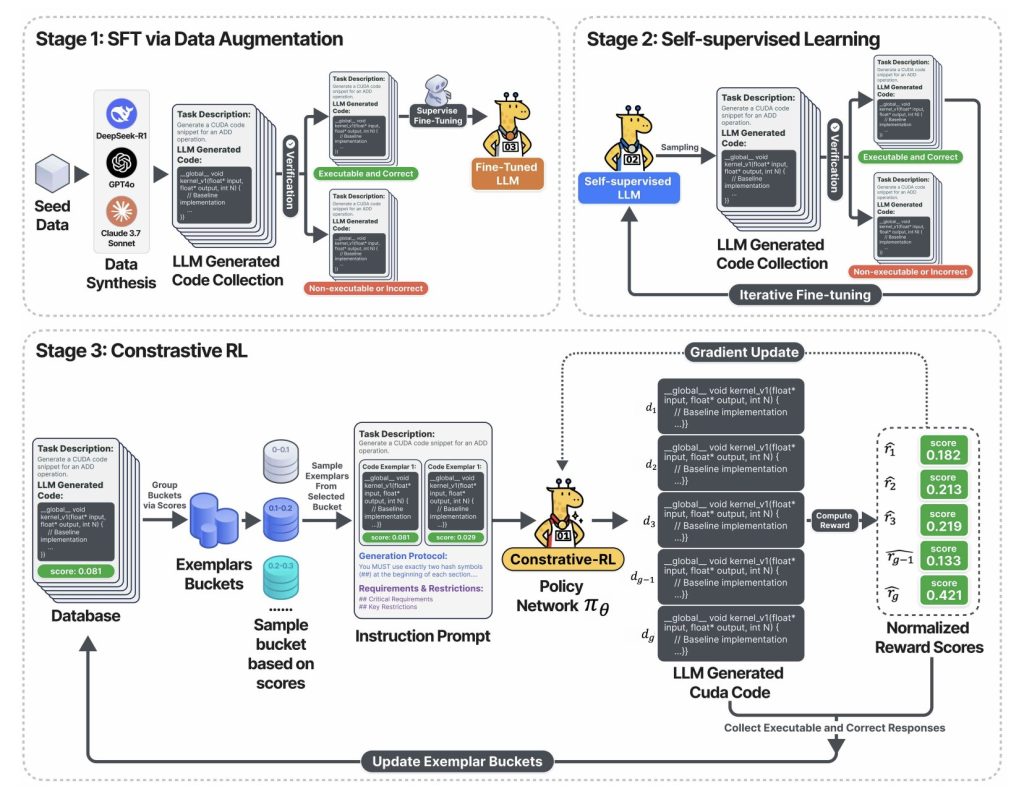

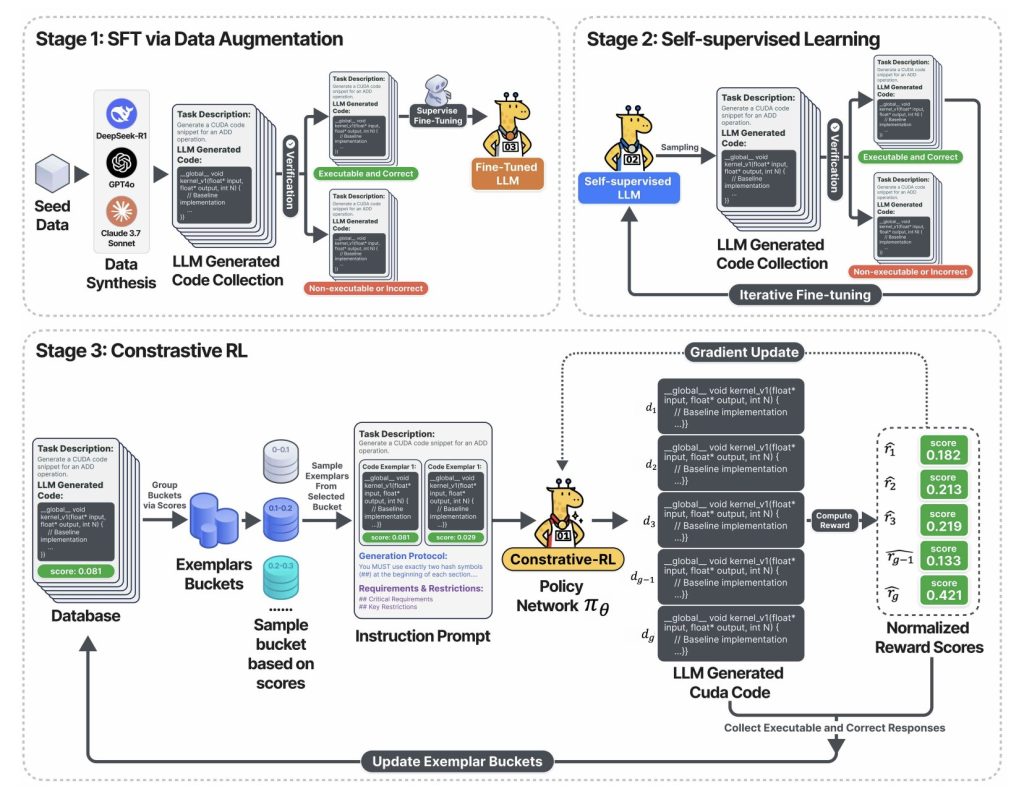

The diagram above captures the three-stage training pipeline:

- Stage 1: The LLM is fine-tuned on validated CUDA code, collected by sampling from leading foundation models (DeepSeek-R1, GPT-4o, Claude, etc.) and retaining only correct, executable outputs.

- Stage 2: The model enters a self-training loop: it generates large amounts of CUDA code, keeps only the functional outputs, and uses them to learn further. Result: rapid improvement in code correctness and coverage, all without manual labeling.

- Stage 3: In the Contrastive-RL phase, the system samples several code variants, shows each with its measured speed, and challenges the AI to debate, analyze, and out-reason previous generations before producing the next round of optimizations. This reflection-and-improvement loop is the key flywheel that delivers massive speedups.
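The filtering rule shared by Stages 1 and 2 (keep only outputs that run and are correct) can be sketched as follows. This is a simplified stand-in: candidates here are plain Python functions rather than CUDA kernels, and `keep_valid`, the tolerance, and the toy candidates are hypothetical.

```python
from typing import Callable, Sequence

Candidate = Callable[[Sequence[float]], list]


def keep_valid(
    candidates: list,
    reference: Candidate,
    test_input: Sequence[float],
    tol: float = 1e-6,
) -> list:
    """Return the candidates that run without error and match the reference."""
    expected = reference(test_input)
    kept = []
    for cand in candidates:
        try:
            got = cand(test_input)
        except Exception:
            continue  # not executable: discard
        same_len = len(got) == len(expected)
        if same_len and all(abs(g - e) <= tol for g, e in zip(got, expected)):
            kept.append(cand)  # correct: keep for the next training round
    return kept


def reference(xs):
    return [2.0 * x for x in xs]


def good(xs):
    return [x + x for x in xs]


def wrong(xs):
    return [x * x for x in xs]


def crashes(xs):
    return [x / 0 for x in xs]


survivors = keep_valid([good, wrong, crashes], reference, [1.0, 2.0, 3.0])
print([f.__name__ for f in survivors])  # ['good']
```

Because the check is automatic, the loop can grow its own training set without manual labels, which is the property the article highlights.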

How good is CUDA-L1? The hard data

Speedups across the board

KernelBench, the gold-standard benchmark for GPU code generation (250 real-world PyTorch workloads), was used to measure CUDA-L1:

| Model / Stage | Avg. speedup | Max speedup | Median | Success rate |
|---|---|---|---|---|
| Vanilla Llama-3.1-405B | 0.23× | 3.14× | 0× | 68/250 |
| DeepSeek-R1 (RL-tuned) | 1.41× | 44.2× | 1.17× | 248/250 |
| CUDA-L1 (all stages) | 3.12× | 120× | 1.42× | 249/250 |

- 3.12× average speedup: The AI found improvements on almost every task.

- 120× maximum speedup: Some computational bottlenecks and inefficient code (such as diagonal matrix multiplications) were transformed with fundamentally superior solutions.

- Works across hardware: Code optimized on NVIDIA A100 GPUs retained substantial gains when ported to other architectures (L40, H100, RTX 3090, H20), with average speedups from 2.37× to 3.12× and median gains consistently above 1.1× on all devices.
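To see how an average, a maximum, a median, and a success rate can diverge this much, here is a small sketch of the aggregation. The speedup values are made up, and scoring a failed task as 0× is an assumption: it is consistent with a median of 0× at 68/250 successes, but the article does not state the rule.

```python
from statistics import mean, median
from typing import Optional


def summarize(speedups: list) -> dict:
    """Aggregate per-task speedups; None marks a task with no valid kernel."""
    # Assumption: a failed task counts as a 0x speedup.
    scored = [0.0 if s is None else s for s in speedups]
    return {
        "mean": mean(scored),
        "max": max(scored),
        "median": median(scored),
        "successes": sum(s is not None for s in speedups),
        "tasks": len(speedups),
    }


# Made-up speedups for five tasks, not figures from the benchmark.
example: list = [1.1, 1.3, None, 1.5, 16.1]
stats = summarize(example)
print(stats)
```

A single large outlier pulls the mean far above the median here, which is the same pattern as CUDA-L1's 3.12× average sitting next to a 1.42× median.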

Case study: discovering hidden 64× and 120× speedups

diag(A) * B: matrix multiplication with a diagonal matrix

- Reference (inefficient): `torch.diag(A) @ B` builds a full diagonal matrix, requiring O(N²M) compute and memory.

- CUDA-L1 optimized: `A.unsqueeze(1) * B` exploits broadcasting and achieves only O(NM) complexity, resulting in a 64× speedup.

- Why: The AI reasoned that allocating a full diagonal matrix was unnecessary. This insight was unreachable through brute-force mutation, but surfaced through comparative reflection across generated solutions.
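The equivalence behind this rewrite is easy to check by hand. The sketch below uses plain Python lists instead of PyTorch tensors so it runs without a GPU or extra packages; the two function names are hypothetical, and each mirrors one of the two expressions above.

```python
def diag_matmul_reference(a, b):
    """Mirror torch.diag(A) @ B: build the N x N matrix, then do a full matmul."""
    n, m = len(a), len(b[0])
    # N^2 storage, almost all of it zeros.
    d = [[a[i] if i == j else 0.0 for j in range(n)] for i in range(n)]
    # N^2 * M multiplications, almost all of them by zero.
    return [
        [sum(d[i][k] * b[k][j] for k in range(n)) for j in range(m)]
        for i in range(n)
    ]


def diag_matmul_rowscale(a, b):
    """Mirror A.unsqueeze(1) * B: scale row i of B by a[i], N * M multiplications."""
    return [[a_i * x for x in row] for a_i, row in zip(a, b)]


a = [2.0, 3.0]
b = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
assert diag_matmul_reference(a, b) == diag_matmul_rowscale(a, b)
print(diag_matmul_rowscale(a, b))  # [[2.0, 4.0, 6.0], [12.0, 15.0, 18.0]]
```

Multiplying by a diagonal matrix only ever scales each row of B, so the off-diagonal zeros contribute nothing and never need to be stored or multiplied.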

3D transposed convolution: 120× faster

- Original code: Performs the full convolution, pooling, and activation, even when the inputs or hyperparameters mathematically guarantee all zeros.

- Optimized code: Uses a “mathematical short-circuit”: given `min_value=0`, the output can be set to zero immediately, bypassing all computation and memory allocation. This single insight delivered orders of magnitude more speedup than hardware-level micro-optimizations.
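A short-circuit of this kind can be sketched on a toy pipeline. The exact operator chain in the benchmark task is not given in this article, so the structure below (an expensive stage, then a cap at `min_value`, then a clamp into `[0, max_value]`) is an assumption chosen to show the principle, and `expensive_stage` is a hypothetical stand-in for the convolution and pooling work.

```python
def expensive_stage(x: float) -> float:
    """Stand-in for the costly convolution and pooling work (hypothetical)."""
    return sum(x * k for k in range(1, 101)) / 100.0


def reference(xs, min_value: float, max_value: float):
    """Always run the expensive stage, then cap and clamp the result."""
    out = []
    for x in xs:
        y = expensive_stage(x)
        y = min(y, min_value)            # cap from above at min_value
        y = max(0.0, min(y, max_value))  # clamp into [0, max_value]
        out.append(y)
    return out


def short_circuited(xs, min_value: float, max_value: float):
    """Skip all work when the hyperparameters force an all-zero output."""
    # If min_value <= 0 <= max_value, the cap forces y <= 0 and the clamp
    # then lifts it to exactly 0, so the expensive stage cannot matter.
    if min_value <= 0.0 <= max_value:
        return [0.0] * len(xs)
    return reference(xs, min_value, max_value)


xs = [-3.0, 0.5, 7.0]
assert short_circuited(xs, 0.0, 1.0) == reference(xs, 0.0, 1.0)
print(short_circuited(xs, 0.0, 1.0))  # [0.0, 0.0, 0.0]
```

The guard costs one comparison, while the path it avoids is the entire workload, which is why this class of optimization can dwarf kernel-level tuning.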

Business impact: why this matters

For business leaders

- Direct cost savings: Every 1% speedup in GPU workloads translates into roughly 1% fewer cloud GPU-seconds, lower energy costs, and more model throughput. Here, the AI delivered, on average, more than 200% additional compute from the same hardware investment.

- Faster product cycles: Automated optimization reduces the need for CUDA experts. Teams can unlock performance gains in hours, not months, and focus on features and research velocity instead of low-level tuning.
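The "more than 200% additional compute" figure follows directly from the 3.12× average speedup. A quick sketch of the arithmetic, with `compute_gain` as a hypothetical helper name:

```python
def compute_gain(speedup: float):
    """Return (extra throughput %, GPU-time saved %) for a given speedup factor."""
    extra_throughput = (speedup - 1.0) * 100.0   # more work in the same time
    time_saved = (1.0 - 1.0 / speedup) * 100.0   # less time for the same work
    return extra_throughput, time_saved


extra, saved = compute_gain(3.12)
print(f"{extra:.0f}% more work per GPU-hour, {saved:.0f}% fewer GPU-seconds per job")
# 212% more work per GPU-hour, 68% fewer GPU-seconds per job
```

Note that the two views differ: a 3.12× speedup means about 212% more throughput on the same hardware, but about 68% lower GPU time (and therefore cost) for a fixed workload.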

For AI practitioners

- Verifiable and open source: All 250 optimized CUDA kernels are open source. You can test the speed gains yourself on A100, H100, L40, or 3090 GPUs; no trust required.

- No CUDA black magic required: The process does not rely on secret sauce, proprietary compilers, or human-in-the-loop tuning.

For AI researchers

- Blueprint for domain reasoning: Contrastive-RL offers a new approach to training AI in domains where correctness and performance, not just natural language, matter.

- Reward hacking: The authors dive deep into how the AI discovered subtle exploits and “cheats” (such as asynchronous stream manipulation to fake speedups) and outline robust procedures to detect and prevent such behavior.
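Why asynchronous work can fake a speedup is easiest to see with an analogy. The sketch below uses a Python background thread as a stand-in for work queued on a separate CUDA stream; it is not GPU code and not the authors' detection procedure, and all function names are hypothetical. The general point is that a timer which stops before outstanding work has finished under-reports the runtime, and that waiting for completion before reading the clock is one plausible guard.

```python
import threading
import time


def launch_async(work_seconds: float) -> threading.Thread:
    """Start the 'kernel' in the background and return immediately."""
    t = threading.Thread(target=time.sleep, args=(work_seconds,))
    t.start()
    return t


def time_naive(work_seconds: float) -> float:
    """Flawed harness: stops the clock as soon as the launch returns."""
    start = time.perf_counter()
    handle = launch_async(work_seconds)
    elapsed = time.perf_counter() - start  # work is still running here
    handle.join()                          # cleanup only, not part of the timing
    return elapsed


def time_synchronized(work_seconds: float) -> float:
    """Safer harness: waits for all outstanding work before stopping the clock."""
    start = time.perf_counter()
    handle = launch_async(work_seconds)
    handle.join()
    return time.perf_counter() - start


naive = time_naive(0.05)
honest = time_synchronized(0.05)
print(f"naive: {naive:.4f}s, synchronized: {honest:.4f}s")
```

The naive measurement reports only the launch overhead, so the same work appears many times faster than it really is.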

Technical insights: why Contrastive-RL wins

- Performance feedback is now in-context: Unlike vanilla RL, the AI can learn not only by trial and error, but through reasoned self-critique.

- Self-improvement flywheel: The reflection loop makes the model robust to reward gaming and outperforms both evolutionary approaches (fixed parameters, in-context contrastive learning) and traditional RL (blind policy gradient).

- Generalizes and discovers fundamental principles: The AI can combine, rank, and apply key optimization strategies such as memory coalescing, thread block configuration, operation fusion, shared memory reuse, warp-level reductions, and mathematical equivalence transformations.

Table: Top techniques discovered by CUDA-L1

| Optimization technique | Typical speedup | Example insight |
|---|---|---|
| Memory layout optimization | Consistent boosts | Contiguous memory/storage for cache efficiency |
| Memory access (coalescing, shared) | Moderate to high | Avoids bank conflicts, maximizes bandwidth |
| Operation fusion | High with pipelined ops | Fused multi-op kernels reduce memory reads/writes |
| Mathematical short-circuiting | Extremely high (10-100×) | Detects when computation can be skipped entirely |
| Thread block / parallel configuration | Moderate | Adapts block sizes/shapes to hardware/task |
| Warp-level / branchless reductions | Moderate | Lowers divergence and overhead |
| Register / shared memory optimization | Moderate | Caches frequently used data close to computation |
| Asynchronous execution, minimal synchronization | Varies | Overlaps I/O, enables pipelined computation |
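Operation fusion, one of the techniques in the table, can be illustrated without a GPU. The sketch below is a plain-Python analogy, with hypothetical function names and a made-up scale, bias, and ReLU chain: the unfused version makes three passes and materializes two intermediate lists, much as three separate kernels would each read from and write to global memory, while the fused version touches each element once.

```python
def unfused(xs, scale: float, bias: float):
    """Three separate passes, each writing a full intermediate list."""
    scaled = [x * scale for x in xs]
    shifted = [s + bias for s in scaled]
    return [max(0.0, v) for v in shifted]


def fused(xs, scale: float, bias: float):
    """One pass: each element is read once and written once."""
    return [max(0.0, x * scale + bias) for x in xs]


data = [-2.0, -0.5, 0.0, 1.5]
assert unfused(data, 2.0, 1.0) == fused(data, 2.0, 1.0)
print(fused(data, 2.0, 1.0))  # [0.0, 0.0, 1.0, 4.0]
```

The arithmetic is identical in both versions; what changes is the memory traffic, which is usually the limiting factor for element-wise GPU operations.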

Conclusion: AI is now its own optimization engineer

With CUDA-L1, AI has become its own performance engineer, accelerating research productivity and hardware returns without relying on scarce human expertise. The result is not only higher benchmark scores, but a blueprint for AI systems that teach themselves to exploit the full potential of the hardware they run on.

AI is now building its own flywheel: more efficient, more insightful, and better able to maximize the resources we give it, for science, industry, and beyond.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with audiences.