In this tutorial, we learn how to combine Google Gemini models with the flexibility of pandas. We run both simple and sophisticated analyses on the classic Titanic dataset. By pairing the ChatGoogleGenerativeAI client with LangChain's experimental pandas DataFrame agent, we set up an interactive “agent” that interprets natural-language queries. It inspects the data, computes statistics, uncovers correlations, and generates visual insights, without our writing code by hand for each task. We start with basic exploration steps (such as counting rows or computing survival rates), move on to advanced analyses such as survival rates by demographic segment and fare correlations, and then compare changes across multiple DataFrames. Finally, we build custom scoring and pattern-mining routines to extract new insights.

!pip install langchain_experimental langchain_google_genai pandas

import os
import pandas as pd
import numpy as np
from langchain.agents.agent_types import AgentType
from langchain_experimental.agents.agent_toolkits import create_pandas_dataframe_agent
from langchain_google_genai import ChatGoogleGenerativeAI

# Replace the placeholder with your own Gemini API key.
os.environ["GOOGLE_API_KEY"] = "Use Your Own API Key"

First, we install the required libraries, langchain_experimental, langchain_google_genai, and pandas, using pip to enable the DataFrame agent and the Google Gemini integration. We then import the core modules and set the GOOGLE_API_KEY environment variable, after which we are ready to instantiate a Gemini-powered pandas agent for conversational data analysis.
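Hardcoding a key in a notebook makes it easy to leak. As a minimal sketch, the key could instead be read from the environment and requested interactively only when it is missing. The helper name `ensure_api_key` and its `prompt_fn` parameter are assumptions introduced here for illustration; they are not part of the original tutorial or of any library.

```python
import os
from getpass import getpass


def ensure_api_key(var_name="GOOGLE_API_KEY", prompt_fn=getpass):
    """Return the key from the environment, prompting only if it is missing.

    `ensure_api_key` and `prompt_fn` are hypothetical names for this sketch.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        # getpass hides the typed value; a different prompt_fn can be injected for tests.
        key = prompt_fn(f"Enter {var_name}: ").strip()
        if not key:
            raise ValueError(f"{var_name} is required")
        os.environ[var_name] = key
    return key
```

Calling `ensure_api_key()` once at the top of the notebook would then replace the hardcoded assignment.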

def setup_gemini_agent(df, temperature=0, model="gemini-1.5-flash"):
    # Gemini chat model; temperature=0 keeps answers as deterministic as possible.
    llm = ChatGoogleGenerativeAI(
        model=model,
        temperature=temperature,
        convert_system_message_to_human=True
    )
    # Wrap the model in a pandas agent; allow_dangerous_code lets it run generated Python.
    agent = create_pandas_dataframe_agent(
        llm=llm,
        df=df,
        verbose=True,
        agent_type=AgentType.OPENAI_FUNCTIONS,
        allow_dangerous_code=True
    )
    return agent

This helper function initializes a Gemini-powered LLM client with our chosen model and temperature. It then wraps the client in a LangChain pandas DataFrame agent that can execute natural-language queries against our DataFrame; the allow_dangerous_code flag opts in to running the Python code the model generates. We simply pass in our DataFrame to get back an interactive agent ready for conversational analysis.
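To see why executing generated code is treated as “dangerous”, the toy loop below mimics the idea in a few lines: a model turns a question into a pandas expression, and that expression is evaluated against the DataFrame. This is only an illustration under stated assumptions, not LangChain's implementation; `toy_agent` and `canned_llm` are hypothetical names, and the canned dictionary stands in for a real LLM.

```python
import pandas as pd


def canned_llm(question):
    # Hypothetical stand-in for an LLM: maps known questions to pandas expressions.
    answers = {
        "How many rows?": "df.shape[0]",
        "Survival rate?": "df['Survived'].mean() * 100",
    }
    return answers[question]


def toy_agent(df, question, fake_llm):
    """Ask the 'model' for a pandas expression, then evaluate it against df."""
    code = fake_llm(question)
    # Evaluating model-written code is the risk that allow_dangerous_code acknowledges.
    result = eval(code, {"__builtins__": {}}, {"df": df, "pd": pd})
    return {"input": question, "code": code, "output": result}


sample = pd.DataFrame({"Survived": [1, 0, 0, 1], "Age": [22.0, 38.0, 26.0, 35.0]})
print(toy_agent(sample, "How many rows?", canned_llm)["output"])
```

Because the evaluated string comes from a model rather than from us, it is sensible to run such agents only on data and in environments where arbitrary code execution is acceptable.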

def load_and_explore_data():
    print("Loading Titanic Dataset...")
    # Read the CSV straight from the pandas GitHub repository.
    df = pd.read_csv(
        "https://raw.githubusercontent.com/pandas-dev/pandas/main/doc/data/titanic.csv"
    )
    print(f"Dataset shape: {df.shape}")
    print(f"Columns: {list(df.columns)}")
    return df

This function fetches the Titanic CSV directly from the pandas GitHub repository. It also prints the dataset's dimensions and column names as a quick sanity check, then returns the loaded DataFrame so that we can start our exploratory analysis right away.
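Beyond shape and column names, a sanity check often includes a look at missing values, since they affect later questions about age or cabin. The sketch below shows one possible profile on a tiny hand-made frame; `quick_profile` is a hypothetical helper, and the sample rows are made up rather than taken from the real file.

```python
import numpy as np
import pandas as pd


def quick_profile(df):
    """Summarize dtype, missing count, and missing percentage per column."""
    return pd.DataFrame({
        "dtype": df.dtypes.astype(str),
        "missing": df.isna().sum(),
        "missing_pct": (df.isna().mean() * 100).round(1),
    })


# Made-up rows that only mirror a few Titanic column names.
sample = pd.DataFrame({
    "Survived": [0, 1, 1, 0],
    "Age": [22.0, np.nan, 26.0, 35.0],
    "Cabin": [None, "C85", None, None],
})
profile = quick_profile(sample)
print(profile)
```

The same function could be applied to the DataFrame returned by `load_and_explore_data()`.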

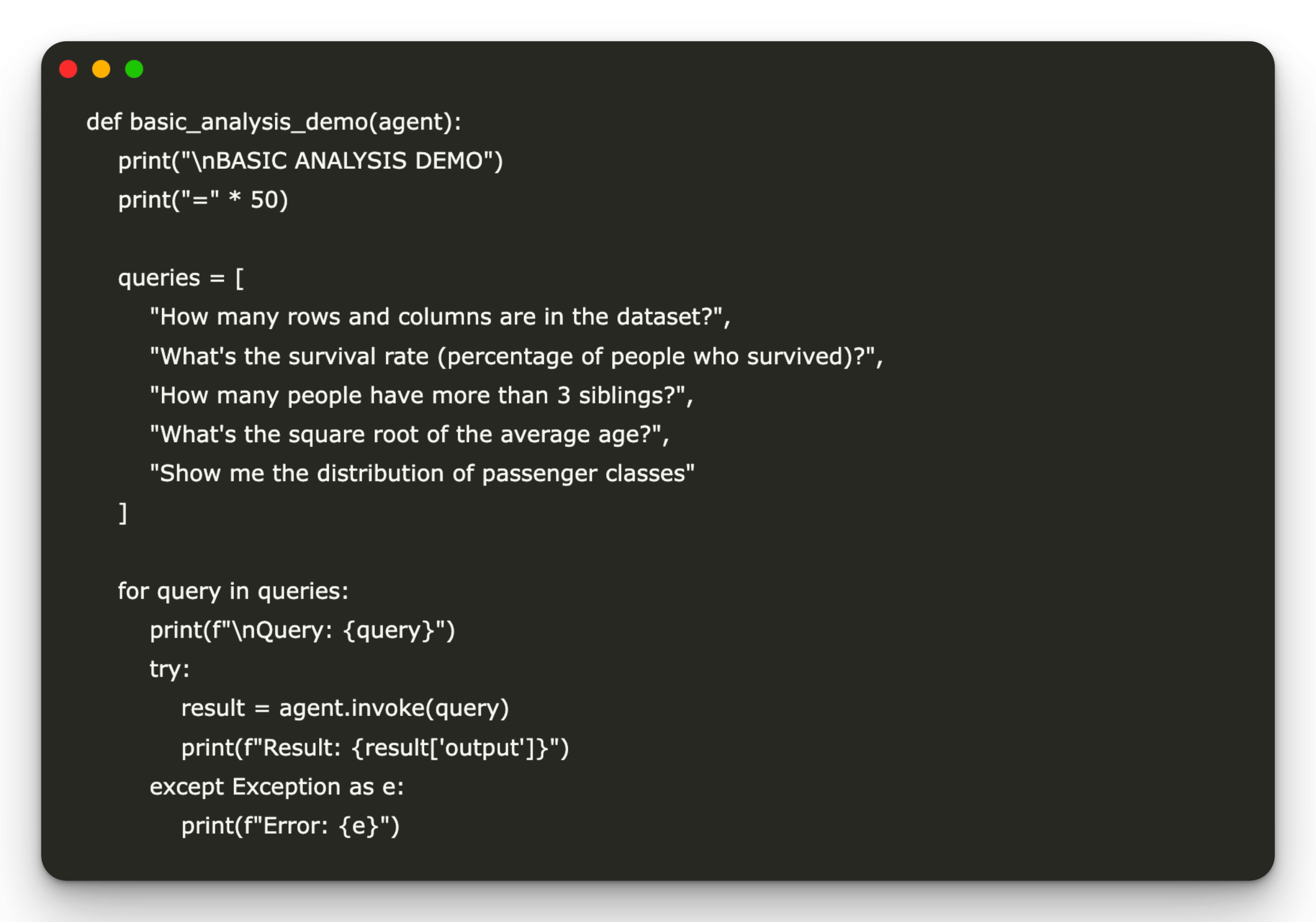

def basic_analysis_demo(agent):
    print("\nBASIC ANALYSIS DEMO")
    print("=" * 50)
    queries = [
        "How many rows and columns are in the dataset?",
        "What's the survival rate (percentage of people who survived)?",
        "How many people have more than 3 siblings?",
        "What's the square root of the average age?",
        "Show me the distribution of passenger classes"
    ]
    for query in queries:
        print(f"\nQuery: {query}")
        try:
            # invoke returns a dict; the agent's answer is under the "output" key.
            result = agent.invoke(query)
            print(f"Result: {result['output']}")
        except Exception as e:
            print(f"Error: {e}")

This demo routine starts a “basic analysis” session by printing a header. It then iterates over a set of common exploratory queries, such as the dataset's dimensions, the survival rate, sibling counts, and the class distribution, and runs each against our Titanic DataFrame agent. For each natural-language prompt, it invokes the agent, captures its output, and prints either the result or an error.
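It can help to know what pandas code would answer these basic questions, so that the agent's replies can be spot-checked. The following sketch computes the same quantities by hand on a small made-up frame; the mapping of “more than 3 siblings” to `SibSp > 3` is an assumption, since that column counts siblings and spouses together.

```python
import math

import pandas as pd

# Made-up rows using the Titanic column names.
sample = pd.DataFrame({
    "Survived": [0, 1, 1, 0, 1],
    "Pclass":   [3, 1, 3, 3, 2],
    "Age":      [16.0, 36.0, 25.0, 9.0, 14.0],
    "SibSp":    [1, 1, 0, 4, 5],
})

n_rows, n_cols = sample.shape                         # rows and columns
survival_pct = sample["Survived"].mean() * 100        # survival rate in percent
many_siblings = int((sample["SibSp"] > 3).sum())      # assumption: SibSp > 3
sqrt_mean_age = math.sqrt(sample["Age"].mean())       # square root of the average age
class_counts = sample["Pclass"].value_counts().sort_index()  # class distribution

print(n_rows, n_cols, survival_pct, many_siblings, round(sqrt_mean_age, 3))
print(class_counts)
```

Running the same expressions on the full dataset gives reference values against which the agent's answers can be compared.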

def advanced_analysis_demo(agent):
    print("\nADVANCED ANALYSIS DEMO")
    print("=" * 50)
    advanced_queries = [
        "What's the correlation between age and fare?",
        "Create a survival analysis by gender and class",
        "What's the median age for each passenger class?",
        "Find passengers with the highest fares and their details",
        "Calculate the survival rate for different age groups (0-18, 18-65, 65+)"
    ]
    for query in advanced_queries:
        print(f"\nQuery: {query}")
        try:
            result = agent.invoke(query)
            print(f"Result: {result['output']}")
        except Exception as e:
            print(f"Error: {e}")

This “advanced analysis” function prints a header, then runs a series of more sophisticated queries. They cover correlations, stratified survival analyses, median statistics, and detailed filtering against our Gemini-powered DataFrame agent. The function loops over each natural-language prompt, captures the agent's responses, and prints the results (or errors). It shows how we can use conversational AI for deeper, segmented insights into our dataset.
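For reference, the advanced prompts correspond roughly to a handful of pandas idioms: `corr`, `groupby`, and `pd.cut`. The sketch below applies them to made-up rows. The bin edges are an assumption: the prompt's groups (0-18, 18-65, 65+) overlap at the boundaries, so left-closed intervals are used here, and the agent may well choose differently.

```python
import pandas as pd

# Made-up rows using the Titanic column names.
sample = pd.DataFrame({
    "Survived": [0, 1, 1, 0, 1, 0],
    "Pclass":   [3, 1, 3, 3, 2, 1],
    "Sex":      ["male", "female", "female", "male", "female", "male"],
    "Age":      [22.0, 38.0, 4.0, 70.0, 14.0, 54.0],
    "Fare":     [7.25, 71.28, 16.70, 7.75, 30.07, 51.86],
})

# Pearson correlation between age and fare.
age_fare_corr = sample["Age"].corr(sample["Fare"])

# Survival rate stratified by gender and class.
by_sex_class = sample.groupby(["Sex", "Pclass"])["Survived"].mean()

# Median age per passenger class.
median_age = sample.groupby("Pclass")["Age"].median()

# Age groups as left-closed bins: [0, 18), [18, 65), [65, inf).
age_group = pd.cut(
    sample["Age"],
    bins=[0, 18, 65, float("inf")],
    right=False,
    labels=["0-18", "18-65", "65+"],
)
by_age_group = sample.groupby(age_group, observed=False)["Survived"].mean()

print(by_sex_class, median_age, by_age_group, sep="\n\n")
```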

def multi_dataframe_demo():
    print("\nMULTI-DATAFRAME DEMO")
    print("=" * 50)
    df = pd.read_csv(
        "https://raw.githubusercontent.com/pandas-dev/pandas/main/doc/data/titanic.csv"
    )
    # Second DataFrame: same data, with missing ages filled by the mean age.
    df_filled = df.copy()
    df_filled["Age"] = df_filled["Age"].fillna(df_filled["Age"].mean())
    # Passing a list gives the agent access to both DataFrames.
    agent = setup_gemini_agent([df, df_filled])
    queries = [
        "How many rows in the age column are different between the two datasets?",
        "Compare the average age in both datasets",
        "What percentage of age values were missing in the original dataset?",
        "Show summary statistics for age in both datasets"
    ]
    for query in queries:
        print(f"\nQuery: {query}")
        try:
            result = agent.invoke(query)
            print(f"Result: {result['output']}")
        except Exception as e:
            print(f"Error: {e}")

This demo illustrates how to run a Gemini-powered agent over multiple DataFrames, in this case the original Titanic data and a copy with missing ages imputed by the mean. We can then ask cross-dataset comparison questions (such as how many rows differ, how the average ages compare, what percentage of values were missing, and side-by-side summary statistics) using simple natural-language prompts.
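The comparison questions above have simple pandas counterparts, shown here on a five-row made-up column. One detail worth noting is that `NaN != NaN` in pandas, so a naive inequality test would also flag rows that are missing in both frames; the mask below guards against that.

```python
import numpy as np
import pandas as pd

# Made-up ages; two of five values are missing.
df = pd.DataFrame({"Age": [20.0, np.nan, 40.0, np.nan, 30.0]})
df_filled = df.copy()
df_filled["Age"] = df_filled["Age"].fillna(df_filled["Age"].mean())

# Rows that differ, ignoring positions where both values are missing.
both_nan = df["Age"].isna() & df_filled["Age"].isna()
changed_rows = int(((df["Age"] != df_filled["Age"]) & ~both_nan).sum())

# Percentage of missing ages in the original frame.
missing_pct = df["Age"].isna().mean() * 100

# Mean imputation keeps the mean but shrinks the spread.
print(changed_rows, missing_pct)
print(df["Age"].mean(), df_filled["Age"].mean())
print(df["Age"].std(), df_filled["Age"].std())
```

The last two lines illustrate a known side effect of mean imputation: the average is unchanged, while the standard deviation decreases.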

def custom_analysis_demo(agent):
    print("\nCUSTOM ANALYSIS DEMO")
    print("=" * 50)
    custom_queries = [
        "Create a risk score for each passenger based on: Age (higher age = higher risk), Gender (male = higher risk), Class (3rd class = higher risk), Family size (alone or large family = higher risk). Then show the top 10 highest risk passengers who survived",
        "Analyze the 'deck' information from the cabin data: Extract deck letter from cabin numbers, Show survival rates by deck, Which deck had the highest survival rate?",
        "Find interesting patterns: Did people with similar names (same surname) tend to survive together? What's the relationship between ticket price and survival? Were there any age groups that had 100% survival rate?"
    ]
    for i, query in enumerate(custom_queries, 1):
        print(f"\nCustom Analysis {i}:")
        # Show only the first 100 characters of each long prompt.
        print(f"Query: {query[:100]}...")
        try:
            result = agent.invoke(query)
            print(f"Result: {result['output']}")
        except Exception as e:
            print(f"Error: {e}")

This routine starts a “custom analysis” session by iterating over three complex, multi-step prompts. They build a passenger risk-scoring model, extract deck letters and evaluate survival rates by deck, and mine patterns based on surnames, ticket prices, and age groups. This shows how readily our Gemini-powered agent handles bespoke, domain-specific investigations with natural-language queries.
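The custom prompts rely on derived features that the agent has to engineer itself. A minimal sketch of three of them follows, on made-up rows: the deck as the first character of `Cabin`, the surname as the text before the comma in `Name`, and family size as `SibSp + Parch + 1`. These definitions are assumptions chosen for illustration; the agent may derive the features differently.

```python
import pandas as pd

# Made-up rows that follow the Titanic column layout.
sample = pd.DataFrame({
    "Name": ["Braund, Mr. Owen Harris", "Cumings, Mrs. John Bradley", "Braund, Miss. Anna"],
    "Cabin": [None, "C85", "E46"],
    "SibSp": [1, 1, 0],
    "Parch": [0, 0, 2],
    "Survived": [0, 1, 1],
})

# Deck letter: first character of the cabin string (stays missing when Cabin is missing).
sample["Deck"] = sample["Cabin"].str[0]

# Surname: everything before the first comma in the name.
sample["Surname"] = sample["Name"].str.split(",").str[0].str.strip()

# Family size: siblings/spouses + parents/children + the passenger.
sample["FamilySize"] = sample["SibSp"] + sample["Parch"] + 1

# Survival rate per deck; rows without a deck are dropped by groupby.
deck_survival = sample.groupby("Deck")["Survived"].mean()
print(sample[["Surname", "Deck", "FamilySize"]])
print(deck_survival)
```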

def main():
    print("Advanced Pandas Agent with Gemini Tutorial")
    print("=" * 60)
    if not os.getenv("GOOGLE_API_KEY"):
        print("Warning: GOOGLE_API_KEY not set!")
        print("Please set your Gemini API key as an environment variable.")
        return
    try:
        df = load_and_explore_data()
        print("\nSetting up Gemini Agent...")
        agent = setup_gemini_agent(df)
        basic_analysis_demo(agent)
        advanced_analysis_demo(agent)
        multi_dataframe_demo()
        custom_analysis_demo(agent)
        print("\nTutorial completed successfully!")
    except Exception as e:
        print(f"Error: {e}")
        print("Make sure you have installed all required packages and set your API key.")

if __name__ == "__main__":
    main()

The main() function serves as the tutorial's entry point. It first verifies that our Gemini API key is set and exits early if it is not. It then loads and explores the Titanic dataset, initializes the conversational pandas agent, and runs the basic, advanced, multi-DataFrame, and custom analysis demos in sequence. These steps are wrapped in a try/except block that catches and reports any error; if none occurs, the function reports successful completion.
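The four demo functions share the same print, invoke, and except loop. As one possible refactoring, that loop could be factored into a single helper. `run_queries` and `EchoAgent` below are hypothetical names introduced for this sketch; `EchoAgent` merely imitates the `invoke` interface so that the example runs without an API key.

```python
def run_queries(agent, queries, title):
    """Print a header, run each query through agent.invoke, and collect the outputs."""
    print(f"\n{title}")
    print("=" * 50)
    outputs = []
    for query in queries:
        print(f"\nQuery: {query}")
        try:
            result = agent.invoke(query)
            outputs.append(result["output"])
            print(f"Result: {result['output']}")
        except Exception as e:
            # Keep going after a failure, recording None for the failed query.
            outputs.append(None)
            print(f"Error: {e}")
    return outputs


class EchoAgent:
    """Hypothetical stand-in for the real agent, so the helper runs offline."""

    def invoke(self, query):
        if not query:
            raise ValueError("empty query")
        return {"input": query, "output": f"echo: {query}"}


results = run_queries(EchoAgent(), ["How many rows?", ""], "HELPER DEMO")
```

Returning the outputs as a list, rather than only printing them, would also make it easier to log or compare the agent's answers later.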

df = pd.read_csv("https://raw.githubusercontent.com/pandas-dev/pandas/main/doc/data/titanic.csv")
agent = setup_gemini_agent(df)

agent.invoke("What factors most strongly predicted survival?")
agent.invoke("Create a detailed survival analysis by port of embarkation")
agent.invoke("Find any interesting anomalies or outliers in the data")

Finally, we load the Titanic data directly, instantiate our Gemini-powered pandas agent, and issue three one-off queries. We identify the key predictors of survival, break down survival by port of embarkation, and look for anomalies or outliers. We do all of this without modifying any of our demo functions.
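To make two of these one-off queries concrete, the sketch below shows a plain pandas version of a survival breakdown by port and a simple outlier check on fares. The 1.5 × IQR rule is one common rule of thumb chosen here as an assumption; the agent is free to pick another method. The rows are made up.

```python
import pandas as pd

# Made-up rows using the Titanic column names.
sample = pd.DataFrame({
    "Embarked": ["S", "C", "S", "Q", "C", "S"],
    "Survived": [0, 1, 1, 0, 1, 0],
    "Fare":     [7.25, 71.28, 8.05, 7.75, 512.33, 13.00],
})

# Passenger count and survival rate per port of embarkation.
by_port = sample.groupby("Embarked")["Survived"].agg(["count", "mean"])

# Flag fares above Q3 + 1.5 * IQR as high outliers.
q1, q3 = sample["Fare"].quantile([0.25, 0.75])
upper = q3 + 1.5 * (q3 - q1)
outliers = sample[sample["Fare"] > upper]

print(by_port)
print(outliers)
```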

In conclusion, combining pandas with Gemini via a LangChain DataFrame agent turns data exploration from writing boilerplate code into issuing clear natural-language queries. Whether we are computing summary statistics, building custom risk scores, comparing multiple DataFrames, or drilling into nuanced survival analyses, the shift is evident. With only a few lines of setup, we get an interactive analysis assistant that can adapt to new questions on the fly, surface hidden patterns, and speed up our workflow.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.