In this tutorial, we rely hard on AI setThe growing ecosystem to show how fast we can transform the unstructured text into an answer service to questions that quote its sources. We are going to scrape a handful of live web pages, slice them into coherent pieces and nourish these pieces with the integration model together of RetherComputer / M2-Bert-80m-8k-Retrieval. These vectors land in a Faiss index for the search for similarities in milliseconds, after which a light model warmed the model that remains in the ground in the recovered passages. Because together, I managed the integrations and discuss behind a single API key, we avoid juggling several suppliers, quotas or SDK dialects.

!pip -q install --upgrade langchain-core langchain-community langchain-together

faiss-cpu tiktoken beautifulsoup4 html2text

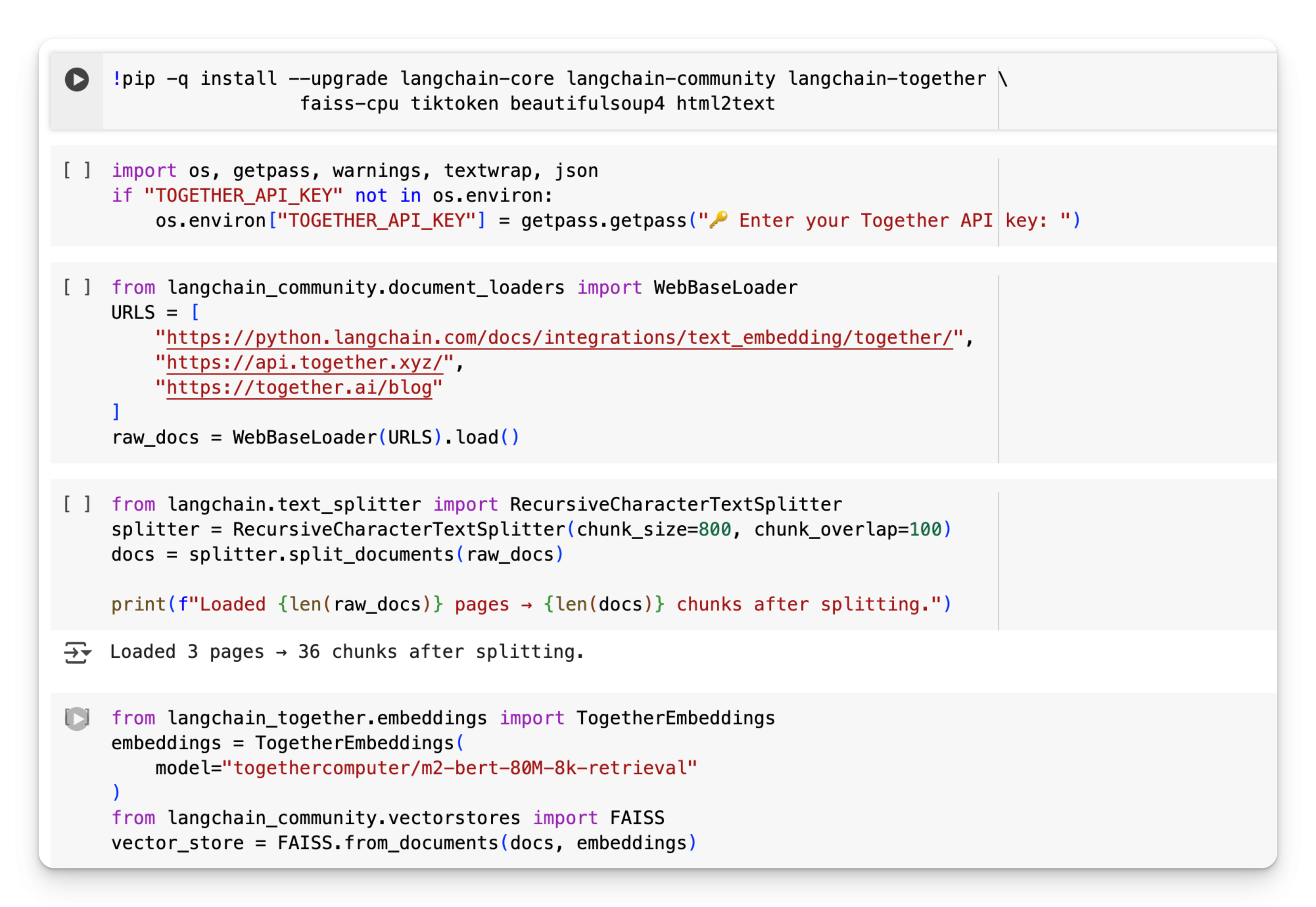

This silent command (-q) pip improves and installs the whole colaab CLOTH needs. He draws the Langchain Core libraries as well as the integration together of the AI, made for the search for a vector, the handling of tokens with Tiktoken and the light HTML analysis via BeautifulSoup4 and HTML2Text, ensuring that the laptop is running from start to finish without additional configuration.

import os, getpass, warnings, textwrap, json

if "TOGETHER_API_KEY" not in os.environ:

os.environ("TOGETHER_API_KEY") = getpass.getpass("🔑 Enter your Together API key: ")We check if the environment variable Ensemble_Api_Key is already defined; Otherwise, he invites us safely to the key with Getpass and stores it in OS. Environnon. The rest of the notebook can call on the AI API without hard coding secrets or expose them in raw text by capturing identification information once in execution.

from langchain_community.document_loaders import WebBaseLoader

URLS = (

"https://python.langchain.com/docs/integrations/text_embedding/together/",

"https://api.together.xyz/",

"https://together.ai/blog"

)

raw_docs = WebBaseLoader(URLS).load()

Webbaseloader recovers each URL, strips Langchain document objects, and returns metadata on the Clean Plus page. By passing a list of links related to together, we immediately collect the live documentation and the blog content which will later be threaded and integrated for semantic research.

from langchain.text_splitter import RecursiveCharacterTextSplitter

splitter = RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=100)

docs = splitter.split_documents(raw_docs)

print(f"Loaded {len(raw_docs)} pages → {len(docs)} chunks after splitting.")RecursivecharatteTextSTSPLITTER SLOW Each page recovered in segments of 800 characteristics with an overlap of 100 characters so that the contextual indices are not lost to the limits of the pieces. The resulting list documents hold these langchain document objects the size of a bite, and the printing shows how many pieces have been produced from the original pages, essential preparation for high quality integration.

from langchain_together.embeddings import TogetherEmbeddings

embeddings = TogetherEmbeddings(

model="togethercomputer/m2-bert-80M-8k-retrieval"

)

from langchain_community.vectorstores import FAISS

vector_store = FAISS.from_documents(docs, embeddings)

Here, we instantly instantiated the M2-bert's recovery model of 80 m-parameters from AI as Embedder Langchain, then feed each piece of text while Faiss.from_Documents builds a vector index in memory. The resulting vector store supports Cosinus research at the millisecond, transforming our scratched pages into a semantic database available.

from langchain_together.chat_models import ChatTogether

llm = ChatTogether(

model="mistralai/Mistral-7B-Instruct-v0.3",

temperature=0.2,

max_tokens=512,

)

Chattogethethe enropes a model of Tuned Horités cat on AI pecippe, Mistral-7b-Instruct-V0.3 to be used like any other Langchain LLM. A low temperature of 0.2 maintains the responses anchored and reproducible, while Max_Tokens = 512 gives way to detailed and multi-paragraph responses without leakage cost.

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=vector_store.as_retriever(search_kwargs={"k": 4}),

return_source_documents=True,

)

Retrievalqa covers the parts together: it takes our retriever Faish (sending the 4 similar pieces) and feeds these extracts in the LLM using the simple “trick” prompt model. Return_source_documents adjustment = True means that each answer will come back with the exact passages on which it was based, giving us instantly and ready for quotes.

QUESTION = "How do I use TogetherEmbeddings inside LangChain, and what model name should I pass?"

result = qa_chain(QUESTION)

print("n🤖 Answer:n", textwrap.fill(result('result'), 100))

print("n📄 Sources:")

for doc in result('source_documents'):

print(" •", doc.metadata('source'))Finally, we send a natural language request via QA_Chain, which recovers the four most relevant pieces, feeds them with the downward model and returns a concise response. He then prints the formatted answer, followed by a source URL list, giving us both the synthesized explanation and the transparent quotes in a single photo.

In conclusion, in about fifty lines of code, we built a complete cloth buckle supplied from start to finish per AI set: ingest, integrate, store, recover and converse. The approach is deliberately modular, exchange for chroma, exchange the integration of 80 m parameter for a larger multilingual model of toget which remains constant, it is the convenience of a unified AI backend together: fast and affordable incorporations, cat models set for the following instruction and a generous free level which makes the experimentation painless. Use this model for bootstrap an internal knowledge assistant, a customer documentation bot or a personal research assistant.

Discover the Colab notebook here. Also, don't hesitate to follow us Twitter And don't forget to join our 90K + ML Subdreddit.

Asif Razzaq is the CEO of Marktechpost Media Inc .. as a visionary entrepreneur and engineer, AIF undertakes to exploit the potential of artificial intelligence for social good. His most recent company is the launch of an artificial intelligence media platform, Marktechpost, which stands out from its in-depth coverage of automatic learning and in-depth learning news which are both technically solid and easily understandable by a large audience. The platform has more than 2 million monthly views, illustrating its popularity with the public.