In this tutorial, we walk you through setting up a fully functional chatbot in Google Colab that uses Anthropic's Claude model alongside Mem0 for seamless memory recall. Combining LangGraph's intuitive state-machine orchestration with Mem0's vector-based memory store lets our assistant remember past conversations, retrieve relevant details on demand, and maintain natural continuity across sessions. Whether you are building support bots, virtual assistants, or interactive demos, this guide gives you a solid foundation for memory-driven AI experiences.

!pip install -qU langgraph mem0ai langchain langchain-anthropic anthropic

First, we install and upgrade LangGraph, the Mem0 AI client, LangChain with its Anthropic connector, and the core Anthropic SDK, so that we have the latest libraries needed to build a memory-driven Claude chatbot in Google Colab. Running this cell up front avoids dependency problems and streamlines the setup process.

import os
from typing import Annotated, TypedDict, List
from langgraph.graph import StateGraph, START
from langgraph.graph.message import add_messages
from langchain_core.messages import SystemMessage, HumanMessage, AIMessage
from langchain_anthropic import ChatAnthropic
from mem0 import MemoryClient

We bring together the main building blocks of our Colab chatbot: the operating-system interface for API keys, Python's typed dictionaries and annotation utilities for defining the conversational state, LangGraph's graph and message utilities for orchestrating the chat flow, LangChain's message classes for building prompts, the ChatAnthropic wrapper for calling Claude, and the Mem0 client for persistent memory.

os.environ["ANTHROPIC_API_KEY"] = "Use Your Own API Key"
MEM0_API_KEY = "Use Your Own API Key"

We place our Anthropic and Mem0 credentials in an environment variable and a local variable so that the ChatAnthropic client and the Mem0 memory store can authenticate correctly. Keeping the API keys in one place separates the secrets from the rest of the code while giving the notebook access to both the Claude model and the persistent memory layer. Replace the placeholder strings with your own keys, and avoid leaving real keys in a notebook you share.
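
Placeholder strings are convenient for a demo, but one common alternative is to read each key from the environment and fall back to a hidden prompt. The following is a minimal sketch under that assumption; `get_api_key` is a hypothetical helper name, not part of any library used here, and its `prompt` parameter exists only so the function can be exercised without a terminal.

```python
import os
from getpass import getpass
from typing import Callable


def get_api_key(name: str, prompt: Callable[[str], str] = getpass) -> str:
    """Return the key stored in the environment variable `name`,
    asking for it (without echo) only when it is not already set."""
    value = os.environ.get(name, "").strip()
    if not value:
        value = prompt(f"Enter {name}: ").strip()
        if not value:
            raise ValueError(f"{name} must not be empty")
        # Cache the value for libraries that read it from the environment
        os.environ[name] = value
    return value
```

In the notebook this could be used as `MEM0_API_KEY = get_api_key("MEM0_API_KEY")`, which keeps the key out of the saved cell contents.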

llm = ChatAnthropic(
    model="claude-3-5-haiku-latest",
    temperature=0.0,
    max_tokens=1024,
    anthropic_api_key=os.environ["ANTHROPIC_API_KEY"]
)

mem0 = MemoryClient(api_key=MEM0_API_KEY)

We initialize the core of our conversational AI. First, we create a ChatAnthropic instance configured to talk to Claude 3.5 Haiku at temperature zero, for more deterministic responses, with up to 1024 tokens per reply, using the Anthropic key stored in the environment for authentication. Then we create a Mem0 MemoryClient with our Mem0 API key, giving the bot a persistent, vector-based memory store for saving and retrieving past interactions.
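
To experiment with the rest of the flow without calling the hosted service, a toy stand-in with the same `add`/`search` call shape can help. This is only a sketch: `ToyMemoryStore` and its `limit` parameter are hypothetical, it ranks by plain word overlap rather than vector similarity, and it is not how Mem0 works internally.

```python
from typing import Dict, List


class ToyMemoryStore:
    """In-memory stand-in exposing add/search; NOT Mem0's implementation."""

    def __init__(self) -> None:
        # Maps each user ID to the list of texts stored for that user
        self._items: Dict[str, List[str]] = {}

    def add(self, text: str, user_id: str) -> None:
        self._items.setdefault(user_id, []).append(text)

    def search(self, query: str, user_id: str, limit: int = 3) -> List[dict]:
        # Score each stored text by how many query words it shares
        query_words = set(query.lower().split())
        scored = []
        for text in self._items.get(user_id, []):
            overlap = len(query_words & set(text.lower().split()))
            if overlap:
                scored.append((overlap, text))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Return dicts with a "memory" key, the shape the chatbot code reads
        return [{"memory": text} for _, text in scored[:limit]]
```

Memories are scoped by `user_id`, so a query for one user never returns text stored for another.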

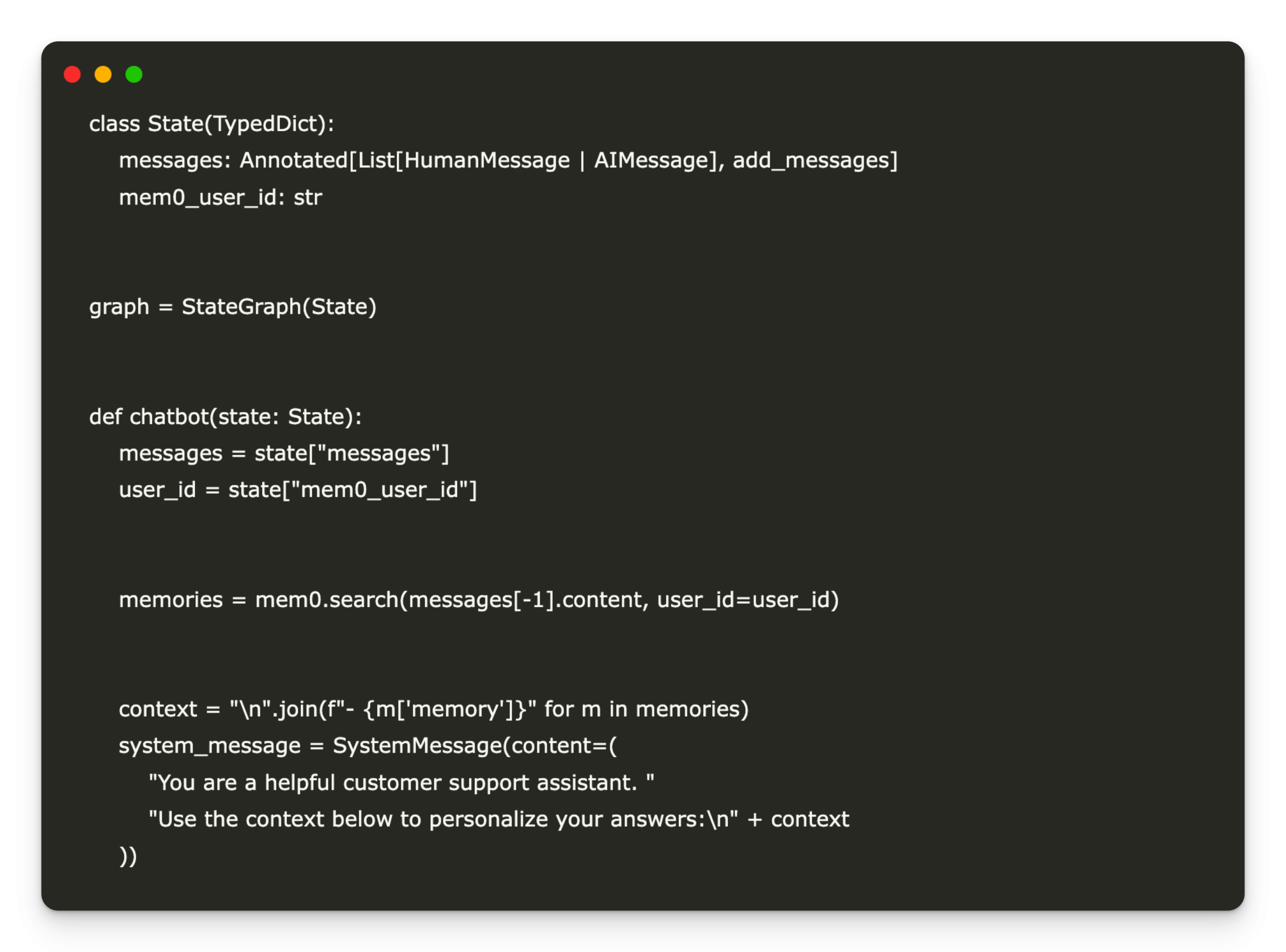

class State(TypedDict):
    # Conversation history; add_messages appends new messages instead of overwriting
    messages: Annotated[List[HumanMessage | AIMessage], add_messages]
    # Identifier used to scope memories in Mem0
    mem0_user_id: str

graph = StateGraph(State)

def chatbot(state: State):
    messages = state["messages"]
    user_id = state["mem0_user_id"]

    # Retrieve memories relevant to the latest user message
    memories = mem0.search(messages[-1].content, user_id=user_id)
    context = "\n".join(f"- {m['memory']}" for m in memories)

    system_message = SystemMessage(content=(
        "You are a helpful customer support assistant. "
        "Use the context below to personalize your answers:\n" + context
    ))

    # Prepend the system prompt and call Claude
    full_msgs = [system_message] + messages
    ai_resp: AIMessage = llm.invoke(full_msgs)

    # Store the new exchange so later turns can recall it
    mem0.add(
        f"User: {messages[-1].content}\nAssistant: {ai_resp.content}",
        user_id=user_id
    )

    return {"messages": [ai_resp]}

We define the conversational state schema and wrap it in a LangGraph state machine: the State TypedDict tracks the message history and a Mem0 user ID, and graph = StateGraph(State) sets up the flow controller. Inside chatbot, the most recent user message is used to query Mem0 for relevant memories, a system prompt enriched with that context is built, Claude generates a reply, and the new exchange is saved to Mem0 before the assistant's response is returned.
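
The prompt-building step can be isolated into small pure functions, which makes it easy to check without any API call. The sketch below assumes that a search result is either a list of dicts with a "memory" key, as the chatbot code reads it, or a dict wrapping that list under "results"; the second shape is an assumption about some client versions or settings, and both function names are hypothetical.

```python
from typing import Any, List


def extract_memories(search_result: Any) -> List[str]:
    """Pull memory strings out of a result that is either a list of dicts
    or a dict holding that list under "results" (assumed shape)."""
    if isinstance(search_result, dict):
        items = search_result.get("results", [])
    else:
        items = search_result or []
    # Skip entries that are not dicts or have no usable "memory" text
    return [
        item["memory"]
        for item in items
        if isinstance(item, dict) and item.get("memory")
    ]


def build_system_prompt(search_result: Any) -> str:
    """Build the system prompt text, with a fallback line when nothing was found."""
    memories = extract_memories(search_result)
    context = "\n".join(f"- {m}" for m in memories) or "- (no stored memories yet)"
    return (
        "You are a helpful customer support assistant. "
        "Use the context below to personalize your answers:\n" + context
    )
```

The fallback line avoids sending the model an empty context section on a user's first message.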

graph.add_node("chatbot", chatbot)
graph.add_edge(START, "chatbot")
graph.add_edge("chatbot", "chatbot")

compiled_graph = graph.compile()

We wire our chatbot function into LangGraph's execution flow by registering it as a node named "chatbot", then connecting the built-in START marker to that node so the conversation begins there, and finally adding a self-loop edge so that the flow re-enters the same logic. Calling graph.compile() then turns this node-and-edge configuration into a runnable graph object that manages each turn of our chat session.
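
To see what a self-loop edge implies, here is a deliberately simplified simulation of following edges between nodes. It is not LangGraph's scheduler: `run_graph`, the local `START` string, and `max_steps` are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, Dict, List

START = "__start__"  # local stand-in, not LangGraph's START constant


def run_graph(
    nodes: Dict[str, Callable[[List[str]], str]],
    edges: Dict[str, str],
    state: List[str],
    max_steps: int = 5,
) -> List[str]:
    """Follow edges from START, appending each node's output to `state`.
    Stops when a node has no outgoing edge or after `max_steps` node runs."""
    current = edges.get(START)
    steps = 0
    while current is not None and steps < max_steps:
        state.append(nodes[current](state))
        current = edges.get(current)
        steps += 1
    return state
```

With the edge `"chatbot" -> "chatbot"` the node keeps running until the step limit is reached, whereas without it the node runs once. In this toy model, that is why a caller consuming a self-looping graph needs its own stopping condition.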

def run_conversation(user_input: str, mem0_user_id: str):
    config = {"configurable": {"thread_id": mem0_user_id}}
    state = {"messages": [HumanMessage(content=user_input)], "mem0_user_id": mem0_user_id}

    # Stream graph events and stop after the first assistant reply
    for event in compiled_graph.stream(state, config):
        for node_output in event.values():
            if node_output.get("messages"):
                print("Assistant:", node_output["messages"][-1].content)
                return

if __name__ == "__main__":
    print("Welcome! (type 'exit' to quit)")
    mem0_user_id = "customer_123"
    while True:
        user_in = input("You: ")
        if user_in.lower() in ("exit", "quit", "bye"):
            print("Assistant: Goodbye!")
            break
        run_conversation(user_in, mem0_user_id)

We tie everything together by defining run_conversation, which packages our user input into the LangGraph state, streams it through the compiled graph to invoke the chatbot node, and prints Claude's reply. The function returns as soon as the first reply is printed, because the chatbot node's self-loop edge would otherwise keep re-invoking it. The __main__ guard then launches a simple REPL loop that prompts us to type messages, routes them through our memory-enabled graph, and exits gracefully when we enter "exit", "quit", or "bye".
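
The loop logic can also be written so that it runs over a scripted list of inputs, which is handy for checking the exit handling without typing into a prompt. This is a sketch under assumptions: `chat_loop` and `respond` are hypothetical names, `respond` stands in for the call into the compiled graph, and the extra `strip()` is an addition not present in the loop above.

```python
from typing import Callable, Iterable, List

EXIT_WORDS = ("exit", "quit", "bye")


def chat_loop(inputs: Iterable[str], respond: Callable[[str], str]) -> List[str]:
    """Feed each input to `respond` until an exit word appears and
    return the lines the REPL would have printed."""
    transcript: List[str] = []
    for user_in in inputs:
        # Case-insensitive exit check, ignoring surrounding whitespace
        if user_in.strip().lower() in EXIT_WORDS:
            transcript.append("Assistant: Goodbye!")
            break
        transcript.append(f"Assistant: {respond(user_in)}")
    return transcript
```

For example, `chat_loop(["hello", "quit"], respond=str.upper)` processes one message and then stops at the exit word.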

In conclusion, we have assembled a conversational AI pipeline that combines Anthropic's Claude model with Mem0's persistent memory capabilities, all orchestrated via LangGraph in Google Colab. This architecture allows our bot to recall user-specific details, adapt its answers over time, and provide personalized support. From here, consider experimenting with richer memory strategies, refining Claude's prompts, or integrating additional tools into your graph.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.