Vision language models (VLMs) accept both text inputs and images. Image resolution is crucial to VLM performance when processing data rich in text and charts, but increasing it creates significant challenges. First, pretrained vision encoders often struggle with high-resolution images because of inefficient pretraining requirements. Running inference on high-resolution images increases computational cost and latency during visual token generation, whether through processing a single high-resolution image or through tiling strategies that use several lower-resolution tiles. Second, high-resolution images produce more tokens, which increases the LLM prefilling time and the time-to-first-token (TTFT), which is the sum of the vision encoder latency and the LLM prefilling time.
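The TTFT definition above can be sketched in a few lines of Python. This is only an illustration of the sum described in the text: the class name `TTFTBreakdown`, the helper `speedup`, and all millisecond values are hypothetical and are not measurements from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TTFTBreakdown:
    """Time-to-first-token split into its two components (milliseconds)."""

    encoder_ms: float  # vision encoder latency
    prefill_ms: float  # LLM prefilling time over visual and text tokens

    @property
    def ttft_ms(self) -> float:
        # TTFT = vision encoder latency + LLM prefilling time
        return self.encoder_ms + self.prefill_ms


def speedup(baseline: TTFTBreakdown, candidate: TTFTBreakdown) -> float:
    """How many times faster the candidate reaches the first token."""
    return baseline.ttft_ms / candidate.ttft_ms


# Hypothetical numbers, chosen only to illustrate the arithmetic.
baseline = TTFTBreakdown(encoder_ms=120.0, prefill_ms=280.0)
candidate = TTFTBreakdown(encoder_ms=40.0, prefill_ms=60.0)

print(baseline.ttft_ms, candidate.ttft_ms, speedup(baseline, candidate))
```

Splitting the total this way makes it visible that a TTFT improvement can come from a faster encoder, from fewer visual tokens to prefill, or from both.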

Large multimodal models such as Frozen and Florence used cross-attention to combine image and text embeddings within the intermediate LLM layers. Auto-regressive architectures such as LLaVA, mPLUG-Owl, MiniGPT-4, and Cambrian-1 are effective. For efficient image encoding, CLIP-pretrained vision transformers remain widely adopted, with variants such as SigLIP, EVA-CLIP, InternViT, and DFNCLIP. Methods such as LLaVA-PruMerge and Matryoshka-based token sampling attempt dynamic token pruning, while hierarchical backbones such as ConvNeXT and FastViT reduce the token count through progressive downsampling. Recently, ConvLLaVA was introduced, which uses a purely convolutional vision encoder to encode images for a VLM.

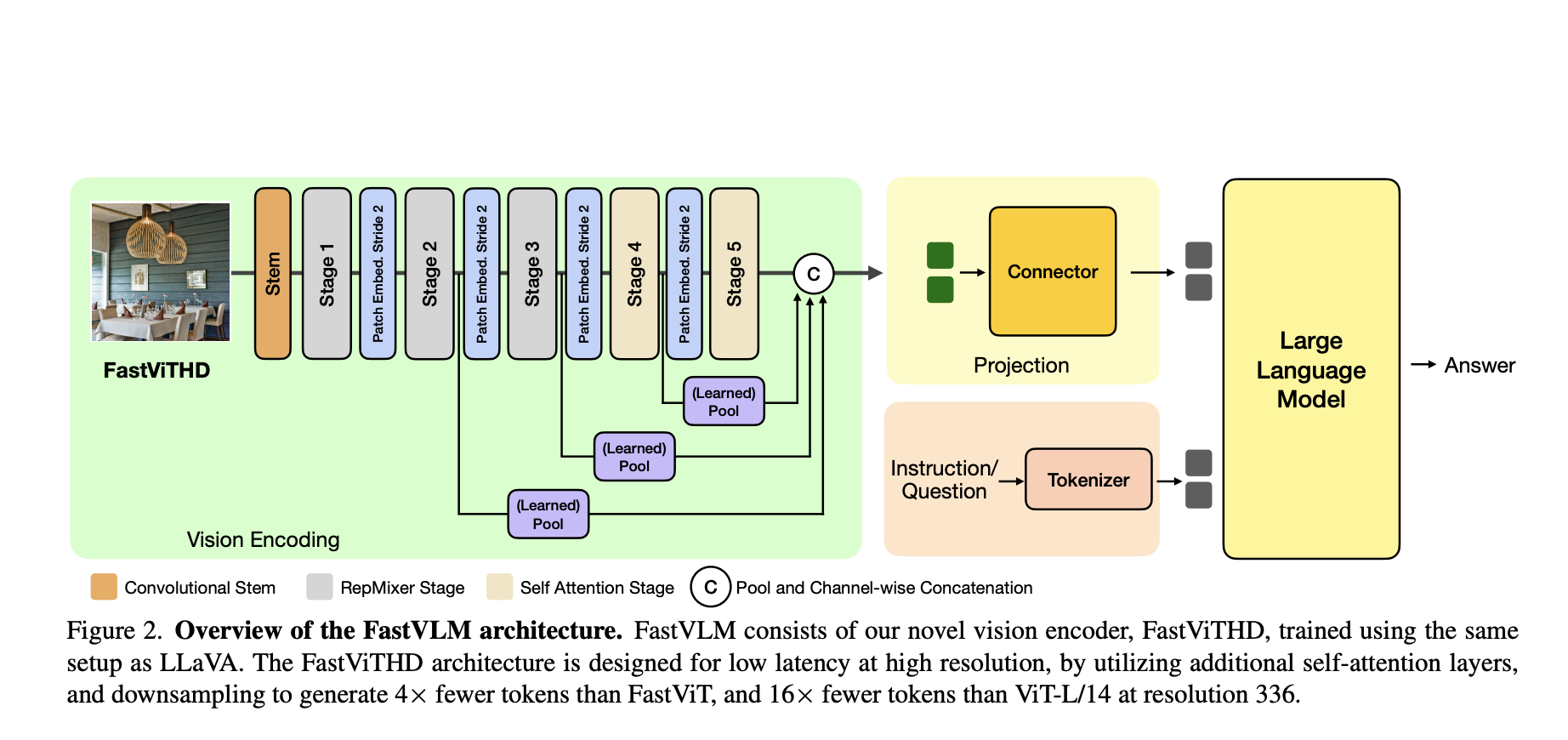

Researchers from Apple have proposed FastVLM, a model that achieves an optimized trade-off between resolution, latency, and accuracy by analyzing how image quality, processing time, number of tokens, and LLM size affect one another. It uses FastViTHD, a hybrid vision encoder designed to output fewer tokens and reduce encoding time for high-resolution images. FastVLM achieves an optimal balance between visual token count and image resolution solely by scaling the input image. It shows a 3.2 times improvement in TTFT in the LLaVA-1.5 setup and achieves higher performance on key benchmarks than LLaVA-OneVision at its maximum resolution while using the same 0.5B LLM. Against that baseline, it delivers 85 times faster TTFT while using a vision encoder that is 3.4 times smaller.

All FastVLM models are trained on a single node with 8 NVIDIA H100-80GB GPUs, where the VLM training stage is fast, taking about 30 minutes with a Qwen2-7B decoder. In addition, FastViTHD improves on the base FastViT architecture by introducing an additional stage with a downsampling layer. This ensures that self-attention operates on tensors downsampled by a factor of 32 rather than 16, reducing image encoding latency while generating 4 times fewer tokens for the LLM decoder. The FastViTHD architecture contains five stages: the first three stages use RepMixer blocks for efficient processing, while the last two stages use multi-headed self-attention blocks, creating an optimal balance between computational efficiency and high-resolution image understanding.
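The "4 times fewer tokens" figure follows from simple grid arithmetic: doubling the downsampling factor halves each side of the feature map and so quarters the number of spatial positions. The sketch below assumes one token per position on a regular grid; the function name `visual_tokens` and the example resolutions are illustrative choices, and the block does not claim to reproduce the exact output stride of FastViTHD.

```python
def visual_tokens(height: int, width: int, downsample: int) -> int:
    """Number of grid positions after downsampling an image by `downsample`.

    Assumes one token per spatial position and that the image size is
    divisible by the downsampling factor.
    """
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (height // downsample) * (width // downsample)


# Illustrative square inputs; the resolutions are assumptions for the example.
for side in (512, 1024):
    tokens_16 = visual_tokens(side, side, 16)
    tokens_32 = visual_tokens(side, side, 32)
    print(side, tokens_16, tokens_32, tokens_16 // tokens_32)
```

Because self-attention cost grows with the number of positions it attends over, running it on the factor-32 grid instead of the factor-16 grid shrinks both the attention workload in the encoder and the token sequence handed to the LLM.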

Compared with ConvLLaVA using the same LLM and similar training data, FastVLM achieves 8.4% better performance on TextVQA and a 12.5% improvement on DocVQA while running 22% faster. The performance advantage grows at higher resolutions, where FastVLM maintains 2× faster processing speeds than ConvLLaVA across various benchmarks. FastVLM matches or surpasses MM1 performance across diverse benchmarks by using intermediate pretraining with 15M samples for resolution scaling, while generating 5 times fewer visual tokens. Furthermore, FastVLM not only outperforms Cambrian-1 but also runs 7.9 times faster. With scaled-up instruction tuning, it delivers better results while using 2.3 times fewer visual tokens.

In conclusion, the researchers introduced FastVLM, an advance in VLMs that uses the FastViTHD vision backbone for efficient high-resolution image encoding. The hybrid architecture, pretrained on reinforced image-text data, reduces visual token output with minimal loss of accuracy compared with existing approaches. FastVLM achieves competitive performance across VLM benchmarks while delivering notable efficiency improvements in both TTFT and the parameter count of the vision backbone. Rigorous benchmarking on M1 MacBook Pro hardware shows that FastVLM offers a state-of-the-art resolution-latency-accuracy trade-off that is superior to current methods.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. As a technology enthusiast, he delves into the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible way.