The landscape of AI foundation models evolves quickly, but few releases in 2025 were as significant as the arrival of Z.ai's GLM-4.5 series: GLM-4.5 and its lighter sibling, GLM-4.5-Air. Unveiled by Zhipu AI, these models set remarkably high standards for unified agentic capabilities and open access. They aim to bridge reasoning, coding, and intelligent agents, and to do so at both massive and manageable scales.

Architecture and model parameters

| Model | Total parameters | Active parameters | Notes |
|---|---|---|---|
| GLM-4.5 | 355B | 32B | Among the largest open-weight models; flagship benchmark performance |
| GLM-4.5-Air | 106B | 12B | Compact and efficient; targets broader hardware compatibility |

GLM-4.5 is built on a Mixture-of-Experts (MoE) architecture with 355 billion total parameters, of which 32 billion are active for any given token. The model is designed for top-end performance, targeting demanding reasoning and agentic applications. GLM-4.5-Air, with 106B total and 12B active parameters, offers similar capabilities with a considerably smaller hardware and compute footprint.
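To make the total-versus-active distinction concrete, here is a minimal sketch of generic softmax top-k expert routing in pure Python. It is not GLM-4.5's actual router: the number of experts, the value of k, the gating function, and the toy experts are all illustrative assumptions. The point is that every expert's weights exist, but only the k selected experts do any work for a given token.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a short list of scores
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, router_weights, experts, k=2):
    """Route one token vector to its top-k experts and mix their outputs.

    token: list[float] hidden vector
    router_weights: one router vector per expert
    experts: list of callables mapping a vector to a vector
    """
    # Router score per expert = dot(token, that expert's router vector)
    logits = [sum(t * w for t, w in zip(token, wv)) for wv in router_weights]
    # Keep only the k highest-scoring experts
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the chosen experts only
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)  # only these k experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


# Hypothetical toy setup: 8 experts, expert i simply scales its input by i + 1
EXPERTS = [lambda x, s=s: [s * v for v in x] for s in range(1, 9)]
ROUTER = [[float(i), 0.0] for i in range(8)]

out, chosen = moe_forward([1.0, 0.0], ROUTER, EXPERTS, k=2)
```

By the same logic, roughly 32 of GLM-4.5's 355 billion parameters (about 9%) and 12 of GLM-4.5-Air's 106 billion (about 11%) take part in each token's computation, which is why per-token compute tracks the active count while memory tracks the total.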

Hybrid reasoning: two modes in one framework

Both models introduce a hybrid reasoning approach:

- Thinking mode: Enables complex step-by-step reasoning, tool use, multi-turn planning, and autonomous agent tasks.

- Non-thinking mode: Optimized for instant, stateless responses, which makes the models versatile for conversational and fast-response use cases.

This dual-mode design serves both sophisticated cognitive workflows and low-latency interactive needs within a single model, empowering next-generation AI agents.
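Switching between the two modes is, in practice, a per-request choice. The sketch below builds (without sending) an OpenAI-style chat-completions request in which a `thinking` field selects the mode. The field name and its values, the endpoint URL, and the model names are assumptions for illustration; consult the Z.ai API reference or your inference server's documentation for the exact switch.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, thinking, api_key="YOUR_API_KEY"):
    """Build (but do not send) an OpenAI-style chat-completions request.

    ASSUMPTION: the mode switch is expressed as
    {"thinking": {"type": "enabled" | "disabled"}}; verify against the real API.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Hypothetical toggle: thinking mode for deep reasoning, off for fast replies
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder endpoint and model names (e.g. a locally hosted server)
fast = build_chat_request("http://localhost:8000/v1", "glm-4.5-air", "Hi!", thinking=False)
deep = build_chat_request("http://localhost:8000/v1", "glm-4.5",
                          "Plan a three-step data pipeline.", thinking=True)
# urllib.request.urlopen(deep) would actually send the request
```

Keeping the mode as a request-level flag, rather than choosing between two deployments, mirrors the single-model, dual-mode design described above.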

Performance benchmarks

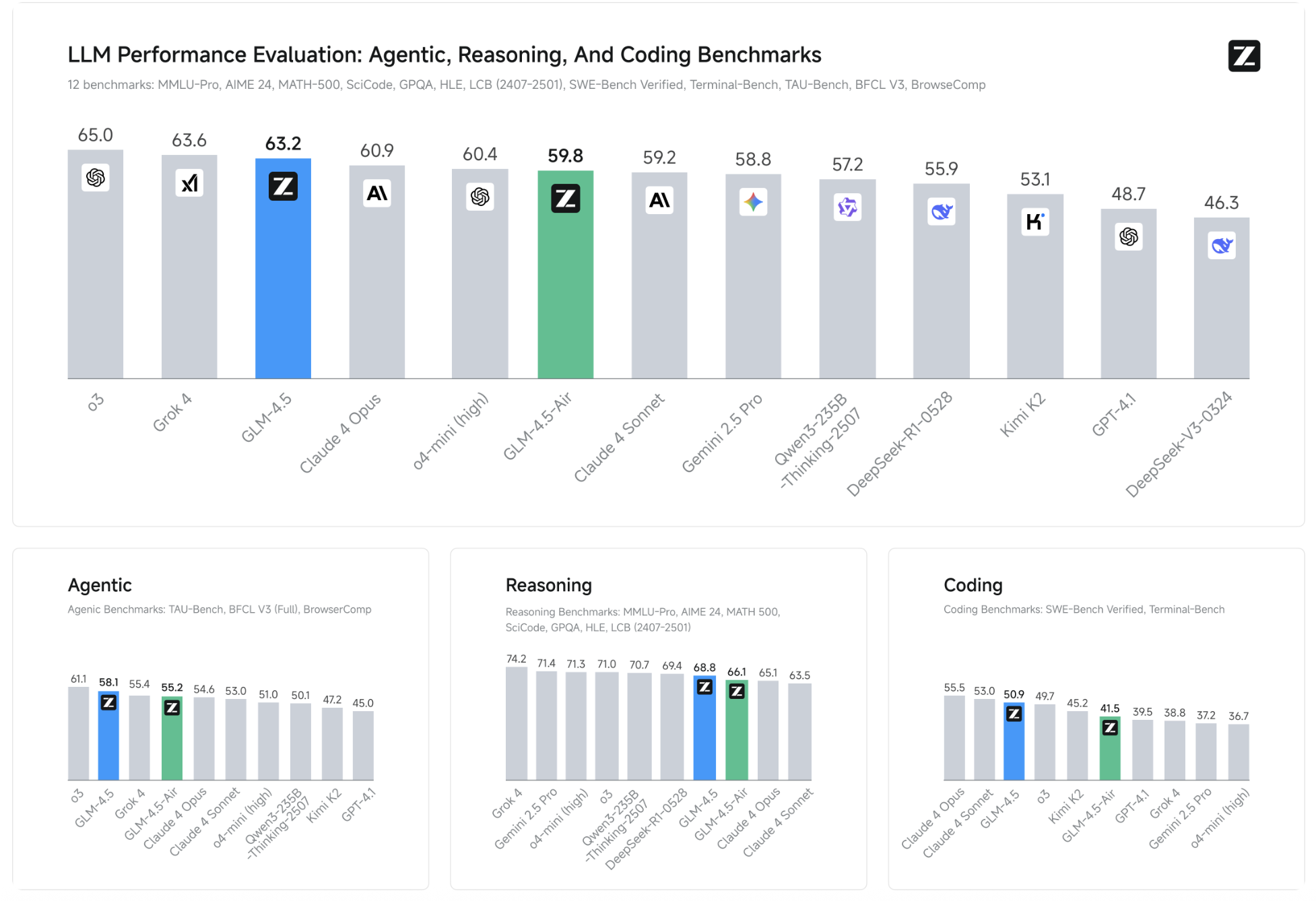

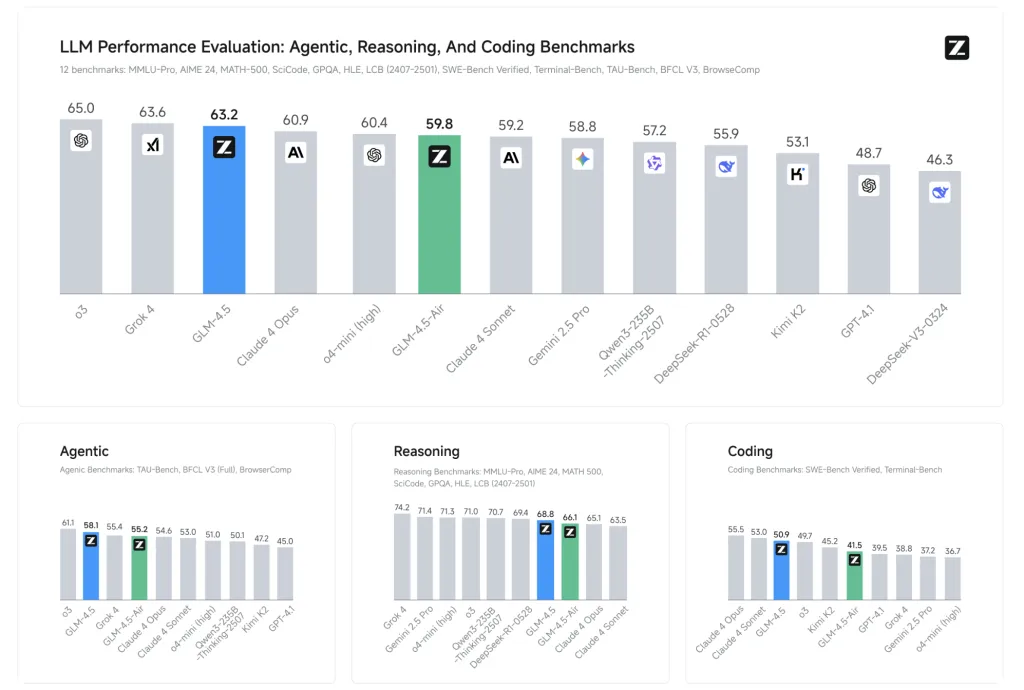

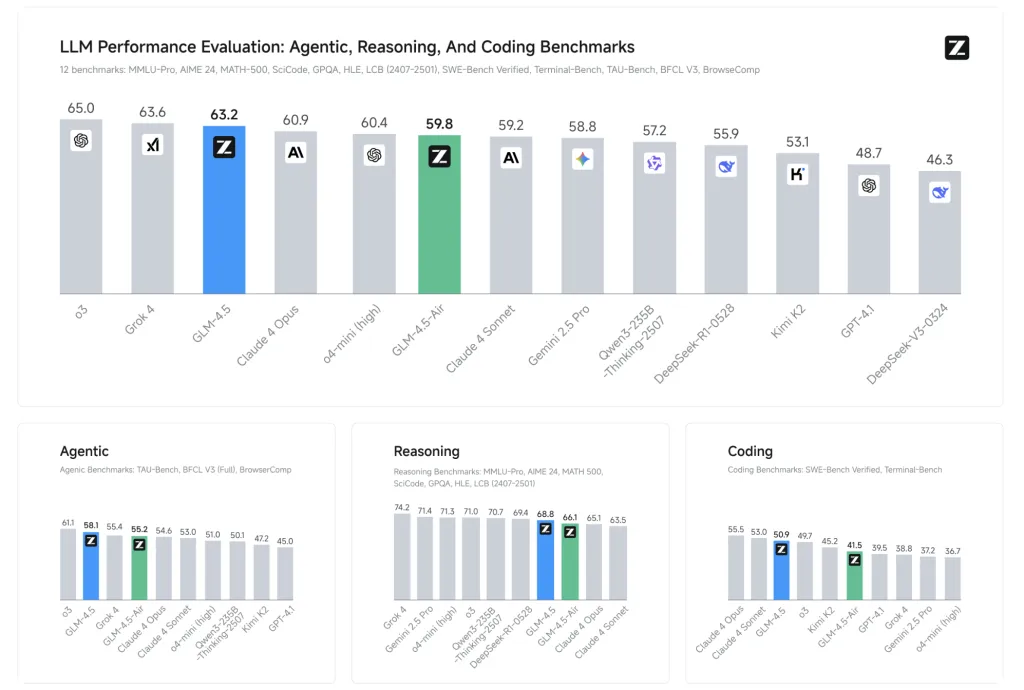

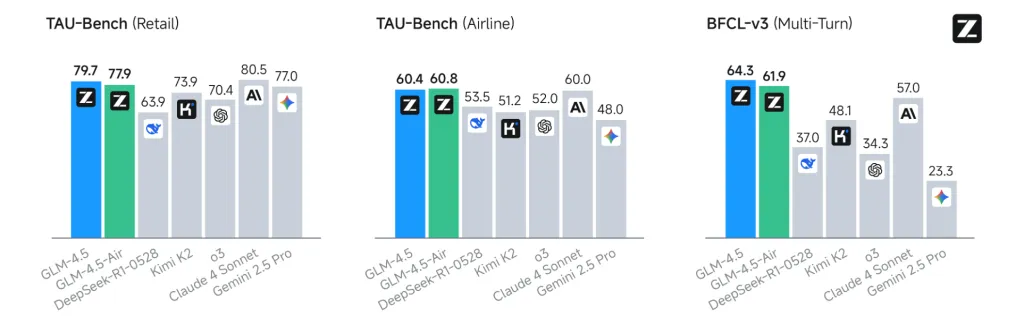

Z.a Benchmarked GLM-4.5 on 12 standard industry tests (including MMLU, GSM8K, Humaneval):

- GLM-4.5: Average reference score of 63.2, ranked third in total (second world, leading among all open source models).

- GLM-4.5-Air: Offers a competitive 59.8, establishing itself as the leader among the parameter models ~ 100b.

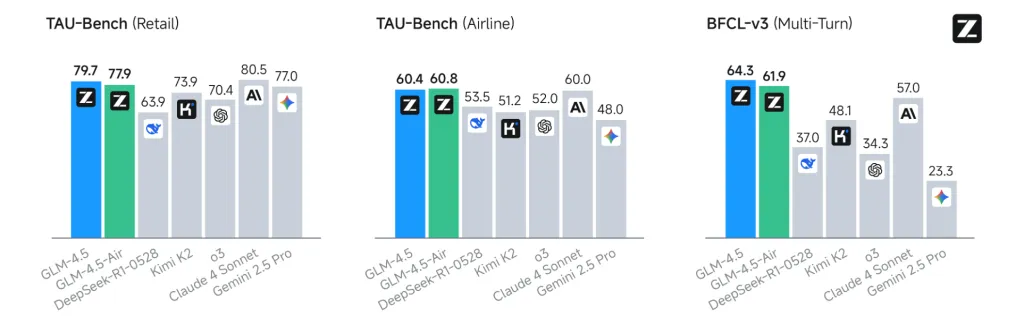

- Surpass notable rivals in specific fields: 90.6%tool call for tools, outperforming Claude 3.5 Sonnet and Kimi K2.

- Particular results in tasks and coding of the Chinese language, with coherent Sota results through open references.

Agentic capabilities and architecture

GLM-4.5 advances a "native agent" design: core agentic capabilities (reasoning, planning, action execution) are built directly into the model's architecture. In practice, this provides:

- Multi-step task decomposition and planning

- Tool use and integration with external APIs

- Complex data visualization and workflow management

- Native support for reasoning and perception-action loops

Together, these capabilities make end-to-end agentic applications possible that were previously limited to narrower, hard-coded frameworks or closed-source APIs.
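The reason-act-observe cycle described above can be sketched as a small loop. In this hypothetical example, `scripted_model` stands in for GLM-4.5 and simply returns hard-coded decisions; the message format and the tool registry are invented for illustration and are not Z.ai's tool-calling schema.

```python
import json

# Hypothetical tool registry: tool name -> Python function
TOOLS = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def scripted_model(history):
    """Stand-in for the LLM: returns either a tool call or a final answer.

    A real agent would send `history` to the model and parse its reply.
    """
    tool_results = [m for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "add", "args": {"a": 2, "b": 3}}
    if len(tool_results) == 1:
        previous = json.loads(tool_results[-1]["content"])
        return {"tool": "multiply", "args": {"a": previous, "b": 4}}
    return {"answer": f"The result is {tool_results[-1]['content']}"}


def run_agent(task, model, tools, max_steps=5):
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model(history)  # reason/plan: decide the next action
        if "answer" in step:
            return step["answer"], history
        result = tools[step["tool"]](**step["args"])  # act: execute the tool
        history.append({"role": "assistant", "content": json.dumps(step)})
        history.append({"role": "tool", "content": json.dumps(result)})  # observe
    raise RuntimeError("agent did not finish within max_steps")


answer, trace = run_agent("Compute (2 + 3) * 4", scripted_model, TOOLS)
```

Replacing `scripted_model` with a function that sends `history` to a real model and parses its tool-call output would turn this into a working agent; the `max_steps` cap guards against loops that never reach an answer.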

Efficiency, speed and cost

- Speculative decoding and multi-token prediction (MTP): With features such as MTP, GLM-4.5 achieves 2.5× to 8× faster inference than previous models, with generation speeds above 100 tokens/sec on the high-speed API and up to 200 tokens/sec claimed in practice.

- Memory and hardware: GLM-4.5-Air's 12B-active design is compatible with widely available GPUs (32–64 GB of VRAM) and can be quantized to fit a wider range of hardware. This lets advanced users run a high-performance LLM locally.

- Pricing: API calls start as low as $0.11 per million input tokens and $0.28 per million output tokens, industry-leading prices for the scale and quality offered.
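The pricing and memory figures above lend themselves to quick back-of-envelope estimates. This sketch uses the quoted starting prices as defaults and treats weight memory as total parameters × bits per parameter ÷ 8. It ignores KV cache, activations, and runtime overhead, so real requirements are higher.

```python
def api_cost_usd(input_tokens, output_tokens,
                 usd_per_m_input=0.11, usd_per_m_output=0.28):
    """Estimate API cost in USD from token counts and per-million-token prices."""
    return ((input_tokens / 1_000_000) * usd_per_m_input
            + (output_tokens / 1_000_000) * usd_per_m_output)


def weight_memory_gb(total_params_billion, bits_per_param):
    """Approximate memory (GB = 10**9 bytes) needed just to store the weights."""
    # params * bits / 8 = bytes; with params in billions, the result is in GB
    return total_params_billion * bits_per_param / 8


# 10M input tokens + 2M output tokens at the starting prices
cost = api_cost_usd(10_000_000, 2_000_000)  # about $1.66
# GLM-4.5-Air: all 106B weights must be stored, even though only 12B are active
air_bf16 = weight_memory_gb(106, 16)  # 212.0 GB
air_int4 = weight_memory_gb(106, 4)   # 53.0 GB
```

Memory follows the total parameter count, not the active one: even at 4 bits, GLM-4.5-Air's 106B weights come to about 53 GB, so running it at the lower end of the quoted VRAM range likely depends on more aggressive quantization or on offloading some weights to CPU memory.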

Open source access and ecosystem

A keystone of the GLM-4.5 series is its open-source MIT license: base models, hybrid (thinking/non-thinking) models, and FP8 versions are all released for commercial use and secondary development without restriction. The code, tool parsers, and reasoning parsers are integrated into major LLM frameworks, including Transformers, vLLM, and SGLang, with detailed guides available on GitHub and Hugging Face.

The models can be served through major inference engines, with fine-tuning and on-premises deployment fully supported. This level of openness and flexibility contrasts sharply with the increasingly closed stance of Western rivals.

Key technical innovations

- Multi-token prediction (MTP) layer for speculative decoding, considerably increasing inference speed on CPUs and GPUs.

- Unified architecture for reasoning, coding and multimodal perception workflows.

- Trained on 15 trillion tokens, with support for input context windows of up to 128K tokens and outputs of up to 96K tokens.

- Out-of-the-box compatibility with research and production tools, including instructions for fine-tuning and adapting the models to new use cases.
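The speedup from MTP comes from speculative decoding: a cheap drafter (for GLM-4.5, the MTP layer described above) proposes several tokens and the full model verifies them. Below is a minimal sketch of the greedy variant, with toy `draft_next` and `target_next` functions standing in for the MTP layer and the main model; both are hypothetical. Sampling-based speculative decoding uses a probabilistic acceptance rule that is not shown here.

```python
def speculative_step(prefix, draft_next, target_next, k=4):
    """One round of greedy speculative decoding; returns the newly kept tokens."""
    # 1) The draft proposes k tokens autoregressively (cheap)
    proposal, ctx = [], list(prefix)
    for _ in range(k):
        token = draft_next(ctx)
        proposal.append(token)
        ctx.append(token)
    # 2) The target verifies. A real system scores all k positions in one
    #    batched forward pass; here we call it per position for clarity.
    accepted, ctx = [], list(prefix)
    for token in proposal:
        expected = target_next(ctx)
        if expected != token:
            accepted.append(expected)  # first mismatch: keep the target's token
            return accepted
        accepted.append(token)
        ctx.append(token)
    # 3) All k drafts accepted: the verification pass yields one bonus token
    accepted.append(target_next(ctx))
    return accepted


def generate(prefix, draft_next, target_next, n_tokens, k=4):
    out = list(prefix)
    while len(out) - len(prefix) < n_tokens:
        out.extend(speculative_step(out, draft_next, target_next, k))
    return out[: len(prefix) + n_tokens]


def greedy(prefix, target_next, n_tokens):
    # Reference: plain one-token-at-a-time greedy decoding with the target
    out = list(prefix)
    for _ in range(n_tokens):
        out.append(target_next(out))
    return out


def target_next(ctx):
    # Toy "main model": the next token is always (last + 1) mod 10
    return (ctx[-1] + 1) % 10


def draft_next(ctx):
    # Toy "draft head": agrees with the target except when len(ctx) % 3 == 0
    return (ctx[-1] + 1) % 10 if len(ctx) % 3 else 0
```

Because every kept token is either confirmed or produced by the target, `generate` returns exactly what `greedy` would; the gain in a real system comes from checking all k draft positions in one forward pass of the large model instead of k sequential passes.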

In summary, GLM-4.5 and GLM-4.5-Air represent a major leap for open-source, agentic, reasoning-focused foundation models. They set new standards for accessibility, performance, and unified cognitive capabilities, providing a robust backbone for the next generation of intelligent agents and developer applications.

Check out GLM-4.5, GLM-4.5-Air, the GitHub page, and the technical details. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with the public.