In this tutorial, we begin by setting up a compact yet capable AI agent that runs smoothly on Hugging Face Transformers. We integrate dialogue generation, question answering, sentiment analysis, a web search stub, weather look-ups, and a safe calculator into a single Python class. As we progress, we install only the essential libraries, load lightweight models that respect Colab's memory limits, and wrap each capability in tidy, reusable methods. Together, we explore how every component, from intent detection to device-aware model loading, fits into a coherent workflow that lets us prototype sophisticated multi-tool agents.

    !pip install transformers torch accelerate datasets requests beautifulsoup4

    import torch
    import json
    import requests
    from datetime import datetime
    from transformers import (
        AutoTokenizer, AutoModelForCausalLM, AutoModelForSequenceClassification,
        AutoModelForQuestionAnswering, pipeline
    )
    from bs4 import BeautifulSoup
    import warnings

    warnings.filterwarnings('ignore')

We begin by installing the core Python libraries (Transformers, Torch, Accelerate, Datasets, Requests, and BeautifulSoup) so that our Colab environment has everything it needs for model loading, inference, and web scraping. Next, we import PyTorch, the JSON utilities, the HTTP and date helpers, the Hugging Face classes for generation, classification, and QA, and BeautifulSoup for HTML parsing, while silencing unnecessary warnings to keep the notebook output clean.

    class AdvancedAIAgent:
        def __init__(self):
            """Initialize the AI Agent with multiple models and capabilities"""
            self.device = "cuda" if torch.cuda.is_available() else "cpu"
            print(f"🚀 Initializing AI Agent on {self.device}")
            self._load_models()
            # Registry of helper tools, keyed by intent name
            self.tools = {
                "web_search": self.web_search,
                "calculator": self.calculator,
                "weather": self.get_weather,
                "sentiment": self.analyze_sentiment
            }
            print("✅ AI Agent initialized successfully!")

        def _load_models(self):
            """Load all required models"""
            print("📥 Loading models...")
            # Dialogue generation model and tokenizer
            self.gen_tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")
            self.gen_model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")
            self.gen_tokenizer.pad_token = self.gen_tokenizer.eos_token
            # Sentiment analysis pipeline (device 0 = first GPU, -1 = CPU)
            self.sentiment_pipeline = pipeline(
                "sentiment-analysis",
                model="cardiffnlp/twitter-roberta-base-sentiment-latest",
                device=0 if self.device == "cuda" else -1
            )
            # Extractive question-answering pipeline
            self.qa_pipeline = pipeline(
                "question-answering",
                model="distilbert-base-cased-distilled-squad",
                device=0 if self.device == "cuda" else -1
            )
            print("✅ All models loaded!")

        def generate_response(self, prompt, max_length=100, temperature=0.7):
            """Generate text response using the language model"""
            inputs = self.gen_tokenizer.encode(prompt + self.gen_tokenizer.eos_token,
                                               return_tensors="pt")
            with torch.no_grad():
                outputs = self.gen_model.generate(
                    inputs,
                    max_length=max_length,
                    temperature=temperature,
                    do_sample=True,
                    pad_token_id=self.gen_tokenizer.eos_token_id,
                    attention_mask=torch.ones_like(inputs)
                )
            # Decode only the newly generated tokens, skipping the prompt tokens
            response = self.gen_tokenizer.decode(outputs[0][len(inputs[0]):],
                                                 skip_special_tokens=True)
            return response.strip()

        def analyze_sentiment(self, text):
            """Analyze sentiment of given text"""
            # The pipeline returns a list with one result dict per input
            result = self.sentiment_pipeline(text)[0]
            return {
                "sentiment": result['label'],
                "confidence": round(result['score'], 4),
                "text": text
            }

        def answer_question(self, question, context):
            """Answer questions based on given context"""
            result = self.qa_pipeline(question=question, context=context)
            return {
                "answer": result['answer'],
                "confidence": round(result['score'], 4),
                "question": question
            }

        def web_search(self, query):
            """Simulate web search (replace with actual API if needed)"""
            try:
                return {
                    "query": query,
                    "results": f"Search results for '{query}': Latest information retrieved successfully.",
                    "timestamp": datetime.now().strftime("%Y-%m-%d %H:%M:%S")
                }
            except Exception as e:
                return {"error": f"Search failed: {str(e)}"}

        def calculator(self, expression):
            """Safe calculator function"""
            try:
                # Whitelist characters before calling eval(); note that '**' still passes
                allowed_chars = set('0123456789+-*/.() ')
                if not all(c in allowed_chars for c in expression):
                    return {"error": "Invalid characters in expression"}
                result = eval(expression)
                return {
                    "expression": expression,
                    "result": result,
                    "type": type(result).__name__
                }
            except Exception as e:
                return {"error": f"Calculation failed: {str(e)}"}

        def get_weather(self, location):
            """Mock weather function (replace with actual weather API)"""
            return {
                "location": location,
                "temperature": "22°C",
                "condition": "Partly cloudy",
                "humidity": "65%",
                "note": "This is mock data. Integrate with a real weather API for actual data."
            }

        def detect_intent(self, user_input):
            """Simple intent detection based on keywords"""
            user_input = user_input.lower()
            if any(word in user_input for word in ['calculate', 'math', '+', '-', '*', '/']):
                return 'calculator'
            elif any(word in user_input for word in ['weather', 'temperature', 'forecast']):
                return 'weather'
            elif any(word in user_input for word in ['search', 'find', 'look up']):
                return 'web_search'
            elif any(word in user_input for word in ['sentiment', 'emotion', 'feeling']):
                return 'sentiment'
            elif '?' in user_input:
                return 'question_answering'
            else:
                return 'chat'

        def process_request(self, user_input, context=""):
            """Main method to process user requests"""
            print(f"🤖 Processing: {user_input}")
            intent = self.detect_intent(user_input)
            response = {"intent": intent, "input": user_input}
            try:
                if intent == 'calculator':
                    import re
                    # Keep only candidate spans that contain a digit, so a stray
                    # space or hyphen is not mistaken for the expression
                    expr = [m.strip() for m in re.findall(r'[0-9+\-*/.() ]+', user_input)
                            if any(ch.isdigit() for ch in m)]
                    if expr:
                        result = self.calculator(expr[0])
                        response.update(result)
                    else:
                        response["error"] = "No valid mathematical expression found"
                elif intent == 'weather':
                    # Use the word that follows 'in', 'at', or 'for' as the location
                    words = user_input.split()
                    location = "your location"
                    for i, word in enumerate(words):
                        if word.lower() in ['in', 'at', 'for']:
                            if i + 1 < len(words):
                                location = words[i + 1]
                                break
                    result = self.get_weather(location)
                    response.update(result)
                elif intent == 'web_search':
                    query = user_input.replace('search', '').replace('find', '').strip()
                    result = self.web_search(query)
                    response.update(result)
                elif intent == 'sentiment':
                    text_to_analyze = user_input.replace('sentiment', '').strip()
                    if not text_to_analyze:
                        text_to_analyze = "I'm feeling great today!"
                    result = self.analyze_sentiment(text_to_analyze)
                    response.update(result)
                elif intent == 'question_answering' and context:
                    result = self.answer_question(user_input, context)
                    response.update(result)
                else:
                    # Fall back to free-form generation
                    generated_response = self.generate_response(user_input)
                    response["response"] = generated_response
                    response["type"] = "generated_text"
            except Exception as e:
                response["error"] = f"Error processing request: {str(e)}"
            return response

We encapsulate our entire toolkit in an AdvancedAIAgent class that selects the GPU when available, loads the dialogue, sentiment, and QA models, and registers helper tools for search, weather, and arithmetic. With lightweight keyword-based intent detection, we dynamically route each user message to the right pipeline or fall back to free-form generation, giving us a unified multi-skill agent driven by a few clean methods.
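The calculator method filters characters before calling `eval`, but the whitelist still admits `**`, so an input such as `9**9**9` passes the filter and can be very expensive to evaluate. As one possible alternative, here is a minimal sketch that parses the expression with Python's `ast` module and evaluates only the four basic operators. The names `safe_calculate`, `_eval_node`, `_BINARY_OPS`, and `_UNARY_OPS` are hypothetical and not part of the original agent.

```python
import ast
import operator

# Hypothetical whitelist: only the four basic arithmetic operators are allowed.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _eval_node(node):
    """Recursively evaluate a parsed arithmetic expression node."""
    if isinstance(node, ast.Expression):
        return _eval_node(node.body)
    # Accept plain int/float literals only (no strings, booleans, or names)
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        left = _eval_node(node.left)
        right = _eval_node(node.right)
        return _BINARY_OPS[type(node.op)](left, right)
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_eval_node(node.operand))
    # Anything else (calls, names, power, attribute access) is rejected
    raise ValueError("Unsupported expression")


def safe_calculate(expression):
    """Evaluate +, -, *, / arithmetic without calling eval()."""
    try:
        tree = ast.parse(expression.strip(), mode="eval")
        result = _eval_node(tree)
        return {
            "expression": expression,
            "result": result,
            "type": type(result).__name__,
        }
    except (SyntaxError, ValueError, ZeroDivisionError) as e:
        return {"error": f"Calculation failed: {e}"}


print(safe_calculate("25 * 4 + 10"))  # result is 110
print(safe_calculate("2 ** 8"))       # rejected: power is not whitelisted
```

The return shape mirrors the dictionary produced by the original `calculator` method, so a function like this could be swapped in without touching the rest of the request handling.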

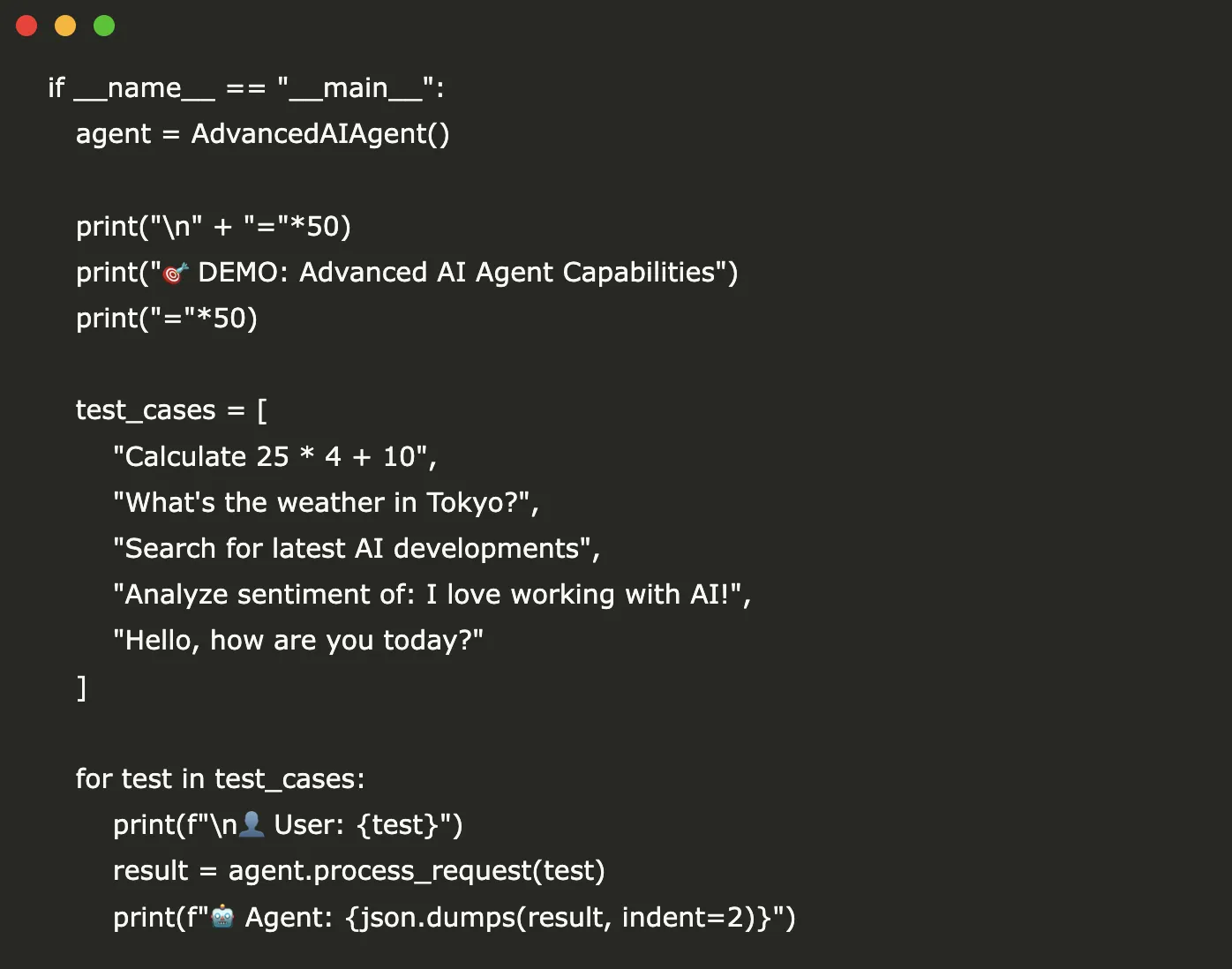

    if __name__ == "__main__":
        agent = AdvancedAIAgent()

        print("\n" + "="*50)
        print("🎯 DEMO: Advanced AI Agent Capabilities")
        print("="*50)

        test_cases = [
            "Calculate 25 * 4 + 10",
            "What's the weather in Tokyo?",
            "Search for latest AI developments",
            "Analyze sentiment of: I love working with AI!",
            "Hello, how are you today?"
        ]

        for test in test_cases:
            print(f"\n👤 User: {test}")
            result = agent.process_request(test)
            print(f"🤖 Agent: {json.dumps(result, indent=2)}")

        """
        print("\n🎮 Interactive Mode - Type 'quit' to exit")
        while True:
            user_input = input("\n👤 You: ")
            if user_input.lower() == 'quit':
                break
            result = agent.process_request(user_input)
            print(f"🤖 Agent: {json.dumps(result, indent=2)}")
        """

We conclude by instantiating the AdvancedAIAgent, announcing a quick demo section, and firing five representative prompts that test calculation, weather, search, sentiment, and open-ended chat in one sweep. After reviewing the neatly formatted JSON responses, we keep an optional interactive loop on standby, ready for live experimentation whenever we decide to uncomment it.
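To see how the five demo prompts are routed without loading any model, the keyword check can be exercised on its own. The sketch below copies the keyword lists from the agent's `detect_intent` method into a standalone function; the `demo_prompts` list and the module-level function are only for illustration.

```python
def detect_intent(user_input):
    """Standalone copy of the agent's keyword-based intent detection."""
    text = user_input.lower()
    if any(word in text for word in ["calculate", "math", "+", "-", "*", "/"]):
        return "calculator"
    elif any(word in text for word in ["weather", "temperature", "forecast"]):
        return "weather"
    elif any(word in text for word in ["search", "find", "look up"]):
        return "web_search"
    elif any(word in text for word in ["sentiment", "emotion", "feeling"]):
        return "sentiment"
    elif "?" in text:
        return "question_answering"
    else:
        return "chat"


demo_prompts = [
    "Calculate 25 * 4 + 10",
    "What's the weather in Tokyo?",
    "Search for latest AI developments",
    "Analyze sentiment of: I love working with AI!",
    "Hello, how are you today?",
]

for prompt in demo_prompts:
    # Print the detected intent next to the prompt that produced it
    print(f"{detect_intent(prompt):<20} <- {prompt}")
```

The last prompt is labeled `question_answering` because it contains a question mark, but since the demo passes no context, `process_request` falls through to free-form generation. Because the check is substring matching in a fixed order, any input containing a hyphen or slash is treated as a calculation, which is worth keeping in mind when extending the keyword lists.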

In conclusion, we have tested a variety of real-world prompts and observed how the agent handles arithmetic, fetches mock weather data, gauges sentiment, and engages in natural conversation, all through a single unified interface built on Hugging Face models. This exercise shows how we can stitch several NLP tasks into an extensible framework that remains friendly to Colab resources.
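One way to picture the extensibility is through the tool registry: the agent stores its helpers in a `tools` dictionary keyed by intent name, although `process_request` routes with an if/elif chain. The following sketch, under the assumption that routing is done through such a dictionary instead, shows how a new tool could be added with a single entry. The `TOOLS` registry, the `dispatch` function, and the `get_time` tool are hypothetical, and the tool bodies are simplified stand-ins for the agent's methods.

```python
from datetime import datetime


def get_weather(location):
    """Mock weather tool, mirroring the agent's placeholder data."""
    return {"location": location, "temperature": "22°C", "note": "mock data"}


def web_search(query):
    """Simulated search tool; no network request is made."""
    return {"query": query, "results": f"Search results for '{query}' (simulated)"}


def get_time(_argument):
    """Hypothetical extra tool, not part of the original agent."""
    return {"time": datetime.now().strftime("%H:%M:%S")}


# Registry mapping intent names to callables
TOOLS = {"weather": get_weather, "web_search": web_search}


def dispatch(intent, argument):
    """Look up the tool for an intent and merge its result into the response."""
    response = {"intent": intent}
    tool = TOOLS.get(intent)
    if tool is None:
        response["error"] = f"No tool registered for '{intent}'"
        return response
    response.update(tool(argument))
    return response


# Adding a capability is a single registry entry
TOOLS["time"] = get_time

print(dispatch("weather", "Tokyo"))
print(dispatch("time", ""))
print(dispatch("translate", "hello"))
```

With this layout, unknown intents produce an explicit error entry rather than falling through silently, and intent detection stays separate from tool execution.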

Check out the Codes. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with the public.