When it comes to learning, humans and artificial intelligence (AI) share a common challenge: how to forget information they should not know. As AI programs develop rapidly, especially those trained on large datasets, this problem becomes critical. Imagine an AI model that inadvertently generates content using copyrighted material or violent imagery: such situations can lead to legal complications and ethical concerns.

Researchers from the University of Texas at Austin have tackled this problem head-on by applying the concept of "machine unlearning." In a recent study, a team of scientists led by Radu Marculescu developed a method that allows generative models to selectively forget problematic content without discarding their entire knowledge base.

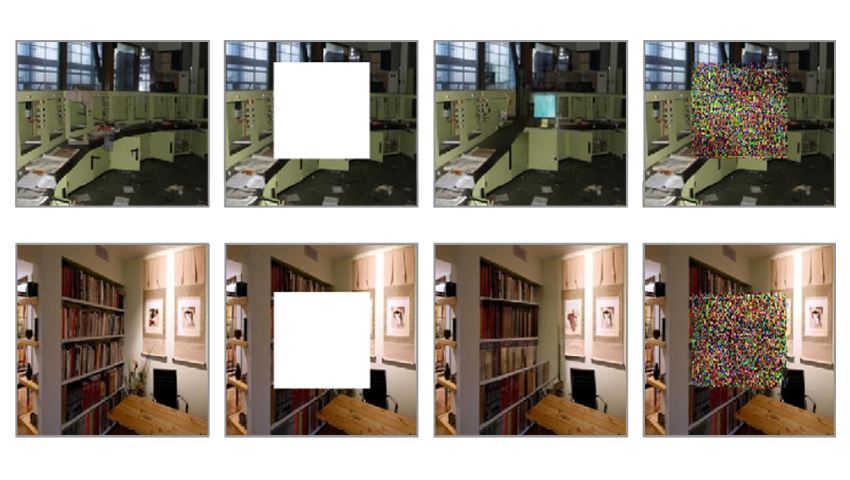

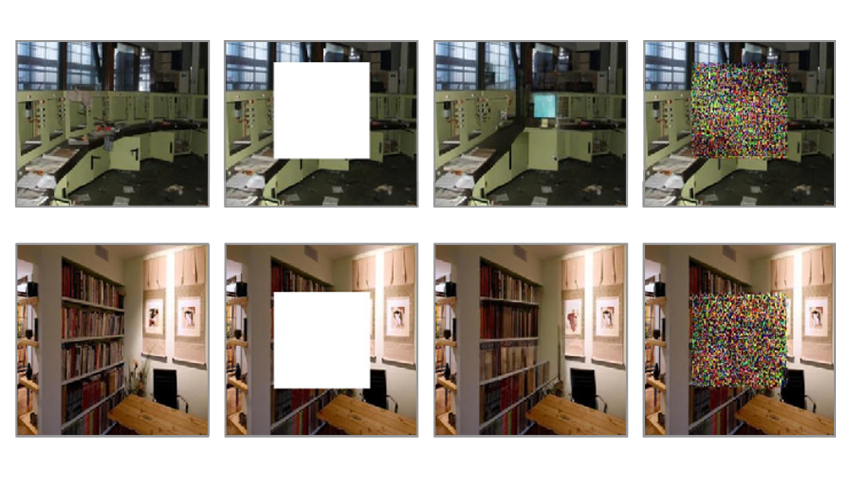

At the heart of their research are image-to-image models, which transform input images based on contextual instructions. The new machine unlearning algorithm lets these models remove flagged content without undergoing extensive retraining. Human moderators oversee content removal, providing an additional layer of oversight and responsiveness to user feedback.

Although machine unlearning has traditionally been applied to classification models, its adaptation to generative models is an emerging frontier. Generative models, especially those that process images, pose unique challenges. Unlike classifiers, which make discrete decisions, generative models produce rich, continuous outputs. Ensuring that they unlearn specific aspects without compromising their creative capabilities is a delicate balancing act.
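The article does not describe the study's actual training objective, so what follows is only a toy illustration of the balancing act in general, not the UT Austin team's method. It assumes a hypothetical linear model and a simple two-term loss: one term keeps the model's outputs on a "retain set" close to the original model's, and the other pulls outputs on a "forget set" toward an uninformative target. The names `predict`, `unlearn`, `alpha`, and `forget_target` are all hypothetical.

```python
# Toy sketch of a retain/forget unlearning objective.
# Hypothetical illustration only; NOT the method from the UT Austin study.
# Model: a linear map f_w(x) = w . x over small input vectors.


def predict(w, x):
    """Linear model output f_w(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def unlearn(w_orig, retain, forget, forget_target=0.0, alpha=1.0, lr=0.1, steps=500):
    """Gradient descent, starting from the existing weights, on
        L(w) = mean_retain (f_w(x) - f_orig(x))^2
             + alpha * mean_forget (f_w(x) - forget_target)^2
    The first term preserves behavior on retained data; the second erases
    what the model produces for the forget set. `alpha` sets the trade-off."""
    w = list(w_orig)  # fine-tune the existing model instead of retraining from scratch
    for _ in range(steps):
        grad = [0.0] * len(w)
        # Retain term: match the original model's outputs.
        for x in retain:
            err = predict(w, x) - predict(w_orig, x)
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(retain)
        # Forget term: pull outputs toward an uninformative target.
        for x in forget:
            err = predict(w, x) - forget_target
            for i, xi in enumerate(x):
                grad[i] += alpha * 2 * err * xi / len(forget)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Usage: feature 0 represents content to keep, feature 1 content to forget.
w_orig = [2.0, 3.0]
retain = [[1.0, 0.0], [0.5, 0.0]]
forget = [[0.0, 1.0]]
w_new = unlearn(w_orig, retain, forget)
print("retained:", round(predict(w_new, [1.0, 0.0]), 4))   # stays at 2.0
print("forgotten:", round(predict(w_new, [0.0, 1.0]), 4))  # driven to ~0.0
```

In this deliberately separable toy, the forgotten direction is driven toward zero while the retained one is untouched. In a real generative model the two goals typically interfere with each other, which is why a weighting such as `alpha` matters.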

As a next step, the researchers plan to explore other modalities, in particular text-to-image models. They also intend to develop more practical benchmarks for controlling generated content and protecting data privacy.

You can read the full study in the paper published on the arXiv preprint server.

As AI continues to evolve, machine unlearning will play an increasingly vital role. It allows AI systems to navigate the fine line between retaining knowledge and generating content responsibly. By incorporating human oversight and selectively forgetting problematic content, we move closer to AI models that learn, adapt, and respect legal and ethical boundaries.