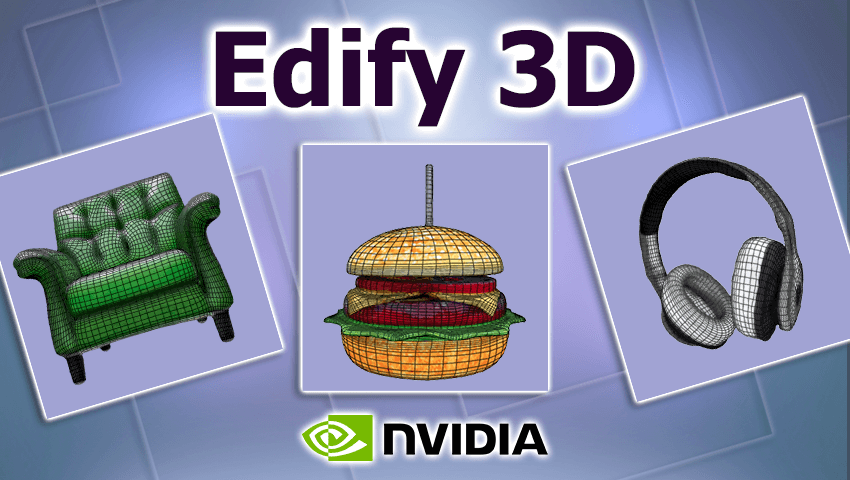

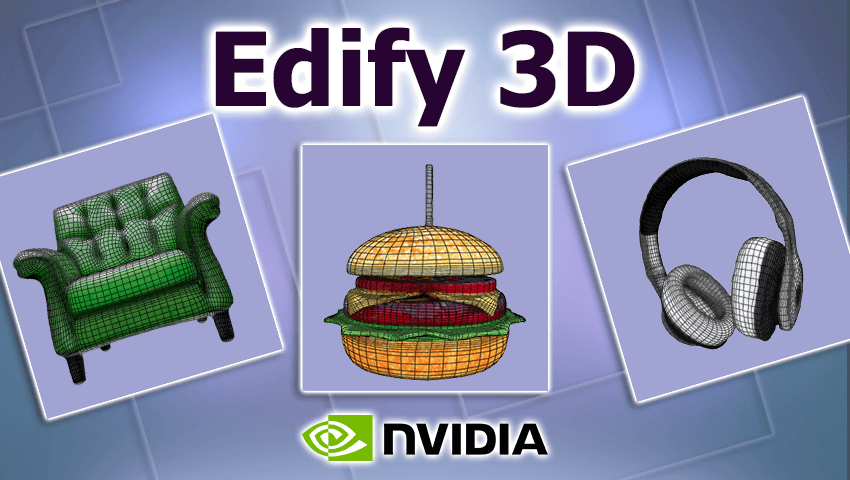

The demand for high-quality 3D assets is booming in industries such as video game design, extended reality, film production, and simulation. However, developing production-ready 3D content is often a complex, lengthy process that requires advanced skills and tools. Edify 3D by NVIDIA takes up these challenges: it is a solution that uses AI to make 3D asset creation faster, easier, and more accessible.

Edify 3D sets a new benchmark for 3D asset creation by generating high-quality assets in under two minutes. It produces 3D models with detailed geometry, clean mesh topologies, UV maps, 4K textures, and physically based rendering (PBR) materials. Whether the input is a text description or a reference image, Edify 3D can generate accurate 3D assets suited to a wide range of applications.

Compared to traditional text-to-3D generation approaches, Edify 3D not only delivers better results in terms of detail and realism, but also improves on efficiency and scalability.

At its core, Edify 3D uses advanced neural networks, combining diffusion models and transformers to push the limits of what AI can achieve in 3D asset generation. The process begins with multi-view diffusion models, which synthesize the RGB appearance and surface normals of an object from multiple viewpoints. These multi-view images are then fed to a transformer-based reconstruction model, which predicts the geometry, texture, and materials of the final 3D shape.
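The two-stage data flow described above can be sketched roughly as follows. This is only an illustration of how the stages connect, not NVIDIA's implementation: the names `View`, `Asset`, `multiview_stage`, `reconstruction_stage`, and `generate` are hypothetical, and strings stand in for images, meshes, and model outputs.

```python
from dataclasses import dataclass


@dataclass
class View:
    camera_id: int
    rgb: str      # placeholder for an RGB image
    normal: str   # placeholder for a surface-normal image


@dataclass
class Asset:
    geometry: str
    texture: str
    material: str


def multiview_stage(prompt, n_views=4):
    """Stand-in for the multi-view diffusion models (hypothetical).

    Returns one RGB/normal pair per viewpoint.
    """
    return [
        View(i, f"rgb({prompt!r}, view={i})", f"normal({prompt!r}, view={i})")
        for i in range(n_views)
    ]


def reconstruction_stage(views):
    """Stand-in for the transformer-based reconstruction model (hypothetical).

    Consumes all views at once and emits a single asset description.
    """
    n = len(views)
    return Asset(
        geometry=f"mesh predicted from {n} views",
        texture=f"texture predicted from {n} views",
        material=f"material predicted from {n} views",
    )


def generate(prompt, n_views=4):
    # Stage 1 output (multi-view images) is the only input to stage 2.
    return reconstruction_stage(multiview_stage(prompt, n_views))
```

Calling `generate("a red chair")` returns an `Asset` whose fields are descriptive placeholders; in a real system each stage would be a trained model.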

The pipeline is highly optimized for scalability and handles both text-to-3D and image-to-3D inputs. For text-to-3D generation, users provide a natural-language description, and the model synthesizes the object based on the prompt and predefined camera poses. For image-to-3D, the system can automatically extract the primary object from a reference image and generate its 3D counterpart, including details for surfaces not visible in the image.

To achieve its results, Edify 3D relies on a meticulously designed data processing pipeline. The system begins by converting raw 3D shape data into a unified format, ensuring compatibility and consistency across datasets. Non-object-centric data, incomplete scans, and low-quality shapes are filtered out through active learning with AI classifiers and human oversight. Canonical pose alignment ensures that all shapes are properly oriented, reducing ambiguity during training.
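As a rough illustration of the curation steps above, the sketch below filters shapes by a quality score and normalizes the survivors to a common scale and position. It is a simplified stand-in under stated assumptions: the `Shape` type, the `quality` field (imagined as the output of a classifier), and the `min_quality` threshold are all hypothetical, and real canonical pose alignment would also fix orientation, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass
class Shape:
    name: str
    vertices: list   # list of (x, y, z) tuples
    quality: float   # hypothetical classifier score in [0, 1]


def normalize(shape):
    """Center the bounding box at the origin and scale its longest side to 1."""
    xs, ys, zs = zip(*shape.vertices)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    center = [(a + b) / 2 for a, b in zip(lo, hi)]
    # Fall back to 1.0 for a degenerate shape to avoid dividing by zero.
    extent = max(b - a for a, b in zip(lo, hi)) or 1.0
    vertices = [
        tuple((v[i] - center[i]) / extent for i in range(3))
        for v in shape.vertices
    ]
    return Shape(shape.name, vertices, shape.quality)


def curate(shapes, min_quality=0.5):
    """Drop low-scoring shapes, then normalize the rest."""
    return [normalize(s) for s in shapes if s.quality >= min_quality]
```

For example, `curate([Shape("chair", [(0, 0, 0), (2, 4, 2)], 0.9), Shape("noise", [(0, 0, 0), (1, 1, 1)], 0.1)])` keeps only the chair and rescales it so its longest side has length 1.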

For training, Edify 3D uses photorealistic rendering techniques to generate multi-view images from the processed 3D shapes. A vision-language model is then used to generate descriptive captions for the rendered images, enriching the dataset with meaningful metadata.
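Rendering multi-view images requires choosing camera positions around each object. One common way to do this, shown here only as a sketch and not as the configuration Edify 3D actually uses, is to place cameras evenly on a ring at a fixed distance and elevation, all looking at the origin. The function name `ring_cameras` and its default values are assumptions made for illustration.

```python
import math


def ring_cameras(n_views, radius=2.0, elevation_deg=20.0):
    """Return n_views camera positions evenly spaced in azimuth.

    Every camera sits at the same distance (radius) from the origin and at
    the same elevation angle above the horizontal plane; z is the up axis.
    """
    elev = math.radians(elevation_deg)
    positions = []
    for i in range(n_views):
        azimuth = 2 * math.pi * i / n_views
        x = radius * math.cos(elev) * math.cos(azimuth)
        y = radius * math.cos(elev) * math.sin(azimuth)
        z = radius * math.sin(elev)
        positions.append((x, y, z))
    return positions
```

With `ring_cameras(4)`, the four cameras sit 90 degrees apart in azimuth. A renderer would then build a look-at matrix from each position toward the object's center.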

For text-to-3D use cases, Edify 3D produces detailed 3D models that closely match the user's description. In image-to-3D scenarios, the system accurately reconstructs the 3D structure of the reference object while “hallucinating” realistic textures for unseen areas, such as the back of an object.

The results of Edify 3D stand out for their quality. The assets feature clean mesh topologies, sharp textures, and detailed geometry. These features make them well suited to downstream editing workflows in industries such as games, animation, and product design.

Find out more about the scalable generation of high-quality 3D assets in the paper on arXiv.