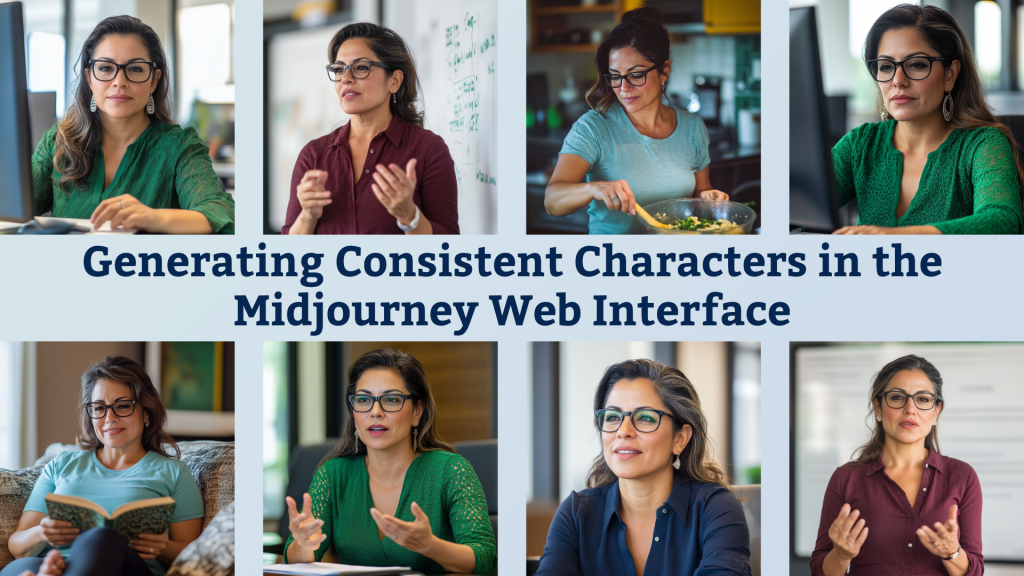

I have been using Midjourney to generate consistent characters for almost a year now. When I first posted about creating consistent characters, Midjourney was only available via Discord. That process still works, but we now have other options with the web interface. On the web, you don't need to copy and paste URLs or remember the --cref syntax. In this article, I will explain how to generate consistent characters in Midjourney with the web interface and share some tips for getting better results.


Generate an initial character reference image

To start, you need an initial reference image for your character. Type your prompt in the field at the top of the page.

Here is my prompt for this example:

Editorial photo, Latina woman, mid 50s, glasses, green blouse, working on a computer in a modern office

I generally use this structure for my prompts:

- Image type: For training images, I find that “editorial photo” is generally a good style. You can use other styles (vector illustration, ink and watercolor, colored pencil sketch, minimalist line drawing, Pixar style, minimalist flat vector illustration, high-precision photo, studio photo, etc.)

- Demographics and character role: I describe who I want, noting gender, race, and age. You can also include a job description here, which is useful if the character needs more specialized clothing.

- Clothing and hair: In this example, I only described glasses and a green blouse. You can add more details here, but simple clothing is easier to keep consistent than complex clothing.

- Setting, action, emotions: Describe the setting and the action. If you want colors or objects in the background, include them here too. I sometimes include “blurred background” to keep the focus on the character.

You don't have to follow this structure strictly, but I generally get good results with this pattern. Midjourney works better with shorter, simpler prompts than with very long and complex ones. It also ignores filler words, so stick with short phrases rather than long sentences and paragraphs.
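If you write many of these prompts (for example, a whole cast of characters for a training course), it can help to treat the four-part structure as a fill-in template. The Python sketch below is only an illustration: Midjourney does not accept structured input, and the `CharacterPrompt` class and its field names are hypothetical. It joins the parts into the same kind of short, comma-separated prompt used in this article, and `dataclasses.replace` swaps out only the last part to create a variation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CharacterPrompt:
    """Hypothetical template for the four-part prompt structure."""
    image_type: str    # e.g. "Editorial photo"
    demographics: str  # gender, race, age, and optionally a job
    clothing: str      # simple clothing is easier to keep consistent
    setting: str       # setting, action, emotions

    def render(self) -> str:
        # Join the parts as short comma-separated phrases, skipping empty ones.
        parts = [self.image_type, self.demographics, self.clothing, self.setting]
        return ", ".join(p.strip() for p in parts if p.strip())


base = CharacterPrompt(
    image_type="Editorial photo",
    demographics="Latina woman, mid 50s",
    clothing="glasses, green blouse",
    setting="working on a computer in a modern office",
)

# A variation that keeps everything except the action.
variation = replace(
    base,
    setting="working on a computer in a modern office, looking at the monitor, typing",
)

print(base.render())
print(variation.render())
```

Keeping each field to a short phrase mirrors the advice above: Midjourney tends to respond better to brief phrases than to full sentences.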

Tip: If you don't specify the race and gender you want for a character, Midjourney (and other AI image generators) will generally default to the most stereotypical race and gender. You have to be deliberate to counter the bias in these tools and in the data they are trained on.

These are the four initial options generated by Midjourney.

In hindsight, I probably should have regenerated to get a shirt with less of a pattern. Solid colors are easier to reproduce across multiple images, and the texture of this shirt is noticeably different when you compare the images side by side. It would probably still be close enough for a training scenario, but it could be a problem for other uses.

Edit if needed

Most of the time, I either pick the option I like best or regenerate until I get something I'm satisfied with. In this case, though, I decided to make a small edit. I like the image on the far right, but her hand has too many fingers. Midjourney does fairly well with hands overall, but you still have to watch for these problems.

When you click to enlarge and view a single image, you can access the Creation Actions menu on the right. Select Editor to change part of the image.

By default, the editor starts with an eraser brush so you can erase the part of the image you want to modify. Err on the side of erasing too much rather than too little to give Midjourney room to adapt.

I didn't need to change the prompt for this image, but you can also do that on this screen. After submitting, I had four new options to choose from.

Use the best image as a character reference

Once you have an image you are satisfied with, use it as a character reference to generate more images.

- Open the reference image so that you can access the Creation actions menu.

- Click Prompt to copy the prompt (which you can then modify).

- Click Image to use the image as a reference.

By default, Midjourney uses it as an image prompt, which means it draws on the image's composition, colors, and other details. The image icon in the lower right corner indicates this.

It's very easy to miss that!

Instead of using the default image prompt, you need to switch it to the character reference option. Hover over the icon to reveal the three options: character reference, style reference, and image prompt. Select the character reference option.

These icons and settings are a bit difficult to find. Hopefully a future version of Midjourney will make them easier to spot.
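If you still work in Discord, each of the three options corresponds to prompt text rather than an icon: an image prompt is an image URL placed at the start of the prompt, a style reference uses `--sref`, and a character reference uses `--cref`. The Python sketch below only builds prompt strings to illustrate that mapping; the `add_reference` function and the `ReferenceRole` enum are hypothetical, the URL is a placeholder, and nothing here calls Midjourney.

```python
from enum import Enum


class ReferenceRole(Enum):
    """The three ways a reference image can be used (hypothetical names)."""
    IMAGE_PROMPT = "image prompt"
    STYLE_REFERENCE = "style reference"
    CHARACTER_REFERENCE = "character reference"


def add_reference(prompt: str, url: str, role: ReferenceRole) -> str:
    """Return Discord-style prompt text for one reference image."""
    if role is ReferenceRole.IMAGE_PROMPT:
        # Image prompts go first; Midjourney borrows composition,
        # colors, and other details from them.
        return f"{url} {prompt}"
    if role is ReferenceRole.STYLE_REFERENCE:
        # Style references carry over the look, not the character.
        return f"{prompt} --sref {url}"
    # Character references focus on the character's features.
    return f"{prompt} --cref {url}"


base = (
    "Editorial photo, Latina woman, mid 50s, glasses, green blouse, "
    "working on a computer in a modern office"
)
print(add_reference(base, "https://example.com/reference.png",
                    ReferenceRole.CHARACTER_REFERENCE))
```

The web interface's default corresponds to the `IMAGE_PROMPT` branch, which is why it is worth switching the icon before generating.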

Modify the prompt for the differences you want. For my first variation, I wanted to show her working rather than looking at the camera. I used the same basic prompt but changed the action at the end:

Editorial photo, Latina woman, mid 50s, glasses, green blouse, working on a computer in a modern office, looking at the monitor, typing

Click the “Use” button to generate additional consistent characters

Once you have generated a variation with the right settings, it is faster to generate additional images of the same character. Click the Use button on the right side to reuse the same prompt and character reference image. This copies the prompt and the image to the top of the page so you can modify them.

This time, I wanted her to be talking and gesturing with her hands.

Adjust character weight to change clothing and hair

If you want to change clothing and hair while keeping the facial features consistent, use the character weight parameter (--cw). Character weight is set to 100 by default, but you can lower it to as low as 0. I used --cw 20 to change the color of her shirt in these images. You can also see more variation in her hair.
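In Discord, character weight is written at the end of the prompt alongside the character reference, for example `--cref <image URL> --cw 20`. The Python sketch below assembles such a prompt and checks that the weight falls within the 0 to 100 range. The `with_character_reference` helper, the example URL, and the “blue blouse” wording are hypothetical placeholders; the function only builds text and does not call Midjourney.

```python
def with_character_reference(prompt: str, cref_url: str, cw: int = 100) -> str:
    """Append --cref and --cw parameters to a prompt (hypothetical helper)."""
    # Character weight can go from 0 to 100; 100 is the default.
    if not 0 <= cw <= 100:
        raise ValueError(f"--cw must be between 0 and 100, got {cw}")
    params = f"--cref {cref_url}"
    # Only spell out --cw when it differs from the default of 100.
    if cw != 100:
        params += f" --cw {cw}"
    return f"{prompt} {params}"


# A lower weight leaves room for the prompt to change clothing and hair.
# The "blue blouse" wording and the URL are made-up examples.
prompt = (
    "Editorial photo, Latina woman, mid 50s, glasses, blue blouse, "
    "working on a computer in a modern office"
)
print(with_character_reference(prompt, "https://example.com/reference.png", cw=20))
```

Omitting `--cw` at 100 is just a choice to keep prompts short; since 100 is the default, writing `--cw 100` explicitly should have the same effect.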

Tip: In these examples, I ended up with more variations looking directly at the camera than I really wanted. I think the problem is the pose in my original reference image. Try starting with a reference image in a more natural pose, or use an image with a different pose as the reference.

Character generation failures

Just as searching for stock images means browsing through images that don't meet your needs, Midjourney sometimes produces images that don't work. I tried to get an image of her pouring coffee in the office break room, but I wasn't satisfied with any of the results. Even showing her just holding a cup of coffee produced a bunch of strange results: awkward hand positions, unrealistic handles, wrong-sized cups, bad hands, etc.

If I really needed this image for a project, I would have found a reference image with the right pose to include in the prompt. Since this is just for a blog post, I abandoned this prompt and moved on.

Try a white background to focus on expressions

Another option for creating character images is to put them on a white background. This can be very effective if you want to focus on the face and generate different expressions and emotions. A plain background also makes it easier to place characters in a scene with other characters (something that is still difficult to do in Midjourney, but which can be done in other image editing tools and other AI tools).

In this example, some of the images resemble the original character less closely than others. However, several of them could work in a training scenario.

Further reading

Midjourney has additional information in their documentation on How to use character references.

Read my previous articles on generating images with Midjourney.