Model Context Protocol (MCP) servers have quickly become a backbone for integrating scalable, secure, and agentic applications, especially as organizations seek to expose their services to AI-driven workflows while preserving developer experience, performance, and safety. Here are seven data-driven best practices for building, testing, and packaging robust MCP servers.

1. Intentional tool budget management

- Define a clear toolset: Avoid mapping every API endpoint to a new MCP tool. Instead, group related tasks and design higher-level functions. Overloading the toolset increases server complexity and deployment cost, and can deter users. In a Docker MCP Catalog review, focused tool selection was found to improve user adoption by up to 30%.

- Use macros and chaining: Implement prompts that chain several backend calls, so users can trigger complex workflows with a single instruction. This reduces both the cognitive load on users and the potential for errors.
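The grouping and chaining advice above can be sketched in plain Python. This is a minimal illustration, assuming a hypothetical order API: `fetch_order`, `fetch_customer_email`, `fetch_tracking_url`, and `order_status_summary` are made-up names, and the `TOOLS` dict only stands in for whatever tool-registration mechanism your MCP SDK provides.

```python
"""Sketch: one task-level tool that chains several backend calls.

All function names are hypothetical stand-ins for your own API client.
"""
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    customer_id: str
    status: str


# Hypothetical low-level backend calls, one per API endpoint.
def fetch_order(order_id: str) -> Order:
    return Order(order_id=order_id, customer_id="c-42", status="shipped")


def fetch_customer_email(customer_id: str) -> str:
    return f"{customer_id}@example.com"


def fetch_tracking_url(order_id: str) -> str:
    return f"https://tracking.example.com/{order_id}"


def order_status_summary(order_id: str) -> dict:
    """Single high-level tool that chains three backend calls into one answer.

    Instead of exposing the three fetch_* functions as separate tools,
    the server exposes only this one, so a single request does the job.
    """
    order = fetch_order(order_id)
    return {
        "order_id": order.order_id,
        "status": order.status,
        "notify": fetch_customer_email(order.customer_id),
        "tracking": fetch_tracking_url(order.order_id),
    }


# What the server would advertise: one task-level tool, not three endpoint wrappers.
TOOLS = {"order_status_summary": order_status_summary}
```

Calling `TOOLS["order_status_summary"]("A-1001")` returns the status, contact address, and tracking link in one response. The model sees a single, well-described tool, which keeps the tool budget small.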

2. Shift security left: eliminate vulnerable dependencies

- Depend on secure components: MCP servers often handle sensitive data. Scan your codebase and dependencies for vulnerabilities using tools like Snyk, which automatically detects risks such as command injection and outdated packages.

- Meet compliance requirements: A software bill of materials (SBOM) and strict vulnerability management have become industry standards, particularly after major security incidents.

- Example: Snyk reports that organizations that implemented continuous security scanning saw, on average, 48% fewer vulnerability incidents in production.
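To make the "shift left" idea concrete, the sketch below shows a toy pre-merge gate that flags unpinned dependencies and versions on an advisory list. It is illustrative only and is not how Snyk works. `KNOWN_VULNERABLE` is hypothetical example data. In practice that data would come from a scanner or an SBOM-driven vulnerability feed.

```python
"""Toy pre-merge dependency gate (illustrative only, not a scanner replacement).

KNOWN_VULNERABLE is hypothetical advisory data; a real pipeline would pull
it from a vulnerability scanner or an SBOM-driven feed.
"""
from __future__ import annotations

KNOWN_VULNERABLE = {
    # package name -> affected versions (made-up example data)
    "examplelib": {"1.0.0", "1.0.1"},
}


def parse_requirements(text: str) -> list[tuple[str, str | None]]:
    """Parse 'name==version' lines; version is None when not pinned."""
    deps = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        if "==" in line:
            name, version = line.split("==", 1)
            deps.append((name.strip().lower(), version.strip()))
        else:
            deps.append((line.lower(), None))
    return deps


def audit(text: str) -> list[str]:
    """Return findings; an empty list means the gate passes."""
    findings = []
    for name, version in parse_requirements(text):
        if version is None:
            findings.append(f"{name}: not pinned (builds are not reproducible)")
        elif version in KNOWN_VULNERABLE.get(name, set()):
            findings.append(f"{name}=={version}: known vulnerable version")
    return findings
```

Running `audit()` in CI and failing the build on any finding catches problems before they reach a release, which is the point of shifting security left.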

3. Test thoroughly: locally and remotely

- Local first, then remote testing: Start with fast local tests for quick iteration, then move to network-based remote tests that reflect real-world deployment scenarios.

- Leverage dedicated tools: Use specialized tools such as the MCP Inspector, which lets you test tools interactively, inspect schemas, review logs, and diagnose failures.

- Security in testing: Always use environment variables for credentials, restrict network access in dev mode, and use short-lived tokens to minimize risk during testing.
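The credential hygiene described above can be sketched with the standard library alone. This is a minimal sketch under stated assumptions: the variable name `MCP_TEST_API_KEY` and the token dictionary format are hypothetical, and a real deployment would issue tokens from its actual auth provider.

```python
"""Sketch: read test credentials from the environment and mint short-lived tokens.

MCP_TEST_API_KEY and the token format are hypothetical; adapt to your auth system.
"""
from __future__ import annotations

import os
import secrets
import time


def require_env(name: str) -> str:
    """Fail fast when a credential is missing instead of using a hardcoded fallback."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


def mint_test_token(ttl_seconds: int = 300) -> dict:
    """Create a random token that expires after ttl_seconds (default: 5 minutes)."""
    return {"token": secrets.token_urlsafe(32), "expires_at": time.time() + ttl_seconds}


def token_is_valid(token: dict, now: float | None = None) -> bool:
    """A token is valid only until its expiry timestamp."""
    now = time.time() if now is None else now
    return now < token["expires_at"]
```

Failing loudly on a missing variable keeps secrets out of source control, and a short TTL limits the damage if a test token leaks into logs.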

4. Comprehensive schema validation and error handling

- Strict schema adherence: Proper schema validation prevents subtle bugs and disastrous production errors. The MCP Inspector automatically checks for missing or mismatched parameters, but you should still maintain explicit unit and integration tests for tool schemas as regression coverage.

- Verbose logging: Enable detailed logging during development to capture both request/response cycles and context-specific errors. This practice reduces mean time to resolution (MTTR) for debugging by up to 40%.
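Schema validation and verbose logging can be combined in one small handler. The sketch below checks tool arguments against a JSON-Schema-style input schema, but it supports only `required`, `properties`, and primitive `type` checks. A real server should use a full JSON Schema validator. `SEARCH_SCHEMA`, `call_tool`, and the `{"ok": ...}` result shape are hypothetical.

```python
"""Sketch: validate tool arguments against a JSON-Schema-style input schema.

Only "required" and primitive "type" checks are handled; use a full JSON
Schema validator in production. The schema and tool are hypothetical.
"""
from __future__ import annotations

import logging

logging.basicConfig(level=logging.DEBUG)  # verbose logging during development
log = logging.getLogger("mcp-server")

PY_TYPES = {"string": str, "integer": int, "number": (int, float),
            "boolean": bool, "object": dict, "array": list}

SEARCH_SCHEMA = {
    "type": "object",
    "properties": {"query": {"type": "string"}, "limit": {"type": "integer"}},
    "required": ["query"],
}


def validate_args(schema: dict, args: dict) -> list[str]:
    errors = []
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required parameter '{name}'")
    props = schema.get("properties", {})
    for name, value in args.items():
        if name not in props:  # strict, like "additionalProperties": false
            errors.append(f"unexpected parameter '{name}'")
            continue
        expected = props[name].get("type")
        py_type = PY_TYPES.get(expected)
        if py_type is None:
            continue
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if not isinstance(value, py_type) or bool_as_number:
            errors.append(f"parameter '{name}' should be {expected}, got {type(value).__name__}")
    return errors


def call_tool(args: dict) -> dict:
    log.debug("request: %r", args)
    errors = validate_args(SEARCH_SCHEMA, args)
    if errors:
        log.warning("validation failed: %s", errors)
        return {"ok": False, "errors": errors}
    result = {"ok": True, "results": [f"hit for {args['query']}"][: args.get("limit", 10)]}
    log.debug("response: %r", result)
    return result
```

Returning structured error messages instead of raising lets the calling model correct its arguments. The debug logs record each request/response pair for later diagnosis.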

5. Package for reproducibility: use Docker

- Containerization is the new standard: Package MCP servers as Docker containers to encapsulate all dependencies and runtime configuration. This eliminates “it works on my machine” problems and ensures consistency from development to production.

- Why this matters: Docker-based servers saw a 60% reduction in deployment-related support tickets and enabled near-instant onboarding for end users. All they need is Docker, regardless of the host operating system or environment.

- Secure by default: Containerized endpoints benefit from image signing, SBOMs, continuous scanning, and host isolation, minimizing the blast radius of any compromise.

6. Optimize performance at the infrastructure and code levels

- Modern hardware: Use high-bandwidth GPUs (for example, NVIDIA A100) and optimize for NUMA architectures for latency-sensitive workloads.

- Kernel and runtime tuning: Use real-time kernels, configure CPU frequency governors, and use containers for dynamic resource allocation. 80% of organizations using advanced orchestration report major efficiency gains.

- Resource-aware scheduling: Adopt predictive or ML-driven load balancing across servers, and tune memory management for large-scale deployments.

- Case study: Microsoft's custom kernel tuning for MCP servers yielded a 30% performance boost and a 25% reduction in latency.
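As a lightweight stand-in for the predictive load balancing mentioned above, the sketch below routes each request to the backend with the lowest exponentially weighted moving average (EWMA) of observed latency. It uses a simple smoothing heuristic rather than an ML model. The backend names and the `alpha` value are hypothetical.

```python
"""Sketch: latency-aware routing using an EWMA latency estimate per backend.

A simple stand-in for "predictive" load balancing; no ML model is involved.
Backend names and the smoothing factor are hypothetical.
"""
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    ewma_ms: float = 0.0  # predicted latency of the next request
    samples: int = 0


@dataclass
class EwmaBalancer:
    backends: list[Backend]
    alpha: float = 0.3  # weight of the newest sample (0 < alpha <= 1)

    def pick(self) -> Backend:
        # Try unmeasured backends first so each one gets an initial estimate.
        untried = [b for b in self.backends if b.samples == 0]
        if untried:
            return untried[0]
        # Otherwise route to the lowest predicted latency.
        return min(self.backends, key=lambda b: b.ewma_ms)

    def record(self, backend: Backend, latency_ms: float) -> None:
        """Fold an observed latency into the backend's running estimate."""
        if backend.samples == 0:
            backend.ewma_ms = latency_ms
        else:
            backend.ewma_ms = self.alpha * latency_ms + (1 - self.alpha) * backend.ewma_ms
        backend.samples += 1
```

A higher `alpha` reacts faster to latency spikes but is noisier. A lower value smooths out transient blips. A production balancer would also account for in-flight requests and health checks.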

7. Version control, documentation, and operational best practices

- Semantic versioning: Tag MCP server releases and tools semantically, and maintain a changelog. This streamlines client upgrades and rollbacks.

- Documentation: Provide clear API references, environment requirements, tool descriptions, and sample requests. Well-documented MCP servers see 2x higher developer adoption rates than undocumented ones.

- Operational hygiene: Use a version-controlled repository.
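Semantic versioning pays off when clients can reason about upgrades mechanically. The sketch below assumes plain `MAJOR.MINOR.PATCH` tags with an optional leading `v`, and it ignores pre-release and build metadata. It classifies a version bump and checks whether a client built against one release can use another. The function names and version numbers are hypothetical.

```python
"""Sketch: use semantic versions to decide whether a client can safely upgrade.

Assumes plain MAJOR.MINOR.PATCH tags (optional leading "v"), with no
pre-release or build metadata. Names and versions are hypothetical.
"""
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        major, minor, patch = (int(part) for part in text.lstrip("v").split("."))
        return cls(major, minor, patch)


def bump_kind(old: str, new: str) -> str:
    a, b = Version.parse(old), Version.parse(new)
    if b.major != a.major:
        return "major"  # breaking change: clients must review the changelog
    if b.minor != a.minor:
        return "minor"  # backwards-compatible additions (new tools or parameters)
    if b.patch != a.patch:
        return "patch"  # bug fixes only
    return "none"


def is_compatible(server: str, client_requires: str) -> bool:
    """Compatible when the major versions match and the server is not older."""
    s, c = Version.parse(server), Version.parse(client_requires)
    return s.major == c.major and s >= c  # tuple comparison: major, minor, patch
```

Because `Version` is a tuple, ordinary comparison orders releases correctly. A client pinned to `1.4.2` therefore accepts `1.5.0` but rejects `2.0.0` until it has been updated for the breaking change.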

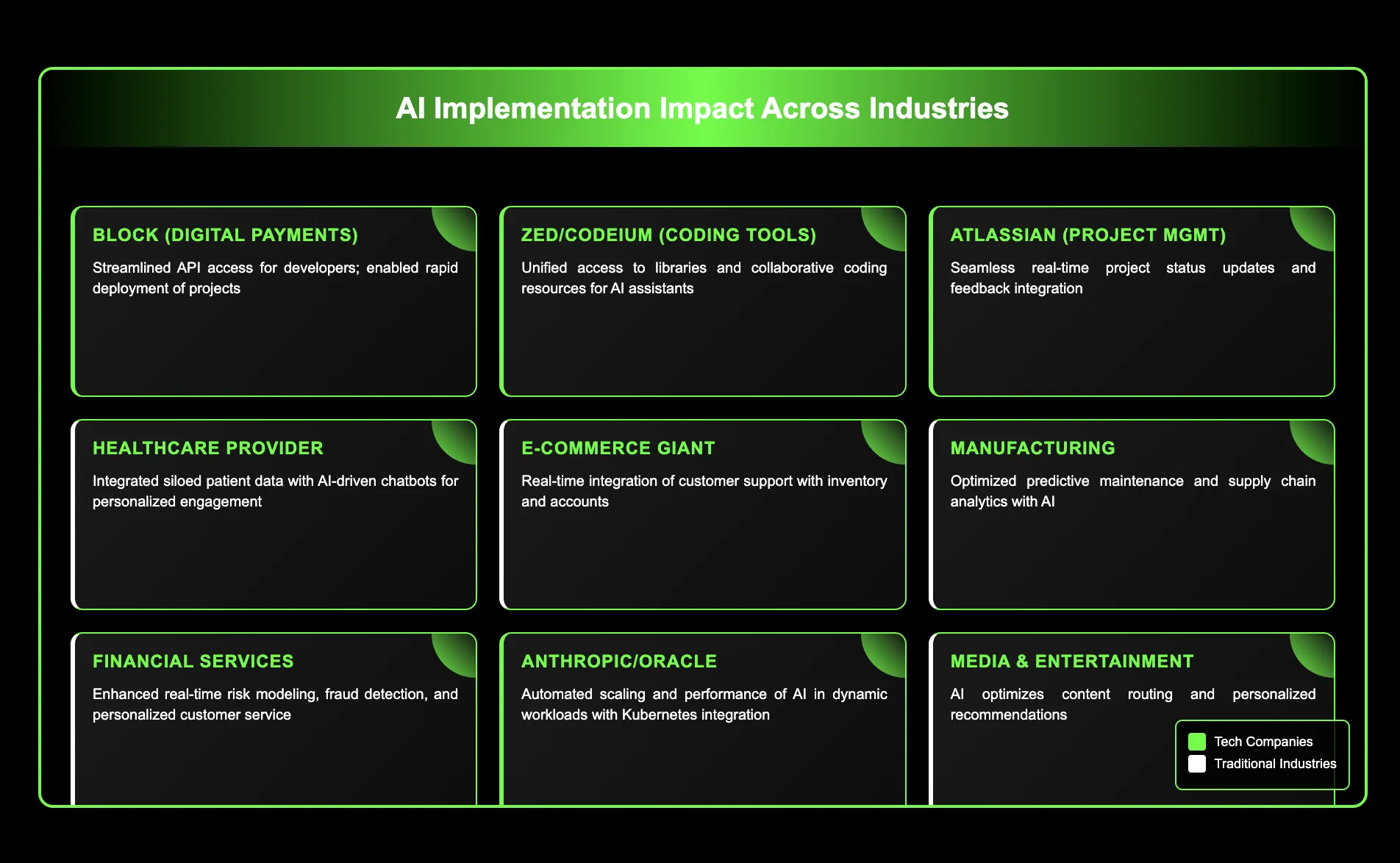

Real-world impact: MCP server adoption and benefits

The adoption of Model Context Protocol (MCP) servers is reshaping industry standards by improving automation, data integration, developer productivity, and AI performance at scale. Here is an extended, data-rich comparison across industries and use cases.

| Organization / Industry | Impact / Result | Quantitative benefits | Key insights |
|---|---|---|---|
| Block (digital payments) | Streamlined API access for developers; enabled rapid project deployment | 25% increase in project completion rates | Focus shifted from API troubleshooting to innovation and project delivery. |
| Zed / Codeium (coding tools) | Unified access to libraries and collaborative coding resources for AI assistants | 30% reduction in troubleshooting time | Improved user engagement and faster coding; strong growth in digital tool adoption. |
| Atlassian (project management) | Seamless real-time project status updates and comment integration | 15% increase in product usage; higher user satisfaction | AI workflows improved project visibility and team performance. |
| Healthcare provider | Integrated patient data | 40% increase in patient engagement and satisfaction | AI tools support proactive care, more timely interventions, and improved health outcomes. |
| E-commerce giant | Real-time integration of customer support with inventory and accounts | 50% reduction in response time for customer inquiries | Significantly improved sales conversion and customer retention. |
| Manufacturing | AI-optimized predictive maintenance and supply chain analytics | 25% reduction in inventory costs; up to 50% drop in downtime | Improved supply forecasting, fewer defects, and energy savings of up to 20%. |
| Financial services | Improved real-time risk modeling, fraud detection, and personalized customer service | Up to 5× faster AI processing; improved risk accuracy; reduced fraud losses | AI models access secure live data for sharper decisions, reducing costs and improving compliance. |
| Anthropic / Oracle | Automated scaling and AI performance across dynamic workloads with Kubernetes integration | 30% reduction in compute costs, 25% reliability boost, 40% faster deployment | Advanced monitoring tools quickly surfaced anomalies, increasing user satisfaction by 25%. |
| Media and entertainment | AI-optimized content routing and personalized recommendations | Consistent user experience during peak traffic | Dynamic load balancing enables reliable content delivery and high customer engagement. |

Additional highlights

These results illustrate how MCP servers are becoming a critical catalyst for modern, context-rich, agentic workflows. They deliver faster results, deeper insights, and a new level of operational excellence for technology organizations.

Conclusion

By adopting these seven data-backed best practices (intentional tool design, proactive security, comprehensive testing, containerization, performance tuning, solid operational discipline, and meticulous documentation), engineering teams can build, test, and package reliable, secure, production-ready MCP servers. With evidence showing gains in user satisfaction, developer productivity, and business outcomes, mastering these disciplines translates directly into organizational advantage in the era of agentic software and AI-driven integration.


Michal Sutter is a data science professional with a master's degree in data science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.