For more than 30 years, science photographer Felice Frankel has helped faculty, researchers, and MIT students communicate their work visually. Over that period, she has watched the development of various tools to support the creation of compelling images. Some are helpful, and some run counter to the effort to produce a trustworthy and complete representation of research. In a recent opinion piece published in *Nature* magazine, Frankel discusses the burgeoning use of generative artificial intelligence (GenAI) in images and the challenges and implications it has for communicating research. On a more personal note, she questions whether there will always be a place for a science photographer in the research community.

Q: You have mentioned that as soon as a photo is taken, the image can be considered "manipulated." There are ways you have manipulated your own images to create a visual that more successfully communicates the desired message. Where is the line between acceptable and unacceptable manipulation?

A: In the broadest sense, the decisions made about how to frame and structure the content of an image, along with the tools used to create it, are already a manipulation of reality. We need to remember that an image is merely a representation of the thing, and not the thing itself. Decisions have to be made when creating the image. The critical issue is not to manipulate the data, and in the case of most images, the data is the structure. For example, for an image I made some time ago, I digitally removed the Petri dish in which a yeast colony was growing, to bring attention to the stunning morphology of the colony. The data in that image is the morphology of the colony. I did not manipulate that data. However, I always indicate in the text if I have done something to an image. I discuss the idea of image enhancement in my handbook, "The Visual Elements: Photography."

Q: What can researchers do to ensure that their research is communicated correctly and ethically?

A: With the advent of AI, I see three main issues concerning visual representation: the difference between illustration and documentation, the ethics around digital manipulation, and a continuing need for researchers to be trained in visual communication. For years, I have been trying to develop a visual literacy program for present and future cohorts of science and engineering researchers. MIT has a communication requirement that mostly addresses writing, but what about the visual, which is no longer tangential to a journal submission? I will bet that most readers of scientific articles go right to the figures after they read the abstract.

We need to require students to learn how to critically look at a published graph or image and decide whether there is something odd going on with it. We need to discuss the ethics of "nudging" an image to look a predetermined way. In the article, I describe an incident in which a student altered one of my images (without asking me) to match what the student wanted to communicate visually. I did not permit it, of course, and I was disappointed that the ethics of such an alteration were not considered. At the very least, we need to develop conversations on campus and, even better, create a visual literacy requirement alongside the writing requirement.

Q: Generative AI is not going away. What do you see as the future of communicating science visually?

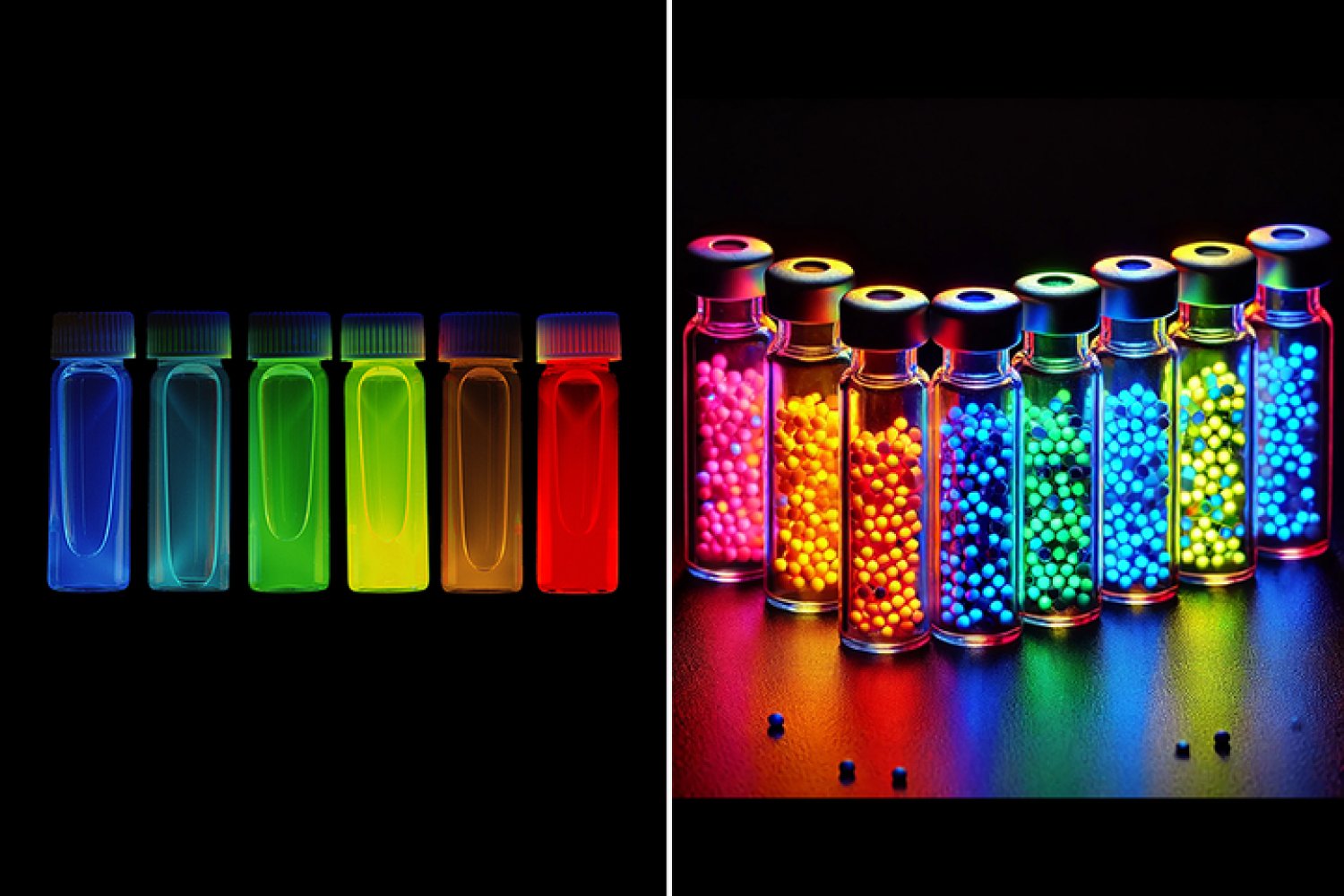

A: For the *Nature* article, I decided that a powerful way to question the use of AI in generating images was by example. I used one of the diffusion models to create an image using the following prompt:

"Create a photo of Moungi Bawendi's nanocrystals in vials against a black background, fluorescing at different wavelengths, depending on their size, when excited with UV light."

The results of my AI experimentation were often cartoon-like images that could hardly pass as reality, let alone documentation, but there will be a time when they will. In conversations with colleagues in the research and computer science communities, everyone agrees that we should have clear standards on what is and is not allowed. And most importantly, a GenAI visual should never be allowed as documentation.

But AI-generated visuals will, in fact, be useful for illustration purposes. If an AI-generated visual is to be submitted to a journal (or, for that matter, be shown in a presentation), I believe the researcher must:

- Clearly label whether an image was created by an AI model;

- Indicate which model was used;

- Include the prompt that was used; and

- Include the image, if there is one, that was used to help the prompt.