LLM-based agents are increasingly used in a wide range of applications because they can handle complex tasks and take on multiple roles. A key component of these agents is memory, which lets them store and recall information, reflect on past knowledge, and make informed decisions. Memory is essential in tasks involving long-term interaction or role-play, where it captures past experiences and helps keep the agent's role consistent. It enables the agent to remember past interactions with the environment and use that information to guide future behavior, making it a core module of these systems.

Despite the growing emphasis on improving memory mechanisms in LLM-based agents, current models are often built with different implementation strategies and lack a standardized framework. This fragmentation creates challenges for developers and researchers, who struggle to test or compare models because of inconsistent designs. In addition, common features such as data retrieval and summarization are frequently reimplemented from one model to the next, leading to inefficiencies. Many academic models are also tightly coupled to specific agent architectures, which makes them difficult to reuse or adapt for other systems. This highlights the need for a unified, modular memory framework for LLM agents.

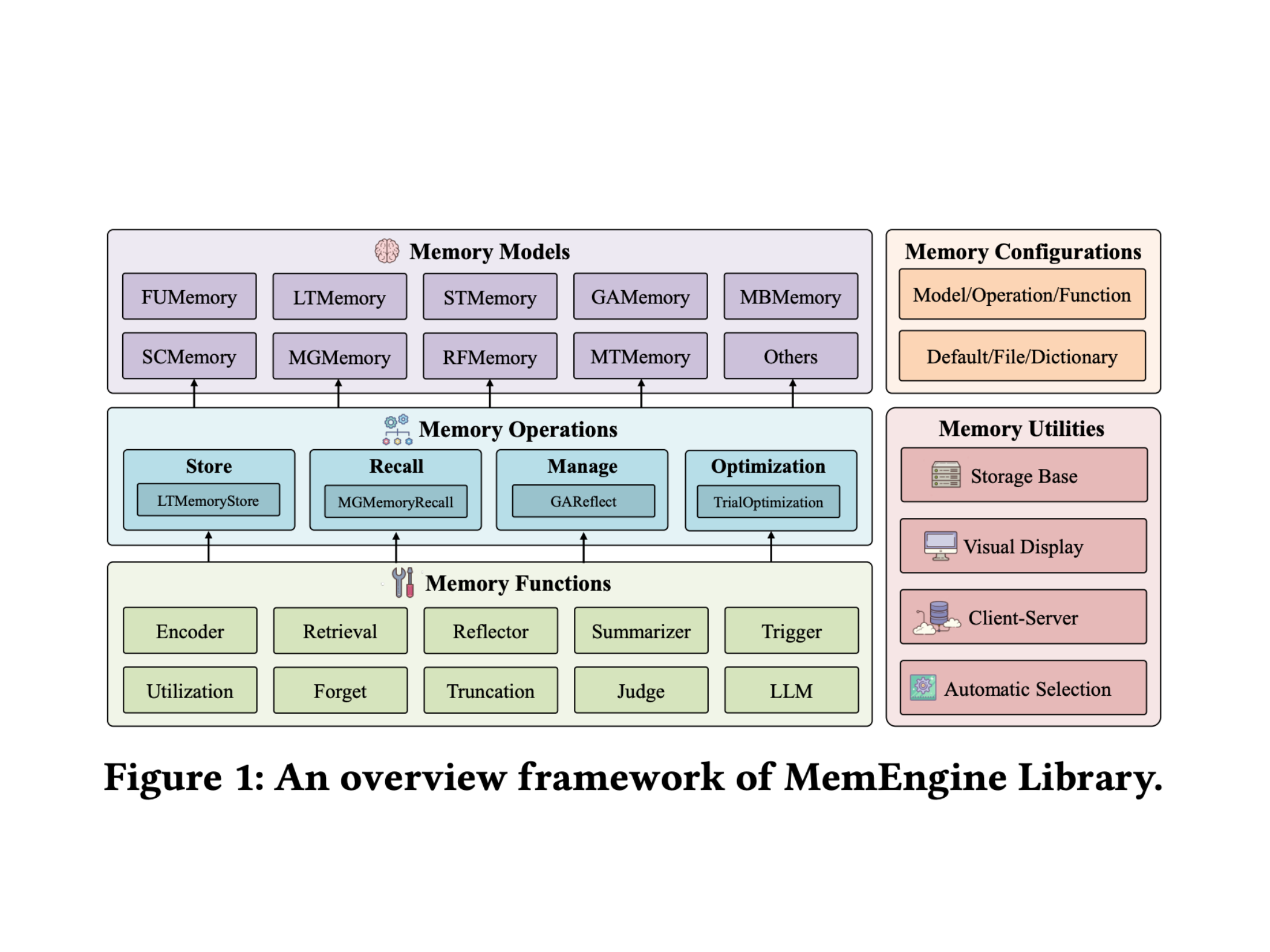

Researchers from Renmin University of China and Huawei have developed MemEngine, a unified and modular library designed to support the development and deployment of advanced memory models for LLM-based agents. MemEngine organizes memory systems into three hierarchical levels (functions, operations, and models), enabling efficient and reusable designs. It supports many existing memory models, allowing users to switch between, configure, and extend them easily. The framework also includes tools for tuning hyperparameters, recording memory states, and integrating with popular agents such as AutoGPT. With comprehensive documentation and open-source access, MemEngine aims to streamline research on memory models and encourage broader adoption.

MemEngine is a unified and modular library designed to enhance the memory capabilities of LLM-based agents. Its architecture consists of three layers: a foundational layer with basic functions; an intermediate layer that handles core memory operations (such as storing, recalling, managing, and optimizing information); and a top layer containing a collection of advanced memory models inspired by recent research. These include FUMemory (long-context memory), LTMemory (semantic retrieval), GAMemory (self-reflective memory), and MTMemory (tree-structured memory), among others. Each model is implemented through standardized interfaces, which makes it easy to swap or combine them. The library also provides utility modules such as encoders, summarizers, retrievers, and judges, which are used to build and customize memory operations. In addition, MemEngine supports visualization, remote deployment, and automatic model selection, offering both local and server-based usage options.
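The article does not show MemEngine's actual classes or signatures, so the following is only a minimal, hypothetical Python sketch of the layering idea: small reusable functions (a toy encoder and retriever), operations (`store` and `recall`), and two memory models that share one interface so agent code can swap them freely. All names here (`encode`, `retrieve`, `FullMemory`, `RetrievalMemory`, `run_agent_step`) are illustrative assumptions, not MemEngine's API, and the bag-of-words encoder stands in for a real embedding model.

```python
import math
from collections import Counter
from typing import Dict, List, Protocol


# --- Function level (hypothetical): reusable building blocks ---
def encode(text: str) -> Counter:
    """Toy encoder: bag-of-words term counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, items: List[str], k: int) -> List[str]:
    """Retriever function: return the k stored items most similar to the query."""
    q = encode(query)
    return sorted(items, key=lambda s: cosine(q, encode(s)), reverse=True)[:k]


# --- Model level (hypothetical): every model exposes the same interface ---
class MemoryModel(Protocol):
    def store(self, observation: str) -> None: ...
    def recall(self, query: str) -> str: ...


class FullMemory:
    """Long-context style: recall returns everything stored, in insertion order."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def store(self, observation: str) -> None:  # operation: storage
        self.items.append(observation)

    def recall(self, query: str) -> str:  # operation: recall (ignores the query)
        return "\n".join(self.items)


class RetrievalMemory:
    """Semantic-retrieval style: recall returns only the top-k relevant items."""

    def __init__(self, k: int = 1) -> None:
        self.k = k
        self.items: List[str] = []

    def store(self, observation: str) -> None:
        self.items.append(observation)

    def recall(self, query: str) -> str:
        return "\n".join(retrieve(query, self.items, self.k))


def run_agent_step(memory: MemoryModel, query: str) -> str:
    """Agent code depends only on the shared interface, so models are swappable."""
    return memory.recall(query)


# Usage: the same agent step runs unchanged against both models.
results: Dict[str, str] = {}
for mem in (FullMemory(), RetrievalMemory(k=1)):
    mem.store("Alice likes green tea")
    mem.store("The meeting is on Friday")
    results[type(mem).__name__] = run_agent_step(mem, "when is the meeting")
    print(type(mem).__name__, "->", repr(results[type(mem).__name__]))
```

The design point is that `run_agent_step` never changes when the memory model does; only the object passed in differs, which is the kind of plug-and-play swapping a standardized interface is meant to enable.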

Unlike many existing libraries that support only basic memory storage and retrieval, MemEngine stands out by supporting advanced functionality such as reflection, optimization, and customizable configuration. Its robust configuration module lets developers adjust hyperparameters and prompts at different levels, either through static files or dynamic inputs. Developers can use the default settings, configure parameters manually, or rely on automatic selection tailored to their task. The library also supports integration with tools such as vLLM and AutoGPT. For those building new memory models, MemEngine allows customization at the function, operation, and model levels and offers detailed documentation and examples. Compared with other agent and memory libraries, MemEngine provides a more complete, research-aligned memory framework.
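To illustrate what layered configuration (defaults, then static files, then dynamic inputs) typically looks like, here is a minimal, hypothetical Python sketch. The configuration keys, `DEFAULT_CONFIG`, `deep_merge`, and `load_config` are assumptions made for illustration and are not taken from MemEngine; the static file is simulated with a JSON string so the example stays self-contained.

```python
import json
from copy import deepcopy
from typing import Any, Dict

# Hypothetical defaults; these keys are illustrative, not MemEngine's real schema.
DEFAULT_CONFIG: Dict[str, Any] = {
    "model": "RetrievalMemory",
    "recall": {"top_k": 3, "prompt": "Summarize the relevant memories."},
    "store": {"max_items": 1000},
}


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge override into a copy of base; override wins on conflicts."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def load_config(static_json: str = "{}", **dynamic: Any) -> Dict[str, Any]:
    """Resolve settings in priority order: defaults < static file < dynamic inputs."""
    config = deep_merge(DEFAULT_CONFIG, json.loads(static_json))
    return deep_merge(config, dynamic)


# Usage: a static file changes top_k, then a runtime argument changes the prompt.
static_file_contents = '{"recall": {"top_k": 5}}'
cfg = load_config(static_file_contents, recall={"prompt": "List facts about the user."})
print(cfg["recall"])  # {'top_k': 5, 'prompt': 'List facts about the user.'}
```

Merging nested dictionaries rather than replacing them lets each layer override a single hyperparameter or prompt without restating the rest, and copying keeps the defaults untouched across calls.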

In conclusion, MemEngine is a unified and modular library designed to support the development of advanced memory models for LLM-based agents. While large language model agents have seen growing use across industries, their memory systems remain a critical area of focus. Despite much recent progress, no standardized framework for implementing memory models existed. MemEngine addresses this gap by offering a flexible and extensible platform that incorporates a range of cutting-edge approaches. It supports easy development and plug-and-play use. Looking ahead, the authors aim to extend the framework to multimodal memory, such as audio and visual data, for broader applications.


Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.