In this comprehensive tutorial, we walk through building a powerful multi-tool AI agent with LangGraph and Claude that handles a range of tasks: mathematical calculations, web search, weather lookups, text analysis, and real-time information retrieval. The tutorial begins by automating dependency installation so that setup is effortless even for beginners. It then introduces structured implementations of specialized tools: a restricted calculator, a web search utility built on DuckDuckGo, a mock weather information provider, a detailed text analyzer, and a current-time function. Finally, it shows how to integrate these tools into an agent architecture built with LangGraph, illustrating practical use through interactive examples and clear explanations so that both beginners and advanced developers can quickly deploy custom multi-functional AI agents.

import subprocess
import sys

def install_packages():
    packages = [
        "langgraph",
        "langchain",
        "langchain-anthropic",
        "langchain-community",
        "requests",
        "python-dotenv",
        "duckduckgo-search"
    ]
    for package in packages:
        try:
            # Use the current interpreter's pip so packages land in the active environment
            subprocess.check_call([sys.executable, "-m", "pip", "install", package, "-q"])
            print(f"✓ Installed {package}")
        except subprocess.CalledProcessError:
            print(f"✗ Failed to install {package}")

print("Installing required packages...")
install_packages()
print("Installation complete!\n")

We automate the installation of the Python packages required to build a LangGraph-based multi-tool agent. The script uses subprocess to run pip commands quietly and reports, package by package, whether each one (from LangChain components to web search and environment-handling tools) installed successfully or failed. This streamlines environment preparation, which makes the notebook portable and beginner-friendly.
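Before reinstalling everything, it can help to check which packages are already importable. The sketch below is one way to do that with the standard library. The helper name `missing_packages` and the mapping from pip names to import names are assumptions made for illustration; they are not part of the original notebook.

```python
import importlib.util
from typing import Dict, List

def missing_packages(pip_to_module: Dict[str, str]) -> List[str]:
    """Return the pip names whose top-level import module cannot be found."""
    missing = []
    for pip_name, module_name in pip_to_module.items():
        # find_spec returns None when a top-level module is not installed
        if importlib.util.find_spec(module_name) is None:
            missing.append(pip_name)
    return missing

# Hypothetical mapping: pip distribution name -> top-level import name
wanted = {
    "requests": "requests",
    "python-dotenv": "dotenv",
    "duckduckgo-search": "duckduckgo_search",
}
print("Still to install:", missing_packages(wanted))
```

Only the names returned by the helper would then need to be passed to pip, which keeps repeated notebook runs fast.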

import os
import json
import math
import requests
from typing import Dict, List, Any, Annotated, TypedDict
from datetime import datetime
import operator
from langchain_core.messages import BaseMessage, HumanMessage, AIMessage, ToolMessage
from langchain_core.tools import tool
from langchain_anthropic import ChatAnthropic
from langgraph.graph import StateGraph, START, END
from langgraph.prebuilt import ToolNode
from langgraph.checkpoint.memory import MemorySaver
from duckduckgo_search import DDGS

We import all the libraries and modules needed to build the multi-tool AI agent. These include standard Python libraries such as os, json, math, and datetime for general functionality, and external libraries such as requests for HTTP calls and duckduckgo_search for web search. The LangChain and LangGraph ecosystems provide message types, the tool decorator, state graph components, and checkpointing utilities, while ChatAnthropic enables integration with the Claude model for conversational intelligence. These imports are the building blocks for defining the tools, the agent workflow, and their interactions.

os.environ["ANTHROPIC_API_KEY"] = "Use Your API Key Here"
ANTHROPIC_API_KEY = os.getenv("ANTHROPIC_API_KEY")

We set and retrieve the Anthropic API key required to authenticate with the Claude models. The os.environ line assigns your API key (replace the placeholder with a valid key), while os.getenv reads it back for later use when initializing the model. This keeps the key accessible throughout the script without hardcoding it in several places.
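One detail worth noting: a placeholder string is still a non-empty value, so a later truthiness check on the key would treat it as present. The following sketch shows one way to treat the placeholder as "no key". The function name `load_api_key` and its parameters are hypothetical; only the variable name and the placeholder text come from the tutorial.

```python
import os
from typing import Mapping, Optional

PLACEHOLDER = "Use Your API Key Here"  # the placeholder string used in the tutorial

def load_api_key(env: Mapping[str, str] = os.environ,
                 name: str = "ANTHROPIC_API_KEY") -> Optional[str]:
    """Return the key if one is set and is not the placeholder, else None."""
    value = env.get(name, "").strip()
    if not value or value == PLACEHOLDER:
        return None
    return value

# Passing a plain dict makes the behaviour easy to check without touching os.environ
print(load_api_key({"ANTHROPIC_API_KEY": PLACEHOLDER}))
```

With a helper like this, the "is a key available?" decision depends on a real value being set rather than on any non-empty string.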

from typing import TypedDict

class AgentState(TypedDict):
    # operator.add tells LangGraph to append new messages to the existing list
    messages: Annotated[List[BaseMessage], operator.add]

@tool
def calculator(expression: str) -> str:
    """
    Perform mathematical calculations. Supports basic arithmetic, trigonometry, and more.

    Args:
        expression: Mathematical expression as a string (e.g., "2 + 3 * 4", "sin(3.14159/2)")

    Returns:
        Result of the calculation as a string
    """
    try:
        # Only these names are visible to the evaluated expression
        allowed_names = {
            'abs': abs, 'round': round, 'min': min, 'max': max,
            'sum': sum, 'pow': pow, 'sqrt': math.sqrt,
            'sin': math.sin, 'cos': math.cos, 'tan': math.tan,
            'log': math.log, 'log10': math.log10, 'exp': math.exp,
            'pi': math.pi, 'e': math.e
        }
        # Accept the common caret notation for exponentiation
        expression = expression.replace('^', '**')
        result = eval(expression, {"__builtins__": {}}, allowed_names)
        return f"Result: {result}"
    except Exception as e:
        return f"Error in calculation: {str(e)}"

We define the agent's internal state and implement a calculator tool. The AgentState class uses TypedDict to structure the agent's memory, specifically tracking the messages exchanged during the conversation. The calculator function, decorated with @tool to register it as a tool the agent can call, evaluates mathematical expressions with eval while restricting the available names to a predefined set of built-ins and math functions, and it replaces the common ^ syntax with Python's ** exponentiation operator. This lets the tool handle both simple arithmetic and more advanced functions such as trigonometry and logarithms while reducing the risk of executing dangerous code.
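Restricting the names passed to eval narrows what an expression can reach, but it is not a complete sandbox. As a stricter alternative (a swapped-in technique, not the tutorial's method), the sketch below walks the expression's syntax tree and accepts only numbers, a few operators, and whitelisted functions. The names `safe_eval` and `_eval` are hypothetical.

```python
import ast
import math
import operator

_BIN_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.Pow: operator.pow, ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}
_FUNCS = {"sqrt": math.sqrt, "sin": math.sin, "cos": math.cos, "tan": math.tan,
          "log": math.log, "log10": math.log10, "exp": math.exp,
          "abs": abs, "round": round}
_CONSTS = {"pi": math.pi, "e": math.e}

def _eval(node):
    # Plain numbers (bool is excluded even though it subclasses int)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_eval(node.operand))
    if isinstance(node, ast.Name) and node.id in _CONSTS:
        return _CONSTS[node.id]
    # Calls are allowed only to whitelisted functions, positional arguments only
    if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
            and node.func.id in _FUNCS and not node.keywords):
        return _FUNCS[node.func.id](*[_eval(arg) for arg in node.args])
    raise ValueError(f"Unsupported expression element: {type(node).__name__}")

def safe_eval(expression: str) -> float:
    """Evaluate an arithmetic expression without calling eval()."""
    tree = ast.parse(expression.replace("^", "**"), mode="eval")
    return _eval(tree.body)

print(safe_eval("2 + 3 * 4"))
```

Anything outside the whitelist, such as attribute access or a call to an unknown name, raises ValueError instead of being executed. Very large exponents can still be slow, so a production tool might also cap operand sizes.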

@tool
def web_search(query: str, num_results: int = 3) -> str:
    """
    Search the web for information using DuckDuckGo.

    Args:
        query: Search query string
        num_results: Number of results to return (default: 3, max: 10)

    Returns:
        Search results as formatted string
    """
    try:
        # Clamp the requested count to the 1..10 range
        num_results = min(max(num_results, 1), 10)
        with DDGS() as ddgs:
            results = list(ddgs.text(query, max_results=num_results))
        if not results:
            return f"No search results found for: {query}"
        formatted_results = f"Search results for '{query}':\n\n"
        for i, result in enumerate(results, 1):
            formatted_results += f"{i}. **{result['title']}**\n"
            formatted_results += f"   {result['body']}\n"
            formatted_results += f"   Source: {result['href']}\n\n"
        return formatted_results
    except Exception as e:
        return f"Error performing web search: {str(e)}"

We define a web_search tool that lets the agent retrieve real-time information from the internet using DuckDuckGo search via the duckduckgo_search Python package. The tool accepts a search query and an optional num_results parameter, which it clamps to between 1 and 10. It opens a DuckDuckGo search session, fetches the results, and formats them for readable display. If no results are found or an error occurs, the function handles it gracefully by returning an informative message. This tool gives the agent real-time search capability, improving its responsiveness and usefulness.
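The clamping and formatting steps can be exercised without a network call. The sketch below separates them into two small functions and feeds them a made-up result; the function names and the sample record are illustrative assumptions, while the title, body, and href keys are the ones the tool reads.

```python
from typing import Dict, List

def clamp_results(num_results: int, low: int = 1, high: int = 10) -> int:
    """Keep the requested result count inside [low, high]."""
    return min(max(num_results, low), high)

def format_results(query: str, results: List[Dict[str, str]]) -> str:
    """Render search hits as a numbered, human-readable list."""
    if not results:
        return f"No search results found for: {query}"
    lines = [f"Search results for '{query}':", ""]
    for i, result in enumerate(results, 1):
        lines.append(f"{i}. **{result.get('title', '')}**")
        lines.append(f"   {result.get('body', '')}")
        lines.append(f"   Source: {result.get('href', '')}")
        lines.append("")
    return "\n".join(lines)

# Made-up sample record, for illustration only
sample = [{"title": "Example", "body": "A sample snippet.", "href": "https://example.com"}]
print(clamp_results(50))
print(format_results("python", sample))
```

Keeping formatting separate from the search call makes it straightforward to test the output shape with stubbed data.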

@tool
def weather_info(city: str) -> str:
    """
    Get current weather information for a city using OpenWeatherMap API.
    Note: This is a mock implementation for demo purposes.

    Args:
        city: Name of the city

    Returns:
        Weather information as a string
    """
    # Simulated data; no live API is called
    mock_weather = {
        "new york": {"temp": 22, "condition": "Partly Cloudy", "humidity": 65},
        "london": {"temp": 15, "condition": "Rainy", "humidity": 80},
        "tokyo": {"temp": 28, "condition": "Sunny", "humidity": 70},
        "paris": {"temp": 18, "condition": "Overcast", "humidity": 75}
    }
    city_lower = city.lower()
    if city_lower in mock_weather:
        weather = mock_weather[city_lower]
        return f"Weather in {city}:\n" \
               f"Temperature: {weather['temp']}°C\n" \
               f"Condition: {weather['condition']}\n" \
               f"Humidity: {weather['humidity']}%"
    else:
        return f"Weather data not available for {city}. (This is a demo with limited cities: New York, London, Tokyo, Paris)"

We define a weather_info tool that simulates retrieving current weather data for a given city. Although it does not connect to a live weather API, it uses a predefined dictionary of mock data for major cities such as New York, London, Tokyo, and Paris. On receiving a city name, the function normalizes it to lowercase and checks whether it is present in the mock dataset. If it is found, the function returns the temperature, weather condition, and humidity in a readable format. Otherwise, it informs the user that weather data is not available. This tool serves as a placeholder and can later be upgraded to fetch live data from a real weather API.
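To make the later switch to a live service easier, the lookup can be hidden behind a small provider function. This is a minimal sketch under that assumption: `WeatherProvider`, `mock_provider`, and `weather_report` are hypothetical names, and the two mock records reuse values from the tutorial's dictionary.

```python
from typing import Callable, Dict, Optional

WeatherRecord = Dict[str, object]
WeatherProvider = Callable[[str], Optional[WeatherRecord]]

MOCK_WEATHER: Dict[str, WeatherRecord] = {
    "tokyo": {"temp": 28, "condition": "Sunny", "humidity": 70},
    "london": {"temp": 15, "condition": "Rainy", "humidity": 80},
}

def mock_provider(city: str) -> Optional[WeatherRecord]:
    # Normalise whitespace and case before the lookup
    return MOCK_WEATHER.get(city.strip().lower())

def weather_report(city: str, provider: WeatherProvider = mock_provider) -> str:
    """Format a report from whichever provider is plugged in."""
    record = provider(city)
    if record is None:
        return f"Weather data not available for {city}."
    return (f"Weather in {city}:\n"
            f"Temperature: {record['temp']}°C\n"
            f"Condition: {record['condition']}\n"
            f"Humidity: {record['humidity']}%")

print(weather_report("Tokyo"))
```

A real API client would only need to implement the same provider signature, returning a record or None, and the formatting code would stay unchanged.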

@tool
def text_analyzer(text: str) -> str:
    """
    Analyze text and provide statistics like word count, character count, etc.

    Args:
        text: Text to analyze

    Returns:
        Text analysis results
    """
    if not text.strip():
        return "Please provide text to analyze."
    words = text.split()
    # Treat '.', '!' and '?' as sentence terminators and split once,
    # so each sentence is counted a single time
    sentences = text.replace('!', '.').replace('?', '.').split('.')
    sentences = [s.strip() for s in sentences if s.strip()]
    analysis = f"Text Analysis Results:\n"
    analysis += f"• Characters (with spaces): {len(text)}\n"
    analysis += f"• Characters (without spaces): {len(text.replace(' ', ''))}\n"
    analysis += f"• Words: {len(words)}\n"
    analysis += f"• Sentences: {len(sentences)}\n"
    analysis += f"• Average words per sentence: {len(words) / max(len(sentences), 1):.1f}\n"
    analysis += f"• Most common word: {max(set(words), key=words.count) if words else 'N/A'}"
    return analysis

The text_analyzer tool provides a statistical analysis of a given text input. It calculates metrics such as character count (with and without spaces), word count, sentence count, and average words per sentence, and it identifies the most frequently occurring word. The tool handles empty input gracefully by prompting the user to provide valid text. It relies on simple string operations and Python's set and max functions to extract this information, which makes it a useful utility for language analysis or content quality checks in the AI agent's toolkit.
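The same statistics can also be computed with a regular expression for sentence boundaries and collections.Counter for word frequency. This is an alternative sketch, not the tutorial's implementation; the function name `analyze` and the returned dictionary keys are assumptions, and words are lowercased and stripped of punctuation so that "Is" and "is" count as the same word.

```python
import re
from collections import Counter
from typing import Dict, Union

def analyze(text: str) -> Dict[str, Union[int, float, str]]:
    """Return basic word and sentence statistics for a piece of text."""
    words = re.findall(r"[A-Za-z0-9']+", text.lower())
    # One split on runs of terminators, so each sentence is counted once
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    most_common = Counter(words).most_common(1)
    return {
        "words": len(words),
        "sentences": len(sentences),
        "avg_words_per_sentence": round(len(words) / max(len(sentences), 1), 1),
        "most_common_word": most_common[0][0] if most_common else "N/A",
    }

print(analyze("LangGraph is great. Is it? Yes!"))
```

Counter avoids the repeated list scans that max with words.count performs, which matters for longer inputs.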

@tool
def current_time() -> str:
    """
    Get the current date and time.

    Returns:
        Current date and time as a formatted string
    """
    # Local system time, formatted as YYYY-MM-DD HH:MM:SS
    now = datetime.now()
    return f"Current date and time: {now.strftime('%Y-%m-%d %H:%M:%S')}"

The current_time tool provides a simple way to retrieve the current system date and time in a human-readable format. Using Python's datetime module, it captures the present moment and formats it as YYYY-MM-DD HH:MM:SS. This utility is particularly useful for time-stamping responses or answering user questions about the current date and time within the AI agent's interaction flow.
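Because datetime.now() returns the machine's local time without a timezone, the answer can be ambiguous when the agent runs on a remote server. A possible variant, sketched here with hypothetical function names, reports UTC explicitly.

```python
from datetime import datetime, timezone

def format_timestamp(moment: datetime) -> str:
    """Format as YYYY-MM-DD HH:MM:SS plus the timezone name, if any."""
    # %Z is empty for naive datetimes, so strip the trailing space in that case
    return moment.strftime("%Y-%m-%d %H:%M:%S %Z").strip()

def current_time_utc() -> str:
    return f"Current date and time: {format_timestamp(datetime.now(timezone.utc))}"

print(current_time_utc())
```

Separating the formatting from the clock read also makes the format easy to check against a fixed datetime.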

tools = [calculator, web_search, weather_info, text_analyzer, current_time]

def create_llm():
    if ANTHROPIC_API_KEY:
        return ChatAnthropic(
            model="claude-3-haiku-20240307",
            temperature=0.1,
            max_tokens=1024
        )
    else:
        # Offline fallback that picks a tool by keyword matching
        class MockLLM:
            def invoke(self, messages):
                last_message = messages[-1].content if messages else ""
                if any(word in last_message.lower() for word in ['calculate', 'math', '+', '-', '*', '/', 'sqrt', 'sin', 'cos']):
                    import re
                    numbers = re.findall(r'[\d\+\-\*/\.\(\)\s\w]+', last_message)
                    expr = numbers[0] if numbers else "2+2"
                    return AIMessage(content="I'll help you with that calculation.",
                                     tool_calls=[{"name": "calculator", "args": {"expression": expr.strip()}, "id": "calc1"}])
                elif any(word in last_message.lower() for word in ['search', 'find', 'look up', 'information about']):
                    query = last_message.replace('search for', '').replace('find', '').replace('look up', '').strip()
                    if not query or len(query) [...]

We initialize the language model that powers the AI agent. If a valid Anthropic API key is available, it uses the Claude 3 Haiku model for high-quality responses. Without an API key, a MockLLM is defined to simulate basic tool routing based on keyword matching, allowing the agent to run offline with limited capabilities. The bind_tools method connects the defined tools to the model, allowing it to invoke them when needed.
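The keyword-matching idea behind the offline fallback can be illustrated on its own. The sketch below is not the tutorial's MockLLM; the keyword table and the function name `route` are hypothetical, and only the tool names are taken from the tools list.

```python
from typing import Optional

# Hypothetical keyword table; the keys are the tutorial's tool names
KEYWORDS = {
    "calculator": ("calculate", "sqrt", "sin(", "cos("),
    "web_search": ("search", "look up", "information about"),
    "weather_info": ("weather", "temperature"),
    "current_time": ("what time", "current date"),
    "text_analyzer": ("analyze",),
}

def route(message: str) -> Optional[str]:
    """Return the first tool whose keywords appear in the message, else None."""
    text = message.lower()
    for tool_name, keywords in KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return tool_name
    return None

print(route("What's the weather like in Tokyo?"))
```

Substring matching like this is brittle (the first matching entry wins), which is why it is only suitable as a fallback for demos without an API key.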

def agent_node(state: AgentState) -> Dict[str, Any]:
    """Main agent node that processes messages and decides on tool usage."""
    messages = state["messages"]
    response = llm_with_tools.invoke(messages)
    # Returning a one-element list lets the reducer append it to the history
    return {"messages": [response]}

def should_continue(state: AgentState) -> str:
    """Determine whether to continue with tool calls or end."""
    last_message = state["messages"][-1]
    if hasattr(last_message, 'tool_calls') and last_message.tool_calls:
        return "tools"
    return END

We define the agent's main decision-making logic. The agent_node function takes the incoming messages, invokes the language model (with tools bound), and returns the model's response. The should_continue function then checks whether the model's response includes tool calls. If it does, control is routed to the tools node for execution; otherwise, the flow is directed to END to finish the interaction. Together, these functions enable dynamic, conditional transitions in the agent's workflow.
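The two mechanisms at work here, appending a node's output to the message history and routing on the presence of tool calls, can be tried in plain Python. In this sketch `FakeMessage`, `merge_state`, and the local `END` string are stand-ins introduced for illustration; they are not LangChain or LangGraph objects.

```python
import operator
from dataclasses import dataclass, field
from typing import Any, Dict, List

END = "__end__"  # stand-in for LangGraph's END marker (assumption for this sketch)

@dataclass
class FakeMessage:
    """Minimal stand-in for an AI message; not a LangChain class."""
    content: str
    tool_calls: List[Dict[str, Any]] = field(default_factory=list)

def merge_state(state: Dict[str, list], update: Dict[str, list]) -> Dict[str, list]:
    # Mirrors the operator.add reducer declared on AgentState.messages
    return {"messages": operator.add(state["messages"], update["messages"])}

def should_continue(state: Dict[str, list]) -> str:
    last_message = state["messages"][-1]
    return "tools" if getattr(last_message, "tool_calls", None) else END

state = {"messages": [FakeMessage("What's 15 * 7 + 23?")]}
reply = FakeMessage("", tool_calls=[
    {"name": "calculator", "args": {"expression": "15 * 7 + 23"}, "id": "calc1"}
])
state = merge_state(state, {"messages": [reply]})
print(len(state["messages"]), should_continue(state))
```

The node returns only the new message, and the reducer is what turns that partial update into a growing conversation history.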

def create_agent_graph():
    tool_node = ToolNode(tools)
    workflow = StateGraph(AgentState)
    workflow.add_node("agent", agent_node)
    workflow.add_node("tools", tool_node)
    # Entry point, conditional routing, and the loop back from tools to agent
    workflow.add_edge(START, "agent")
    workflow.add_conditional_edges("agent", should_continue, {"tools": "tools", END: END})
    workflow.add_edge("tools", "agent")
    memory = MemorySaver()
    app = workflow.compile(checkpointer=memory)
    return app

print("Creating LangGraph Multi-Tool Agent...")
agent = create_agent_graph()
print("✓ Agent created successfully!\n")

We build the LangGraph-powered workflow that defines the AI agent's operational structure. It initializes a ToolNode to handle tool execution and uses a StateGraph to organize the flow between the agent's decisions and tool use. Nodes and edges are added to manage the transitions: starting at the agent, routing conditionally to the tools, and looping back to the agent as needed. A MemorySaver is attached as the checkpointer to keep conversation state across turns. The graph is compiled into an executable application (app), yielding a structured, memory-aware multi-tool agent ready for deployment.
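The control flow that the edges describe can be written as an ordinary loop, which may help when reasoning about when the graph stops. The following is a plain-Python sketch of that flow, not LangGraph's executor; `run_graph` and the three demo functions are hypothetical, and messages are simple strings.

```python
from typing import Callable, Dict, List

END = "__end__"  # stand-in label for the terminal node in this sketch

State = Dict[str, list]

def run_graph(state: State,
              agent: Callable[[State], State],
              tools: Callable[[State], State],
              router: Callable[[State], str],
              max_steps: int = 10) -> List[str]:
    """Drive START -> agent -> (tools -> agent)* -> END; return visited nodes."""
    visited = []
    node = "agent"                      # edge: START -> agent
    for _ in range(max_steps):
        visited.append(node)
        update = agent(state) if node == "agent" else tools(state)
        state["messages"] = state["messages"] + update["messages"]
        if node == "agent":
            node = router(state)        # conditional edge: agent -> tools or END
            if node == END:
                break
        else:
            node = "agent"              # edge: tools -> agent
    return visited

def demo_agent(state: State) -> State:
    # Answer once a tool result is available, otherwise request the tool
    if any(m.startswith("tool:") for m in state["messages"]):
        return {"messages": ["ai: The answer is 128."]}
    return {"messages": ["ai: CALL calculator"]}

def demo_tools(state: State) -> State:
    return {"messages": ["tool: Result: 128"]}

def demo_router(state: State) -> str:
    return "tools" if state["messages"][-1].startswith("ai: CALL") else END

demo_state = {"messages": ["human: What's 15 * 7 + 23?"]}
print(run_graph(demo_state, demo_agent, demo_tools, demo_router))
```

The agent runs twice in this trace: once to request the tool and once to turn the tool result into a final answer, which is the loop that the tools-to-agent edge creates.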

def test_agent():
    """Test the agent with various queries."""
    config = {"configurable": {"thread_id": "test-thread"}}
    test_queries = [
        "What's 15 * 7 + 23?",
        "Search for information about Python programming",
        "What's the weather like in Tokyo?",
        "What time is it?",
        "Analyze this text: 'LangGraph is an amazing framework for building AI agents.'"
    ]
    print("🧪 Testing the agent with sample queries...\n")
    for i, query in enumerate(test_queries, 1):
        print(f"Query {i}: {query}")
        print("-" * 50)
        try:
            response = agent.invoke(
                {"messages": [HumanMessage(content=query)]},
                config=config
            )
            # The final message in the returned state is the agent's answer
            last_message = response["messages"][-1]
            print(f"Response: {last_message.content}\n")
        except Exception as e:
            print(f"Error: {str(e)}\n")

The test_agent function is a validation utility that checks that the LangGraph agent responds correctly across different use cases. It runs predefined queries covering arithmetic, web search, weather, time, and text analysis, and prints the agent's responses. It invokes the agent with each query using a consistent thread_id in the config and displays the results clearly, helping developers verify tool integration and conversational logic before moving on to interactive or production use.
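Reusing one thread_id means every test query is added to the same stored conversation, so later queries see the earlier ones as context. The toy store below illustrates that keying behaviour only; `ThreadMemory` is a hypothetical class and does not reproduce how MemorySaver works internally.

```python
from collections import defaultdict
from typing import Dict, List

class ThreadMemory:
    """Toy in-memory store keyed by thread_id (illustration only)."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[str]] = defaultdict(list)

    def append(self, thread_id: str, message: str) -> List[str]:
        """Add a message to a thread and return a copy of its history."""
        self._threads[thread_id].append(message)
        return list(self._threads[thread_id])

memory = ThreadMemory()
memory.append("test-thread", "What's 15 * 7 + 23?")
history = memory.append("test-thread", "What time is it?")
fresh = memory.append("other-thread", "What time is it?")
print(len(history), len(fresh))
```

If each test case should start from a clean slate, giving every query its own thread_id is one way to isolate them.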

def chat_with_agent():
    """Interactive chat function."""
    config = {"configurable": {"thread_id": "interactive-thread"}}
    print("🤖 Multi-Tool Agent Chat")
    print("Available tools: Calculator, Web Search, Weather Info, Text Analyzer, Current Time")
    print("Type 'quit' to exit, 'help' for available commands\n")
    while True:
        try:
            user_input = input("You: ").strip()
            if user_input.lower() in ['quit', 'exit', 'q']:
                print("Goodbye!")
                break
            elif user_input.lower() == 'help':
                print("\nAvailable commands:")
                print("• Calculator: 'Calculate 15 * 7 + 23' or 'What's sin(pi/2)?'")
                print("• Web Search: 'Search for Python tutorials' or 'Find information about AI'")
                print("• Weather: 'Weather in Tokyo' or 'What's the temperature in London?'")
                print("• Text Analysis: 'Analyze this text: [your text]'")
                print("• Current Time: 'What time is it?' or 'Current date'")
                print("• quit: Exit the chat\n")
                continue
            elif not user_input:
                # Ignore empty lines
                continue
            response = agent.invoke(
                {"messages": [HumanMessage(content=user_input)]},
                config=config
            )
            last_message = response["messages"][-1]
            print(f"Agent: {last_message.content}\n")
        except KeyboardInterrupt:
            print("\nGoodbye!")
            break
        except Exception as e:
            print(f"Error: {str(e)}\n")

The chat_with_agent function provides an interactive command-line interface for real-time conversations with the LangGraph multi-tool agent. It supports natural-language queries and recognizes commands such as "help" for usage tips and "quit" to exit. Each user input is processed by the agent, which dynamically selects and invokes the appropriate tools. The function improves user engagement by simulating a conversational experience and showcasing the agent's ability to handle a variety of queries, from math and web search to weather, text analysis, and time retrieval.
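The command handling inside the loop can be pulled into a small pure function, which makes it testable without simulating keyboard input. This is a sketch of that idea; the function name `classify_input` and its return labels are assumptions, while the recognised commands are the ones used in the chat loop.

```python
def classify_input(raw: str) -> str:
    """Map a raw input line to 'quit', 'help', 'empty', or 'query'."""
    text = raw.strip().lower()
    if text in ("quit", "exit", "q"):
        return "quit"
    if text == "help":
        return "help"
    if not text:
        return "empty"
    return "query"

for line in ["  QUIT ", "help", "", "What time is it?"]:
    print(repr(line), "->", classify_input(line))
```

The loop would then branch on the returned label and only call the agent for the "query" case.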

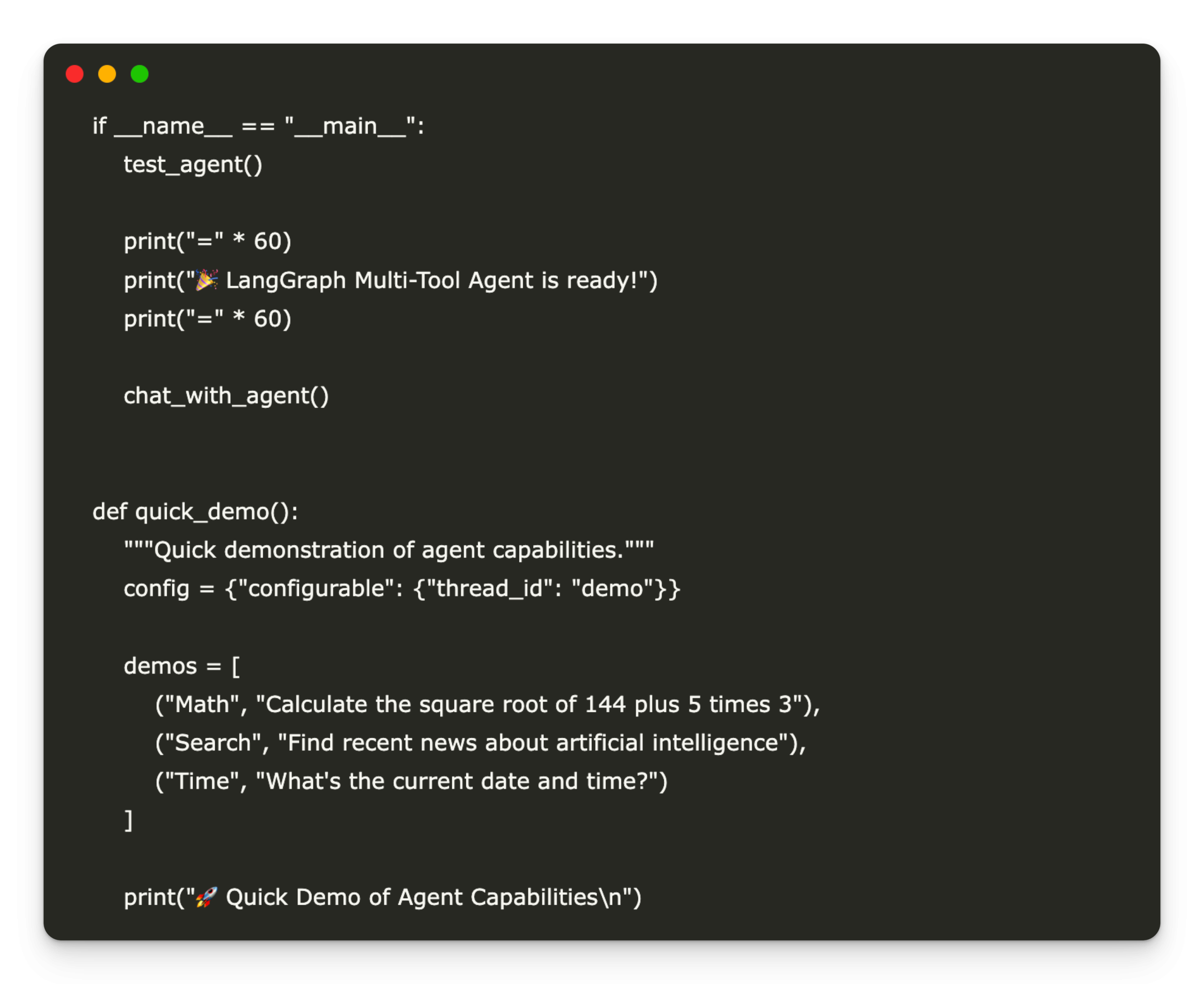

if __name__ == "__main__":
    test_agent()
    print("=" * 60)
    print("🎉 LangGraph Multi-Tool Agent is ready!")
    print("=" * 60)
    chat_with_agent()

def quick_demo():
    """Quick demonstration of agent capabilities."""
    config = {"configurable": {"thread_id": "demo"}}
    demos = [
        ("Math", "Calculate the square root of 144 plus 5 times 3"),
        ("Search", "Find recent news about artificial intelligence"),
        ("Time", "What's the current date and time?")
    ]
    print("🚀 Quick Demo of Agent Capabilities\n")
    for category, query in demos:
        print(f"[{category}] Query: {query}")
        try:
            response = agent.invoke(
                {"messages": [HumanMessage(content=query)]},
                config=config
            )
            print(f"Response: {response['messages'][-1].content}\n")
        except Exception as e:
            print(f"Error: {str(e)}\n")

print("\n" + "="*60)
print("🔧 Usage Instructions:")
print("1. Add your ANTHROPIC_API_KEY to use Claude model")
print("   os.environ['ANTHROPIC_API_KEY'] = 'your-anthropic-api-key'")
print("2. Run quick_demo() for a quick demonstration")
print("3. Run chat_with_agent() for interactive chat")
print("4. The agent supports: calculations, web search, weather, text analysis, and time")
print("5. Example: 'Calculate 15*7+23' or 'Search for Python tutorials'")
print("="*60)

Finally, we orchestrate the execution of the LangGraph multi-tool agent. If the script is run directly, it calls test_agent() to validate functionality with sample queries, then launches the interactive chat_with_agent() mode for real-time interaction. The quick_demo() function also briefly showcases the agent's capabilities with math, search, and time queries. Clear usage instructions are printed at the end, guiding users through configuring the API key, running the demos, and interacting with the agent. This gives users a smooth onboarding experience for exploring and extending the agent's functionality.

In conclusion, this step-by-step tutorial offers practical insight into building an effective multi-tool AI agent that draws on LangGraph and Claude's generative capabilities. With straightforward explanations and hands-on demonstrations, the guide shows how to integrate diverse utilities into a cohesive, interactive system. The agent's flexibility across tasks, from complex calculations to dynamic information retrieval, showcases the versatility of modern AI development frameworks. In addition, the user-friendly functions for testing and interactive chat reinforce practical understanding and allow immediate application in various contexts. Developers can extend and customize their own AI agents with this foundational knowledge.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with audiences.