Mistral AI, in collaboration with All Hands AI, has released updated versions of its developer-focused large language models under the Devstral 2507 label. The release includes two models, Devstral Small 1.1 and Devstral Medium 2507, designed to support agent-based code reasoning, program synthesis, and structured task execution across large software repositories. These models are optimized for performance and cost, which makes them suitable for real-world use in developer tools and code automation systems.

Devstral Small 1.1: Open model for local and integrated use

Devstral Small 1.1 (also called devstral-small-2507) is based on the Mistral-Small-3.1 foundation model and contains approximately 24 billion parameters. It supports a 128K-token context window, which allows it to handle multi-file code inputs and the long prompts typical of software engineering workflows.

The model is fine-tuned specifically for structured outputs, including XML formats and function calling. This makes it compatible with agent frameworks such as OpenHands and well suited to tasks such as codebase navigation, multi-step edits, and code search. It is licensed under Apache 2.0 and available for both research and commercial use.
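To make the idea of XML-structured output concrete, here is a minimal sketch of how an agent harness might parse a tool request emitted as XML. The tag names (`tool_call`, `arg`) and the `search_code` tool are hypothetical and chosen only for illustration; the actual format depends on the agent framework and prompt in use.

```python
import xml.etree.ElementTree as ET

# Hypothetical model output: the tag and attribute names are illustrative only
# and are not taken from Devstral or OpenHands documentation.
MODEL_OUTPUT = """
<tool_call name="search_code">
  <arg name="query">def parse_config</arg>
  <arg name="path">src/</arg>
</tool_call>
"""


def parse_tool_call(xml_text: str) -> dict:
    """Parse one XML-formatted tool call into a plain dictionary."""
    root = ET.fromstring(xml_text.strip())
    if root.tag != "tool_call":
        raise ValueError(f"unexpected root element: {root.tag}")
    # Collect each <arg name="..."> element as a key/value pair.
    args = {arg.get("name"): (arg.text or "").strip() for arg in root.findall("arg")}
    return {"tool": root.get("name"), "arguments": args}


call = parse_tool_call(MODEL_OUTPUT)
print(call)
```

Because the output is well-formed XML, a standard parser is enough to recover the tool name and arguments without fragile string matching.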

Performance: SWE-Bench Results

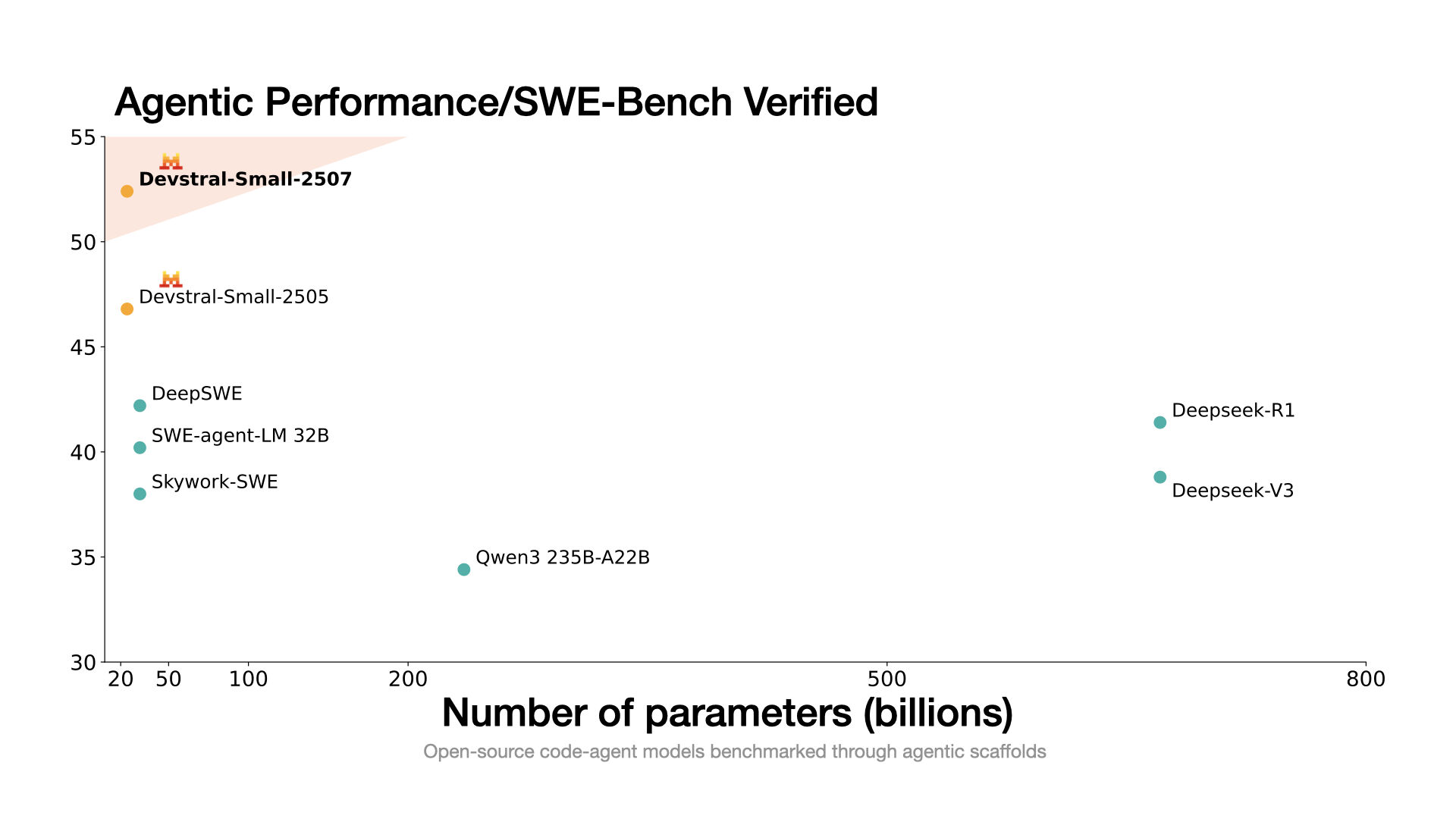

Devstral Small 1.1 achieves 53.6% on the SWE-Bench Verified benchmark, which assesses a model's ability to generate correct patches for real GitHub issues. This is a significant improvement over the previous version (1.0) and places it ahead of other openly available models of comparable size. The results were obtained using the OpenHands scaffold, which provides a standard test environment for evaluating code agents.

Although it does not match the largest proprietary models, this version offers a balance of size, inference cost, and reasoning performance that is practical for many coding tasks.

Deployment: Local inference and quantization

The model is released in several formats. Quantized GGUF versions are available for use with llama.cpp, vLLM, and LM Studio. These formats make it possible to run the model locally on high-memory GPUs (for example, an RTX 4090) or on Apple silicon machines with 32 GB of RAM or more. This is useful for developers or teams who prefer to operate without depending on hosted APIs.
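A rough back-of-the-envelope calculation shows why quantization matters for local use. The sketch below estimates weight storage only for a model of about 24 billion parameters at different bit widths. It ignores the KV cache, activations, and the per-block overhead of real GGUF quantization types, so treat the numbers as lower bounds under those assumptions.

```python
PARAMS = 24e9  # approximate parameter count stated for Devstral Small 1.1


def weight_memory_gib(params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in GiB for a given bit width."""
    bytes_total = params * bits_per_weight / 8
    return bytes_total / 2**30


# Nominal bit widths; actual quantized files use slightly more bits per weight.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gib(PARAMS, bits):.1f} GiB")
```

Under these assumptions the weights need roughly 45 GiB at 16 bits but only about 11 GiB at 4 bits, which is what brings the model within reach of a single 24 GB GPU.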

Mistral also makes the model available through its inference API. The current price is $0.10 per million input tokens and $0.30 per million output tokens, the same as the other models in the Mistral-Small line.
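As an illustration of hosted use, the following sketch builds a chat completion request for the model using only the Python standard library. The endpoint URL, the `MISTRAL_API_KEY` environment variable, and the response shape are assumptions based on the common chat-completions convention; check Mistral's API documentation before relying on them. The request is only sent if a key is present.

```python
import json
import os
import urllib.request

# Assumed endpoint; verify against the official API reference.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "devstral-small-2507"  # model identifier mentioned in the article


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical variable name
    request = build_request("Explain what `def f(x): return x * 2` does.", key or "missing-key")
    if key:
        with urllib.request.urlopen(request) as response:
            body = json.load(response)
        # Assumed response shape: choices[0].message.content
        print(body["choices"][0]["message"]["content"])
    else:
        print("Set MISTRAL_API_KEY to send the request.")
```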

Devstral Medium 2507: higher accuracy, API only

Devstral Medium 2507 is not open source and is available only through the Mistral API or through enterprise deployment agreements. It offers the same 128K-token context length as the Small version, but with higher performance.

The model scores 61.6% on SWE-Bench Verified, outperforming several commercial models, including Gemini 2.5 Pro and GPT-4.1, under the same evaluation framework. Its stronger reasoning over long contexts makes it a candidate for code agents that operate in large monorepos or repositories with cross-file dependencies.
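To put the two reported percentages in concrete terms, the short sketch below converts them into approximate counts of resolved tasks. It assumes the SWE-Bench Verified set contains 500 tasks; that figure is not stated in this article and should be confirmed against the benchmark's own documentation.

```python
VERIFIED_TASKS = 500  # assumed size of the SWE-Bench Verified set

# Scores as reported in the article.
scores = {"Devstral Small 1.1": 53.6, "Devstral Medium 2507": 61.6}


def resolved_count(percent: float, total: int = VERIFIED_TASKS) -> int:
    """Convert a percentage score into an approximate number of resolved tasks."""
    return round(percent / 100 * total)


counts = {name: resolved_count(pct) for name, pct in scores.items()}
for name, count in counts.items():
    print(f"{name}: ~{count} of {VERIFIED_TASKS} tasks resolved")

gap = counts["Devstral Medium 2507"] - counts["Devstral Small 1.1"]
print(f"Difference: ~{gap} tasks")
```

Under that assumption, the eight-point gap between the two models corresponds to about 40 additional resolved issues.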

API pricing is set at $0.40 per million input tokens and $2 per million output tokens. Fine-tuning is available for enterprise users through the Mistral platform.

Comparison and use case fit

| Model | SWE-Bench Verified | Open source | Input cost | Output cost | Context length |
|---|---|---|---|---|---|
| Devstral Small 1.1 | 53.6% | Yes | $0.10/M | $0.30/M | 128K tokens |
| Devstral Medium 2507 | 61.6% | No | $0.40/M | $2.00/M | 128K tokens |

Devstral Small is better suited to local development, experimentation, or integration into client-side developer tools where control and efficiency matter. Devstral Medium, by contrast, offers higher accuracy and consistency in structured code-editing tasks and is intended for production services that benefit from higher performance despite the higher cost.
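The cost side of this trade-off can be estimated directly from the listed prices. The sketch below computes the API cost of a single request for each model; the token counts are a hypothetical workload chosen for illustration, and the dictionary keys are labels for this example, not API model identifiers.

```python
# Prices in USD per million tokens, as listed in the article: (input, output).
PRICES = {
    "devstral-small": (0.10, 0.30),
    "devstral-medium": (0.40, 2.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical agent step: a 100K-token repository context and a 5K-token patch.
for model in PRICES:
    cost = request_cost(model, input_tokens=100_000, output_tokens=5_000)
    print(f"{model}: ${cost:.4f} per request")
```

For this workload the estimate is about $0.0115 per request on Devstral Small and $0.05 on Devstral Medium, so the accuracy gain comes at roughly four times the cost.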

Integration with tools and agents

Both models are designed to integrate with code agent frameworks such as OpenHands. Their support for structured function calls and XML output formats allows them to be built into automated workflows for test generation, refactoring, and bug fixing. This compatibility makes it easier to connect Devstral models to IDE plugins, version control bots, and internal CI/CD pipelines.
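A minimal sketch of the function-calling side of such a workflow follows. It assumes the model returns a tool call as a function name plus a JSON-encoded argument string, which is a common convention for chat APIs; the two tools (`run_tests`, `read_file`) are hypothetical stand-ins that only return strings rather than touching the file system.

```python
import json


def run_tests(path: str) -> str:
    """Stand-in tool; a real agent would invoke a test runner here."""
    return f"ran tests in {path}"


def read_file(path: str, max_bytes: int = 200) -> str:
    """Stand-in tool; a real agent would read from disk here."""
    return f"first {max_bytes} bytes of {path}"


# Registry mapping tool names the model may request to local functions.
TOOLS = {"run_tests": run_tests, "read_file": read_file}


def dispatch(tool_call: dict) -> str:
    """Look up the requested tool and call it with the decoded arguments."""
    function = tool_call["function"]
    name = function["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    arguments = json.loads(function["arguments"])  # arguments arrive as a JSON string
    return TOOLS[name](**arguments)


# Hypothetical tool call as it might appear in a model response.
example_call = {"function": {"name": "run_tests", "arguments": '{"path": "tests/unit"}'}}
result = dispatch(example_call)
print(result)
```

Keeping the registry explicit means the agent can only run tools the integrator has chosen to expose, which matters when the model is wired into CI/CD pipelines.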

For example, developers can use Devstral Small to prototype local workflows, while Devstral Medium can be used in production services that apply patches or triage pull requests based on model suggestions.

Conclusion

The Devstral 2507 release reflects a targeted update to Mistral's code-focused LLM stack, offering users a clearer trade-off between inference cost and task accuracy. Devstral Small provides an accessible, open model with sufficient performance for many use cases, while Devstral Medium is aimed at applications where accuracy and reliability are essential.

The availability of the two models under different deployment options makes them relevant at different stages of the software engineering workflow, from experimental agent development to deployment in enterprise environments.

The Devstral Small model weights are available on Hugging Face, and Devstral Medium will also be available on Mistral Code for enterprise customers and through the fine-tuning API. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, Sana brings a fresh perspective to the intersection of AI and real-life solutions.