MCP-Use is an open-source library that lets you connect any LLM to any MCP server, giving your agents access to tools such as web browsing and file operations, all without relying on closed-source clients. In this tutorial, we will use langchain-groq and MCP-Use's built-in conversation memory to build a simple chatbot that can interact with tools via MCP.

Installing the uv package manager

We will first set up our environment, starting with the uv package manager. For Mac or Linux:

```bash
curl -LsSf https://astral.sh/uv/install.sh | sh
```

For Windows (PowerShell):

```powershell
powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"
```

Create a new directory and activate a virtual environment

Next, we create a new project directory and initialize it with uv:

```bash
uv init mcp-use-demo
cd mcp-use-demo
```

We can now create and activate a virtual environment. For Mac or Linux:

```bash
uv venv
source .venv/bin/activate
```

For Windows:

```powershell
uv venv
.venv\Scripts\activate
```

Installing Python dependencies

Now install the required dependencies:

```bash
uv add mcp-use langchain-groq python-dotenv
```

Groq API key

To use Groq's LLMs:

- Visit the Groq Console and generate an API key.

- Create a .env file in your project directory and add the line `GROQ_API_KEY=<your_api_key>`, replacing `<your_api_key>` with the key you just generated.
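To see roughly what load_dotenv() does with that line, here is a minimal, stdlib-only sketch. The helper name load_env_file is hypothetical, and this is a simplified stand-in rather than python-dotenv's actual implementation: it skips blank lines and # comments, strips one pair of surrounding quotes, and, like load_dotenv's default behavior, does not overwrite variables that are already set.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Hypothetical, simplified stand-in for python-dotenv's load_dotenv().

    Parses KEY=VALUE lines, skips blanks, comments, and malformed lines,
    and copies each pair into os.environ unless the variable already exists.
    """
    loaded: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # blank line, comment, or no '=' separator
        key, value = line.split("=", 1)  # split on the first '=' only
        key, value = key.strip(), value.strip()
        # Drop one pair of matching surrounding quotes, if present
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        loaded[key] = value
        os.environ.setdefault(key, value)  # existing variables take priority
    return loaded
```

Calling load_env_file() from the project root would then make os.getenv("GROQ_API_KEY") return your key, which is the same effect the tutorial relies on from load_dotenv().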

Brave Search API key

This tutorial uses the Brave Search MCP server.

- Get your Brave Search API key from: Brave Search API

- Create a file called mcp.json in the project root with the following content:

```json
{
  "mcpServers": {
    "brave-search": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-brave-search"
      ],
      "env": {
        "BRAVE_API_KEY": ""
      }
    }
  }
}
```

Replace the empty "BRAVE_API_KEY" value with your Brave Search API key.
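Before launching the agent, it can help to sanity-check mcp.json. The sketch below is a hypothetical helper (check_mcp_config is not part of MCP-Use) that parses the file's text with the standard json module, confirms each entry under mcpServers has a command and a list of args, and flags empty env values such as an unfilled BRAVE_API_KEY. A JSON syntax error, for example parentheses instead of square brackets around args, surfaces as json.JSONDecodeError.

```python
import json


def check_mcp_config(text: str) -> list[str]:
    """Hypothetical sanity check for the contents of an mcp.json file.

    Returns a list of human-readable problems; an empty list means the
    basic structure looks fine. Raises json.JSONDecodeError on invalid JSON.
    """
    config = json.loads(text)
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["'mcpServers' must be a non-empty object"]

    problems: list[str] = []
    for name, server in servers.items():
        if not isinstance(server, dict):
            problems.append(f"{name}: server entry must be an object")
            continue
        if not isinstance(server.get("command"), str):
            problems.append(f"{name}: missing 'command'")
        if not isinstance(server.get("args", []), list):
            problems.append(f"{name}: 'args' must be a list")
        # Empty strings usually mean a placeholder was never filled in
        for key, value in server.get("env", {}).items():
            if not value:
                problems.append(f"{name}: env variable {key} is empty")
    return problems
```

Run against the mcp.json shown above before the key is filled in, this returns ["brave-search: env variable BRAVE_API_KEY is empty"]; once the key is set, it returns an empty list.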

Node.js

Some MCP servers (including Brave Search) require npx, which ships with Node.js.

- Download the latest version of Node.js from nodejs.org.

- Run the installer.

- Keep all the default settings and complete the installation.
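Because the Brave Search server is started with the "command" value from mcp.json (here, npx), it is worth confirming that npx is reachable before running the chatbot. This small sketch uses a hypothetical helper name (find_npx) and relies only on shutil.which from the standard library; it does not run npx itself.

```python
import shutil
from typing import Optional


def find_npx() -> Optional[str]:
    """Return the full path to npx if it is on PATH, else None.

    On Windows, shutil.which also tries PATHEXT extensions such as .cmd,
    so the npx.cmd shim that Node.js installs is found too.
    """
    return shutil.which("npx")


if __name__ == "__main__":
    path = find_npx()
    if path:
        print(f"npx found at {path}")
    else:
        print("npx not found: install Node.js, then open a new terminal")
```

If npx is not found right after installing Node.js, opening a new terminal usually helps, since PATH changes are only picked up by newly started shells.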

Using other servers

If you want to use a different MCP server, simply replace the contents of mcp.json with that server's configuration.
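As an example of swapping servers, the sketch below writes an mcp.json that points at the reference filesystem server (@modelcontextprotocol/server-filesystem), which takes the directories it may access as extra arguments. The helper name write_mcp_config and the choice of server are illustrative assumptions; check each server's own documentation for its exact arguments and environment variables.

```python
import json
from pathlib import Path
from typing import Optional


def write_mcp_config(
    path: Path,
    name: str,
    command: str,
    args: list[str],
    env: Optional[dict[str, str]] = None,
) -> dict:
    """Hypothetical helper: overwrite mcp.json with a single-server config."""
    server: dict = {"command": command, "args": args}
    if env:  # only include "env" when the server needs variables
        server["env"] = env
    config = {"mcpServers": {name: server}}
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

For instance, write_mcp_config(Path("mcp.json"), "filesystem", "npx", ["-y", "@modelcontextprotocol/server-filesystem", str(Path.cwd())]) would replace the Brave Search entry with a filesystem server limited to the current directory. The rest of app.py stays the same, because MCPClient.from_config_file reads whatever servers mcp.json lists.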

Create an app.py file in the project directory and add the following content:

Importing the libraries

```python
from dotenv import load_dotenv
from langchain_groq import ChatGroq
from mcp_use import MCPAgent, MCPClient
import os
import sys
import warnings

# Silence ResourceWarning messages (typically about unclosed resources) for cleaner output
warnings.filterwarnings("ignore", category=ResourceWarning)
```

This section imports the modules needed for LangChain, MCP-Use, and Groq, including load_dotenv for reading environment variables from the .env file. It also suppresses ResourceWarning messages for cleaner output.
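To see exactly what the filterwarnings call changes, this short sketch captures warnings in a scratch context with warnings.catch_warnings(record=True). With the same ResourceWarning filter installed, a ResourceWarning is dropped while other categories, such as DeprecationWarning, still get through. The function name is hypothetical; only the filter call itself comes from app.py.

```python
import warnings


def captured_categories() -> list[str]:
    """Emit two warnings under the tutorial's filter and report which ones survive."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # record everything by default...
        # ...then add app.py's rule; filters inserted later are checked first
        warnings.filterwarnings("ignore", category=ResourceWarning)
        warnings.warn("unclosed transport", ResourceWarning)
        warnings.warn("old API", DeprecationWarning)
    # catch_warnings restores the previous filters when the block exits
    return [w.category.__name__ for w in caught]


print(captured_categories())  # ['DeprecationWarning']
```

Because the filter targets only ResourceWarning, genuine problems reported through other warning categories remain visible while the chatbot runs.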

Chatbot configuration

```python
async def run_chatbot():
    """Run a chat session using MCPAgent's built-in conversation memory."""
    # Load variables from .env into os.environ; ChatGroq reads GROQ_API_KEY from there
    load_dotenv()
    if not os.getenv("GROQ_API_KEY"):
        print("GROQ_API_KEY is not set. Add it to your .env file.")
        return

    config_file = "mcp.json"
    print("Starting chatbot...")

    # Create the MCP client and the LLM instance
    client = MCPClient.from_config_file(config_file)
    llm = ChatGroq(model="llama-3.1-8b-instant")

    # Create an agent with conversation memory enabled
    agent = MCPAgent(
        llm=llm,
        client=client,
        max_steps=15,
        memory_enabled=True,
        verbose=False,
    )
```

This section loads the Groq API key from the .env file and initializes the MCP client from the configuration in mcp.json. It then sets up the LangChain Groq LLM and creates a memory-enabled agent to manage the conversation; max_steps=15 caps the number of steps the agent may take for a single request.
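Conceptually, memory_enabled=True means the agent keeps earlier turns and supplies them as context on the next call, and clear_conversation_history() empties that store. The class below is a hypothetical, stdlib-only illustration of that idea, not MCPAgent's actual implementation; the cap on stored messages is an extra assumption added here for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Hypothetical message store illustrating what memory_enabled=True provides."""

    max_messages: int = 20  # assumption: oldest messages are dropped beyond this
    messages: list[tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))
        # Keep only the most recent max_messages entries
        overflow = len(self.messages) - self.max_messages
        if overflow > 0:
            del self.messages[:overflow]

    def as_prompt(self, new_input: str) -> list[tuple[str, str]]:
        """Stored history plus the new user turn, i.e. what the LLM would see."""
        return [*self.messages, ("user", new_input)]

    def clear(self) -> None:
        self.messages.clear()


if __name__ == "__main__":
    memory = ConversationMemory(max_messages=4)
    memory.add("user", "Search for news about MCP")
    memory.add("assistant", "Here are three results...")
    print(len(memory.as_prompt("Summarize the first one")))  # 3
    memory.clear()  # analogous to agent.clear_conversation_history()
    print(memory.as_prompt("Hi"))  # [('user', 'Hi')]
```

This is why a follow-up such as "Summarize the first one" can work: the earlier search results travel along with the new question.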

Chatbot implementation

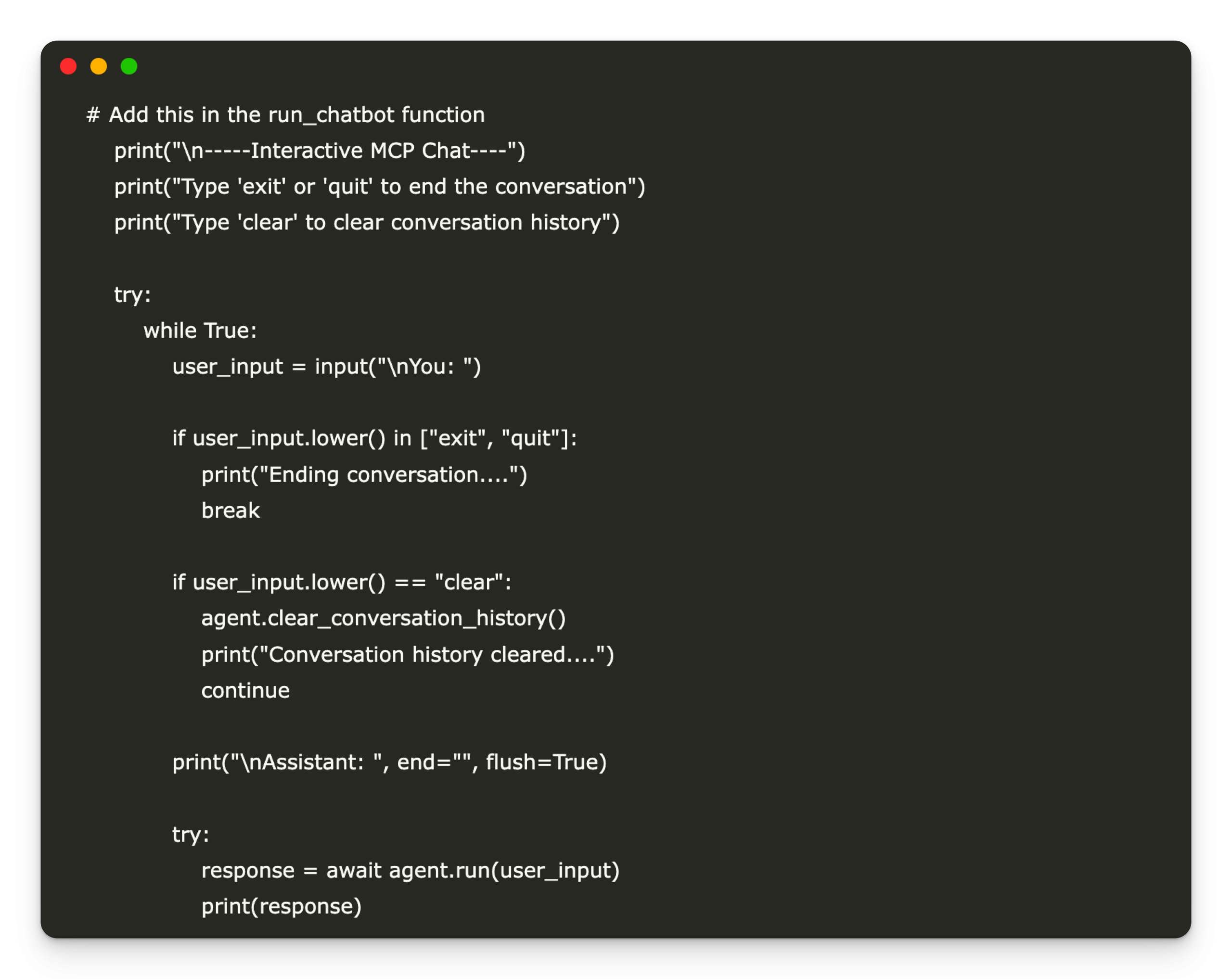

```python
    # Add this inside run_chatbot(), after the agent is created
    print("\n-----Interactive MCP Chat----")
    print("Type 'exit' or 'quit' to end the conversation")
    print("Type 'clear' to clear conversation history")

    try:
        while True:
            user_input = input("\nYou: ")
            command = user_input.strip().lower()

            # Commands that end the session
            if command in ("exit", "quit"):
                print("Ending conversation....")
                break

            # Wipe the agent's stored conversation memory
            if command == "clear":
                agent.clear_conversation_history()
                print("Conversation history cleared....")
                continue

            print("\nAssistant: ", end="", flush=True)
            try:
                # The agent may call MCP tools before producing its answer
                response = await agent.run(user_input)
                print(response)
            except Exception as e:
                print(f"\nError: {e}")
    finally:
        # Always close MCP sessions, even after errors or interruptions
        if client and client.sessions:
            await client.close_all_sessions()
```

This section runs an interactive chat loop in which the user types requests and receives responses from the assistant. Typing 'clear' erases the conversation history on demand. Each response is printed once agent.run() completes, and the finally block ensures that all MCP sessions are closed when the conversation ends or is interrupted.
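The loop's control flow (exit and clear commands, per-message error handling, and cleanup in finally) can be exercised without Groq or any MCP server. In the sketch below, FakeAgent and FakeClient are hypothetical stand-ins that only mimic the three calls the loop uses (run, clear_conversation_history, close_all_sessions), and a list of scripted inputs replaces input().

```python
import asyncio
from typing import Iterable


class FakeAgent:
    """Hypothetical stand-in for MCPAgent: echoes input and remembers turns."""

    def __init__(self) -> None:
        self.history: list[str] = []

    async def run(self, text: str) -> str:
        if text == "boom":
            raise RuntimeError("tool call failed")  # simulate a failing request
        self.history.append(text)
        return f"echo: {text} (turns remembered: {len(self.history)})"

    def clear_conversation_history(self) -> None:
        self.history.clear()


class FakeClient:
    """Hypothetical stand-in for MCPClient that records whether it was closed."""

    def __init__(self) -> None:
        self.sessions = {"brave-search": object()}
        self.closed = False

    async def close_all_sessions(self) -> None:
        self.sessions.clear()
        self.closed = True


async def chat_loop(agent, client, inputs: Iterable[str]) -> list[str]:
    """Same control flow as the tutorial's loop, driven by scripted inputs."""
    transcript: list[str] = []
    try:
        for user_input in inputs:
            command = user_input.strip().lower()
            if command in ("exit", "quit"):
                break
            if command == "clear":
                agent.clear_conversation_history()
                transcript.append("[history cleared]")
                continue
            try:
                transcript.append(await agent.run(user_input))
            except Exception as e:  # one failed request does not end the chat
                transcript.append(f"Error: {e}")
    finally:
        if client and client.sessions:
            await client.close_all_sessions()
    return transcript


if __name__ == "__main__":
    client = FakeClient()
    script = ["hi", "boom", "clear", "again", "exit", "ignored"]
    print("\n".join(asyncio.run(chat_loop(FakeAgent(), client, script))))
    print("sessions closed:", client.closed)
```

The failed "boom" request produces an error line but the loop keeps going, "clear" resets the remembered turns, and everything after "exit" is ignored, with the fake sessions closed either way.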

Running the application

```python
if __name__ == "__main__":
    import asyncio

    try:
        asyncio.run(run_chatbot())
    except KeyboardInterrupt:
        print("Session interrupted. Goodbye!")
    finally:
        # Discard anything written to stderr during interpreter shutdown (cleanup noise)
        sys.stderr = open(os.devnull, "w")
```

This section starts the asynchronous chatbot with asyncio.run(). It also catches KeyboardInterrupt (Ctrl+C) so the program exits cleanly, without a traceback, when the user ends the session, and it redirects stderr to os.devnull to hide noisy shutdown messages.
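To check the shutdown path without pressing Ctrl+C, the sketch below raises KeyboardInterrupt from inside a coroutine. This is a simulation (a real Ctrl+C arrives as a signal), but it shows that asyncio.run() propagates the interrupt to the surrounding try/except, and that the coroutine's own finally block, where run_chatbot closes its MCP sessions, runs first. The function names are hypothetical.

```python
import asyncio

events: list[str] = []


async def fake_chatbot() -> None:
    """Hypothetical stand-in for run_chatbot that is 'interrupted' mid-session."""
    try:
        await asyncio.sleep(0)  # pretend to do some work
        raise KeyboardInterrupt  # simulate the user pressing Ctrl+C
    finally:
        events.append("sessions closed")  # mirrors the cleanup in run_chatbot


def main() -> None:
    try:
        asyncio.run(fake_chatbot())
    except KeyboardInterrupt:
        events.append("Session interrupted. Goodbye!")


if __name__ == "__main__":
    main()
    print(events)  # ['sessions closed', 'Session interrupted. Goodbye!']
```

The ordering is the point of the design: cleanup inside the coroutine happens before control returns to the top-level handler that prints the goodbye message.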

You can find the full code here.

To run the application, use the following command: `uv run app.py`

This starts the chatbot. You can then chat with it, and it will use the Brave Search MCP server during the session.

I graduated in Civil Engineering (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in data science, especially neural networks and their applications in various fields.