Research in computer vision keeps expanding what is possible in video editing and content creation. One of the new tools presented at the International Conference on Computer Vision (ICCV) in Paris is OmniMotion, described in the paper "Tracking Everything Everywhere All at Once." Developed by Cornell researchers, it is an optimization method for estimating motion in video sequences, with the potential to transform video editing and AI-driven generative content creation.

Traditionally, motion estimation methods have followed one of two main approaches: sparse feature tracking and dense optical flow. However, neither models the motion in a video fully over long time intervals while keeping track of every pixel. Existing solutions typically work within a limited temporal and spatial context, which leads to accumulated errors over long trajectories and to inconsistent motion estimates. Long-range motion estimation therefore has to address three challenges:

- Tracking motion over long time intervals

- Tracking motion even through occlusion events

- Maintaining consistency in space and time

OmniMotion is a new optimization method designed to estimate dense, long-range motion in video sequences more accurately. Unlike previous algorithms, which operate within limited temporal windows, OmniMotion provides a complete and globally consistent representation of motion. Every pixel of a video can therefore be tracked accurately throughout the whole sequence, which opens up new possibilities for exploring and creating video content. The method handles difficult cases such as tracking through occlusions and modeling arbitrary combinations of camera and object motion. Experiments reported by the authors show that it outperforms prior methods both quantitatively and qualitatively.

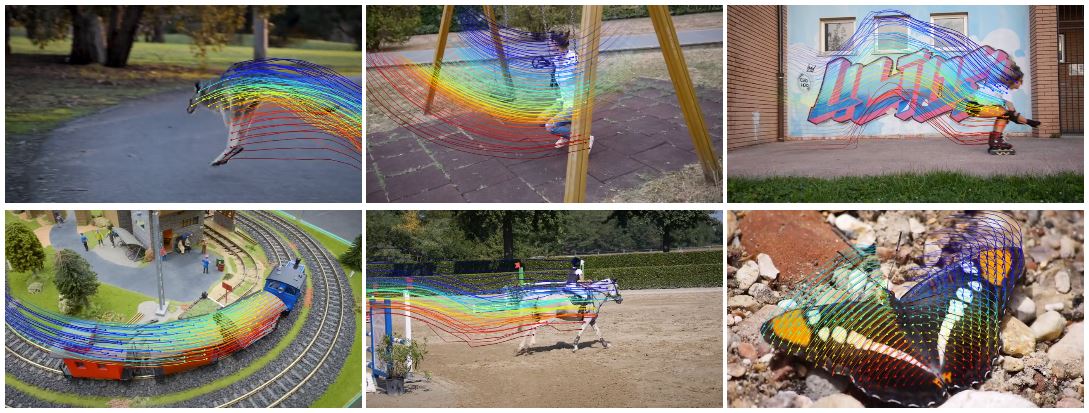

Figure 1. OmniMotion tracks every point of a video across all frames, even through occlusions.

As the motion illustration above shows, OmniMotion estimates full-length motion trajectories for every pixel in every frame of the video. Only sparse trajectories of foreground objects are drawn for clarity, but OmniMotion computes trajectories for all pixels. The method produces accurate, coherent motion over long ranges, even for fast-moving objects, and tracks reliably through occlusions, as the dog and swing examples show.

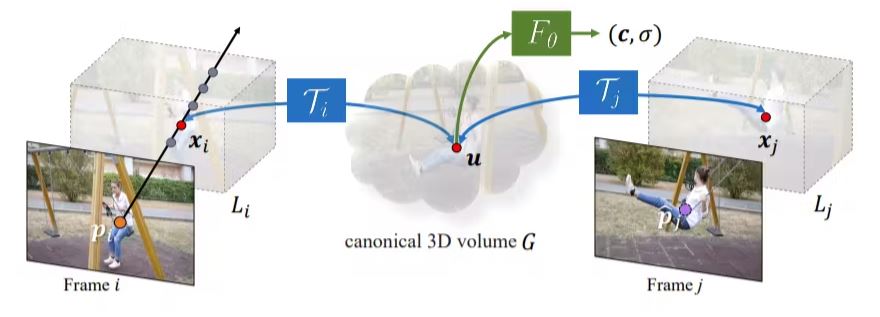

In OmniMotion, the canonical volume G is a 3D atlas of the video. It is represented by a coordinate-based network Fθ, in the style of NeRF, that maps each canonical 3D coordinate to a density σ and a color c.

Density indicates where surfaces lie in a frame and whether an object is occluded, while color is used to compute a photometric loss during optimization. The canonical 3D volume thus plays a central role in capturing and analyzing the motion dynamics of a scene.
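To make the role of density more concrete, here is a minimal sketch of NeRF-style alpha compositing along a single ray. It is an illustration under stated assumptions, not the authors' code: the opacity formula `1 - exp(-sigma)` with unit sample spacing, the function names, and the example densities and colors are all hypothetical.

```python
import math

def composite_weights(densities):
    """Return per-sample weights w_k = T_k * alpha_k for samples ordered near to far."""
    weights = []
    transmittance = 1.0  # fraction of the ray that has not been absorbed yet
    for sigma in densities:
        alpha = 1.0 - math.exp(-sigma)  # opacity of this sample (unit spacing assumed)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights

def composite(values, weights):
    """Weighted sum of per-sample vectors (colors or 3D positions)."""
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]

# Hypothetical ray: empty space, then a dense red surface, then a blue point behind it.
densities = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]

weights = composite_weights(densities)
pixel_color = composite(colors, weights)
```

In this toy ray the first dense sample receives almost all of the weight, so the blue point behind it contributes nothing to the pixel. That is the sense in which density both locates the visible surface and marks what is occluded.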

OmniMotion also uses 3D bijections, which provide a continuous one-to-one mapping between 3D points in each frame's local coordinates and the canonical 3D coordinate system. These bijections keep the motion coherent by guaranteeing that corresponding 3D points in different frames all originate from the same canonical point.

To represent complex real-world motion, the bijections are implemented as invertible neural networks (INNs), which provide expressive and adaptable mappings. This lets OmniMotion capture and track motion between frames accurately while keeping the estimates globally consistent.

Figure 2. Method overview. OmniMotion consists of a canonical 3D volume G and a set of 3D bijections.
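The idea of mapping a point from one frame to another through the canonical space can be sketched with a toy invertible network. The code below is a simplified illustration, not the authors' model: the class `AffineCoupling`, the scalar per-frame latent, and the hand-picked parameters `a` and `b` are assumptions that stand in for learned networks and latent vectors.

```python
import math

class AffineCoupling:
    """Toy invertible layer: one coordinate is kept, the other two are scaled and shifted."""

    def __init__(self, keep, a, b):
        self.keep = keep  # index of the coordinate that passes through unchanged
        self.a = a        # hypothetical parameter controlling the log-scale
        self.b = b        # hypothetical parameter controlling the shift

    def _params(self, kept_value, latent):
        h = kept_value + latent  # stand-in for a small learned network
        return math.tanh(self.a * h), self.b * math.sin(h)

    def forward(self, p, latent):
        s, t = self._params(p[self.keep], latent)
        return [v if i == self.keep else v * math.exp(s) + t for i, v in enumerate(p)]

    def inverse(self, q, latent):
        # The kept coordinate is unchanged, so the same s and t can be recomputed.
        s, t = self._params(q[self.keep], latent)
        return [v if i == self.keep else (v - t) * math.exp(-s) for i, v in enumerate(q)]

# Six layers that alternate which coordinate is kept fixed.
LAYERS = [AffineCoupling(keep=k % 3, a=0.3 + 0.1 * k, b=0.2) for k in range(6)]

def to_canonical(p, latent):
    """Local 3D point of a frame -> canonical 3D point."""
    for layer in LAYERS:
        p = layer.forward(p, latent)
    return p

def from_canonical(u, latent):
    """Canonical 3D point -> local 3D point of a frame."""
    for layer in reversed(LAYERS):
        u = layer.inverse(u, latent)
    return u

def map_between_frames(p_i, latent_i, latent_j):
    """Move a point from frame i to frame j by going through the canonical space."""
    return from_canonical(to_canonical(p_i, latent_i), latent_j)

latent_i, latent_j = 0.1, 0.9  # hypothetical per-frame latents
x_i = [0.2, -0.5, 0.7]
x_j = map_between_frames(x_i, latent_i, latent_j)
x_back = map_between_frames(x_j, latent_j, latent_i)
```

Because every layer is exactly invertible, `x_i` and `x_j` map to the same canonical point, and mapping `x_j` back to frame i recovers `x_i` up to floating-point error. This cycle consistency is what keeps trajectories coherent across frames.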

The bijective mapping network in OmniMotion is built from six affine coupling layers. The latent code for each frame has dimension 128 and is computed by a 2-layer network with 256 channels. The canonical representation is implemented with a GaborNet architecture with 3 layers and 512 channels. Pixel coordinates are normalized to the range [-1, 1], and a local 3D space is defined for each frame. The mapped canonical locations are initialized inside the unit sphere. In addition, a contraction operation adapted from mip-NeRF 360 is applied for numerical stability during training.
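The coordinate normalization and the contraction step can be sketched as follows. The contraction is the standard mip-NeRF 360 formula; since the article describes an adapted version, the exact form used in OmniMotion may differ. The pixel convention (dividing by `width - 1` and `height - 1`) and both function names are assumptions made for this example.

```python
import math

def normalize_pixel(x, y, width, height):
    """Map pixel coordinates in [0, width-1] x [0, height-1] to [-1, 1] x [-1, 1]."""
    return (2.0 * x / (width - 1) - 1.0, 2.0 * y / (height - 1) - 1.0)

def contract(point):
    """mip-NeRF 360 contraction: identity inside the unit ball, radius below 2 outside."""
    norm = math.sqrt(sum(v * v for v in point))
    if norm <= 1.0:
        return list(point)
    scale = (2.0 - 1.0 / norm) / norm  # new radius is 2 - 1/norm
    return [scale * v for v in point]

corner = normalize_pixel(639, 479, 640, 480)  # bottom-right pixel of a 640x480 frame
inside = contract([0.5, 0.0, 0.0])            # unchanged, already inside the unit ball
outside = contract([4.0, 0.0, 0.0])           # radius 4 is pulled in to radius 1.75
```

Points inside the unit ball are left alone, while arbitrarily distant points are squeezed into a ball of radius 2. Keeping coordinates bounded in this way helps avoid very large values during optimization.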

The model is trained separately on each video sequence with the Adam optimizer for 200,000 iterations. Each training batch contains 256 pairs of correspondences sampled from 8 pairs of images, for a total of 1024 correspondences. In addition, 32 points are sampled along each ray using stratified sampling. This design underpins OmniMotion's performance on the hard cases of video motion estimation.
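Sampling 32 points per ray in a stratified way can be sketched in a few lines. Only the sample count comes from the text above; the function name, the near and far bounds, and the seed are hypothetical choices for this example.

```python
import random

def stratified_samples(near, far, num_samples=32, rng=random):
    """Split [near, far] into equal bins and draw one uniform sample from each bin."""
    bin_width = (far - near) / num_samples
    return [near + (k + rng.random()) * bin_width for k in range(num_samples)]

rng = random.Random(0)  # seeded generator so the example is reproducible
depths = stratified_samples(0.0, 2.0, num_samples=32, rng=rng)
```

Compared with 32 fully independent uniform draws, this guarantees exactly one sample in each segment of the ray, so the samples cover the whole depth range while still varying from one iteration to the next.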

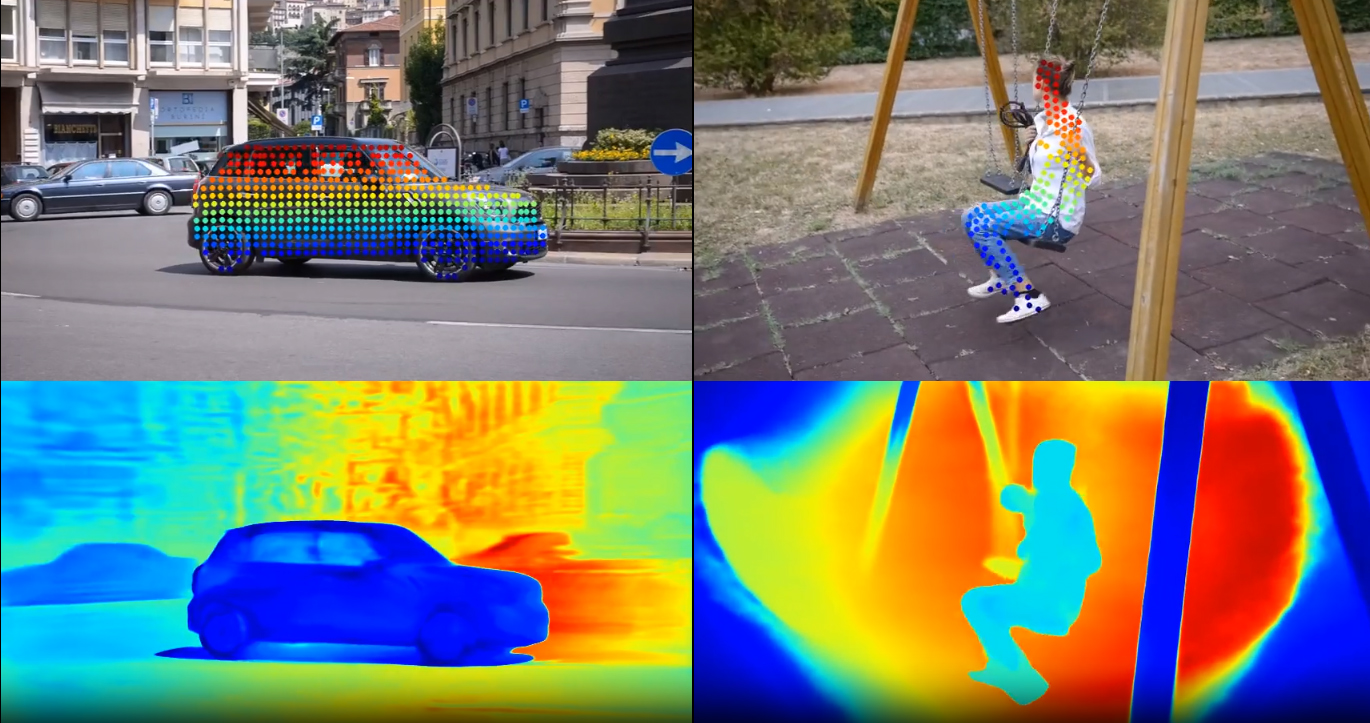

One particularly useful feature of OmniMotion is that pseudo-depth renderings can be extracted from the optimized quasi-3D representation. They show the relative depth of the objects in a scene and hence their ordering. Figure 3 shows such a pseudo-depth visualization: nearby objects are colored blue and distant objects red, which makes the depth ordering of the different parts of the scene clear.

Figure 3. Pseudo-depth visualization.
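The blue-to-red coloring can be illustrated with a simple linear color ramp. This is only a sketch: the colormap used for the actual figure is not specified in the article and is probably richer, and the function names and the tiny depth map are hypothetical.

```python
def depth_to_rgb(depth, d_min, d_max):
    """Blend linearly from blue (nearest) to red (farthest)."""
    if d_max == d_min:
        t = 0.0  # flat depth map: color everything as nearest
    else:
        t = (depth - d_min) / (d_max - d_min)
    t = min(1.0, max(0.0, t))
    return (t, 0.0, 1.0 - t)

def colorize(depth_map):
    """Color a 2D list of relative depths using its own minimum and maximum."""
    flat = [d for row in depth_map for d in row]
    d_min, d_max = min(flat), max(flat)
    return [[depth_to_rgb(d, d_min, d_max) for d in row] for row in depth_map]

# Hypothetical 2x2 pseudo-depth map; only the ordering of the values matters.
image = colorize([[1.0, 3.0], [2.0, 3.0]])
```

Because the values are rescaled by the minimum and maximum of each map, the colors convey relative ordering only, which matches the fact that the representation yields pseudo-depth and not metric depth.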

Like many motion estimation methods, OmniMotion has its limits. It struggles with very fast and highly non-rigid motion, as well as with thin structures in the scene. In these cases, the pairwise correspondence methods it relies on may not provide sufficiently reliable matches, which reduces the accuracy of the estimated global motion. Work on OmniMotion continues in order to address these challenges and advance video motion analysis.

Try the demo here. Technical details are available on GitHub.