In this tutorial, we introduce an advanced, interactive web intelligence agent powered by Tavily and Google's Gemini AI. We'll learn how to configure and use this agent to extract structured content from web pages, run AI-driven analyses, and present the results. With interactive prompts, robust error handling, and a visually appealing terminal interface, the tool offers an intuitive environment for exploring web content extraction and AI-based content analysis.

import os
import json
import asyncio
from typing import List, Dict, Any
from dataclasses import dataclass
from rich.console import Console
from rich.progress import track
from rich.panel import Panel
from rich.markdown import Markdown

We import and configure the essential libraries for handling data structures, asynchronous programming, and type annotations, alongside the rich library, which enables visually appealing terminal output. Together, these modules support efficient, structured, and interactive execution of web intelligence tasks within the notebook.

from langchain_tavily import TavilyExtract
from langchain.chat_models import init_chat_model
from langgraph.prebuilt import create_react_agent

We initialize the essential LangChain components: TavilyExtract enables advanced web content retrieval, init_chat_model sets up the Gemini chat model, and create_react_agent builds a dynamic, reasoning-based agent capable of making intelligent decisions during web analysis tasks. Together, these tools form the core engine for sophisticated AI-driven web intelligence workflows.

@dataclass

class WebIntelligence:

"""Web Intelligence Configuration"""

tavily_key: str = os.getenv("TAVILY_API_KEY", "")

google_key: str = os.getenv("GOOGLE_API_KEY", "")

extract_depth: str = "advanced"

max_urls: int = 10Discover the Notebook here

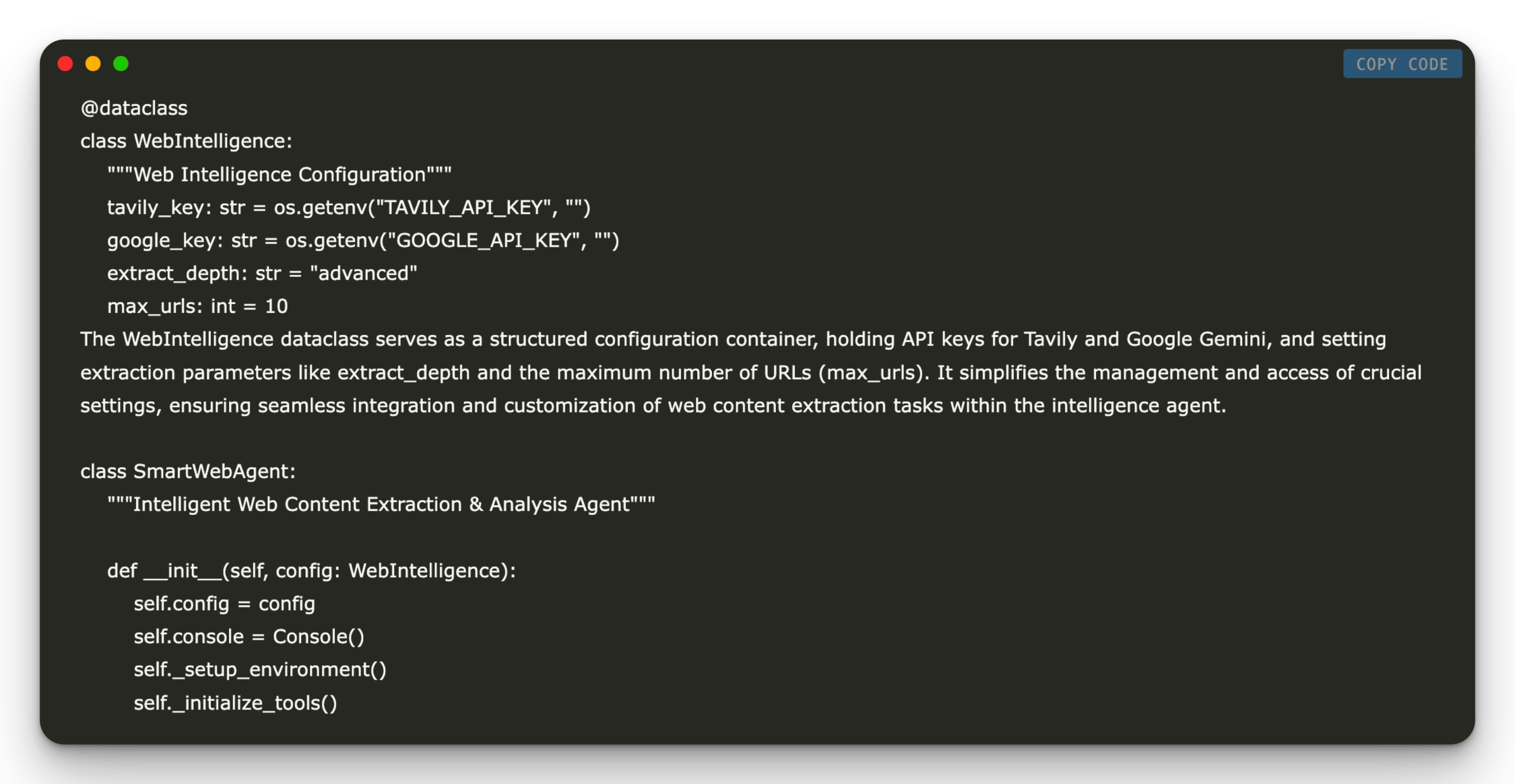

The webintelligence data class serves as a structured configuration container, holding API keys for Tavily and Google Gemini, and defining extraction parameters such as Extract_Depth and the maximum number of URL (MAX_URLS). It simplifies management and access to crucial parameters, ensuring transparent integration and personalization of web content extraction tasks within the intelligence agent.

@dataclass

class WebIntelligence:

"""Web Intelligence Configuration"""

tavily_key: str = os.getenv("TAVILY_API_KEY", "")

google_key: str = os.getenv("GOOGLE_API_KEY", "")

extract_depth: str = "advanced"

max_urls: int = 10

The WebIntelligence dataclass serves as a structured configuration container, holding API keys for Tavily and Google Gemini, and setting extraction parameters like extract_depth and the maximum number of URLs (max_urls). It simplifies the management and access of crucial settings, ensuring seamless integration and customization of web content extraction tasks within the intelligence agent.
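One subtlety with the dataclass above is that an `os.getenv(...)` field default is evaluated once, when the class is defined, so keys exported later in the session are not picked up by new instances. A minimal sketch of one way around this, assuming a hypothetical `LazyWebIntelligence` class that is not part of the original notebook, reads the environment at instantiation time with `field(default_factory=...)`:

```python
import os
from dataclasses import dataclass, field


@dataclass
class LazyWebIntelligence:
    """Hypothetical variant of WebIntelligence that reads env vars lazily."""
    # default_factory runs on every instantiation, not at class definition.
    tavily_key: str = field(default_factory=lambda: os.getenv("TAVILY_API_KEY", ""))
    google_key: str = field(default_factory=lambda: os.getenv("GOOGLE_API_KEY", ""))
    extract_depth: str = "advanced"
    max_urls: int = 10


# Keys set after the class was defined are still seen by new instances.
os.environ["TAVILY_API_KEY"] = "demo-tavily-key"  # placeholder, not a real key
config = LazyWebIntelligence(max_urls=5)
print(config.tavily_key, config.max_urls)
```

Individual settings can still be overridden per instance, as `max_urls=5` does here, while the remaining fields keep their defaults.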

class SmartWebAgent:
    """Intelligent Web Content Extraction & Analysis Agent"""

    def __init__(self, config: WebIntelligence):
        self.config = config
        self.console = Console()
        self._setup_environment()
        self._initialize_tools()

    def _setup_environment(self):
        """Setup API keys with interactive prompts"""
        if not self.config.tavily_key:
            self.config.tavily_key = input("🔑 Enter Tavily API Key: ")
        # Export the key so downstream clients can read it from the environment
        os.environ["TAVILY_API_KEY"] = self.config.tavily_key

        if not self.config.google_key:
            self.config.google_key = input("🔑 Enter Google Gemini API Key: ")
        os.environ["GOOGLE_API_KEY"] = self.config.google_key

    def _initialize_tools(self):
        """Initialize AI tools and agents"""
        self.console.print("🛠️ Initializing AI Tools...", style="bold blue")

        try:
            self.extractor = TavilyExtract(
                extract_depth=self.config.extract_depth,
                include_images=False,
                include_raw_content=False,
                max_results=3
            )

            self.llm = init_chat_model(
                "gemini-2.0-flash",
                model_provider="google_genai",
                temperature=0.3,
                max_tokens=1024
            )

            # Smoke-test the model before building the agent
            test_response = self.llm.invoke("Say 'AI tools initialized successfully!'")
            self.console.print(f"✅ LLM Test: {test_response.content}", style="green")

            # The agent expects a list of tools
            self.agent = create_react_agent(self.llm, [self.extractor])

            self.console.print("✅ AI Agent Ready!", style="bold green")

        except Exception as e:
            self.console.print(f"❌ Initialization Error: {e}", style="bold red")
            self.console.print("💡 Check your API keys and internet connection", style="yellow")
            raise

    def extract_content(self, urls: List[str]) -> Dict[str, Any]:
        """Extract and structure content from URLs"""
        results = {}

        for url in track(urls, description="🌐 Extracting content..."):
            try:
                response = self.extractor.invoke({"urls": [url]})
                # The tool may return a message-like object or a plain dict/str
                raw = getattr(response, "content", response)
                content = json.loads(raw) if isinstance(raw, str) else raw
                results[url] = {
                    "status": "success",
                    "data": content,
                    "summary": content.get("summary", "No summary available")[:200] + "..."
                }
            except Exception as e:
                results[url] = {"status": "error", "error": str(e)}

        return results

    def analyze_with_ai(self, query: str, urls: List[str] = None) -> str:
        """Intelligent analysis using AI agent"""
        try:
            if urls:
                message = f"Use the tavily_extract tool to analyze these URLs and answer: {query}\nURLs: {urls}"
            else:
                message = query

            self.console.print(f"🤖 AI Analysis: {query}", style="bold magenta")
            messages = [{"role": "user", "content": message}]

            # First attempt: stream the agent and collect every distinct message
            all_content = []
            with self.console.status("🔄 AI thinking..."):
                try:
                    for step in self.agent.stream({"messages": messages}, stream_mode="values"):
                        if "messages" in step and step["messages"]:
                            for msg in step["messages"]:
                                if hasattr(msg, 'content') and msg.content and msg.content not in all_content:
                                    all_content.append(str(msg.content))
                except Exception as stream_error:
                    self.console.print(f"⚠️ Stream error: {stream_error}", style="yellow")

            # Second attempt: call the model directly if the agent produced nothing
            if not all_content:
                self.console.print("🔄 Trying direct AI invocation...", style="yellow")
                try:
                    response = self.llm.invoke(message)
                    return str(response.content) if hasattr(response, 'content') else str(response)
                except Exception as direct_error:
                    self.console.print(f"⚠️ Direct error: {direct_error}", style="yellow")

                    # Last resort: extract the pages ourselves and pass summaries to the model
                    if urls:
                        self.console.print("🔄 Extracting content first...", style="blue")
                        extracted = self.extract_content(urls)
                        content_summary = "\n".join([
                            f"URL: {url}\nContent: {result.get('summary', 'No content')}\n"
                            for url, result in extracted.items() if result.get('status') == 'success'
                        ])
                        fallback_query = f"Based on this content, {query}:\n\n{content_summary}"
                        response = self.llm.invoke(fallback_query)
                        return str(response.content) if hasattr(response, 'content') else str(response)

            return "\n".join(all_content) if all_content else "❌ Unable to generate response. Please check your API keys and try again."

        except Exception as e:
            return f"❌ Analysis failed: {str(e)}\n\nTip: Make sure your API keys are valid and you have internet connectivity."

    def display_results(self, results: Dict[str, Any]):
        """Beautiful result display"""
        for url, result in results.items():
            if result["status"] == "success":
                panel = Panel(
                    f"🔗 [bold blue]{url}[/bold blue]\n\n{result['summary']}",
                    title="✅ Extracted Content",
                    border_style="green"
                )
            else:
                panel = Panel(
                    f"🔗 [bold red]{url}[/bold red]\n\n❌ Error: {result['error']}",
                    title="❌ Extraction Failed",
                    border_style="red"
                )
            self.console.print(panel)

The SmartWebAgent class encapsulates an intelligent web content extraction and analysis system built on the Tavily and Google Gemini APIs. It interactively sets up the essential tools, prompts for any missing API keys, extracts structured data from the provided URLs, and uses an AI-driven agent to generate insightful analyses. It also relies on rich's visual output to present results, improving readability and the user experience during interactive tasks.
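The extraction step has to turn whatever the extractor returns into a short preview string. The sketch below shows one defensive way to do that with only the standard library. The helper name `to_summary` is hypothetical, and the `{"results": [{"raw_content": ...}]}` shape is an assumption about the extractor's payload rather than something the notebook confirms, so treat it as an illustration, not the agent's actual logic.

```python
import json
from typing import Any


def to_summary(response: Any, limit: int = 200) -> str:
    """Return a short text preview from an extraction response (hypothetical helper)."""
    # Accept a message-like object (.content), a JSON string, or a plain dict.
    payload = getattr(response, "content", response)
    if isinstance(payload, str):
        try:
            payload = json.loads(payload)
        except json.JSONDecodeError:
            return payload[:limit]  # plain text: preview it directly
    if not isinstance(payload, dict):
        return "No summary available"

    text = payload.get("summary")
    if not text:
        # Assumed payload shape: {"results": [{"raw_content": "..."}]}
        results = payload.get("results") or []
        first = results[0] if results else {}
        text = first.get("raw_content") if isinstance(first, dict) else None
    if not text:
        return "No summary available"
    # Only append an ellipsis when the text was actually truncated.
    return text[:limit] + ("..." if len(text) > limit else "")


print(to_summary({"summary": "A short page summary."}))
print(to_summary({}))
```

Unlike the unconditional `[:200] + "..."` in extract_content, this variant adds the ellipsis only when text was cut off and falls back to a fixed message when no usable text is found.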

def run_async_safely(coro):
    """Run async function safely in any environment"""
    try:
        # Raises RuntimeError when no event loop is running (plain scripts)
        loop = asyncio.get_running_loop()
        # A loop is already running (e.g. in a notebook): allow nested runs
        import nest_asyncio
        nest_asyncio.apply()
        return asyncio.run(coro)
    except RuntimeError:
        return asyncio.run(coro)
    except ImportError:
        print("⚠️ Running in sync mode. Install nest_asyncio for better performance.")
        return None

The run_async_safely function helps asynchronous functions run reliably across different Python environments, such as standard scripts and interactive notebooks. When an event loop is already running, it patches it with nest_asyncio; if nest_asyncio is not installed, it handles the situation gracefully by informing the user and returning None instead of raising an error.
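For environments where nest_asyncio is not available, a different technique can cover the same need: run the coroutine on a fresh event loop in a worker thread. The sketch below is an alternative to run_async_safely, not the notebook's approach, and the names `run_coro_blocking` and `add` are hypothetical. Note that it blocks the calling thread until the coroutine finishes.

```python
import asyncio
from concurrent.futures import ThreadPoolExecutor


def run_coro_blocking(coro):
    """Run a coroutine to completion from synchronous code (hypothetical helper)."""
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        # No loop is running in this thread, so asyncio.run is safe.
        return asyncio.run(coro)
    # A loop is already running (e.g. in a notebook): run the coroutine on a
    # fresh loop in a worker thread and block until it finishes.
    with ThreadPoolExecutor(max_workers=1) as pool:
        return pool.submit(asyncio.run, coro).result()


async def add(a, b):
    await asyncio.sleep(0)  # stand-in for real asynchronous work
    return a + b


print(run_coro_blocking(add(1, 2)))
```

Because the worker thread owns its own loop, this needs only the standard library. The trade-off is that the outer loop cannot make progress while it waits.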

def main():
    """Interactive Web Intelligence Demo"""
    console = Console()
    console.print(Panel("🚀 Web Intelligence Agent", style="bold cyan", subtitle="Powered by Tavily & Gemini"))

    config = WebIntelligence()
    agent = SmartWebAgent(config)

    demo_urls = [
        "https://en.wikipedia.org/wiki/Artificial_intelligence",
        "https://en.wikipedia.org/wiki/Machine_learning",
        "https://en.wikipedia.org/wiki/Quantum_computing"
    ]

    while True:
        console.print("\n" + "="*60)
        console.print("🎯 Choose an option:", style="bold yellow")
        console.print("1. Extract content from URLs")
        console.print("2. AI-powered analysis")
        console.print("3. Demo with sample URLs")
        console.print("4. Exit")

        choice = input("\nEnter choice (1-4): ").strip()

        if choice == "1":
            urls_input = input("Enter URLs (comma-separated): ")
            # Build a real list and skip empty entries
            urls = [url.strip() for url in urls_input.split(",") if url.strip()]
            results = agent.extract_content(urls)
            agent.display_results(results)

        elif choice == "2":
            query = input("Enter your analysis query: ")
            urls_input = input("Enter URLs to analyze (optional, comma-separated): ")
            urls = [url.strip() for url in urls_input.split(",") if url.strip()] if urls_input.strip() else None

            try:
                response = agent.analyze_with_ai(query, urls)
                console.print(Panel(Markdown(response), title="🤖 AI Analysis", border_style="blue"))
            except Exception as e:
                console.print(f"❌ Analysis failed: {e}", style="bold red")

        elif choice == "3":
            console.print("🎬 Running demo with AI & Quantum Computing URLs...")

            results = agent.extract_content(demo_urls)
            agent.display_results(results)

            response = agent.analyze_with_ai(
                "Compare AI, ML, and Quantum Computing. What are the key relationships?",
                demo_urls
            )
            console.print(Panel(Markdown(response), title="🧠 Comparative Analysis", border_style="magenta"))

        elif choice == "4":
            console.print("👋 Goodbye!", style="bold green")
            break
        else:
            console.print("❌ Invalid choice!", style="bold red")


if __name__ == "__main__":
    main()

The main function provides an interactive command-line demonstration of the agent's capabilities. It presents users with an intuitive menu that lets them extract web content from custom URLs, run AI-driven analyses on selected topics, or explore a predefined demo covering AI, machine learning, and quantum computing. Rich visual formatting improves user engagement and makes complex web analysis tasks simple and user-friendly.
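The menu accepts URLs as free-form, comma-separated text, and the configuration defines max_urls without the shown code ever enforcing it. As a minimal sketch of how the input could be cleaned and capped before extraction, the hypothetical helper `parse_urls` below (not part of the original notebook) keeps only absolute http(s) URLs, drops duplicates, and limits the list length:

```python
from typing import List
from urllib.parse import urlparse


def parse_urls(raw: str, max_urls: int = 10) -> List[str]:
    """Split comma-separated input into a clean, capped URL list (hypothetical helper)."""
    urls: List[str] = []
    for part in raw.split(","):
        url = part.strip()
        parsed = urlparse(url)
        # Keep only absolute http(s) URLs and skip duplicates.
        if parsed.scheme in ("http", "https") and parsed.netloc and url not in urls:
            urls.append(url)
    return urls[:max_urls]


print(parse_urls(" https://a.com , ,ftp://x, https://a.com, http://b.org "))
```

In the menu loop, a call such as `parse_urls(urls_input, config.max_urls)` could replace the inline list comprehension, assuming the cap in WebIntelligence is meant to apply to user input.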

In conclusion, by following this tutorial, we have built a Tavily-enhanced web intelligence agent capable of sophisticated web content extraction and intelligent analysis using Google's Gemini AI. Through structured data extraction, dynamic AI queries, and visually appealing results, the agent streamlines research tasks, enriches data analysis workflows, and supports deeper insight into web content. With this foundation, we are equipped to extend the agent further, customize it for specific use cases, and harness the combined power of AI and web intelligence to improve productivity and decision-making in our projects.

Check out the Notebook here. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.