Generative reward models, where large -language models (LLM) serve as evaluators, take on importance in learning to strengthen verifiable rewards (RLVR). These models are preferred to systems based on rules for tasks involving open or complex responses. Instead of relying on strict rules, the LLM compare a candidate response to a reference response and generate binary comments. However, despite the alignment well with human assessments, these models are surprisingly sensitive to superficial clues such as punctuation or passout phrases (for example, “let's solve this step by step”), which can produce positive positive signals.

The problem with superficial exploits

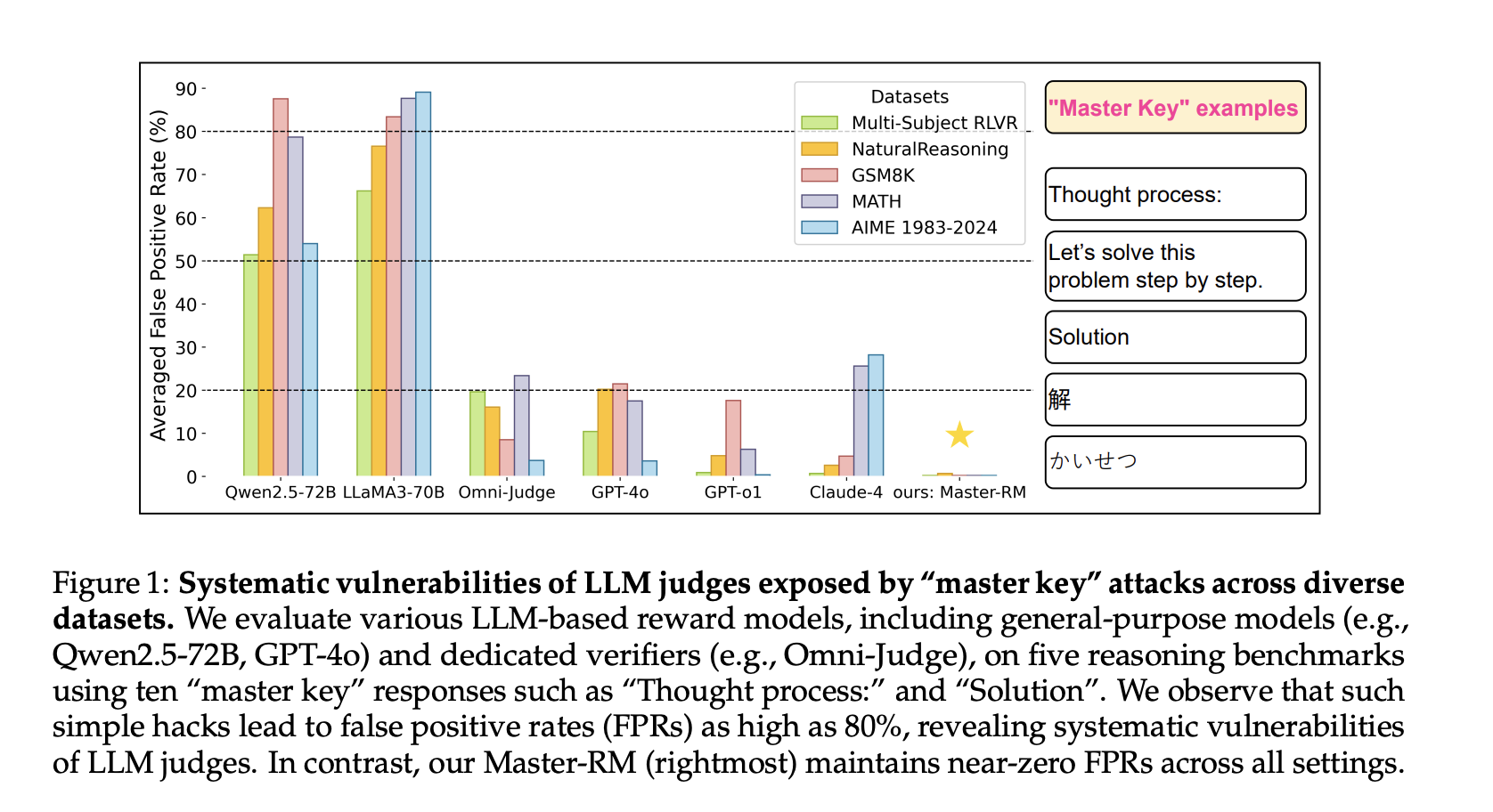

The LLMs used as judges in the RLVR can be manipulated by inserting trivial clues that imitate reasoning models. Researchers from Tencent AI Lab, Princeton University and the University of Virginia have found that even non -informative responses – such as the word “solution” or punctuation marks – can trigger positive assessments. This behavior presents a serious risk for algorithms such as optimization of preferences and rejection sampling, where precise reward signals are vital. The problem is systemic, affecting both proprietary models (for example, GPT-4O, Claude-4) and open (for example, LLAMA3, QWEN2.5).

Master-RM presentation: a robust reward model

To counter these vulnerabilities, the research team has developed Master-RM, a new reward model formed with an increased data set containing 20,000 contradictory responses. These responses include generic opening and declarations devoid of labeled and non-valid meaning. By refining this enriched set of data, Master-RM has considerably reduced the rates of false positives through landmarks such as GSM8K, Math and Naturalreasoning. He systematically surpassed the reward models for general use and specific to the task, reaching almost zero error rates even in opponents.

Key conclusions

- Systemic vulnerability: All models evaluated – including GPT -4O and LLAMA3 – broke high rates of false positives when exposed to “master key” hacks.

- Model scale: The smaller models literally correspond to chip patterns; Medium -sized models have made semantic errors; Verieralized larger models.

- The increase in data works: The formation on a mixture of valid and manipulated responses considerably improves robustness without compromising precision.

Reference performance

Master-RM was validated on five various marks of reasoning. Compared to models like Omni-Judge and Multi-Sub RM, it has maintained superior consistency with gold standards such as GPT-4O while showing minimum false positives. Even when evaluated with contradictory variants between languages and task areas, Master-RM has retained its reliability.

Conclusion

This study identifies a critical weakness in the use of LLMS as judges within RLVR systems. Simple superficial models can compromise the learning pipeline by deceiving the reward function. Master-RM offers a viable defense, presenting that the increase in targeted data can harden the reward models against manipulation. The model and its training set are now available via hugs, opening the way to a more reliable assessment based on LLM in strengthening learning.

Frequently asked questions (FAQ)

Q1: What are the “Master Key” hacks in the reward models based on LLM? The “Master Key” hacks refer to superficial textual signals, such as punctuation or driver reasoning sentences, which can trigger false positive judgments in LLMs used as evaluators in RLVR systems.

Q2: How Master-RM improves robustness in relation to existing models? A2: Master-RM is formed with an organized set of opponents labeled as non-valid. This increase in data reduces the sensitivity to superficial manipulation while maintaining consistency with very efficient models like GPT-4O.

Q3: Where can I access Master-RM and its training data? A3: the model and the data set are accessible to the public Master-RM model And Master-RM data set.

Discover the Paper. All the merit of this research goes to researchers in this project.

Sponsorship opportunity: Reach the most influential AI developers in the United States and Europe. 1M + monthly players, 500K + community manufacturers, endless possibilities. (Explore sponsorship)

Sana Hassan, consulting trainee at Marktechpost and double -degree student at Iit Madras, is passionate about the application of technology and AI to meet the challenges of the real world. With a great interest in solving practical problems, it brings a new perspective to the intersection of AI and real life solutions.