In this tutorial, we explore how to use Fireworks AI to build intelligent, tool-enabled agents with LangChain. Starting with installing the langchain-fireworks package and configuring your Fireworks API key, we will set up a ChatFireworks LLM instance, powered by the high-performance llama-v3-70b-instruct model, and integrate it with LangChain's agent framework. Along the way, we will define custom tools such as a URL fetcher for scraping webpage text and an SQL generator for converting plain-language requirements into executable BigQuery queries. By the end, we will have a fully functional ReAct-style agent that can dynamically invoke tools, maintain conversational memory, and deliver sophisticated end-to-end workflows powered by Fireworks.

!pip install -qU langchain langchain-fireworks requests beautifulsoup4

We bootstrap the environment by installing all the required Python packages, including langchain, its Fireworks integration, and common utilities such as requests and beautifulsoup4. This ensures that we have the latest versions of all the components needed to run the rest of the notebook smoothly.

import requests
from bs4 import BeautifulSoup
from langchain.tools import BaseTool
from langchain.agents import initialize_agent, AgentType
from langchain_fireworks import ChatFireworks
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain.memory import ConversationBufferMemory
import getpass
import os

We bring in all the necessary imports: HTTP clients (requests, BeautifulSoup), the LangChain agent framework (BaseTool, initialize_agent, AgentType), the Fireworks-powered LLM (ChatFireworks), the prompt and memory utilities (LLMChain, PromptTemplate, ConversationBufferMemory), and getpass and os for secure input and environment management.

os.environ["FIREWORKS_API_KEY"] = getpass.getpass("Enter your Fireworks API key: ")

Now, we prompt you to enter your Fireworks API key securely via getpass and store it in the environment. This step ensures that subsequent calls to the ChatFireworks model are authenticated without exposing your key in plain text.
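In a notebook that is re-run often, it can be convenient to prompt only when the key is not already set. The following is a minimal sketch under that assumption; ensure_api_key is a hypothetical helper written for this tutorial (it is not part of LangChain or Fireworks), and the prompt function is a parameter so it can be swapped out.

```python
import getpass
import os
from typing import Callable


def ensure_api_key(
    env_var: str = "FIREWORKS_API_KEY",
    prompt_fn: Callable[[str], str] = getpass.getpass,
) -> str:
    """Return the key stored in env_var, prompting for it only if it is missing.

    Hypothetical helper for this tutorial, not a library function.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Nothing usable in the environment yet: ask without echoing the input.
        key = prompt_fn(f"Enter your {env_var}: ").strip()
        if not key:
            raise ValueError(f"{env_var} must not be empty")
        os.environ[env_var] = key
    return key
```

Calling ensure_api_key() with no arguments would behave like the getpass line above on the first run and return the stored value on later runs.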

llm = ChatFireworks(
    model="accounts/fireworks/models/llama-v3-70b-instruct",
    temperature=0.6,   # moderate sampling randomness
    max_tokens=1024,   # cap on the number of generated tokens
    stop=["\n\n"],     # stop generating at the first blank line
)

We demonstrate how to instantiate a ChatFireworks LLM configured for instruction following, using the llama-v3-70b-instruct model, a moderate temperature, and a token limit, so that you can start sending prompts to the model right away.

prompt = [
    {"role": "system", "content": "You are an expert data-scientist assistant."},
    {"role": "user", "content": "Analyze the sentiment of this review:\n\n"
                                 "\"The new movie was breathtaking, but a bit too long.\""},
]
resp = llm.invoke(prompt)
print("Sentiment Analysis →", resp.content)

Next, we demonstrate a simple sentiment-analysis example: we build a structured prompt as a list of role-annotated messages, call llm.invoke(), and print the model's interpretation of the sentiment in the provided movie review.

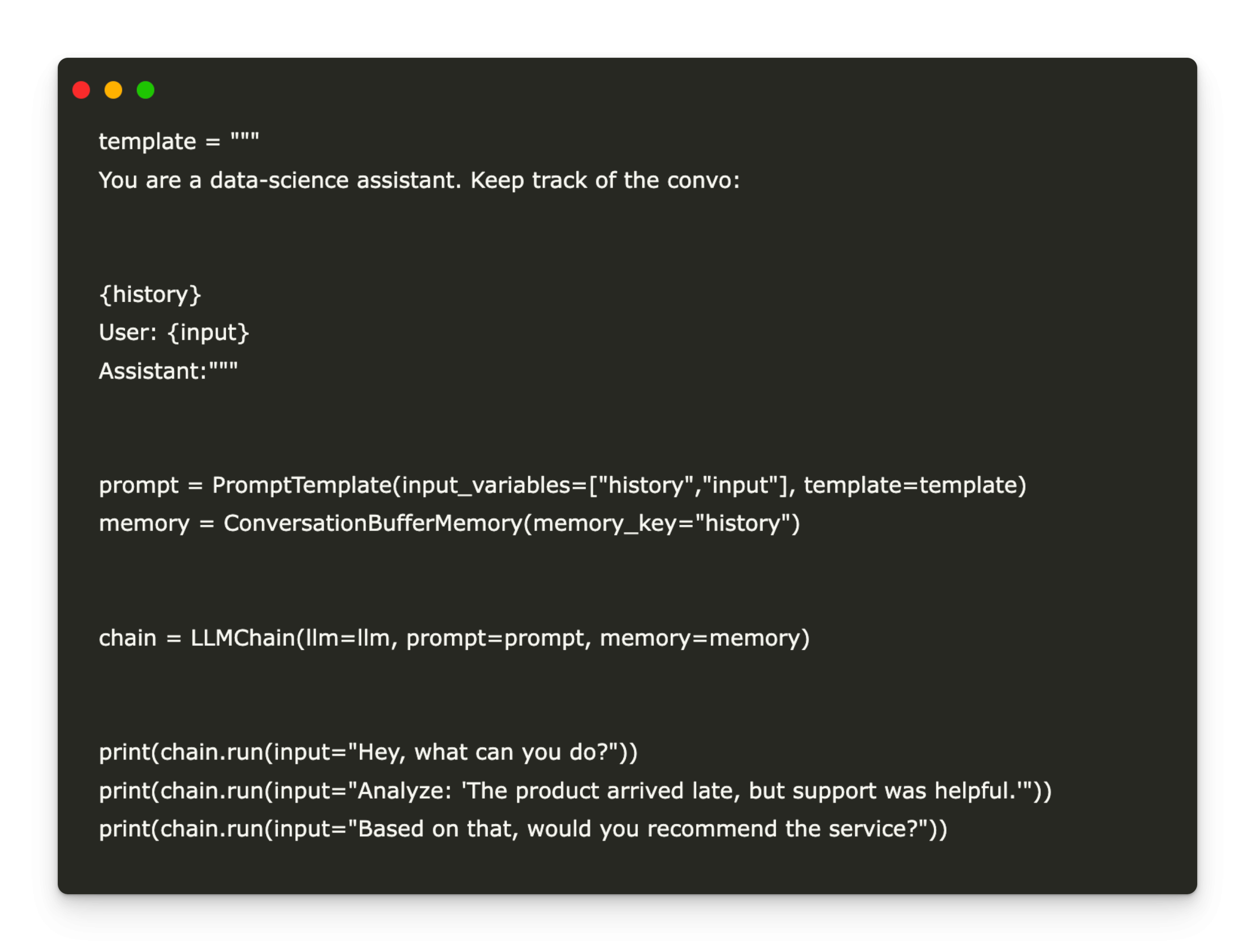
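The role-annotated message list used in the sentiment example can also be assembled by a small helper, which keeps the system and user parts separate when you try several reviews. This is only a sketch: build_messages is a hypothetical name, and the dict format with role and content keys simply mirrors what the example passes to llm.invoke().

```python
from typing import Dict, List


def build_messages(user_text: str, system_text: str = "") -> List[Dict[str, str]]:
    """Return a chat prompt as a list of {"role", "content"} dicts.

    Hypothetical helper; the system message is added only when provided.
    """
    messages: List[Dict[str, str]] = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return messages


review = "The new movie was breathtaking, but a bit too long."
messages = build_messages(
    f'Analyze the sentiment of this review:\n\n"{review}"',
    system_text="You are an expert data-scientist assistant.",
)
# messages could then be passed to llm.invoke(messages) as in the example.
```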

template = """
You are a data-science assistant. Keep track of the convo:

{history}
User: {input}
Assistant:"""

prompt = PromptTemplate(input_variables=["history", "input"], template=template)
memory = ConversationBufferMemory(memory_key="history")
chain = LLMChain(llm=llm, prompt=prompt, memory=memory)

print(chain.run(input="Hey, what can you do?"))
print(chain.run(input="Analyze: 'The product arrived late, but support was helpful.'"))
print(chain.run(input="Based on that, would you recommend the service?"))

We illustrate how to add conversational memory, which involves defining a prompt template that incorporates past exchanges, setting up a ConversationBufferMemory, and chaining everything together with LLMChain. Running a few sample inputs shows how the model retains context across turns.
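To make the role of the {history} placeholder concrete, here is a toy illustration of what a buffer-style memory does conceptually: it stores past turns and renders them as text that is substituted into the template before each call. SimpleBufferMemory and build_prompt are hypothetical names for this sketch, the stored reply is made up, and this is not how LangChain implements ConversationBufferMemory internally.

```python
from typing import List, Tuple

TEMPLATE = """You are a data-science assistant. Keep track of the convo:

{history}
User: {input}
Assistant:"""


class SimpleBufferMemory:
    """Toy stand-in for a conversation buffer: keeps every turn verbatim."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def save(self, user_text: str, assistant_text: str) -> None:
        # Record one completed exchange.
        self.turns.append((user_text, assistant_text))

    def render(self) -> str:
        # Flatten all stored turns into the text that fills {history}.
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)


def build_prompt(memory: SimpleBufferMemory, user_input: str) -> str:
    """Fill the template with the rendered history and the new user input."""
    return TEMPLATE.format(history=memory.render(), input=user_input)


memory_demo = SimpleBufferMemory()
# The assistant reply below is a placeholder, not real model output.
memory_demo.save("Hey, what can you do?", "I can help analyze data.")
prompt_text = build_prompt(
    memory_demo, "Analyze: 'The product arrived late, but support was helpful.'"
)
print(prompt_text)
```

Because every turn is kept verbatim, the prompt grows with each exchange, which is worth keeping in mind given the token limit set on the model.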

class FetchURLTool(BaseTool):
    name: str = "fetch_url"
    description: str = "Fetch the main text (first 500 chars) from a webpage."

    def _run(self, url: str) -> str:
        resp = requests.get(url, timeout=10)
        doc = BeautifulSoup(resp.text, "html.parser")
        # Keep the text of the first five <p> elements.
        paras = [p.get_text() for p in doc.find_all("p")][:5]
        return "\n\n".join(paras)

    async def _arun(self, url: str) -> str:
        # Async execution is not supported in this example.
        raise NotImplementedError

We define a custom FetchURLTool by subclassing BaseTool. This tool retrieves the first few paragraphs of any URL using requests and BeautifulSoup, making it easy for your agent to pull in live web content.
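The tool's description mentions the first 500 characters, whereas its _run method returns the first five paragraphs, which can be much longer on some pages. If you want the output to respect both limits, a small post-processing step is one option. The sketch below assumes a hypothetical helper named clip_text; it works on plain strings, so it does not depend on requests or BeautifulSoup.

```python
from typing import Iterable, List


def clip_text(
    paragraphs: Iterable[str], max_paragraphs: int = 5, max_chars: int = 500
) -> str:
    """Join the first non-empty paragraphs and cap the result at max_chars.

    Hypothetical helper for this tutorial, not part of any library.
    """
    cleaned: List[str] = []
    for text in paragraphs:
        text = " ".join(text.split())  # collapse runs of whitespace and newlines
        if text:
            cleaned.append(text)
        if len(cleaned) == max_paragraphs:
            break
    return "\n\n".join(cleaned)[:max_chars]
```

Inside _run, the return statement could then become return clip_text(p.get_text() for p in doc.find_all("p")), which also skips empty paragraphs before counting.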

class GenerateSQLTool(BaseTool):
    name: str = "generate_sql"
    description: str = "Generate a BigQuery SQL query (with comments) from a text description."

    def _run(self, text: str) -> str:
        # Frame the requirement as SQL comments so the model answers with a query.
        prompt = f"""
-- Requirement:
-- {text}
-- Write a BigQuery SQL query (with comments) to satisfy the above.
"""
        return llm.invoke([{"role": "user", "content": prompt}]).content

    async def _arun(self, text: str) -> str:
        # Async execution is not supported in this example.
        raise NotImplementedError

tools = [FetchURLTool(), GenerateSQLTool()]

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,  # ReAct-style agent that picks tools by description
    verbose=True,
)

result = agent.run(
    "Fetch https://en.wikipedia.org/wiki/ChatGPT "
    "and then generate a BigQuery SQL query that counts how many times "
    "the word 'model' appears in the page text."
)
print("\nGenerated SQL:\n", result)

Finally, GenerateSQLTool is another BaseTool subclass that wraps the LLM to turn plain-English requirements into commented BigQuery SQL. We then wire both tools into a ReAct-style agent via initialize_agent, run a combined fetch-and-generate example, and print the resulting SQL query.
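With verbose=True, a ReAct-style agent prints a trace of Thought, Action, Action Input, and Observation steps. The sketch below is a simplified, offline illustration of a single step of that loop: it parses an action out of model text and dispatches it to a tool by name. The names parse_action, run_step, and demo_tools are hypothetical, the tools are stubs, and this is not LangChain's actual parser or executor.

```python
import re
from typing import Callable, Dict, Optional, Tuple

# Matches an "Action: <tool>" line followed by an "Action Input: <argument>" line.
ACTION_RE = re.compile(
    r"Action:\s*(?P<tool>[^\n]+?)\s*\nAction Input:\s*(?P<arg>[^\n]*)"
)


def parse_action(llm_output: str) -> Optional[Tuple[str, str]]:
    """Return (tool_name, tool_input) if the text requests a tool, else None."""
    match = ACTION_RE.search(llm_output)
    if match is None:
        return None
    return match.group("tool").strip(), match.group("arg").strip().strip('"')


def run_step(llm_output: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Execute the requested tool and format its result as an observation."""
    action = parse_action(llm_output)
    if action is None:
        return "No action found; treat the text as the final answer."
    name, arg = action
    if name not in tools:
        return f"Unknown tool: {name}"
    return f"Observation: {tools[name](arg)}"


# Stub tools so the sketch runs offline; real tools would hit the network or the LLM.
demo_tools: Dict[str, Callable[[str], str]] = {
    "fetch_url": lambda url: f"<text of {url}>",
    "generate_sql": lambda text: f"-- SQL for: {text}",
}

step = (
    "Thought: I need the page text first.\n"
    "Action: fetch_url\n"
    "Action Input: https://en.wikipedia.org/wiki/ChatGPT"
)
print(run_step(step, demo_tools))
```

In the real agent, the observation is appended to the prompt and the model is called again, repeating until it produces a final answer instead of another action.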

In conclusion, we have integrated Fireworks AI with LangChain's modular tooling and agent ecosystem, unlocking a versatile platform for building AI applications that go beyond simple text generation. We can extend the agent's capabilities by adding domain-specific tools, customizing prompts, and fine-tuning memory behavior, all while leveraging Fireworks' scalable inference engine. As next steps, explore advanced features such as function calling, chaining multiple agents, or incorporating vector-based retrieval to build even more dynamic and context-aware assistants.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.