In this tutorial, we show how to build an intelligent AI assistant by integrating LangChain, Gemini 2.0 Flash, and the Jina Search tool. By combining the capabilities of a powerful large language model (LLM) with an external search API, we create an assistant that can provide up-to-date information with citations. This step-by-step tutorial walks through configuring the API keys, installing the necessary libraries, binding tools to the Gemini model, and building a custom LangChain chain that dynamically calls external tools when the model needs fresh or specific information. By the end of this tutorial, we will have a fully functional, interactive AI assistant that can respond to user queries with accurate, current, and well-sourced answers.

%pip install --quiet -U "langchain-community>=0.2.16" langchain langchain-google-genai

We install the Python packages required for this project: the LangChain framework for building AI applications, the LangChain Community tools (version 0.2.16 or later), and LangChain's integration with Google Gemini models. These packages enable seamless use of Gemini models and external tools within LangChain pipelines.
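After installing, it can help to confirm which versions actually ended up in the environment. The snippet below is a minimal sketch using only the standard library; the helper name `installed_version` is our own and not part of LangChain.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Report the three packages this tutorial installs.
for name in ("langchain", "langchain-community", "langchain-google-genai"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

If `langchain-community` reports a version below 0.2.16, rerunning the install command above should upgrade it.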

import getpass
import os
import json
from typing import Dict, Any

We import the essential modules for the project. getpass lets us enter API keys securely without echoing them to the screen, while os helps manage environment variables and file paths. json handles JSON data structures, and typing provides type hints for variables such as dictionaries and function arguments, which improves code readability and maintainability.

if not os.environ.get("JINA_API_KEY"):
    os.environ["JINA_API_KEY"] = getpass.getpass("Enter your Jina API key: ")

if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter your Google/Gemini API key: ")

We ensure that the API keys required for Jina and Google Gemini are set as environment variables. If a key is not already defined in the environment, the script prompts the user to enter it securely with the getpass module, which keeps the key hidden from view. This approach gives the libraries access to both services without hardcoding sensitive information in the code.
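The same "prompt only when missing" pattern can be wrapped in a small helper so it is easy to reuse and to test. This is a sketch under our own assumptions: `ensure_api_key`, `fake_prompt`, and `demo_env` are hypothetical names, and the demo uses a plain dictionary rather than the real environment.

```python
import os
from typing import Callable, MutableMapping


def ensure_api_key(
    name: str,
    prompt: Callable[[str], str],
    env: MutableMapping[str, str] = os.environ,
) -> str:
    """Return env[name], asking for it via `prompt` only when it is missing or empty."""
    if not env.get(name):
        env[name] = prompt(f"Enter your {name}: ")
    return env[name]


# Demo with a stand-in prompt and a plain dict instead of the real environment.
demo_env = {"GOOGLE_API_KEY": "already-set"}
asked = []


def fake_prompt(message: str) -> str:
    asked.append(message)  # record that the user would have been prompted
    return "typed-by-user"


jina_key = ensure_api_key("JINA_API_KEY", fake_prompt, demo_env)
google_key = ensure_api_key("GOOGLE_API_KEY", fake_prompt, demo_env)
```

In the notebook itself, passing `getpass.getpass` as `prompt` and leaving `env` at its default would behave like the two `if` blocks above.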

from langchain_community.tools import JinaSearch
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnableConfig, chain
from langchain_core.messages import HumanMessage, AIMessage, ToolMessage

print("🔧 Setting up tools and model...")

We import the key modules and classes from the LangChain ecosystem: the JinaSearch tool for web search, the ChatGoogleGenerativeAI model for accessing Google Gemini, and the essential LangChain Core classes, including ChatPromptTemplate, RunnableConfig, and the message types (HumanMessage, AIMessage, and ToolMessage). Together, these components let us integrate external tools with Gemini for dynamic, AI-driven information retrieval. The print statement confirms that the setup process has started.

search_tool = JinaSearch()
print(f"✅ Jina Search tool initialized: {search_tool.name}")

print("\n🔍 Testing Jina Search directly:")
direct_search_result = search_tool.invoke({"query": "what is langgraph"})
print(f"Direct search result preview: {direct_search_result[:200]}...")

We initialize the Jina Search tool by creating a JinaSearch() instance and confirming that it is ready for use. The tool handles web search queries within the LangChain ecosystem. The script then runs a direct test query, "what is langgraph", using the invoke method and prints a preview of the first 200 characters of the result. This step verifies that the search tool works properly before we integrate it into the larger AI assistant workflow.
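Slicing the first 200 characters works for a quick check, but the preview can cut off mid-record. The sketch below assumes the tool returns a JSON string containing a list of objects with `title` and `link` keys; that shape is an assumption, so the helper falls back to plain truncation when the result does not match. The name `preview_results` and the sample data are hypothetical.

```python
import json
from typing import List


def preview_results(raw: str, limit: int = 3, width: int = 200) -> List[str]:
    """Return up to `limit` short preview lines from a search result string."""
    try:
        parsed = json.loads(raw)
    except (TypeError, ValueError):
        parsed = None
    if not isinstance(parsed, list):
        # Not a JSON list: fall back to a plain character-based preview.
        text = str(raw)
        return [text[:width] + ("..." if len(text) > width else "")]
    lines = []
    for item in parsed[:limit]:
        if isinstance(item, dict):
            title = item.get("title", "(no title)")
            link = item.get("link", "(no link)")
            lines.append(f"{title} <{link}>")
        else:
            lines.append(str(item)[:width])
    return lines


# Made-up sample data standing in for a real search response.
sample = json.dumps([
    {"title": "Example page", "link": "https://example.com/a", "snippet": "..."},
    {"title": "Another page", "link": "https://example.com/b", "snippet": "..."},
])
structured = preview_results(sample)
fallback = preview_results("plain text result")
```

Calling `preview_results(direct_search_result)` in the notebook would then print one line per hit if the assumed shape holds, and a truncated string otherwise.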

gemini_model = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash",
    temperature=0.1,
    convert_system_message_to_human=True
)
print("✅ Gemini model initialized")

We initialize the Gemini 2.0 Flash model using LangChain's ChatGoogleGenerativeAI class. The model is configured with a low temperature (0.1) for more deterministic responses, and the convert_system_message_to_human=True parameter ensures that system prompts are handled properly by passing them to the Gemini API as human messages. The final print statement confirms that the Gemini model is ready for use.
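To see why a low temperature makes output more deterministic, consider the textbook softmax-with-temperature formula. The sketch below is purely illustrative: the logits are made up, and it is not a description of how Gemini samples tokens internally.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities, sharpening them as temperature shrinks."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
probs_low = softmax_with_temperature(toy_logits, 0.1)
probs_default = softmax_with_temperature(toy_logits, 1.0)
```

At temperature 0.1 almost all probability mass lands on the top-scoring candidate, whereas at 1.0 the alternatives keep a noticeable share, which is why lower values give more repeatable answers.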

detailed_prompt = ChatPromptTemplate.from_messages([
    ("system", """You are an intelligent assistant with access to web search capabilities.
When users ask questions, you can use the Jina search tool to find current information.

Instructions:
1. If the question requires recent or specific information, use the search tool
2. Provide comprehensive answers based on the search results
3. Always cite your sources when using search results
4. Be helpful and informative in your responses"""),
    ("human", "{user_input}"),
    ("placeholder", "{messages}"),
])

We define a prompt template using ChatPromptTemplate.from_messages() that guides the AI's behavior. It includes a system message describing the assistant's role, a human message slot for user queries, and a placeholder for the messages produced during tool calls (the model's tool request and the tool results). This structured prompt ensures that the AI gives helpful, informative, and well-sourced answers while integrating search results into the conversation.
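The role of the placeholder is easier to see with a plain-Python stand-in. The sketch below mimics how the template expands into a message list; it assumes that the placeholder contributes nothing when no `messages` are supplied, which is what lets the chain be invoked with only `user_input` on the first pass. `render_messages` and the shortened `SYSTEM_TEXT` are hypothetical and are not LangChain APIs.

```python
from typing import List, Optional, Tuple

Message = Tuple[str, str]  # (role, content)

# Shortened stand-in for the system prompt defined above.
SYSTEM_TEXT = "You are an intelligent assistant with access to web search capabilities."


def render_messages(user_input: str, messages: Optional[List[Message]] = None) -> List[Message]:
    """Expand the three-part template into a concrete list of messages."""
    rendered = [
        ("system", SYSTEM_TEXT),
        ("human", "{user_input}".format(user_input=user_input)),
    ]
    # The placeholder slot: empty on the first pass, tool traffic on the second.
    rendered.extend(messages or [])
    return rendered


first_pass = render_messages("what is langgraph")
second_pass = render_messages(
    "what is langgraph",
    [("ai", "<tool call request>"), ("tool", "<search results>")],
)
```

On the first pass the model sees two messages; on the second it also sees its own tool request followed by the search results, which is what allows it to write a cited answer.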

gemini_with_tools = gemini_model.bind_tools([search_tool])
print("✅ Tools bound to Gemini model")

main_chain = detailed_prompt | gemini_with_tools

def format_tool_result(tool_call: Dict[str, Any], tool_result: str) -> str:
    """Format tool results for better readability"""
    return f"Search Results for '{tool_call['args']['query']}':\n{tool_result[:800]}..."

We bind the Jina Search tool to the Gemini model using bind_tools(), which allows the model to request the search tool when needed. main_chain combines the structured prompt template with the tool-enabled Gemini model, creating a single workflow that handles user input and dynamic tool calls. In addition, the format_tool_result function formats search results for clear, readable display by truncating them to 800 characters, so users can easily read the output of search queries.
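To make the shape of a tool call concrete, here is a self-contained sketch that feeds a hand-written tool-call dictionary through the same formatting function. The dictionary values (tool name, id, query) are illustrative assumptions, not output captured from Gemini.

```python
from typing import Any, Dict


def format_tool_result(tool_call: Dict[str, Any], tool_result: str) -> str:
    """Format tool results for better readability."""
    return f"Search Results for '{tool_call['args']['query']}':\n{tool_result[:800]}..."


# Hand-written example of the dictionary shape a tool call takes.
example_tool_call = {
    "name": "jina_search",  # assumed tool name, for illustration only
    "args": {"query": "what is langgraph"},
    "id": "call_123",  # made-up identifier
    "type": "tool_call",
}

long_result = "LangGraph is a library for building stateful agents. " * 40
formatted = format_tool_result(example_tool_call, long_result)
```

Note that the function always appends "..." even when the result is shorter than 800 characters; that is cosmetic, but worth knowing when reading the output.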

@chain

def enhanced_search_chain(user_input: str, config: RunnableConfig):

"""

Enhanced chain that handles tool calls and provides detailed responses

"""

print(f"\n🤖 Processing query: '{user_input}'")

input_data = {"user_input": user_input}

print("📤 Sending to Gemini...")

ai_response = main_chain.invoke(input_data, config=config)

if ai_response.tool_calls:

print(f"🛠️ AI requested {len(ai_response.tool_calls)} tool call(s)")

tool_messages = ()

for i, tool_call in enumerate(ai_response.tool_calls):

print(f" 🔍 Executing search {i+1}: {tool_call('args')('query')}")

tool_result = search_tool.invoke(tool_call)

tool_msg = ToolMessage(

content=tool_result,

tool_call_id=tool_call('id')

)

tool_messages.append(tool_msg)

print("📥 Getting final response with search results...")

final_input = {

**input_data,

"messages": (ai_response) + tool_messages

}

final_response = main_chain.invoke(final_input, config=config)

return final_response

else:

print("ℹ️ No tool calls needed")

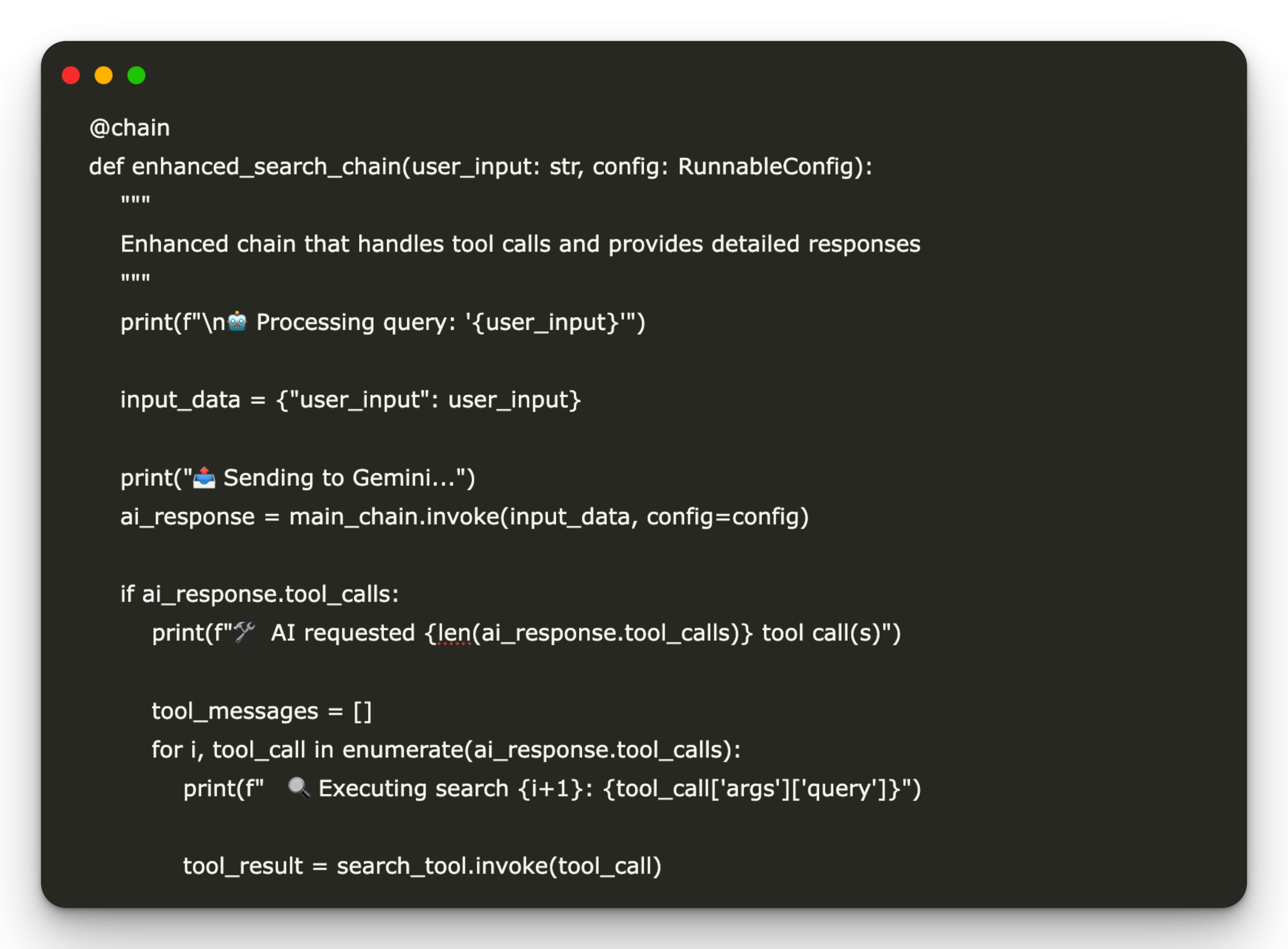

return ai_responseWe define the reinforcement_search_chain using the @Chain de Langchain decorator, allowing him to manage user requests with dynamic use of tools. It takes a user input and a configuration object, passes the entry via the main channel (which includes the prompt and the Gemini with tools), and checks if AI suggests tools (for example, web search via Jina). If tool calls are present, he performs research, creates Tolmessage objects and reinvests the chain with the results of the tool for a final and enriched response. If no tool call is made, it directly returns the AI response.
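The control flow of this chain can be exercised without any API keys by swapping in stand-ins for the model and the tool. The sketch below is a simplified simulation using plain dictionaries instead of LangChain message objects; `run_with_tools`, `fake_model`, and `fake_tool` are hypothetical names.

```python
from typing import Any, Callable, Dict


def run_with_tools(
    user_input: str,
    model: Callable[[Dict[str, Any]], Dict[str, Any]],
    tool: Callable[[Dict[str, Any]], str],
) -> Dict[str, Any]:
    """Call the model once; if it requests tools, run them and call it again."""
    input_data = {"user_input": user_input}
    first = model(input_data)
    if not first.get("tool_calls"):
        return first  # the model answered directly
    tool_messages = [
        {"role": "tool", "content": tool(call["args"]), "tool_call_id": call["id"]}
        for call in first["tool_calls"]
    ]
    # Second pass: the model sees its own request plus the tool results.
    return model({**input_data, "messages": [first] + tool_messages})


def fake_model(data: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in model: asks for a search first, then answers from the results."""
    if "messages" not in data:
        call = {"name": "search", "args": {"query": data["user_input"]}, "id": "call_1"}
        return {"role": "ai", "content": "", "tool_calls": [call]}
    outputs = [m["content"] for m in data["messages"] if m["role"] == "tool"]
    return {"role": "ai", "content": "Answer based on: " + "; ".join(outputs), "tool_calls": []}


def fake_tool(args: Dict[str, Any]) -> str:
    return f"results for {args['query']}"


answer = run_with_tools("what is langgraph", fake_model, fake_tool)
direct = run_with_tools("hi", lambda d: {"role": "ai", "content": "hello", "tool_calls": []}, fake_tool)
```

The simulation makes one design point visible: the chain performs at most one round of tool calls. If the second response requested further searches, neither this sketch nor the chain above would execute them.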

def test_search_chain():
    """Test the search chain with various queries"""
    test_queries = [
        "what is langgraph",
        "latest developments in AI for 2025",
        "how does langchain work with different LLMs"
    ]

    print("\n" + "="*60)
    print("🧪 TESTING ENHANCED SEARCH CHAIN")
    print("="*60)

    for i, query in enumerate(test_queries, 1):
        print(f"\n📝 Test {i}: {query}")
        print("-" * 50)

        try:
            response = enhanced_search_chain.invoke(query)
            print(f"✅ Response: {response.content[:300]}...")

            if hasattr(response, 'tool_calls') and response.tool_calls:
                print(f"🛠️ Used {len(response.tool_calls)} tool call(s)")

        except Exception as e:
            print(f"❌ Error: {str(e)}")

        print("-" * 50)

The test_search_chain() function validates the entire AI assistant setup by running a series of test queries through enhanced_search_chain. It defines a list of varied test prompts covering tools, AI topics, and LangChain integrations, then prints a 300-character preview of each response and reports any tool calls attached to it. Errors are caught per query, so one failing request does not stop the remaining tests. This helps verify that the AI can trigger web searches, process the results, and return useful information to users.
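If you would rather inspect results programmatically than read printed output, the same per-query error handling can collect a small report. This is a sketch with a stub in place of the real chain; `run_queries` and `flaky_ask` are hypothetical names, and the simulated error message is made up.

```python
from typing import Any, Callable, Dict, List


def run_queries(ask: Callable[[str], str], queries: List[str]) -> List[Dict[str, Any]]:
    """Run each query, recording either the answer or the error message."""
    report = []
    for query in queries:
        try:
            report.append({"query": query, "ok": True, "answer": ask(query)})
        except Exception as exc:
            # One failing query must not stop the rest of the run.
            report.append({"query": query, "ok": False, "error": str(exc)})
    return report


def flaky_ask(query: str) -> str:
    """Stub that fails for one query to demonstrate the error path."""
    if "2025" in query:
        raise RuntimeError("search quota exceeded")
    return f"stub answer for: {query}"


report = run_queries(flaky_ask, ["what is langgraph", "latest developments in AI for 2025"])
failed = [entry["query"] for entry in report if not entry["ok"]]
```

With the real chain, `ask` could be something like `lambda q: enhanced_search_chain.invoke(q).content`.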

if __name__ == "__main__":
    print("\n🚀 Starting enhanced LangChain + Gemini + Jina Search demo...")
    test_search_chain()

    print("\n" + "="*60)
    print("💬 INTERACTIVE MODE - Ask me anything! (type 'quit' to exit)")
    print("="*60)

    while True:
        user_query = input("\n🗣️ Your question: ").strip()
        if user_query.lower() in ('quit', 'exit', 'bye'):
            print("👋 Goodbye!")
            break

        if user_query:
            try:
                response = enhanced_search_chain.invoke(user_query)
                print(f"\n🤖 Response:\n{response.content}")
            except Exception as e:
                print(f"❌ Error: {str(e)}")

Finally, we run the AI assistant as a script when the file is executed directly. It first calls test_search_chain() to validate the system with predefined queries and confirm that the setup works properly. It then starts an interactive mode in which users can type their own questions and receive AI-generated answers enriched with search results when needed. Empty input is ignored, and the loop continues until the user types "quit", "exit", or "bye".
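The loop logic (exit words, skipping blank input, catching errors) can be checked without a terminal by feeding it a scripted list of lines. The sketch below is a simplified variant that returns a transcript instead of printing; `chat_loop` and `EXIT_WORDS` are hypothetical names, and the lambda stands in for the real chain.

```python
from typing import Callable, Iterable, List

EXIT_WORDS = {"quit", "exit", "bye"}


def chat_loop(lines: Iterable[str], ask: Callable[[str], str]) -> List[str]:
    """Process input lines until an exit word appears; return what would be shown."""
    transcript = []
    for raw in lines:
        query = raw.strip()
        if query.lower() in EXIT_WORDS:
            transcript.append("Goodbye!")
            break
        if not query:
            continue  # ignore blank input, as the interactive loop does
        try:
            transcript.append(ask(query))
        except Exception as exc:
            transcript.append(f"Error: {exc}")
    return transcript


scripted = ["  what is langgraph ", "", "QUIT", "never reached"]
transcript = chat_loop(scripted, lambda q: f"stub answer for: {q}")
```

Because the exit check lowercases the input, "QUIT" ends the session just like "quit", and anything after it is never processed.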

In conclusion, we have built an AI assistant that combines LangChain's modular framework, the generative capabilities of Gemini 2.0 Flash, and the real-time web search functionality of Jina Search. This hybrid approach shows how AI models can extend their knowledge beyond static training data and give users timely, relevant information with cited sources. You can now extend this project by integrating additional tools, customizing the prompts, or deploying the assistant as an API or web application. This foundation is a starting point for building intelligent systems that are both powerful and contextually aware.

Check out the notebook on GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.