Researchers from the University of Georgia and Massachusetts General Hospital (MGH) have developed a specialized language model, RadiologyLlama-70B, to analyze and generate radiology reports. Built on Llama 3-70B, the model is trained on large medical datasets and performs strongly on radiological findings.

Background and significance

Radiological studies are a cornerstone of disease diagnosis, but the growing volume of imaging data puts significant pressure on radiologists. AI has the potential to relieve this burden, improving both the efficiency and the accuracy of diagnosis. RadiologyLlama-70B marks a key step towards integrating AI into clinical workflows, enabling streamlined analysis and interpretation of radiology reports.

Training data and preparation

The model was trained on a database containing more than 6.5 million medical reports from MGH patients, covering the years 2008-2018. According to the researchers, these comprehensive reports span a variety of imaging modalities and anatomical regions, including computed tomography (CT), MRI, X-rays and fluoroscopic imaging.

The data set includes:

- Detailed radiological observations (findings)

- Final impressions

- Study codes indicating imaging techniques such as CT, MRI and X-rays

After thorough preprocessing and de-identification, the final training set included 4,354,321 reports, with 2,114 additional reports reserved for testing. Rigorous cleaning methods were applied, such as removing erroneous records, to reduce the likelihood of “hallucinations” (incorrect outputs).
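The article does not describe the cleaning rules in detail, so the following is only a minimal sketch of one plausible filter: dropping records in which any of the three fields listed above is empty. The `Report` class, the `is_usable` and `clean` helpers, and the sample records are all hypothetical and invented for illustration; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """Hypothetical record layout mirroring the three fields in the dataset."""
    findings: str
    impression: str
    study_code: str


def is_usable(report: Report) -> bool:
    """Keep a report only if every field is non-empty after trimming whitespace."""
    fields = (report.findings, report.impression, report.study_code)
    return all(field.strip() for field in fields)


def clean(reports: list[Report]) -> list[Report]:
    """Return the usable reports, preserving their original order."""
    return [r for r in reports if is_usable(r)]


# Invented placeholder records, for illustration only.
sample = [
    Report("Lungs are clear.", "No acute findings.", "CT"),
    Report("", "No acute findings.", "MRI"),       # missing findings
    Report("Mild cardiomegaly.", "   ", "XR"),     # blank impression
]

kept = clean(sample)
print(f"kept {len(kept)} of {len(sample)} reports")
```

A real pipeline would likely apply further checks (duplicates, malformed text, mismatched study codes); this filter only shows the general shape of such a step.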

Technical highlights

The model was trained using two approaches:

- Full fine-tuning: Adjusting all model parameters.

- QLoRA: A low-rank adaptation method with 4-bit quantization, which makes computation more efficient.
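To give a rough sense of why the second approach is cheaper, the sketch below compares the two on paper. It uses the published Llama 3 70B attention shapes (hidden size 8192, 80 transformer blocks, 8 key/value heads of dimension 128). The adapter rank of 16, the choice to adapt only the four attention projections, and the nominal 70 billion parameter count are assumptions made for illustration; the article does not state the settings the researchers used. The memory figure also ignores quantization constants and activations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Projection:
    name: str
    d_in: int
    d_out: int


HIDDEN = 8192          # Llama 3 70B hidden size
KV_DIM = 8 * 128       # 8 key/value heads of dimension 128
N_BLOCKS = 80          # number of transformer blocks
RANK = 16              # assumed LoRA rank, not from the article
BASE_PARAMS = 70_000_000_000  # nominal parameter count

# Assumption: adapters are attached to the attention projections only.
ATTENTION = [
    Projection("q_proj", HIDDEN, HIDDEN),
    Projection("k_proj", HIDDEN, KV_DIM),
    Projection("v_proj", HIDDEN, KV_DIM),
    Projection("o_proj", HIDDEN, HIDDEN),
]


def lora_trainable_params(projections, rank, n_blocks):
    """LoRA adds A (rank x d_in) and B (d_out x rank) for each adapted matrix."""
    per_block = sum(rank * (p.d_in + p.d_out) for p in projections)
    return per_block * n_blocks


def weight_memory_gb(n_params, bits_per_param):
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


adapter_params = lora_trainable_params(ATTENTION, RANK, N_BLOCKS)
print(f"trainable adapter parameters: {adapter_params:,}")
print(f"share of base model: {adapter_params / BASE_PARAMS:.4%}")
print(f"base weights in BF16:  {weight_memory_gb(BASE_PARAMS, 16):.0f} GB")
print(f"base weights in 4-bit: {weight_memory_gb(BASE_PARAMS, 4):.0f} GB")
```

Under these assumptions the adapters hold about 65.5 million trainable parameters, well under 0.1% of the base model, while 4-bit storage cuts the frozen weights to a quarter of their BF16 size.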

Training infrastructure

The training process used a cluster of 8 NVIDIA H100 GPUs and included:

- Mixed precision training (BF16)

- Gradient checkpointing for memory optimization

- DeepSpeed ZeRO Stage 3 for distributed training
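As an illustration of how such a setup is usually expressed, the sketch below builds a minimal DeepSpeed-style configuration that turns on BF16 and ZeRO Stage 3. The batch size and accumulation values are placeholders chosen for the example, and `build_deepspeed_config` is a hypothetical helper; the article does not report the researchers' actual configuration. Gradient checkpointing does not appear here because it is typically switched on in the model or training framework rather than in this file.

```python
import json

NUM_GPUS = 8  # matches the 8 GPUs mentioned in the article


def build_deepspeed_config(micro_batch_size: int, grad_accum_steps: int) -> dict:
    """Return a minimal DeepSpeed config dict with BF16 and ZeRO Stage 3 enabled."""
    return {
        "bf16": {"enabled": True},
        "zero_optimization": {"stage": 3},
        "train_micro_batch_size_per_gpu": micro_batch_size,
        "gradient_accumulation_steps": grad_accum_steps,
    }


# Placeholder values, not taken from the study.
config = build_deepspeed_config(micro_batch_size=1, grad_accum_steps=16)

# Effective global batch = GPUs x per-GPU micro-batch x accumulation steps.
effective_batch = (
    NUM_GPUS
    * config["train_micro_batch_size_per_gpu"]
    * config["gradient_accumulation_steps"]
)

print(json.dumps(config, indent=2))
print(f"effective global batch size: {effective_batch}")
```

The printed JSON can be saved to a file and passed to a DeepSpeed launch; ZeRO Stage 3 then shards parameters, gradients and optimizer states across the GPUs instead of replicating them on each one.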

Performance results

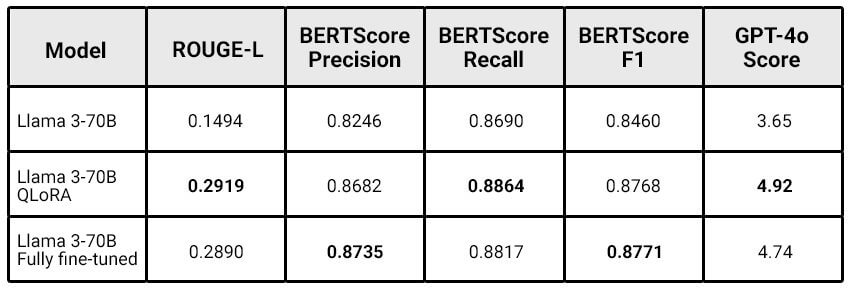

RadiologyLlama-70B considerably outperformed its base model (Llama 3-70B).

QLoRA proved very effective, delivering results comparable to full fine-tuning at lower computational cost. The researchers noted that the larger the model, the greater the benefit QLoRA can provide.

Limitations

The study acknowledges certain limitations:

- No direct comparison with previous models such as Radiology-Llama2.

- The latest Llama 3.1 versions were not used.

- The model can still produce “hallucinations”, which makes it unsuitable for fully autonomous report generation.

Future directions

The research team plans to:

- Train the model on Llama 3.1-70B and explore versions with 405B parameters.

- Refine data preprocessing using language models.

- Develop tools to detect “hallucinations” in generated reports.

- Extend evaluation metrics to include clinically relevant criteria.

Conclusion

RadiologyLlama-70B represents a significant advance in the application of AI to radiology. Although it is not ready for fully autonomous use, the model shows great potential to improve radiologists' workflows by providing more accurate and relevant results. The study highlights the potential of approaches like QLoRA for training specialized models for medical applications, opening the way to further innovation in healthcare AI.

For more details, see the full study on arXiv.