In this tutorial, we show how to combine ScrapeGraph's powerful scraping tools with Gemini AI to automate the collection, parsing, and analysis of competitor information. Using ScrapeGraph's SmartScraperTool and MarkdownifyTool, users can extract detailed information about product offerings, pricing strategies, technology stacks, and market presence directly from competitors' websites. The tutorial then uses Gemini's advanced language model to synthesize these disparate data points into structured, actionable intelligence. Throughout the process, ScrapeGraph keeps the raw extraction accurate and scalable, allowing analysts to focus on strategic interpretation rather than manual data collection.

```python
%pip install --quiet -U langchain-scrapegraph langchain-google-genai pandas matplotlib seaborn
```

We quietly install (or upgrade to) the latest versions of the essential libraries: langchain-scrapegraph for advanced web scraping and langchain-google-genai for Gemini integration, along with the data-analysis tools pandas, Matplotlib, and Seaborn, so that your environment is ready for the competitive-intelligence workflow.
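Because everything that follows depends on these packages, it can help to confirm what actually got installed before running anything. The sketch below uses only the standard library's `importlib.metadata`; the helper name `installed_versions` is illustrative and not part of any of these libraries.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the %pip install line above
PACKAGES = [
    "langchain-scrapegraph",
    "langchain-google-genai",
    "pandas",
    "matplotlib",
    "seaborn",
]


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for pkg, ver in installed_versions(PACKAGES).items():
        print(f"{pkg}: {ver or 'NOT INSTALLED'}")
```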

```python
import getpass
import os
import json
import pandas as pd
from typing import List, Dict, Any
from datetime import datetime
import matplotlib.pyplot as plt
import seaborn as sns
```

We import the essential Python libraries for a secure, data-driven pipeline: getpass and os handle passwords and environment variables, json handles data serialization, and pandas provides robust DataFrame operations. The typing module supplies type hints for clearer code, while datetime records timestamps. Finally, matplotlib.pyplot and seaborn provide tools for creating insightful visualizations.

```python
# Prompt for each key only if it is not already set in the environment
if not os.environ.get("SGAI_API_KEY"):
    os.environ["SGAI_API_KEY"] = getpass.getpass("ScrapeGraph AI API key:\n")

if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Google API key for Gemini:\n")
```

We check whether the SGAI_API_KEY and GOOGLE_API_KEY environment variables are already set; if not, the script securely prompts the user for their ScrapeGraph and Google (Gemini) API keys via getpass and stores them in the environment for subsequent authenticated requests.

```python
from langchain_scrapegraph.tools import (
    SmartScraperTool,
    SearchScraperTool,
    MarkdownifyTool,
    GetCreditsTool,
)
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnableConfig, chain
from langchain_core.output_parsers import JsonOutputParser

smartscraper = SmartScraperTool()
searchscraper = SearchScraperTool()
markdownify = MarkdownifyTool()
credits = GetCreditsTool()

llm = ChatGoogleGenerativeAI(
    model="gemini-1.5-flash",
    temperature=0.1,
    convert_system_message_to_human=True
)
```

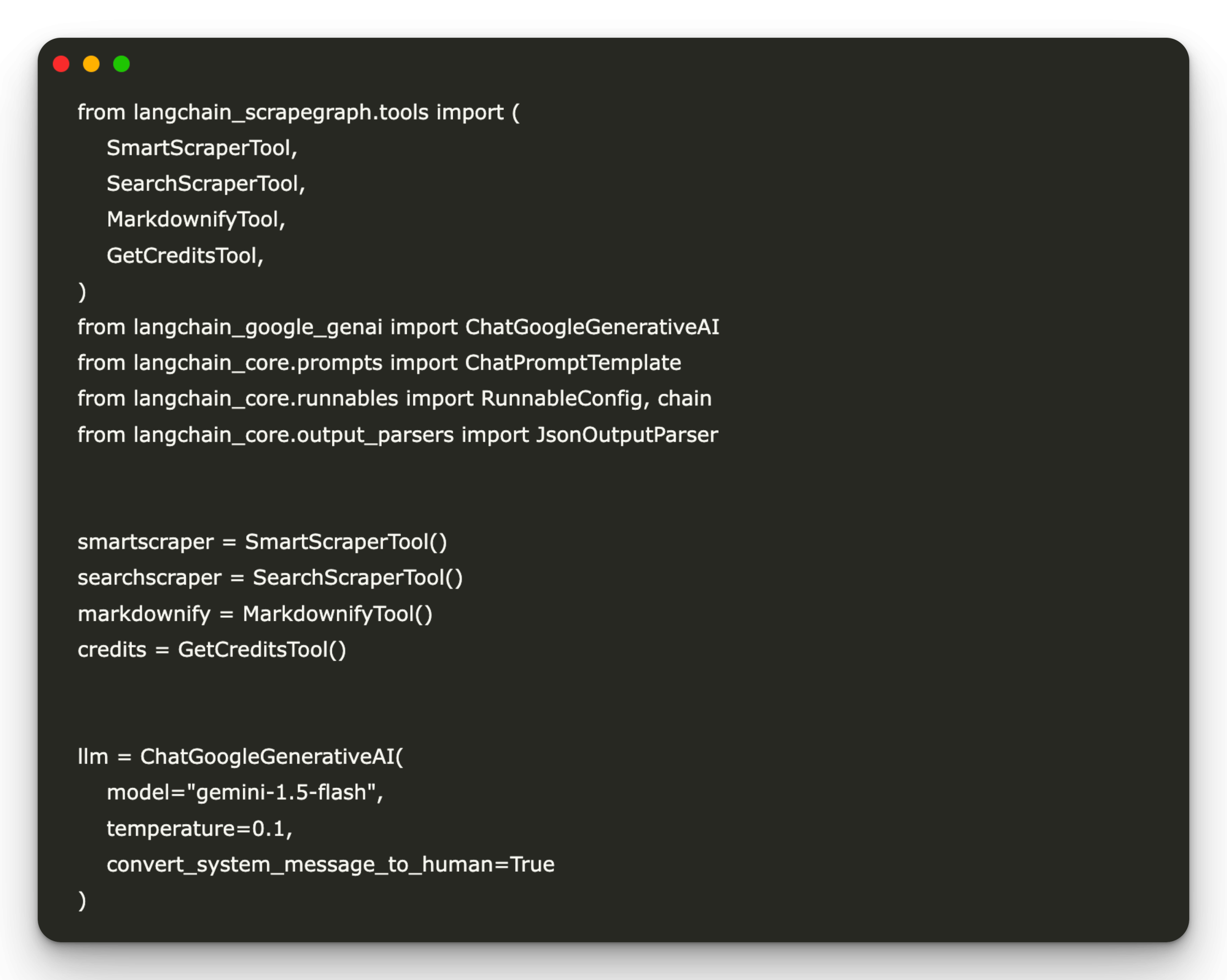

Here, we import and instantiate the ScrapeGraph tools SmartScraperTool, SearchScraperTool, MarkdownifyTool, and GetCreditsTool to extract and process web data, then configure ChatGoogleGenerativeAI with the gemini-1.5-flash model, a low temperature (0.1) for more deterministic output, and convert_system_message_to_human=True so that system messages are converted into human messages. We also import ChatPromptTemplate, RunnableConfig, chain, and JsonOutputParser from langchain_core to structure the prompts and parse the model's output.
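Later in the tutorial, the analysis step asks Gemini for JSON and falls back to raw text when JsonOutputParser cannot parse the reply. If you want to salvage JSON from such replies yourself, a stdlib-only sketch like the one below could work. `extract_json` is a hypothetical helper, not a LangChain API, and it assumes the model wraps its JSON either in a Markdown code fence or in a single outermost pair of braces.

```python
import json
import re
from typing import Any, Optional

FENCE = "`" * 3  # a Markdown code fence, built at runtime to keep this listing readable
# Captures the body of a fenced block, with or without a "json" language tag
_FENCED_BODY = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def extract_json(text: str) -> Optional[Any]:
    """Best-effort JSON recovery from LLM output; returns None if nothing parses."""
    candidates = [m.group(1) for m in _FENCED_BODY.finditer(text)]
    candidates.append(text)  # the whole reply may already be valid JSON
    for candidate in candidates:
        try:
            return json.loads(candidate.strip())
        except json.JSONDecodeError:
            continue
    # Last resort: the span from the first "{" to the last "}"
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        try:
            return json.loads(text[start:end + 1])
        except json.JSONDecodeError:
            pass
    return None
```

The brace-span fallback is deliberately crude: it works for a single JSON object surrounded by chatter, but not for replies that contain several separate objects.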

```python
class CompetitiveAnalyzer:
    def __init__(self):
        self.results = []
        self.analysis_timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")

    def scrape_competitor_data(self, url: str, company_name: str = None) -> Dict[str, Any]:
        """Scrape comprehensive data from a competitor website"""
        extraction_prompt = """
        Extract the following information from this website:
        1. Company name and tagline
        2. Main products/services offered
        3. Pricing information (if available)
        4. Target audience/market
        5. Key features and benefits highlighted
        6. Technology stack mentioned
        7. Contact information
        8. Social media presence
        9. Recent news or announcements
        10. Team size indicators
        11. Funding information (if mentioned)
        12. Customer testimonials or case studies
        13. Partnership information
        14. Geographic presence/markets served
        Return the information in a structured JSON format with clear categorization.
        If information is not available, mark as 'Not Available'.
        """
        try:
            # SmartScraper performs prompt-driven structured extraction
            result = smartscraper.invoke({
                "user_prompt": extraction_prompt,
                "website_url": url,
            })
            # Markdownify converts the page to Markdown; only its length is recorded
            markdown_content = markdownify.invoke({"website_url": url})
            competitor_data = {
                "company_name": company_name or "Unknown",
                "url": url,
                "scraped_data": result,
                "markdown_length": len(markdown_content),
                "analysis_date": self.analysis_timestamp,
                "success": True,
                "error": None,
            }
            return competitor_data
        except Exception as e:
            # Record the failure so one bad site does not abort the whole batch
            return {
                "company_name": company_name or "Unknown",
                "url": url,
                "scraped_data": None,
                "error": str(e),
                "success": False,
                "analysis_date": self.analysis_timestamp,
            }

    def analyze_competitor_landscape(self, competitors: List[Dict[str, str]]) -> Dict[str, Any]:
        """Analyze multiple competitors and generate insights"""
        print(f"🔍 Starting competitive analysis for {len(competitors)} companies...")
        for i, competitor in enumerate(competitors, 1):
            print(f"📊 Analyzing {competitor['name']} ({i}/{len(competitors)})...")
            data = self.scrape_competitor_data(competitor["url"], competitor["name"])
            self.results.append(data)

        analysis_prompt = ChatPromptTemplate.from_messages([
            ("system", """
            You are a senior business analyst specializing in competitive intelligence.
            Analyze the scraped competitor data and provide comprehensive insights including:
            1. Market positioning analysis
            2. Pricing strategy comparison
            3. Feature gap analysis
            4. Target audience overlap
            5. Technology differentiation
            6. Market opportunities
            7. Competitive threats
            8. Strategic recommendations
            Provide actionable insights in JSON format with clear categories and recommendations.
            """),
            ("human", "Analyze this competitive data: {competitor_data}"),
        ])

        # Only successful scrapes are passed to the model
        clean_data = []
        for result in self.results:
            if result["success"]:
                clean_data.append({
                    "company": result["company_name"],
                    "url": result["url"],
                    "data": result["scraped_data"],
                })

        # default=str keeps json.dumps from failing on non-serializable values
        payload = {"competitor_data": json.dumps(clean_data, indent=2, default=str)}
        analysis_chain = analysis_prompt | llm | JsonOutputParser()
        try:
            competitive_analysis = analysis_chain.invoke(payload)
        except Exception:
            # Fallback: if the reply is not valid JSON, keep the raw text instead
            analysis_chain_text = analysis_prompt | llm
            competitive_analysis = analysis_chain_text.invoke(payload).content

        return {
            "analysis": competitive_analysis,
            "raw_data": self.results,
            "summary_stats": self.generate_summary_stats(),
        }

    def generate_summary_stats(self) -> Dict[str, Any]:
        """Generate summary statistics from the analysis"""
        successful_scrapes = sum(1 for r in self.results if r["success"])
        failed_scrapes = len(self.results) - successful_scrapes
        return {
            "total_companies_analyzed": len(self.results),
            "successful_scrapes": successful_scrapes,
            "failed_scrapes": failed_scrapes,
            "success_rate": f"{(successful_scrapes / len(self.results) * 100):.1f}%" if self.results else "0%",
            "analysis_timestamp": self.analysis_timestamp,
        }

    def export_results(self, filename: str = None):
        """Export results to JSON and CSV files"""
        if not filename:
            filename = f"competitive_analysis_{datetime.now().strftime('%Y%m%d_%H%M%S')}"
        with open(f"{filename}.json", "w") as f:
            json.dump({
                "results": self.results,
                "summary": self.generate_summary_stats(),
            }, f, indent=2, default=str)

        # The CSV holds one summary row per successfully scraped company
        df_data = []
        for result in self.results:
            if result["success"]:
                df_data.append({
                    "Company": result["company_name"],
                    "URL": result["url"],
                    "Success": result["success"],
                    "Data_Length": len(str(result["scraped_data"])) if result["scraped_data"] else 0,
                    "Analysis_Date": result["analysis_date"],
                })
        if df_data:
            df = pd.DataFrame(df_data)
            df.to_csv(f"{filename}.csv", index=False)
        print(f"✅ Results exported to {filename}.json and {filename}.csv")
```

The CompetitiveAnalyzer class orchestrates end-to-end competitor research: it scrapes detailed company information with ScrapeGraph tools, compiles and cleans the results, then uses Gemini AI to generate structured competitive insights. It also tracks success rates and timestamps, and provides a utility method that exports both the raw and the summarized data to JSON and CSV for downstream reporting and analysis.
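Because generate_summary_stats() only does bookkeeping, its logic can be exercised without spending any API credits. The standalone function below is a sketch that mirrors that method on fake, hand-written result dictionaries shaped like the ones scrape_competitor_data() returns; `summarize_results` is an illustrative name, not part of the tutorial's class.

```python
from typing import Any, Dict, List


def summarize_results(results: List[Dict[str, Any]], timestamp: str) -> Dict[str, Any]:
    """Standalone mirror of CompetitiveAnalyzer.generate_summary_stats()."""
    successful = sum(1 for r in results if r.get("success"))
    total = len(results)
    return {
        "total_companies_analyzed": total,
        "successful_scrapes": successful,
        "failed_scrapes": total - successful,
        # Guard against division by zero when nothing has been scraped yet
        "success_rate": f"{successful / total * 100:.1f}%" if total else "0%",
        "analysis_timestamp": timestamp,
    }


# Fake results shaped like scrape_competitor_data() output; no API calls needed
fake_results = [
    {"company_name": "A", "success": True},
    {"company_name": "B", "success": False},
    {"company_name": "C", "success": True},
]
stats = summarize_results(fake_results, "2024-01-01 00:00:00")
print(stats["success_rate"])  # 66.7%
```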

```python
def run_ai_saas_analysis():
    """Run a comprehensive analysis of AI/SaaS competitors"""
    analyzer = CompetitiveAnalyzer()
    ai_saas_competitors = [
        {"name": "OpenAI", "url": "https://openai.com"},
        {"name": "Anthropic", "url": "https://anthropic.com"},
        {"name": "Hugging Face", "url": "https://huggingface.co"},
        {"name": "Cohere", "url": "https://cohere.ai"},
        {"name": "Scale AI", "url": "https://scale.com"},
    ]
    results = analyzer.analyze_competitor_landscape(ai_saas_competitors)

    print("\n" + "=" * 80)
    print("🎯 COMPETITIVE ANALYSIS RESULTS")
    print("=" * 80)

    print("\n📊 Summary Statistics:")
    stats = results["summary_stats"]
    for key, value in stats.items():
        print(f"  {key.replace('_', ' ').title()}: {value}")

    print("\n🔍 Strategic Analysis:")
    if isinstance(results["analysis"], dict):
        for section, content in results["analysis"].items():
            print(f"\n  {section.replace('_', ' ').title()}:")
            if isinstance(content, list):
                for item in content:
                    print(f"    • {item}")
            else:
                print(f"    {content}")
    else:
        # Plain-text fallback when the model's reply could not be parsed as JSON
        print(results["analysis"])

    analyzer.export_results("ai_saas_competitive_analysis")
    return results
```

The function above launches the competitive analysis by instantiating CompetitiveAnalyzer and defining the main AI/SaaS players to assess. It then runs the complete scraping-and-analysis workflow, prints formatted summary statistics and strategic findings, and finally exports the detailed results to JSON and CSV for later use.
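The printing logic in run_ai_saas_analysis() handles two cases: a parsed JSON dict or a plain-text fallback. Pulling it into a pure function, as in this hypothetical `format_analysis` helper, makes it easy to test or reuse (for example, to write the report to a file instead of stdout). It assumes, as the tutorial's code does, that each section's value is either a list of items or a single value.

```python
from typing import Any, List


def format_analysis(analysis: Any) -> List[str]:
    """Render the analysis as printable lines, mirroring run_ai_saas_analysis()."""
    if not isinstance(analysis, dict):
        # Fallback path: plain text (or any non-dict) is shown as-is
        return [str(analysis)]
    lines = []
    for section, content in analysis.items():
        lines.append(f"{section.replace('_', ' ').title()}:")
        if isinstance(content, list):
            lines.extend(f"  • {item}" for item in content)
        else:
            lines.append(f"  {content}")
    return lines


# Example: print("\n".join(format_analysis(results["analysis"])))
```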

```python
def run_ecommerce_analysis():
    """Analyze e-commerce platform competitors"""
    analyzer = CompetitiveAnalyzer()
    ecommerce_competitors = [
        {"name": "Shopify", "url": "https://shopify.com"},
        {"name": "WooCommerce", "url": "https://woocommerce.com"},
        {"name": "BigCommerce", "url": "https://bigcommerce.com"},
        {"name": "Magento", "url": "https://magento.com"},
    ]
    results = analyzer.analyze_competitor_landscape(ecommerce_competitors)
    analyzer.export_results("ecommerce_competitive_analysis")
    return results
```

The function above sets up a CompetitiveAnalyzer to assess the major e-commerce platforms: it scrapes each site's details, generates strategic insights, and exports the results to JSON and CSV files named "ecommerce_competitive_analysis".
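Since every URL in a competitor list costs scraping credits, it may be worth sanity-checking the list before calling analyze_competitor_landscape(). The sketch below is a hypothetical pre-flight check using only `urllib.parse`; it flags missing names, URLs without an http(s) scheme, and exact duplicate URLs, but it does not check that the sites are actually reachable.

```python
from typing import Dict, List
from urllib.parse import urlparse


def validate_competitors(competitors: List[Dict[str, str]]) -> List[str]:
    """Return a list of problems; an empty list means the input looks usable."""
    problems = []
    seen_urls = set()
    for i, comp in enumerate(competitors):
        name = comp.get("name", "").strip()
        url = comp.get("url", "").strip()
        if not name:
            problems.append(f"entry {i}: missing 'name'")
        parsed = urlparse(url)
        # Require an absolute http(s) URL with a host part
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"entry {i}: invalid url {url!r}")
        elif url in seen_urls:
            problems.append(f"entry {i}: duplicate url {url!r}")
        seen_urls.add(url)
    return problems
```

Calling it first and aborting when the returned list is non-empty keeps a typo in one entry from silently turning into a failed scrape.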

```python
@chain
def social_media_monitoring_chain(company_urls: List[str], config: RunnableConfig):
    """Monitor social media presence and engagement strategies of competitors"""
    social_media_prompt = ChatPromptTemplate.from_messages([
        ("system", """
        You are a social media strategist. Analyze the social media presence and strategies
        of these companies. Focus on:
        1. Platform presence (LinkedIn, Twitter, Instagram, etc.)
        2. Content strategy patterns
        3. Engagement tactics
        4. Community building approaches
        5. Brand voice and messaging
        6. Posting frequency and timing
        Provide actionable insights for improving social media strategy.
        """),
        ("human", "Analyze social media data for: {urls}"),
    ])

    social_data = []
    for url in company_urls:
        try:
            result = smartscraper.invoke({
                "user_prompt": "Extract all social media links, community engagement features, and social proof elements",
                "website_url": url,
            })
            social_data.append({"url": url, "social_data": result})
        except Exception as e:
            # Keep going; the error is recorded alongside the URL
            social_data.append({"url": url, "error": str(e)})

    # Named social_chain so it does not shadow the imported @chain decorator
    social_chain = social_media_prompt | llm
    analysis = social_chain.invoke({"urls": json.dumps(social_data, indent=2, default=str)}, config=config)
    return {
        "social_analysis": analysis,
        "raw_social_data": social_data,
    }
```

Here, this chained function defines a pipeline that gathers and analyzes competitors' social-media footprints: it uses ScrapeGraph's SmartScraperTool to extract social-media links and engagement elements, then feeds that data to Gemini with a prompt focused on platform presence, content strategy, and community tactics. Finally, it returns both the raw scraped data and the AI-generated social-media insights in a single structured output. Because of the @chain decorator, the function is a LangChain runnable and is called with .invoke(), for example social_media_monitoring_chain.invoke(["https://openai.com"]).
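SmartScraper's social-media extraction is model-driven, so a deterministic cross-check can be useful. The sketch below scans text (for instance, Markdown produced by MarkdownifyTool) for links to well-known social platforms. `find_social_links` and the `SOCIAL_DOMAINS` table are illustrative assumptions, and a regex like this will miss links that are only rendered by JavaScript or hidden behind redirects.

```python
import re
from typing import Dict, List

# Hypothetical platform table; extend or edit as needed
SOCIAL_DOMAINS = {
    "linkedin.com": "LinkedIn",
    "twitter.com": "Twitter/X",
    "x.com": "Twitter/X",
    "instagram.com": "Instagram",
    "youtube.com": "YouTube",
    "github.com": "GitHub",
    "facebook.com": "Facebook",
}

# Scheme, optional "www.", host (group 1), then an optional path that stops at
# whitespace, ")", quotes, or ">" so Markdown and HTML links are cut cleanly
_URL_RE = re.compile(r"https?://(?:www\.)?([a-zA-Z0-9.-]+)(/[^\s)\"'>]*)?")


def find_social_links(text: str) -> Dict[str, List[str]]:
    """Group social-media URLs found in text by platform, without duplicates."""
    found: Dict[str, List[str]] = {}
    for match in _URL_RE.finditer(text):
        host = match.group(1).lower()
        for domain, platform in SOCIAL_DOMAINS.items():
            # Match the domain itself or any subdomain of it (e.g. uk.linkedin.com)
            if host == domain or host.endswith("." + domain):
                url = match.group(0)
                links = found.setdefault(platform, [])
                if url not in links:
                    links.append(url)
                break
    return found
```

Comparing this output with the SmartScraper result for the same site is a cheap way to spot links the model missed or invented.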

```python
def check_credits():
    """Check available credits"""
    try:
        credits_info = credits.invoke({})
        print(f"💳 Available Credits: {credits_info}")
        return credits_info
    except Exception as e:
        print(f"⚠️ Could not check credits: {e}")
        return None
```

The function above calls GetCreditsTool to retrieve and display your remaining ScrapeGraph AI API credits, printing the result (or a warning if the check fails), and returns the credit information (or None on error).
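check_credits() only prints the balance. If you want the script to stop before a run it cannot afford, a guard along these lines is one option. It is a sketch under two assumptions you should verify against your own output: that the credit information arrives as a dict with a `remaining_credits` key, and that you know roughly how many credits each site consumes (in this tutorial, each site triggers one SmartScraper and one Markdownify call). The function name `has_enough_credits` is hypothetical.

```python
from collections.abc import Mapping
from typing import Any


def has_enough_credits(credits_info: Any, num_sites: int, credits_per_site: int) -> bool:
    """Return True if the reported balance covers the planned run.

    ASSUMPTION: credits_info is a mapping with a 'remaining_credits' key.
    Adjust the key and credits_per_site to what your account actually reports.
    """
    if not isinstance(credits_info, Mapping):
        return False  # unknown format, or None after a failed check: be cautious
    remaining = credits_info.get("remaining_credits")
    if not isinstance(remaining, (int, float)):
        return False
    return remaining >= num_sites * credits_per_site
```

Failing closed (returning False on an unrecognized format) is a deliberate choice here: it is cheaper to re-run after fixing the key name than to discover mid-run that credits ran out.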

```python
if __name__ == "__main__":
    print("🚀 Advanced Competitive Analysis Tool with Gemini AI")
    print("=" * 60)
    check_credits()

    print("\n🤖 Running AI/SaaS Competitive Analysis...")
    ai_results = run_ai_saas_analysis()

    run_additional = input("\n❓ Run e-commerce analysis as well? (y/n): ").lower().strip()
    if run_additional == "y":
        print("\n🛒 Running E-commerce Platform Analysis...")
        ecom_results = run_ecommerce_analysis()

    print("\n✨ Analysis complete! Check the exported files for detailed results.")
```

Finally, this last block serves as the script's entry point: it prints a header, checks the remaining ScrapeGraph API credits, runs the AI/SaaS competitor analysis (and, optionally, the e-commerce analysis), and then reports that all results have been exported.
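The input() prompt in the entry point blocks when the script runs unattended, for example in a scheduled job. One alternative is to decide via command-line flags with the standard library's argparse; the `--ecommerce` and `--skip-credits` options below are hypothetical names chosen for illustration.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse command-line flags; argv=None means 'read sys.argv'."""
    parser = argparse.ArgumentParser(
        description="Competitive analysis with ScrapeGraph and Gemini"
    )
    parser.add_argument("--ecommerce", action="store_true",
                        help="also run the e-commerce platform analysis")
    parser.add_argument("--skip-credits", action="store_true",
                        help="do not query the ScrapeGraph credit balance first")
    return parser.parse_args(argv)
```

In the entry point you would then call `args = parse_args()` and replace the input() check with `if args.ecommerce:`. Inside a Jupyter notebook, pass an explicit list such as `parse_args([])`, because `sys.argv` there contains the kernel's own arguments.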

In conclusion, integrating ScrapeGraph's scraping capabilities with Gemini AI turns a traditionally time-consuming competitive-intelligence workflow into an efficient, repeatable pipeline. ScrapeGraph handles the heavy lifting of retrieving and normalizing web information, while Gemini's language understanding turns that raw data into high-level strategic recommendations. As a result, companies can quickly assess market positioning, identify feature gaps, and uncover emerging opportunities with minimal manual intervention. By automating these steps, users gain speed and consistency, as well as the flexibility to extend their analysis to new competitors or markets as needed.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.