The video generation fueled by AI improves at a breathtaking pace. In a short time, we went from fuzzy clips and incoherent to videos generated with amazing realism. However, for all this progress, a critical capacity has been missing: Control and modifications

Although the generation of a beautiful video is one thing, the capacity of professional and realization to modify He – to change the lighting from day to night, exchange the material from an object from wood to metal, or insert a new element into the scene – has remained a great problem largely unresolved. This gap was the key barrier preventing AI from becoming a truly fundamental tool for filmmakers, designers and creators.

Until the introduction of Broadcasting!!

In a new revolutionary article, researchers from Nvidia, the University of Toronto, the Vector Institute and the University of Illinois Urbana-Champaign have revealed a framework that directly challenges this challenge. Diffusionerender represents a revolutionary jump forward, going beyond the simple generation to offer a unified solution to understand and manipulate 3D scenes in a single video. It actually fills the gap between generation and publishing, unlocking the real creative potential of the content focused on AI.

The old way against the new way: a paradigm shift

For decades, photorealism has been anchored in PBR, a methodology that meticulously simulates the flow of light. Although it produces amazing results, it is a fragile system. The PBR depends critically on the part of a perfect digital plan of a scene – take 3D geometry, detailed hardware textures and precise lighting cards. The process of capturing this real world plan, known as reverse renderingis notoriously difficult and subject to errors. Even small imperfections in these data can cause catastrophic failures in the final rendering, a key to a key strangulation which has the use of limited PBR outside controlled studio environments.

Previous neural rendering techniques and nerves, although revolutionary to create static views, have struck a wall with regard to assembly. They “cook” the lighting and the materials in the scene, making the post-capture changes almost impossible.

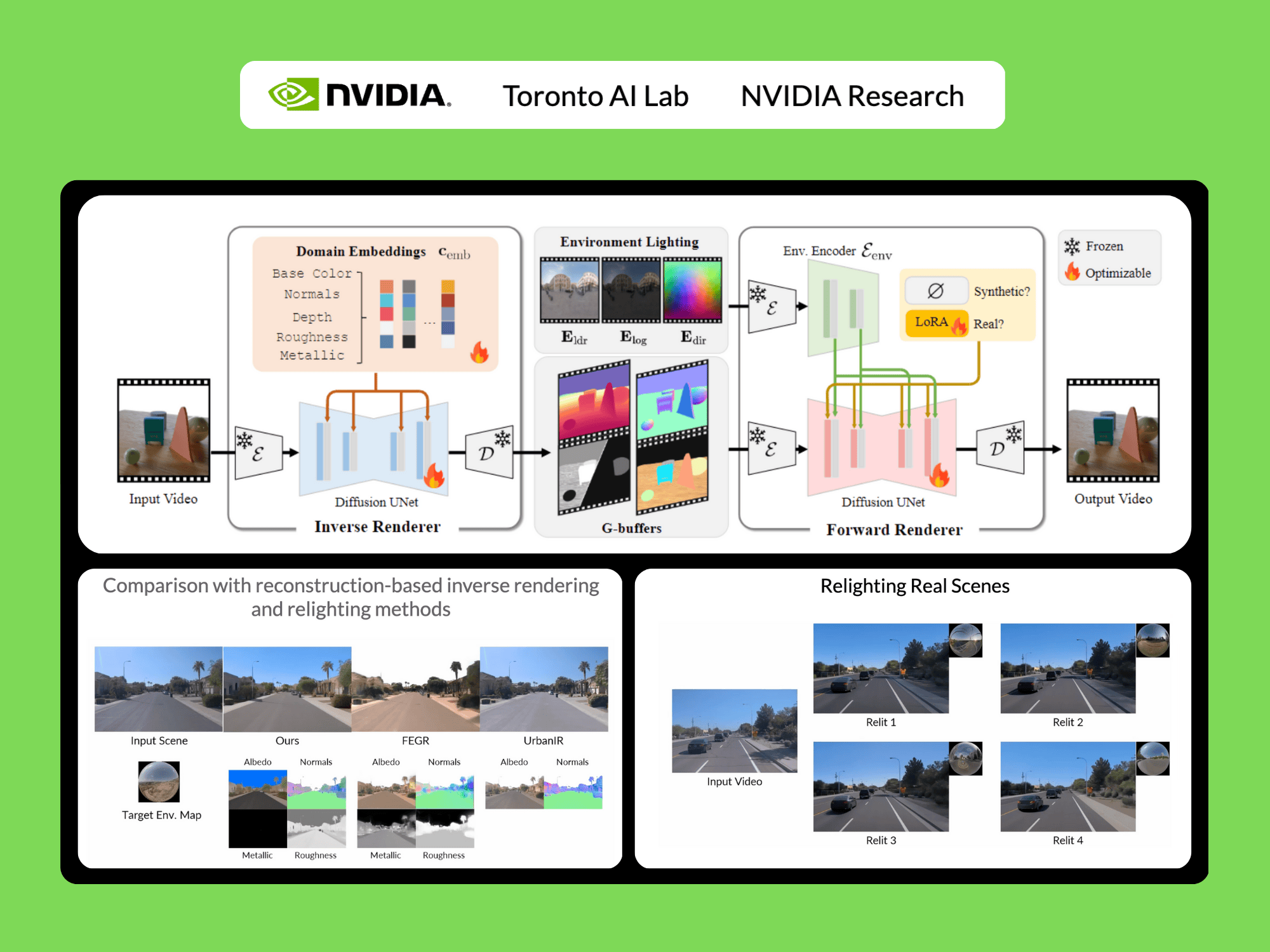

Broadcasting Treats the “what” (the properties of the scene) and the “how” (the rendering) in a unified setting built on the same powerful video diffusion architecture which underlies models as a stable video broadcast.

This method uses two neural rendering to process the video:

- Neurral reverse rendering: This model acts as a stage detective. He analyzes an input RGB video and intelligently estimates the intrinsic properties, generating essential data stamps (G-Buffers) which describe the geometry of the scene (normal, depth) and the materials (color, roughness, metallic) at the level of the pixel. Each attribute is generated in a dedicated pass to allow a high quality generation.

- Rendered before neural: This model works like the artist. He takes the G-Buffers of the opposite rendering, combines them with any desired lighting (an environmental card) and synthesizes a photorealist video. Above all, it was formed to be robust, capable of producing complex and complex light transport effects such as sweet shadows and inter-reflexions even when the input g-duals of the reverse rendering are imperfect or “noisy”.

This self-corrective synergy is the heart of the breakthrough. The system is designed for the disorder of the real world, where perfect data is a myth.

Secret sauce: a new data strategy to fill the reality gap

An intelligent model is nothing without intelligent data. Researchers behind Broadcasting Designed an ingenious data strategy with two -components to teach their models of perfect physics and imperfect reality.

- A massive synthetic universe: First of all, they built a high -quality wide -quality synthetic data set of 150,000 videos. Using thousands of 3D objects, PBR materials and HDR light cards, they created complex scenes and returned them with a perfect path traced engine. This gave the opposite rendering model an impeccable “manual” to learn, providing it with perfect data to the court to the earth.

- Automatically marginating the real world: The team noted that the opposite rendering, trained only on synthetic data, was surprisingly good to generalize the real videos. They triggered it on a massive data set of 10,510 real world videos (DL3DV10K). The model automatically generated G-Buffer labels for these real images. This created a colossal data set of 150,000 real scenes samples with corresponding intrinsic property cards – although imperfect.

By co-training the front rendering on perfect synthetic data and the real world of automatic marking, the model has learned to fill the critical “domain gap”. He learned the rules of the synthetic world and the appearance of the real world. To manage inevitable inaccuracies in automatic marking data, the team incorporated a LORA module (low -ranking adaptation), an intelligent technique that allows the model to adapt to the most noisy real data without compromising the knowledge acquired from the virgin synthetic whole.

Peak performance

The results speak for themselves. In rigorous head-to-face comparisons with classic and neuronal cutting-edge methods, Broadcasting is constantly out of all the tasks evaluated by a wide margin:

- Rendering before: During the generation of images from g and lighting puffs, Broadcasting Significantly, other neural methods, in particular in complex multi-objects, where inter-reflexions and realistic shadows are essential. Neuronal rendering has considerably surpassed other methods.

- Reverse rendering: THE model It turned out to be greater than the estimation of the intrinsic properties of a scene from a video, reaching higher precision on the Albédo, the material and the normal estimate than all the basic lines. The use of a video model (compared to a single image model) has proven to be particularly effective, respectively reducing the errors of metallic prediction and roughness of 41% and 20%, because it exploits movement to better understand the dependent effects of sight.

- Reaux: In the ultimate pipeline test, Broadcasting Produces quantitatively and qualitatively higher reduction results compared to the main methods such as Dilightnet and Neural Gaffer, generating more precise specular reflections and high -fidelity lighting.

What you can do with Broadcasting: Powerful edition!

This research unlocks a series of practical and powerful publishing applications that work from a single everyday video. The workflow is simple: the model first makes an opposite rendering to understand the scene, the user modifies the properties and the model then makes a rendering before to create a new photorealist video.

- Dynamic reaucoupe: Change the time of day, exchange studio lights for a sunset or completely change the atmosphere of a scene by simply providing a new environmental card. The framework realistically redesigned the video with all the corresponding shadows and reflections.

- Edition of intuitive equipment: Do you want to see what this leather chair in Chrome would look like? Or publish a metallic statue in rough stone? Users can directly modify G -Buffers materials – adjusting roughness, metal properties and colors – and the model will make changes in a photorealistic way.

- Insertion of seamless objects: Place new virtual objects in a real world scene. By adding the properties of the new object to the G-Buffers of the scene, the front rendering can synthesize a final video where the object is naturally integrated, by throwing realistic shadows and by picking up precise reflections of its environment.

A new base for graphics

Broadcasting represents a final breakthrough. By holistically resolving the opposite and forward rendering in a single robust framework and data based, it demolishes the long -standing barriers of the traditional PBR. He democratizes the photorealistic rendering, the moving in the exclusive field of VFX experts with powerful equipment to a more accessible tool for creators, designers and developers AR / VR.

In a recent update, the authors still improve the start and the re-evaluation by taking advantage of Nvidia Cosmos and improved data storage.

This demonstrates a promising scaling trend: as the underlying video diffusion model becomes more powerful, the quality of output improves, which gives sharper and more precise results.

These improvements make technology even more convincing.

The new model is published under Apache 2.0 and the Open Nvidia model license and is Available here

Sources:

Thank you to the NVIDIA team for leadership / opinion resources for this article. The NVIDIA team supported and sponsored this content / article.