Introduction: What is context engineering?

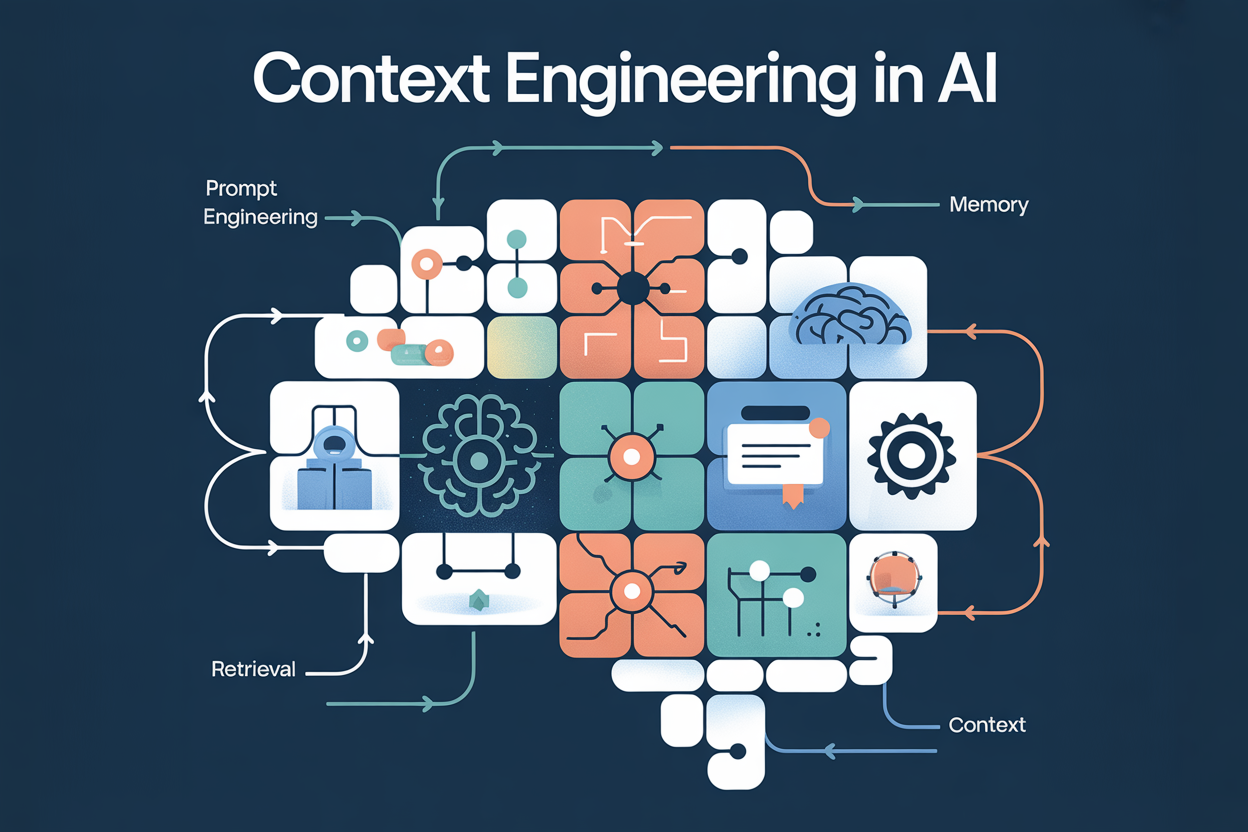

Context engineering is the discipline of designing, organizing, and manipulating the context fed into large language models (LLMs) to optimize their performance. Rather than adjusting the model's weights or architecture, context engineering focuses on the input: the prompts, system instructions, retrieved knowledge, formatting, and even the order in which information appears.

Context engineering is not about writing better prompts. It's about building systems that deliver the right context, exactly when it's needed.

Imagine an AI assistant asked to write a performance review.

→ Bad context: It sees only the instruction. The result is vague, generic feedback that lacks insight.

→ Rich context: It sees the instruction plus the employee's goals, past reviews, project outcomes, peer feedback, and manager notes. The result? A nuanced, data-backed review that feels informed and personalized, because it is.
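To make the contrast concrete, here is a minimal sketch of assembling the "rich" version of that context as labeled sections. The `build_review_context` helper and the sample employee data are hypothetical; a real system would pull these fields from HR or project-tracking tools.

```python
def build_review_context(instruction: str, sources: dict[str, list[str]]) -> str:
    """Join the task instruction with labeled sections of supporting context."""
    sections = [f"## Task\n{instruction}"]
    for label, items in sources.items():
        if not items:  # skip empty sources so they don't waste tokens
            continue
        bullet_list = "\n".join(f"- {item}" for item in items)
        sections.append(f"## {label}\n{bullet_list}")
    return "\n\n".join(sections)


# Hypothetical employee data; in practice this would come from internal systems.
sources = {
    "Objectives": ["Ship the billing API v2", "Mentor two junior engineers"],
    "Past reviews": ["2023: strong delivery, needs more written documentation"],
    "Project outcomes": ["Billing API v2 launched on schedule"],
    "Peer feedback": ["Very helpful in code reviews"],
    "Manager notes": [],
}

bad_context = build_review_context("Write a performance review.", {})
rich_context = build_review_context("Write a performance review.", sources)
print(rich_context)
```

The "bad" context is just the task section; the rich one adds every non-empty source under its own heading, so the model can tell goals apart from peer comments.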

This emerging practice is gaining ground because of the growing reliance on prompt-driven models such as GPT-4, Claude, and Mistral. The performance of these models often depends less on their size and more on the quality of the context they receive. In this sense, context engineering is the equivalent of prompt programming for the era of intelligent agents and retrieval-augmented generation (RAG).

Why do we need context engineering?

- Token efficiency: With context windows expanding but still bounded (for example, 128K tokens in GPT-4-Turbo), efficient context management becomes crucial. Redundant or poorly structured context wastes precious tokens.

- Precision and relevance: LLMs are sensitive to noise. The more targeted and logically arranged the prompt, the higher the likelihood of an accurate output.

- Retrieval-augmented generation (RAG): In RAG systems, external data is retrieved in real time. Context engineering helps decide what to retrieve, how to chunk it, and how to present it.

- Agentic workflows: When you use tools like LangChain or OpenAgents, autonomous agents rely on context to maintain memory, goals, and tool usage. Bad context leads to planning failures or hallucinations.

- Domain-specific adaptation: Fine-tuning is expensive. Structuring better prompts or building retrieval pipelines lets models perform well on specialized tasks in zero-shot or few-shot settings.
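In a RAG pipeline, one concrete context-engineering decision is how to split source documents into chunks before they are embedded and retrieved. The sketch below uses fixed-size chunks with overlap, so a sentence cut at a boundary still appears whole in one of the two neighboring chunks. It counts words rather than model tokens; that simplification, and the default sizes, are assumptions chosen for illustration.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of `chunk_size` words, consecutive chunks sharing `overlap` words.

    Words are used as a rough stand-in for tokens (an assumption for this sketch).
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_text(sample, chunk_size=4, overlap=1))
```

Larger chunks carry more surrounding context per retrieval hit but consume more of the window; smaller chunks are more precise but may lose the context a passage needs to make sense.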

Key techniques in context engineering

Several methodologies and practices shape the field:

1. System prompt optimization

The system prompt is foundational. It defines the LLM's behavior and style. Techniques include:

- Role assignment (for example, “You are a data science tutor”)

- Instructional framing (for example, “Think step by step”)

- Constraint imposition (for example, “Output JSON only”)
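The three techniques can be combined into a single system prompt. This is a minimal sketch: the `build_system_prompt` and `is_valid_json_reply` helpers are hypothetical, and the `{"role": ..., "content": ...}` message list follows the chat-message convention many LLM APIs use, without calling any particular vendor's client.

```python
import json


def build_system_prompt(role: str, framing: str, constraints: list[str]) -> str:
    """Combine role assignment, instructional framing, and constraints into one system prompt."""
    lines = [f"You are {role}.", framing]
    lines += [f"Constraint: {c}" for c in constraints]
    return "\n".join(lines)


system_prompt = build_system_prompt(
    role="a data science tutor",
    framing="Think step by step before giving your final answer.",
    constraints=["Output JSON only.", 'Use the keys "explanation" and "answer".'],
)

# Chat-style message list; the role/content shape is a common convention, not a specific SDK.
messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": "What does a p-value measure?"},
]


def is_valid_json_reply(reply: str) -> bool:
    """Check whether a model reply satisfies the 'JSON only' constraint."""
    try:
        json.loads(reply)
        return True
    except json.JSONDecodeError:
        return False
```

Stating a constraint in the prompt does not guarantee the model obeys it, which is why the sketch pairs the "JSON only" instruction with a check on the reply.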

2. Prompt composition and chaining

LangChain popularized the use of prompt templates and chains to modularize prompting. Chaining makes it possible to split a task across several prompts, for example decomposing a question, retrieving evidence, and then answering.
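The decompose, retrieve, answer chain can be sketched in plain Python. This illustrates the pattern only; it is not LangChain's API. The `llm` and `retrieve` callables are placeholders for a real model call and a real retriever, and `stub_llm` / `stub_retrieve` are hypothetical stand-ins so the chain runs offline.

```python
from typing import Callable

# Prompt templates; `{...}` fields are filled from the previous step's output.
DECOMPOSE = "Break this question into sub-questions, one per line:\n{question}"
ANSWER = "Using only this evidence:\n{evidence}\n\nAnswer the question: {question}"


def run_chain(question: str, llm: Callable[[str], str],
              retrieve: Callable[[str], list[str]]) -> str:
    """Decompose the question, retrieve evidence per sub-question, then answer."""
    decomposition = llm(DECOMPOSE.format(question=question))
    sub_questions = [q.strip() for q in decomposition.splitlines() if q.strip()]
    evidence = [doc for q in sub_questions for doc in retrieve(q)]
    return llm(ANSWER.format(evidence="\n".join(evidence), question=question))


def stub_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call, returning canned output.
    if prompt.startswith("Break this question"):
        return "sub-question A\nsub-question B"
    return "ANSWER:\n" + prompt  # echo the final prompt so we can inspect it


def stub_retrieve(query: str) -> list[str]:
    # Hypothetical stand-in for a retriever.
    return [f"evidence for {query}"]


result = run_chain("Compare A and B", stub_llm, stub_retrieve)
print(result)
```

Each step sees only what it needs: the decomposition prompt never sees evidence, and the final prompt sees the gathered evidence but not the intermediate decomposition text.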

3. Context compression

With limited context windows, one can:

- Use summarization models to compress earlier conversation

- Embed and cluster similar content to remove redundancy

- Apply structured formats (such as tables) instead of verbose prose
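Redundancy removal can be sketched as greedy near-duplicate filtering: keep a chunk only if it is not too similar to anything already kept. As an assumption for a self-contained example, a bag-of-words vector stands in for a real embedding model, and the 0.8 threshold is arbitrary.

```python
import math
from collections import Counter


def bow_vector(text: str) -> Counter:
    """Bag-of-words term counts: a crude stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def deduplicate(chunks: list[str], threshold: float = 0.8) -> list[str]:
    """Keep a chunk only if its similarity to every chunk kept so far is below `threshold`."""
    kept, kept_vecs = [], []
    for chunk in chunks:
        vec = bow_vector(chunk)
        if all(cosine(vec, v) < threshold for v in kept_vecs):
            kept.append(chunk)
            kept_vecs.append(vec)
    return kept


chunks = [
    "The API rate limit is 100 requests per minute",
    "the api rate limit is 100 requests per minute.",  # near-duplicate
    "Refunds are processed within 5 days",
]
print(deduplicate(chunks))
```

The second chunk shares 8 of its 9 words with the first (similarity about 0.89), so it is dropped; the unrelated refund chunk survives.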

4. Dynamic retrieval and routing

RAG pipelines (like those in LlamaIndex and LangChain) fetch documents from vector stores based on user intent. Advanced setups include:

- Query rephrasing or expansion before retrieval

- Multi-vector routing to choose different sources or retrievers

- Recour
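Query expansion and routing can be sketched with simple lookup tables. Both `SYNONYMS` and `ROUTES`, and the source names in them, are hypothetical; production systems often ask an LLM to rewrite the query and use a classifier or embeddings to pick the retriever. Keyword overlap is used here only to keep the example self-contained.

```python
# Hypothetical synonym table for query expansion.
SYNONYMS = {"refund": ["reimbursement", "money back"], "bug": ["error", "defect"]}

# Hypothetical routing rules: source name -> keywords that signal the user's intent.
ROUTES = {
    "billing_docs": {"refund", "invoice", "payment", "reimbursement"},
    "engineering_wiki": {"bug", "error", "stack", "deploy"},
}


def expand_query(query: str) -> list[str]:
    """Return the original query plus variants with known synonyms swapped in (whole words only)."""
    words = query.lower().split()
    variants = [query]
    for term, alternatives in SYNONYMS.items():
        if term in words:
            variants += [" ".join(alt if w == term else w for w in words) for alt in alternatives]
    return variants


def route(query: str, default: str = "general_kb") -> str:
    """Pick the source whose keywords overlap most with the query; fall back to `default`."""
    words = set(query.lower().split())
    best, best_score = default, 0
    for source, keywords in ROUTES.items():
        score = len(words & keywords)
        if score > best_score:
            best, best_score = source, score
    return best


print(expand_query("How do I get a refund"))
print(route("How do I get a refund"))
```

Each expanded variant can be sent to the routed retriever, widening recall without changing which source is consulted.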

5. Memory engineering

Short-term memory (what is in the prompt) and long-term memory (retrievable history) need to be aligned. Techniques include:

- Context replay (injecting relevant past interactions)

- Memory summarization

- Intent-aware memory selection
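The sketch below pairs a short-term buffer (kept verbatim in the prompt) with a long-term store of evicted turns, and shows summarization and replay. The `ConversationMemory` class is hypothetical. Its `summary` method is a placeholder that just joins and truncates text where a real system would call an LLM, and word overlap stands in for genuine intent matching during selection.

```python
from collections import deque


class ConversationMemory:
    """Short-term buffer of recent turns plus a long-term store of older turns."""

    def __init__(self, max_recent: int = 4):
        self.recent = deque(maxlen=max_recent)  # short-term: stays in the prompt verbatim
        self.long_term: list[str] = []          # long-term: searched on demand

    def add(self, turn: str) -> None:
        if len(self.recent) == self.recent.maxlen:
            self.long_term.append(self.recent[0])  # oldest turn is about to be evicted
        self.recent.append(turn)

    def summary(self, max_chars: int = 200) -> str:
        # Placeholder summarizer: a real system would ask an LLM to summarize long_term.
        return " | ".join(self.long_term)[:max_chars]

    def replay(self, query: str, k: int = 2) -> list[str]:
        """Select up to k past turns sharing the most words with the current query."""
        q = set(query.lower().split())
        scored = [(len(q & set(t.lower().split())), t) for t in self.long_term]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [t for _, t in scored[:k]]

    def build_context(self, query: str) -> str:
        parts = []
        if self.long_term:
            parts.append("Summary of earlier conversation: " + self.summary())
        parts += ["Relevant past turn: " + t for t in self.replay(query)]
        parts += list(self.recent)
        parts.append("User: " + query)
        return "\n".join(parts)


mem = ConversationMemory(max_recent=2)
for turn in ["user prefers python", "user works on billing", "asked about refunds"]:
    mem.add(turn)
print(mem.build_context("write python code"))
```

Once the two-turn buffer overflows, "user prefers python" moves to long-term memory, and it is replayed into the prompt because the new query mentions Python.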

6. Tool context

In agent-based systems, tool use is context-aware:

- Tool description formatting

- Tool history summarization

- Observations passed between steps
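The three practices fit naturally into one per-step prompt builder. In this sketch the `Tool` and `ToolContext` classes are hypothetical, and the one-line tool format and truncation limits are illustrative choices rather than a format any agent framework requires.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    description: str
    parameters: dict[str, str]  # parameter name -> short description


@dataclass
class ToolContext:
    tools: list[Tool]
    history: list[tuple[str, str]] = field(default_factory=list)  # (tool name, observation)

    def describe_tools(self) -> str:
        """Tool description formatting: one compact line per tool."""
        lines = []
        for t in self.tools:
            params = ", ".join(f"{k}: {v}" for k, v in t.parameters.items())
            lines.append(f"- {t.name}({params}): {t.description}")
        return "Available tools:\n" + "\n".join(lines)

    def record(self, tool_name: str, observation: str) -> None:
        self.history.append((tool_name, observation))

    def history_summary(self, last_n: int = 3, max_chars: int = 80) -> str:
        """Tool history summarization: keep only recent observations, truncated."""
        recent = self.history[-last_n:]
        return "\n".join(f"[{name}] {obs[:max_chars]}" for name, obs in recent)

    def step_prompt(self, goal: str) -> str:
        """Prompt for the next step: tools, then observations passed from earlier steps, then the goal."""
        return (f"{self.describe_tools()}\n\nRecent observations:\n"
                f"{self.history_summary()}\n\nGoal: {goal}")


ctx = ToolContext([Tool("search", "Search the web", {"query": "search terms"})])
ctx.record("search", "Found 3 pages about context engineering")
print(ctx.step_prompt("summarize the findings"))
```

Truncating and windowing observations keeps long tool outputs from crowding out the goal and tool descriptions in later steps.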

Context engineering vs. prompt engineering

Although related, context engineering is broader and more system-level. Prompt engineering typically deals with static, handcrafted input strings. Context engineering encompasses building dynamic contexts using embeddings, memory, chaining, and retrieval. As Simon Willison noted, “context engineering is what we do instead of fine-tuning.”

Real-world applications

- Customer support agents: Feeding in ticket summaries, customer profile data, and knowledge-base (KB) documents.

- Code assistants: Injecting repository-specific documentation, previous commits, and function usage.

- Legal document search: Context-aware querying with case history and precedents.

- Education: Personalized tutoring agents that remember learners' behavior and goals.

Challenges in context engineering

Despite its promise, several pain points remain:

- Latency: Retrieval and formatting steps introduce overhead.

- Ranking quality: Poor retrieval hurts downstream generation.

- Token budgeting: Choosing what to include or exclude is non-trivial.

- Tool interoperability: Mixing tools (LangChain, LlamaIndex, custom retrievers) adds complexity.
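Token budgeting can be made concrete with a greedy selector: sort candidate snippets by relevance and add each one that still fits. Two assumptions here: token counts use a rough four-characters-per-token heuristic (a real pipeline would use the model's own tokenizer), and the relevance scores are taken as given from the retriever.

```python
def approx_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token); use the model's real tokenizer in practice."""
    return max(1, len(text) // 4)


def fit_to_budget(candidates: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily pick the highest-scoring snippets that fit within `budget` tokens.

    `candidates` holds (relevance score, text) pairs, e.g. from a retriever.
    """
    selected, used = [], 0
    for score, text in sorted(candidates, key=lambda c: c[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            selected.append(text)
            used += cost
    return selected


candidates = [(0.9, "a" * 40), (0.5, "b" * 40), (0.7, "c" * 80)]  # 10, 10, 20 tokens
print(fit_to_budget(candidates, budget=30))
```

Greedy selection is simple and fast but not optimal: a high-scoring long snippet can crowd out several shorter ones whose combined relevance is higher. Treating the choice as a knapsack problem is the exact alternative, at extra cost.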

Emerging best practices

- Mix structured (JSON, tables) and unstructured text for better parsing.

- Limit each context injection to a single logical unit (for example, one document or one conversation summary).

- Use metadata (timestamps, authorship) for better sorting and scoring.

- Log, trace, and audit context injections to improve them over time.
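Several of these practices combine in a small injection step: each unit of context carries metadata, units are sorted by timestamp, rendered as structured JSON, and every injection is logged for later auditing. The `ContextUnit` class, its field names, and the newest-first policy are hypothetical choices for illustration.

```python
import json
import logging
from dataclasses import asdict, dataclass
from datetime import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("context_injection")


@dataclass
class ContextUnit:
    """One logical unit of context (a single document or summary) plus metadata."""
    text: str
    source: str
    author: str
    timestamp: str  # ISO 8601, e.g. "2024-05-01T09:30:00"


def inject(units: list[ContextUnit], max_units: int = 3) -> str:
    """Sort units newest-first, keep the top `max_units`, log each one, and render as JSON."""
    newest_first = sorted(units, key=lambda u: datetime.fromisoformat(u.timestamp), reverse=True)
    chosen = newest_first[:max_units]
    for u in chosen:
        # Audit trail: which source, by whom, from when, entered the prompt.
        logger.info("injecting source=%s author=%s timestamp=%s", u.source, u.author, u.timestamp)
    return json.dumps([asdict(u) for u in chosen], indent=2)


units = [
    ContextUnit("old refund policy", "wiki", "alice", "2023-01-01T00:00:00"),
    ContextUnit("new refund policy", "wiki", "bob", "2024-06-01T00:00:00"),
]
print(inject(units, max_units=1))
```

Because every injected unit is logged with its metadata, a bad answer can later be traced back to the exact context that produced it.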

The future of context engineering

Several trends suggest that context engineering will become foundational in LLM pipelines:

- Model-aware context adaptation: Future models may dynamically request the type or format of context they need.

- Self-reflective agents: Agents that audit their own context, revise their memory, and flag hallucination risk.

- Standardization: Just as JSON became a universal data-interchange format, context templates may become standardized across agents and tools.

As Andrej Karpathy alluded to in a recent post, “The context is the new weight update.” Rather than retraining models, we now program them through their context, making context engineering the dominant software interface of the LLM era.

Conclusion

Context engineering is no longer optional; it is central to unlocking the full capabilities of modern language models. As toolkits like LangChain and LlamaIndex mature and agentic workflows proliferate, mastering context construction becomes as important as choosing the model. Whether you are building a retrieval system, a coding agent, or a personalized tutor, how you structure the model's context will increasingly define its intelligence.

Sources:

- https://x.com/tobi/status/1935533422589399127

- https://x.com/karpathy/status/1937902205765607626

- https://blog.langchain.com/the-rise-of-context-engineering/

- https://rlancemartin.github.io/2025/06/23/context_engineering/

- https://www.philschmid.de/context-engineering

- https://blog.langchain.com/context-engineering-for-agents/

- https://www.llamaindex.ai/blog/context-engineering-what-it-is-and-techniques-to-consider


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.