In this tutorial, we will use Riza's secure Python execution as the cornerstone of a powerful, tool-augmented AI agent in Google Colab. Starting with seamless API key management, via Colab Secrets, environment variables, or hidden prompts, we will configure your Riza credentials to enable sandboxed, audit-ready code execution. We will integrate Riza's ExecPython tool into a LangChain agent alongside Google's Gemini generative model, define an AdvancedCallbackHandler that records every tool invocation (including Riza executions) in a timestamped log, and build custom utilities for complex math and in-depth text analysis.

```python
%pip install --upgrade --quiet langchain-community langchain-google-genai rizaio python-dotenv

import os
from typing import Dict, Any, List
from datetime import datetime
import json
import getpass

from google.colab import userdata
```

We install and upgrade the core libraries quietly in Colab: the LangChain community extensions, the Google Gemini integration, Riza's secure execution package (rizaio), and python-dotenv. We then import standard utilities (os, datetime, json), typing annotations, secure input via getpass, and Colab's userdata API so that environment variables and user secrets can be managed transparently.
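The `from google.colab import userdata` import only works inside a Colab runtime. If you want the same notebook to run locally as well, one option is to guard that import. The sketch below is our own addition, not part of the original tutorial, and the helper name `in_colab` is hypothetical.

```python
def in_colab() -> bool:
    """Return True when the google.colab package is importable (i.e. inside Colab)."""
    try:
        import google.colab  # noqa: F401  (only available in Colab runtimes)
    except ImportError:
        return False
    return True


if in_colab():
    from google.colab import userdata
else:
    # Hypothetical fallback: no Colab Secrets, so rely on env vars or getpass
    userdata = None
```

With `userdata` set to `None`, the Colab Secrets step of `setup_api_keys()` raises an exception that the function already catches, so it falls through to environment variables or the hidden prompt.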

```python
def setup_api_keys():
    """Set up API keys using multiple secure methods."""
    # 1) Colab Secrets (🔑 icon in the left panel)
    try:
        os.environ["GOOGLE_API_KEY"] = userdata.get("GOOGLE_API_KEY")
        os.environ["RIZA_API_KEY"] = userdata.get("RIZA_API_KEY")
        print("✅ API keys loaded from Colab secrets")
        return True
    except Exception:
        # Secret missing or access not granted: try the next method
        pass

    # 2) Keys already present in the environment
    if os.getenv("GOOGLE_API_KEY") and os.getenv("RIZA_API_KEY"):
        print("✅ API keys found in environment")
        return True

    # 3) Hidden interactive prompt for any key that is still missing
    try:
        if not os.getenv("GOOGLE_API_KEY"):
            os.environ["GOOGLE_API_KEY"] = getpass.getpass("🔑 Enter your Google Gemini API key: ")
        if not os.getenv("RIZA_API_KEY"):
            os.environ["RIZA_API_KEY"] = getpass.getpass("🔑 Enter your Riza API key: ")
        print("✅ API keys set securely via input")
        return True
    except Exception:
        print("❌ Failed to set API keys")
        return False


if not setup_api_keys():
    print("⚠️ Please set up your API keys using one of these methods:")
    print(" 1. Colab Secrets: Go to 🔑 in left panel, add GOOGLE_API_KEY and RIZA_API_KEY")
    print(" 2. Environment: Set GOOGLE_API_KEY and RIZA_API_KEY before running")
    print(" 3. Manual input: Run the cell and enter keys when prompted")
    # Stop execution here: nothing below works without both keys
    raise SystemExit("API keys are required to continue.")
```

The cell above defines a setup_api_keys() function that securely retrieves your Google Gemini and Riza keys: it first tries to load them from Colab Secrets, then falls back to existing environment variables, and finally prompts you to enter them via hidden input if necessary. If none of these methods succeeds, it prints instructions on how to provide your keys and stops execution.
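The lookup order in setup_api_keys() (Colab Secrets, then environment variables, then a hidden prompt) can be expressed as a small pure function, which makes the precedence easy to test without Colab. This is an illustrative sketch: `resolve_key` and its `secret_lookup`, `env`, and `prompt` parameters are hypothetical names, not part of the tutorial or of any library.

```python
import os
from typing import Callable, Mapping, Optional


def resolve_key(
    name: str,
    secret_lookup: Callable[[str], Optional[str]],
    env: Mapping[str, str] = os.environ,
    prompt: Callable[[str], str] = lambda msg: "",
) -> Optional[str]:
    """Return the first non-empty value for `name`: secrets, then env, then prompt."""
    try:
        value = secret_lookup(name)  # e.g. google.colab.userdata.get inside Colab
        if value:
            return value
    except Exception:
        pass  # secret missing or secrets API unavailable: fall through
    if env.get(name):
        return env[name]
    value = prompt(f"🔑 Enter {name}: ")  # e.g. getpass.getpass
    return value or None


# Usage with stand-ins, no Colab needed
def no_secret(name: str) -> Optional[str]:
    raise KeyError(name)  # simulates a missing Colab secret


print(resolve_key("RIZA_API_KEY", no_secret, env={"RIZA_API_KEY": "env-value"}))
```

Injecting the three sources as parameters keeps the function free of Colab or terminal dependencies, so each fallback branch can be exercised with simple stubs.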

```python
from langchain_community.tools.riza.command import ExecPython
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import HumanMessage, AIMessage
from langchain.memory import ConversationBufferWindowMemory
from langchain.tools import Tool
from langchain.callbacks.base import BaseCallbackHandler
```

We import Riza's ExecPython tool alongside the core LangChain components for building a tool-calling agent: the Gemini LLM wrapper (ChatGoogleGenerativeAI), the agent executor and constructor (AgentExecutor, create_tool_calling_agent), the prompt template (ChatPromptTemplate), the message classes (HumanMessage, AIMessage), the windowed conversation memory (ConversationBufferWindowMemory), the generic Tool wrapper, and the base callback handler used to monitor the agent's actions. Together, these building blocks let you assemble, configure, and trace a memory-enabled, multi-tool AI agent in Colab.

```python
class AdvancedCallbackHandler(BaseCallbackHandler):
    """Enhanced callback handler for detailed logging and metrics."""

    def __init__(self):
        self.execution_log = []
        self.start_time = None
        self.token_count = 0

    def on_agent_action(self, action, **kwargs):
        timestamp = datetime.now().strftime("%H:%M:%S")
        tool_input = str(action.tool_input)
        self.execution_log.append({
            "timestamp": timestamp,
            "action": action.tool,
            # Truncate long inputs (e.g. generated code) to 100 characters
            "input": tool_input[:100] + "..." if len(tool_input) > 100 else tool_input,
        })
        print(f"🔧 [{timestamp}] Using tool: {action.tool}")

    def on_agent_finish(self, finish, **kwargs):
        timestamp = datetime.now().strftime("%H:%M:%S")
        print(f"✅ [{timestamp}] Agent completed successfully")

    def get_execution_summary(self):
        return {
            "total_actions": len(self.execution_log),
            "execution_log": self.execution_log,
        }


class MathTool:
    """Advanced mathematical operations tool."""

    @staticmethod
    def complex_calculation(expression: str) -> str:
        """Evaluate mathematical expressions in a restricted namespace."""
        try:
            import math
            import numpy as np

            # Only these names are visible to eval(); built-ins are removed.
            # This narrows what an expression can do but is not a true sandbox.
            safe_dict = {
                "__builtins__": {},
                "abs": abs, "round": round, "min": min, "max": max,
                "sum": sum, "len": len, "pow": pow,
                "math": math, "np": np,
                "sin": math.sin, "cos": math.cos, "tan": math.tan,
                "log": math.log, "sqrt": math.sqrt, "pi": math.pi, "e": math.e,
            }
            result = eval(expression, safe_dict)
            return f"Result: {result}"
        except Exception as e:
            return f"Math Error: {str(e)}"


class TextAnalyzer:
    """Advanced text analysis tool."""

    @staticmethod
    def analyze_text(text: str) -> str:
        """Perform comprehensive text analysis."""
        try:
            # Case-insensitive letter frequencies
            char_freq = {}
            for char in text.lower():
                if char.isalpha():
                    char_freq[char] = char_freq.get(char, 0) + 1

            words = text.split()
            word_count = len(words)
            avg_word_length = sum(len(word) for word in words) / max(word_count, 1)

            specific_chars = {}
            for char in set(text.lower()):
                if char.isalpha():
                    specific_chars[char] = text.lower().count(char)

            analysis = {
                "total_characters": len(text),
                "total_words": word_count,
                "average_word_length": round(avg_word_length, 2),
                # Ten most frequent letters, highest count first
                "character_frequencies": dict(
                    sorted(char_freq.items(), key=lambda x: x[1], reverse=True)[:10]
                ),
                "specific_character_counts": specific_chars,
            }
            return json.dumps(analysis, indent=2)
        except Exception as e:
            return f"Analysis Error: {str(e)}"
```

The cell above brings together three essential parts: an AdvancedCallbackHandler that records each tool invocation in a timestamped log and can summarize the total number of actions taken; a MathTool class that evaluates mathematical expressions with eval() in a restricted namespace (only whitelisted names, no built-ins), which limits but does not fully prevent unsafe operations; and a TextAnalyzer class that computes detailed text statistics, such as character frequencies, word count, and average word length, and returns them as formatted JSON. Unlike the Riza tool, both of these classes run locally in the Colab runtime.
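Because eval() with an empty `__builtins__` can still be escaped through attribute access on ordinary objects, a stricter option is to parse the expression and allow only arithmetic nodes plus a fixed set of names. The sketch below swaps in that AST-whitelist technique; it is not what MathTool does, and `safe_eval` along with its allowed names are our own illustrative choices.

```python
import ast
import math
import operator

# Allowed operators mapped to their implementations
_BIN_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod, ast.Pow: operator.pow,  # note: huge exponents can still be slow
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

# Whitelisted constants and callables, mirroring part of MathTool's namespace
_CONSTS = {"pi": math.pi, "e": math.e}
_FUNCS = {
    "sin": math.sin, "cos": math.cos, "tan": math.tan,
    "log": math.log, "sqrt": math.sqrt,
    "abs": abs, "round": round, "min": min, "max": max, "pow": pow,
}


def safe_eval(expression: str) -> float:
    """Evaluate an arithmetic expression, rejecting any node outside the whitelist."""

    def _eval(node: ast.AST):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        if isinstance(node, ast.Name) and node.id in _CONSTS:
            return _CONSTS[node.id]
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in _FUNCS and not node.keywords):
            return _FUNCS[node.func.id](*[_eval(arg) for arg in node.args])
        # Attribute access, imports, comprehensions, etc. all end up here
        raise ValueError(f"Disallowed expression: {type(node).__name__}")

    return _eval(ast.parse(expression, mode="eval"))


print(safe_eval("sqrt(16) + 2"))
print(safe_eval("1000 * (1 + 0.05) ** 3"))
```

The trade-off is expressiveness: NumPy calls and arbitrary attribute access are rejected, so for anything beyond plain arithmetic the Riza ExecPython tool remains the better place to run model-generated code.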

```python
def validate_api_keys():
    """Validate API keys before creating agents."""
    try:
        test_llm = ChatGoogleGenerativeAI(model="gemini-1.5-flash", temperature=0)
        test_llm.invoke("test")  # minimal real request: fails fast on a bad Gemini key
        print("✅ Gemini API key validated")

        ExecPython()  # builds the Riza client from RIZA_API_KEY; runs no code yet
        print("✅ Riza tool initialized")
        return True
    except Exception as e:
        print(f"❌ API key validation failed: {str(e)}")
        print("Please check your API keys and try again")
        return False


if not validate_api_keys():
    raise SystemExit("API key validation failed.")

python_tool = ExecPython()

math_tool = Tool(
    name="advanced_math",
    description="Perform complex mathematical calculations and evaluations",
    func=MathTool.complex_calculation,
)

text_analyzer_tool = Tool(
    name="text_analyzer",
    description="Analyze text for character frequencies, word statistics, and specific character counts",
    func=TextAnalyzer.analyze_text,
)

tools = [python_tool, math_tool, text_analyzer_tool]

try:
    llm = ChatGoogleGenerativeAI(
        model="gemini-1.5-flash",
        temperature=0.1,
        max_tokens=2048,
        top_p=0.8,
        top_k=40,
    )
    print("✅ Gemini model initialized successfully")
except Exception as e:
    # Fallback: same model without the custom top_p/top_k sampling settings
    print(f"⚠️ Custom Gemini configuration failed, falling back to defaults: {e}")
    llm = ChatGoogleGenerativeAI(
        model="gemini-1.5-flash",
        temperature=0.1,
        max_tokens=2048,
    )
```

In this cell, we first define and run validate_api_keys() to make sure the Gemini and Riza credentials work: it makes a minimal test call to the Gemini LLM and instantiates the Riza ExecPython tool. Instantiating the tool creates the Riza client but does not run any code, so an invalid Riza key may only surface on the first execution. Execution stops if validation fails. We then instantiate python_tool for secure code execution, wrap our MathTool and TextAnalyzer methods as LangChain tools, and collect all three in the tools list. Finally, we initialize the Gemini model with custom parameters (temperature, max_tokens, top_p, top_k); if that configuration fails, we fall back to a simpler one. Both branches use gemini-1.5-flash here; to try a larger "pro" model first, change the model name in the first call.
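The try/except around the model constructor is a general "first configuration that works" pattern. Note that it only catches errors raised while constructing the client; problems such as an unavailable model usually appear only when the model is first called, which is why the sketch below accepts an optional probe. This is a library-agnostic sketch under those assumptions: `first_working` is a hypothetical helper, and the stand-in factories replace the real Gemini constructors.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple


def first_working(
    factories: Sequence[Tuple[str, Callable[[], Any]]],
    probe: Optional[Callable[[Any], None]] = None,
) -> Tuple[str, Any]:
    """Return (label, object) for the first factory that builds and passes `probe`."""
    errors: List[str] = []
    for label, factory in factories:
        try:
            obj = factory()
            if probe is not None:
                probe(obj)  # e.g. lambda llm: llm.invoke("ping") to force a real call
            return label, obj
        except Exception as exc:  # record the failure and try the next configuration
            errors.append(f"{label}: {exc}")
            print(f"⚠️ {label} failed, trying next option: {exc}")
    raise RuntimeError("All configurations failed:\n" + "\n".join(errors))


# Usage with stand-in factories (in the notebook these would build ChatGoogleGenerativeAI)
def broken_config():
    raise ValueError("model not available")


label, model = first_working([("primary", broken_config), ("fallback", lambda: "flash-config")])
print(label, model)
```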

```python
prompt_template = ChatPromptTemplate.from_messages([
    ("system", """You are an advanced AI assistant with access to powerful tools.

Key capabilities:
- Python code execution for complex computations
- Advanced mathematical operations
- Text analysis and character counting
- Problem decomposition and step-by-step reasoning

Instructions:
1. Always break down complex problems into smaller steps
2. Use the most appropriate tool for each task
3. Verify your results when possible
4. Provide clear explanations of your reasoning
5. For text analysis questions (like counting characters), use the text_analyzer tool first, then verify with Python if needed

Be precise, thorough, and helpful."""),
    # Windowed conversation history supplied by `memory` (memory_key="chat_history")
    ("placeholder", "{chat_history}"),
    ("human", "{input}"),
    ("placeholder", "{agent_scratchpad}"),
])

memory = ConversationBufferWindowMemory(
    k=5,
    return_messages=True,
    memory_key="chat_history",
)

callback_handler = AdvancedCallbackHandler()

agent = create_tool_calling_agent(llm, tools, prompt_template)

agent_executor = AgentExecutor(
    agent=agent,
    tools=tools,
    verbose=True,
    memory=memory,
    callbacks=[callback_handler],
    max_iterations=10,
    # Applies only when max_iterations is hit; tool-calling agents support "force"
    early_stopping_method="force",
)
```

This cell builds the agent's "brain" and workflow. It defines a structured ChatPromptTemplate that tells the model about its tool set and expected reasoning style, sets up a sliding-window conversation memory that keeps the last five exchanges, and instantiates the AdvancedCallbackHandler for real-time logging. It then creates a tool-calling agent by combining the Gemini LLM, the custom tools, and the prompt, and wraps it in an AgentExecutor that runs the reasoning loop for up to ten iterations, feeds the memory into the prompt as context, prints verbose output, and, if the iteration limit is reached, stops with a fixed message instead of looping further.
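To make the k=5 window concrete: as we understand LangChain's implementation, ConversationBufferWindowMemory exposes only the last k human/AI exchanges (that is, 2k messages) to the prompt. The standard-library sketch below mimics that behavior with a bounded deque; `WindowMemory` and `demo_memory` are hypothetical names used for illustration only, not LangChain classes, and are chosen so they do not overwrite the notebook's `memory` variable.

```python
from collections import deque
from typing import Deque, List, Tuple


class WindowMemory:
    """Keep only the most recent k (human, ai) exchanges."""

    def __init__(self, k: int = 5):
        # Each exchange is two messages, so the window holds at most 2k messages
        self.messages: Deque[Tuple[str, str]] = deque(maxlen=2 * k)

    def save_context(self, user_input: str, ai_output: str) -> None:
        self.messages.append(("human", user_input))
        self.messages.append(("ai", ai_output))

    def load(self) -> List[Tuple[str, str]]:
        # Roughly what would be injected under the "chat_history" key
        return list(self.messages)


demo_memory = WindowMemory(k=2)
for i in range(1, 4):
    demo_memory.save_context(f"question {i}", f"answer {i}")
print(demo_memory.load())  # the first exchange has been dropped
```

A bounded window keeps prompt size predictable: older turns silently fall out, so follow-up questions can only rely on the last few exchanges.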

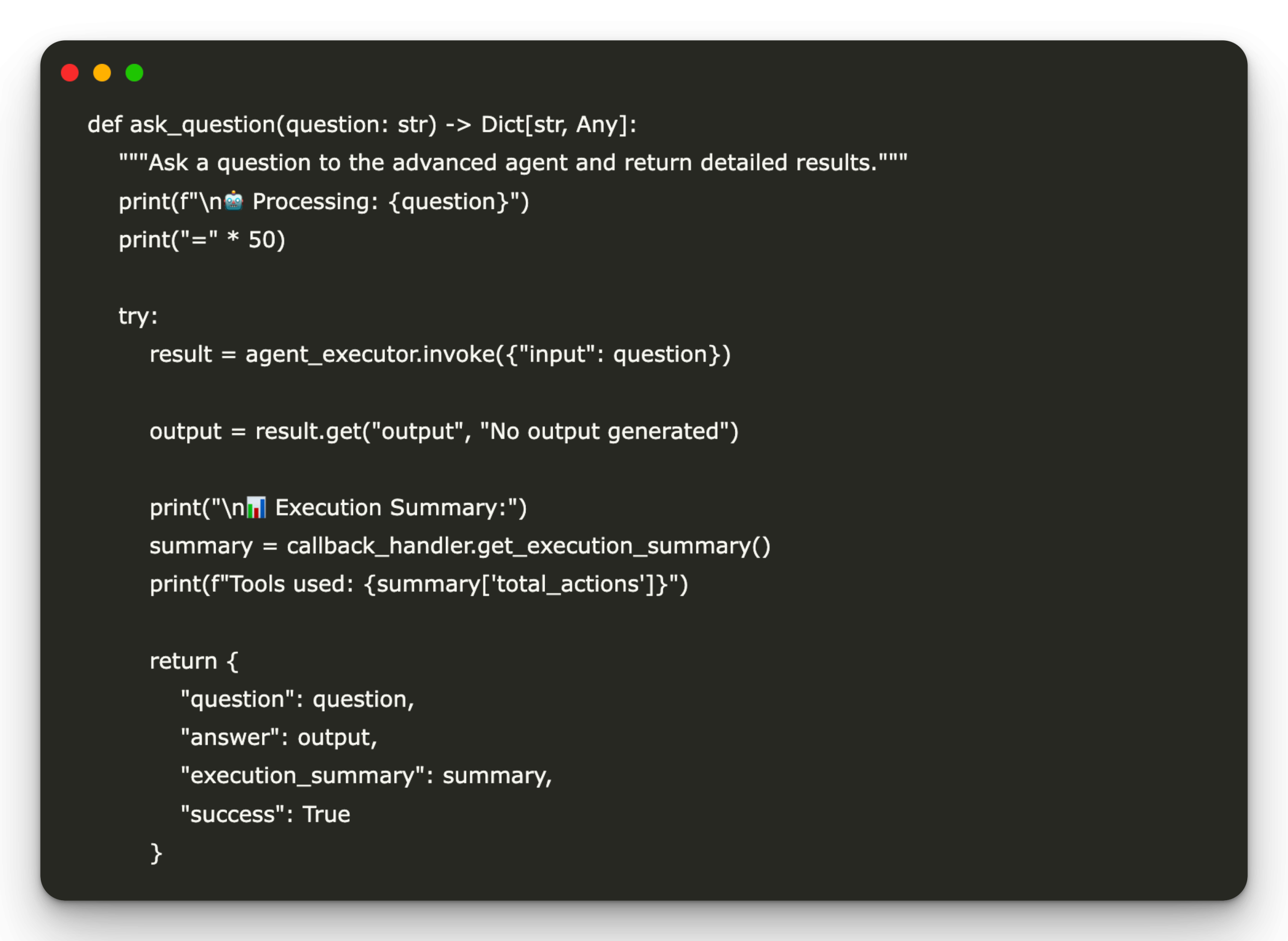

```python
def ask_question(question: str) -> Dict[str, Any]:
    """Ask a question to the advanced agent and return detailed results."""
    print(f"\n🤖 Processing: {question}")
    print("=" * 50)
    # Start a fresh log so the summary reflects only this question's tool calls
    callback_handler.execution_log = []
    try:
        result = agent_executor.invoke({"input": question})
        output = result.get("output", "No output generated")
        print("\n📊 Execution Summary:")
        summary = callback_handler.get_execution_summary()
        print(f"Tools used: {summary['total_actions']}")
        return {
            "question": question,
            "answer": output,
            "execution_summary": summary,
            "success": True,
        }
    except Exception as e:
        print(f"❌ Error: {str(e)}")
        return {
            "question": question,
            "error": str(e),
            "success": False,
        }


test_questions = [
    "How many r's are in strawberry?",
    "Calculate the compound interest on $1000 at 5% for 3 years",
    "Analyze the word frequency in the sentence: 'The quick brown fox jumps over the lazy dog'",
    "What's the fibonacci sequence up to the 10th number?",
]

print("🚀 Advanced Gemini Agent with Riza - Ready!")
print("🔐 API keys configured securely")
print("Testing with sample questions...\n")

results = []
for question in test_questions:
    result = ask_question(question)
    results.append(result)
    print("\n" + "=" * 80 + "\n")

print("📈 FINAL SUMMARY:")
successful = sum(1 for r in results if r["success"])
print(f"Successfully processed: {successful}/{len(results)} questions")
```

Finally, we define a helper function, ask_question(), which sends a user query to the agent executor, prints a header for the question, captures the agent's answer (or error), and prints a brief execution summary showing the number of tool calls. We then provide a list of sample questions covering character counting, compound interest calculation, word frequency analysis, and Fibonacci sequence generation, and iterate through them, invoking the agent on each and collecting the results. After all tests have run, we print a concise "final summary" of how many queries completed without errors, confirming that the Gemini + Riza agent is operational in Colab.
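Because the sample questions have deterministic answers, it helps to compute reference values locally and compare them with the agent's replies, since "success" above only means no exception was raised. The sketch below is our own addition; `reference_answers` is a hypothetical helper, and it assumes annual compounding for the interest question and a Fibonacci sequence starting at 0, both of which the questions leave open. It also makes visible that the word-frequency question asks for something different from what text_analyzer returns (letter frequencies).

```python
from collections import Counter


def reference_answers() -> dict:
    """Compute expected answers for the sample questions (assumptions noted inline)."""
    # 1) Number of 'r' characters in "strawberry"
    r_count = "strawberry".count("r")

    # 2) Compound interest A = P * (1 + r) ** t, assuming annual compounding
    principal, rate, years = 1000, 0.05, 3
    amount = principal * (1 + rate) ** years
    interest = amount - principal

    # 3) Case-insensitive word frequencies (text_analyzer reports letters, not words)
    sentence = "The quick brown fox jumps over the lazy dog"
    word_freq = Counter(sentence.lower().split())

    # 4) First 10 Fibonacci numbers, assuming the sequence starts at 0
    fib = [0, 1]
    while len(fib) < 10:
        fib.append(fib[-1] + fib[-2])

    return {
        "r_count": r_count,
        "amount": amount,
        "interest": interest,
        "word_freq": dict(word_freq),
        "fibonacci": fib,
    }


print(reference_answers())
```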

In conclusion, by centering the architecture on Riza's secure execution environment, we have created an AI agent that generates insightful responses via Gemini while executing arbitrary Python code in a fully sandboxed, supervised context. The Riza ExecPython tool ensures that model-generated code runs in isolation rather than in the Colab runtime, while the local math and text-analysis tools and the callback log keep every step transparent. With LangChain orchestrating tool calls and a memory buffer holding conversational context, we now have a modular framework ready for real tasks such as automated data processing, research prototyping, or educational demos.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity with readers.