Large language models (LLMs) such as GPT-4 are widely regarded as technological marvels, supposedly capable of passing the Turing test. But is this really the case?

ChatGPT has reached great heights in the field of artificial intelligence (AI). It can seem intelligent, fast and impressive. It displays apparent intelligence, holds conversations with people, imitates human communication and has even passed law exams. However, in some cases it still produces completely false information.

So, can ChatGPT pass the Turing test by generating text that is indistinguishable from a human response?

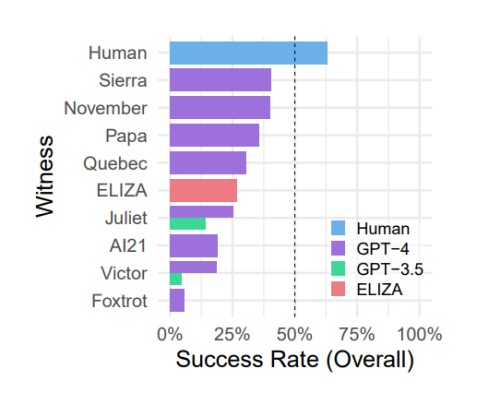

Two researchers from the University of California, San Diego investigated this question and obtained intriguing results. Cameron Jones, who specializes in language, semantics and machine learning, and Benjamin Bergen, a professor of cognitive science, ran an online Turing test. In this test, participants tried to determine whether their conversation partner was an AI or a human. GPT-3.5 or GPT-4 played the role of the AI.

The experiment consisted of an online test in which roughly 650 participants held conversations and tried to identify whether they were talking to a human or an AI. Various configurations of GPT-3.5 and GPT-4 were used throughout the experiment. The main variable the researchers studied was the prompt, which steered the LLM toward a particular topic and conversational style. The prompt defined the nature of the interaction and the agent's persona, and it was varied to see how it affected the outcome.

Each conversation had a time limit of 5 minutes, and each message was limited to 300 characters. In total, 652 participants took part and completed 1,810 "games". After questionable results were filtered out, the number of games was reduced to 1,405.

The results showed that GPT-4 managed to deceive participants 41% of the time, while GPT-3.5 succeeded only 5 to 14% of the time. For comparison, ELIZA, a chatbot from 1966, was judged to be human in 27% of cases. Another interesting finding: human participants convinced other participants that they were not machines only 63% of the time.

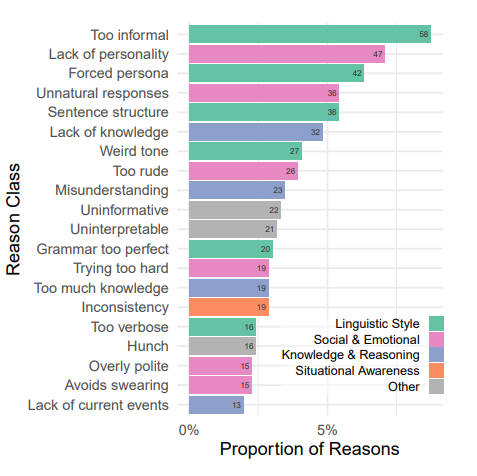

The reasons participants gave for their decisions were particularly interesting. Several factors led them to judge an interlocutor to be an AI. A style that seemed too formal or too informal made them suspicious. Likewise, answers that were too verbose or too short, an odd conversational tone, or flawless grammar became important cues for deciding whether the interlocutor was human or machine. Notably, a lack of knowledge proved to be a stronger giveaway than an excess of it, which suggests that AI models still have room to improve. Participants were also sensitive to generic responses.

The researchers concluded that, based on this experiment, GPT-4 cannot be said to have passed the Turing test. However, a 41% success rate indicates that using AI for deception is becoming increasingly realistic. This is especially relevant in situations where people are less alert to the possibility that they are talking to a machine.

AI models that can imitate human responses could have large-scale social and economic impacts. It will become increasingly important to monitor AI models, identify the factors that lead to deception, and develop strategies to mitigate it. At the same time, the researchers point out that the Turing test remains an important tool for evaluating machine dialogue and for understanding how humans interact with artificial intelligence.

It is remarkable how quickly we have reached a stage where technical systems can compete with humans in communication. Although GPT-4 did not clearly pass this test, its results indicate that we are getting closer to AI that can hold its own against humans in conversation.
