In this tutorial, we will implement a custom Model Context Protocol (MCP) client using Gemini. By the end of this tutorial, you will be able to connect your own AI applications to MCP servers, unlocking powerful new capabilities for your projects.

Gemini API

We will use the Gemini 2.0 Flash model for this tutorial.

To get your Gemini API key, visit the Google Gemini API key page and follow the instructions.

Once you have the key, store it safely – you will need it later.

Node.js

Some MCP servers require Node.js to run. Download the latest version of Node.js from nodejs.org.

- Run the installation program.

- Leave all the default settings and complete the installation.
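Before moving on, you may want to confirm that npx (installed together with Node.js) is reachable, since the client in this tutorial launches the National Park Service server through it. The following is a minimal sketch using only the Python standard library; the helper name npx_version is hypothetical and not part of the tutorial's client.

```python
import shutil
import subprocess
from typing import Optional


def npx_version(executable: str = "npx") -> Optional[str]:
    """Return the version string printed by npx, or None if it is not on PATH."""
    # Locate the executable the same way a shell would
    path = shutil.which(executable)
    if path is None:
        return None
    # "--version" prints a version string and exits without running a package
    result = subprocess.run([path, "--version"], capture_output=True, text=True, check=False)
    return result.stdout.strip() or None
```

Calling npx_version() returns None when npx is not on your PATH, which usually means Node.js is not installed or the terminal was opened before the installation finished.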

National Park Service API

For this tutorial, we will expose the National Park Service MCP server to our client. To use the National Park Service API, you can request an API key by visiting this link and filling out a short form. Once you submit it, the API key will be sent to your email.

Be sure to keep this key accessible – we will use it shortly.

Installing Python libraries

In the command prompt, enter the following command to install the required Python libraries:

pip install mcp python-dotenv google-genai

Creating the mcp.json file

Next, create a file named mcp.json.

This file stores configuration details for the MCP servers your client will connect to.

Once the file has been created, add the following initial content:

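{
  "mcpServers": {
    "nationalparks": {
      "command": "npx",
      "args": ["-y", "mcp-server-nationalparks"],
      "env": {
        "NPS_API_KEY": "<YOUR_NPS_API_KEY>"
      }
    }
  }
}

Replace <YOUR_NPS_API_KEY> with the National Park Service API key you received by email.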
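Because a stray character in mcp.json only surfaces later as a confusing startup error, it can help to check the file's shape first. The sketch below assumes the structure used in this tutorial (an mcpServers object whose entries have command, args, and env); validate_mcp_config is a hypothetical helper, not part of the MCP SDK.

```python
import json
from typing import Any, Dict, List


def validate_mcp_config(raw: str) -> List[str]:
    """Return a list of problems found in an mcp.json document (empty list means it looks OK)."""
    try:
        config: Any = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["'mcpServers' must be a non-empty object"]
    problems: List[str] = []
    for name, cfg in servers.items():
        if not isinstance(cfg, dict):
            problems.append(f"{name}: server entry must be an object")
            continue
        # The command used to launch the server process, e.g. "npx"
        if not isinstance(cfg.get("command"), str):
            problems.append(f"{name}: 'command' must be a string")
        args = cfg.get("args", [])
        if not isinstance(args, list) or not all(isinstance(a, str) for a in args):
            problems.append(f"{name}: 'args' must be a list of strings")
        # Environment variables are passed to the server process as strings
        env: Dict[str, Any] = cfg.get("env", {})
        if not isinstance(env, dict) or not all(isinstance(v, str) for v in env.values()):
            problems.append(f"{name}: 'env' values must be strings")
    return problems
```

For example, validate_mcp_config(open("mcp.json").read()) returns an empty list for a well-formed file. Parentheses instead of square brackets around args, or a key with no value, make the file invalid JSON and are reported as such.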

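Creating the .env file

Create a .env file in the same directory as the mcp.json file and enter the following:

GEMINI_API_KEY=<YOUR_GEMINI_API_KEY>

Replace <YOUR_GEMINI_API_KEY> with the Gemini API key you generated earlier.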
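The client reads the key with os.getenv("GEMINI_API_KEY") after load_dotenv() runs, so a missing key otherwise surfaces only when Gemini rejects the first request. As a hedged sketch, a small hypothetical helper like require_env could fail fast at startup instead:

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value, raising immediately if it is missing or blank."""
    value = os.getenv(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or export it in your shell."
        )
    return value
```

In client.py you might call require_env("GEMINI_API_KEY") right after load_dotenv(); this is an optional addition, not something the tutorial's code does.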

We will now create a client.py file to implement our MCP client. Make sure this file is in the same directory as mcp.json and .env.

Basic client structure

We will first import the necessary libraries and create a basic client class.

import asyncio
import json
import os
from typing import List, Optional
from contextlib import AsyncExitStack
import warnings

from google import genai
from google.genai import types
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client
from dotenv import load_dotenv

load_dotenv()  # load GEMINI_API_KEY from the .env file
warnings.filterwarnings("ignore", category=ResourceWarning)

def clean_schema(schema):
    # Keep only an allow-list of top-level JSON Schema keys before passing the schema to Gemini
    allowed_keys = {"type", "properties", "required", "description", "title", "default", "enum"}
    return {k: v for k, v in schema.items() if k in allowed_keys}

class MCPGeminiAgent:
    def __init__(self):
        self.session: Optional[ClientSession] = None  # MCP session, created in connect()
        self.exit_stack = AsyncExitStack()  # owns the transport and session contexts
        self.genai_client = genai.Client(api_key=os.getenv("GEMINI_API_KEY"))
        self.model = "gemini-2.0-flash"
        self.tools = None
        self.server_params = None
        self.server_name = None

The __init__ method initializes MCPGeminiAgent by setting up an asynchronous exit stack to manage the session, creating the Gemini API client, and preparing placeholders for the model name, the tools, and the server details.

It lays the groundwork for connecting to servers and interacting with the Gemini model.
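Note that clean_schema only filters the top level of a tool's input schema; keys nested inside individual properties pass through unchanged. If a server's nested schemas contain keys that Gemini rejects, a recursive variant may help. The sketch below is a hypothetical alternative (clean_schema_recursive is not part of the tutorial), and it also keeps items on the assumption that array parameters need their element type.

```python
from typing import Any, Dict

# Same allow-list as clean_schema() in client.py, plus "items" (an assumption:
# array parameters usually need it to describe their element type).
ALLOWED_KEYS = {"type", "properties", "required", "description", "title", "default", "enum", "items"}


def clean_schema_recursive(schema: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical variant of clean_schema() that also filters nested schemas."""
    cleaned: Dict[str, Any] = {}
    for key, value in schema.items():
        if key not in ALLOWED_KEYS:
            continue  # drop keys such as "$schema" or "additionalProperties"
        if key == "properties" and isinstance(value, dict):
            # Each property is itself a schema, so clean it the same way
            cleaned[key] = {
                name: clean_schema_recursive(sub) if isinstance(sub, dict) else sub
                for name, sub in value.items()
            }
        elif key == "items" and isinstance(value, dict):
            cleaned[key] = clean_schema_recursive(value)
        else:
            cleaned[key] = value
    return cleaned
```

Whether you need this depends on the schemas your server publishes; for schemas without extra nested keys, both functions return the same result.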

MCP server selection

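    async def select_server(self):
        with open('mcp.json', 'r') as f:
            mcp_config = json.load(f)
        servers = mcp_config['mcpServers']
        server_names = list(servers.keys())
        print("Available MCP servers:")
        for idx, name in enumerate(server_names):
            print(f"  {idx+1}. {name}")
        while True:
            try:
                choice = int(input(f"Please select a server by number (1-{len(server_names)}): "))
                if 1 <= choice <= len(server_names):
                    break
                print("That number is not valid. Please try again.")
            except ValueError:
                print("Please enter a valid number.")
        # Build the stdio launch parameters for the chosen server
        self.server_name = server_names[choice - 1]
        server_cfg = servers[self.server_name]
        self.server_params = StdioServerParameters(
            command=server_cfg['command'],
            args=server_cfg.get('args', []),
            env=server_cfg.get('env'),
        )

This method prompts the user to choose one of the servers listed in mcp.json, then loads and prepares the connection parameters of the selected server for later use.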
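The validation inside the selection loop can be pulled out into a pure function, which makes it testable without typing at a prompt. This is a sketch of one way to do that; parse_server_choice is a hypothetical name, not part of the tutorial's client.

```python
from typing import Optional


def parse_server_choice(raw: str, count: int) -> Optional[int]:
    """Convert a 1-based menu choice into a 0-based index, or None if it is invalid."""
    try:
        choice = int(raw.strip())
    except ValueError:
        return None  # not a number at all
    if 1 <= choice <= count:
        return choice - 1
    return None  # a number, but outside the menu range
```

For example, with three servers listed, parse_server_choice("2", 3) returns 1, the index of the second server name.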

Connection to the MCP server

    async def connect(self):
        await self.select_server()
        # Launch the server process and open stdio streams to it
        self.stdio_transport = await self.exit_stack.enter_async_context(stdio_client(self.server_params))
        self.stdio, self.write = self.stdio_transport
        self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))
        await self.session.initialize()
        print(f"Successfully connected to: {self.server_name}")
        # List available tools for this server
        mcp_tools = await self.session.list_tools()
        print("\nAvailable MCP tools for this server:")
        for tool in mcp_tools.tools:
            print(f"- {tool.name}: {tool.description}")

This method establishes an asynchronous connection to the selected MCP server over the stdio transport. It initializes the MCP session and retrieves the tools the server makes available.
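The agent loop rebuilds Gemini's function declarations from list_tools() on every query. The mapping itself is simple, and the sketch below isolates it as a pure function so it can be tested without a running server. The SimpleNamespace objects stand in for the mcp Tool objects returned by session.list_tools(), and to_function_declarations is a hypothetical name.

```python
from types import SimpleNamespace
from typing import Any, Dict, List

# Same top-level allow-list as clean_schema() in client.py
ALLOWED_KEYS = {"type", "properties", "required", "description", "title", "default", "enum"}


def clean_schema(schema: Dict[str, Any]) -> Dict[str, Any]:
    return {k: v for k, v in schema.items() if k in ALLOWED_KEYS}


def to_function_declarations(tools: List[Any]) -> List[Dict[str, Any]]:
    """Map MCP-style tool objects to Gemini function-declaration dicts."""
    return [
        {
            "name": tool.name,
            # MCP tool descriptions are optional, so fall back to an empty string
            "description": tool.description or "",
            "parameters": clean_schema(getattr(tool, "inputSchema", None) or {}),
        }
        for tool in tools
    ]


# Stand-in for session.list_tools().tools; real entries are mcp Tool objects
fake_tools = [
    SimpleNamespace(
        name="example_tool",
        description="Hypothetical tool used only for this illustration",
        inputSchema={
            "$schema": "http://json-schema.org/draft-07/schema#",
            "type": "object",
            "properties": {"query": {"type": "string"}},
        },
    )
]
declarations = to_function_declarations(fake_tools)
print(declarations)
```

Since an MCP server's tool list normally stays the same during a session, one possible refactor is to build these declarations once in connect() and reuse them; recomputing them on each query, as the tutorial does, is simpler and picks up any tool changes.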

Handling user queries and tool calls

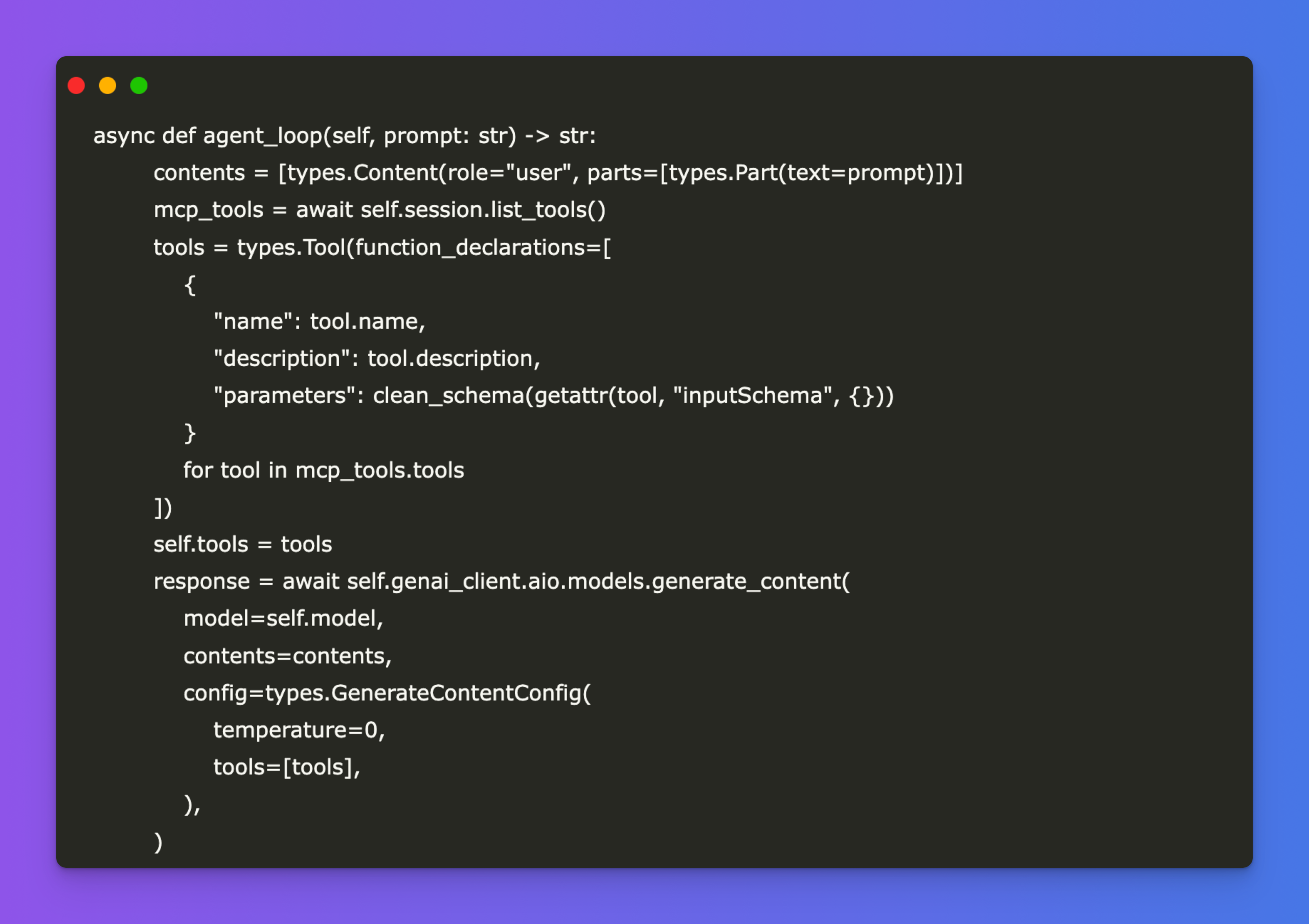

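    async def agent_loop(self, prompt: str) -> types.GenerateContentResponse:
        contents = [types.Content(role="user", parts=[types.Part(text=prompt)])]
        # Convert the server's MCP tools into Gemini function declarations
        mcp_tools = await self.session.list_tools()
        tools = types.Tool(function_declarations=[
            {
                "name": tool.name,
                "description": tool.description,
                "parameters": clean_schema(getattr(tool, "inputSchema", {})),
            }
            for tool in mcp_tools.tools
        ])
        self.tools = tools
        response = await self.genai_client.aio.models.generate_content(
            model=self.model,
            contents=contents,
            config=types.GenerateContentConfig(
                temperature=0,
                tools=[tools],
            ),
        )
        contents.append(response.candidates[0].content)
        turn_count = 0
        max_tool_turns = 5
        while response.function_calls and turn_count < max_tool_turns:
            turn_count += 1
            tool_response_parts: List[types.Part] = []
            # Execute every function call Gemini requested on the MCP server
            for fc in response.function_calls:
                tool_name = fc.name
                args = fc.args or {}
                print(f"Invoking MCP tool '{tool_name}' with arguments: {args}")
                try:
                    tool_result = await self.session.call_tool(tool_name, args)
                    text = "\n".join(
                        c.text for c in tool_result.content if getattr(c, "text", None)
                    )
                    key = "error" if tool_result.isError else "result"
                    tool_response = {key: text}
                except Exception as e:
                    tool_response = {"error": f"Tool execution failed: {type(e).__name__}: {e}"}
                tool_response_parts.append(
                    types.Part.from_function_response(name=tool_name, response=tool_response)
                )
            # Send the tool results back to Gemini and ask for the next step
            contents.append(types.Content(role="user", parts=tool_response_parts))
            response = await self.genai_client.aio.models.generate_content(
                model=self.model,
                contents=contents,
                config=types.GenerateContentConfig(temperature=0, tools=[tools]),
            )
            contents.append(response.candidates[0].content)
        if turn_count >= max_tool_turns and response.function_calls:
            print(f"Stopped after {max_tool_turns} tool calls to avoid infinite loops.")
        print("All tool calls complete. Displaying Gemini's final response.")
        return response

This method sends the user's prompt to Gemini, executes the MCP tools corresponding to any function calls the model returns, and feeds the results back so that Gemini can refine its answer iteratively. It manages multi-turn interactions between Gemini and the server's tools, stopping after max_tool_turns rounds.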
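The control flow of agent_loop is easier to see without the Gemini and MCP types. The following is a simplified, self-contained sketch of the same bounded tool-calling pattern: plain dictionaries replace Gemini responses, and fake_model, always_calls_tool, and fake_tool are hypothetical stand-ins for generate_content and session.call_tool.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

Message = Dict[str, Any]


async def bounded_tool_loop(
    ask_model: Callable[[List[Message]], Awaitable[Message]],
    call_tool: Callable[[str, Dict[str, Any]], Awaitable[Any]],
    prompt: str,
    max_tool_turns: int = 5,
) -> Dict[str, Any]:
    """Ask the model, run any tools it requests, and repeat until it stops or the cap is hit."""
    history: List[Message] = [{"role": "user", "text": prompt}]
    reply = await ask_model(history)
    turns = 0
    while reply.get("function_calls") and turns < max_tool_turns:
        turns += 1
        for call in reply["function_calls"]:
            result = await call_tool(call["name"], call.get("args", {}))
            # Tool output goes back into the history, like the function-response parts
            history.append({"role": "tool", "name": call["name"], "result": result})
        reply = await ask_model(history)
    return {"reply": reply, "turns": turns}


async def fake_model(history: List[Message]) -> Message:
    # Requests one tool call, then answers once a tool result is in the history
    if any(m["role"] == "tool" for m in history):
        return {"text": "Done", "function_calls": []}
    return {"text": "", "function_calls": [{"name": "lookup", "args": {"q": "x"}}]}


async def always_calls_tool(history: List[Message]) -> Message:
    # Misbehaving model that never stops asking for tools
    return {"text": "", "function_calls": [{"name": "lookup", "args": {"q": "x"}}]}


async def fake_tool(name: str, args: Dict[str, Any]) -> str:
    return f"{name}:{args['q']}"


outcome = asyncio.run(bounded_tool_loop(fake_model, fake_tool, "hello"))
capped = asyncio.run(bounded_tool_loop(always_calls_tool, fake_tool, "hello", max_tool_turns=3))
print(outcome["turns"], capped["turns"])
```

The second run shows why the turn cap matters: a model that keeps requesting tools is stopped after max_tool_turns rounds instead of looping forever.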

Interactive chat loop

    async def chat(self):
        print(f"\nMCP-Gemini Assistant is ready and connected to: {self.server_name}")
        print("Enter your question below, or type 'quit' to exit.")
        while True:
            try:
                query = input("\nYour query: ").strip()
                if query.lower() == 'quit':
                    print("Session ended. Goodbye!")
                    break
                print("Processing your request...")
                res = await self.agent_loop(query)
                print("\nGemini's answer:")
                print(res.text)
            except KeyboardInterrupt:
                print("\nSession interrupted. Goodbye!")
                break
            except Exception as e:
                print(f"\nAn error occurred: {str(e)}")

This provides a command-line interface where users can submit queries and receive Gemini's responses until they end the session.
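The chat method calls input() directly, which blocks the event loop while it waits for the user; no other coroutine can run during that time. That is usually acceptable in this simple client, but a hedged alternative is to run the blocking call in a worker thread with asyncio.to_thread (Python 3.9+). read_query is a hypothetical helper, and its reader parameter exists only so the function can be tested without a keyboard.

```python
import asyncio
from typing import Callable, Optional


async def read_query(prompt: str, reader: Callable[[str], str] = input) -> Optional[str]:
    """Read one line without blocking the event loop; return None when the user types 'quit'."""
    # Run the blocking reader (input() by default) in a worker thread
    line = await asyncio.to_thread(reader, prompt)
    query = line.strip()
    if query.lower() == "quit":
        return None
    return query
```

Inside chat, query = await read_query("\nYour query: ") could replace the direct input() call, with a None result ending the loop.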

Cleaning up resources

    async def cleanup(self):
        await self.exit_stack.aclose()

This closes the asynchronous exit stack and gracefully releases all open resources, such as the MCP session and the server connection.
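Cleanup works because AsyncExitStack closes the contexts it manages in reverse order of entry, so the MCP session is shut down before the stdio transport it runs on. The sketch below demonstrates that ordering with two dummy async context managers standing in for stdio_client and ClientSession.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager
from typing import AsyncIterator, List

events: List[str] = []


@asynccontextmanager
async def resource(name: str) -> AsyncIterator[str]:
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")  # runs when the stack unwinds


async def demo() -> None:
    stack = AsyncExitStack()
    await stack.enter_async_context(resource("transport"))  # plays the role of stdio_client(...)
    await stack.enter_async_context(resource("session"))    # plays the role of ClientSession(...)
    await stack.aclose()  # plays the role of MCPGeminiAgent.cleanup()


asyncio.run(demo())
print(events)
```

The recorded events show the session closing first and the transport last.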

Main entry point

async def main():
    agent = MCPGeminiAgent()
    try:
        await agent.connect()
        await agent.chat()
    finally:
        await agent.cleanup()

if __name__ == "__main__":
    import sys
    import os
    try:
        asyncio.run(main())
    except KeyboardInterrupt:
        print("Session interrupted. Goodbye!")
    finally:
        # Silence noisy stderr output during interpreter shutdown
        sys.stderr = open(os.devnull, "w")

This is the main execution logic.

Apart from main(), all the other methods are part of the MCPGeminiAgent class. You can find the complete client.py file here.

Run the following command in the terminal to execute your client:

python client.py

The client will:

- Read the mcp.json file to list the available MCP servers.

- Prompt the user to select one of the listed servers.

- Connect to the selected MCP server using the configuration and environment settings provided.

- Interact with the Gemini model through a series of queries and responses.

- Let users enter prompts, execute tools, and process responses iteratively with the model.

- Provide a command-line interface through which users engage with the system and receive results in real time.

- Ensure resources are properly cleaned up when the session ends, closing connections and releasing memory.

I graduated in Civil Engineering (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in data science, especially neural networks and their applications in various fields.